IEEE Spectrum

-

Why the Art of Invention Is Always Being Reinvented

by Peter B. Meyer on 01. November 2024. at 14:00

Every invention begins with a problem—and the creative act of seeing a problem where others might just see unchangeable reality. For one 5-year-old, the problem was simple: She liked to have her tummy rubbed as she fell asleep. But her mom, exhausted from working two jobs, often fell asleep herself while putting her daughter to bed. “So [the girl] invented a teddy bear that would rub her belly for her,” explains Stephanie Couch, executive director of the Lemelson-MIT Program. The program’s mission is to nurture the next generation of inventors and entrepreneurs.

Anyone can learn to be an inventor, Couch says, given the right resources and encouragement. “Invention doesn’t come from some innate genius, it’s not something that only really special people get to do,” she says. Her program creates invention-themed curricula for U.S. classrooms, ranging from kindergarten to community college.

We’re biased, but we hope that little girl grows up to be an engineer. By the time she comes of age, the act of invention may be something entirely new—reflecting the adoption of novel tools and the guiding forces of new social structures. Engineers, with their restless curiosity and determination to optimize the world around them, are continuously in the process of reinventing invention.

In this special issue, we bring you stories of people who are in the thick of that reinvention today. IEEE Spectrum is marking 60 years of publication this year, and we’re celebrating by highlighting both the creative act and the grindingly hard engineering work required to turn an idea into something world changing. In these pages, we take you behind the scenes of some awe-inspiring projects to reveal how technology is being made—and remade—in our time.

Inventors Are Everywhere

Invention has long been a democratic process. The economist B. Zorina Khan of Bowdoin College has noted that the U.S. Patent and Trademark Office has always endeavored to allow essentially anyone to try their hand at invention. From the beginning, the patent examiners didn’t care who the applicants were—anyone with a novel and useful idea who could pay the filing fee was officially an inventor.

This ethos continues today. It’s still possible for an individual to launch a tech startup from a garage or go on “Shark Tank” to score investors. The Swedish inventor Simone Giertz, for example, made a name for herself with YouTube videos showing off her hilariously bizarre contraptions, like an alarm clock with an arm that slapped her awake. The MIT innovation scholar Eric von Hippel has spotlighted today’s vital ecosystem of “user innovation,” in which inventors such as Giertz are motivated by their own needs and desires rather than ambitions of mass manufacturing.

But that route to invention gets you only so far, and the limits of what an individual can achieve have become starker over time. To tackle some of the biggest problems facing humanity today, inventors need a deep-pocketed government sponsor or corporate largess to muster the equipment and collective human brainpower required.

When we think about the challenges of scaling up, it’s helpful to remember Alexander Graham Bell and his collaborator Thomas Watson. “They invent this cool thing that allows them to talk between two rooms—so it’s a neat invention, but it’s basically a gadget,” says Eric Hintz, a historian of invention at the Smithsonian Institution. “To go from that to a transcontinental long-distance telephone system, they needed a lot more innovation on top of the original invention.” To scale their invention, Hintz says, Bell and his colleagues built the infrastructure that eventually evolved into Bell Labs, which became the standard-bearer for corporate R&D.

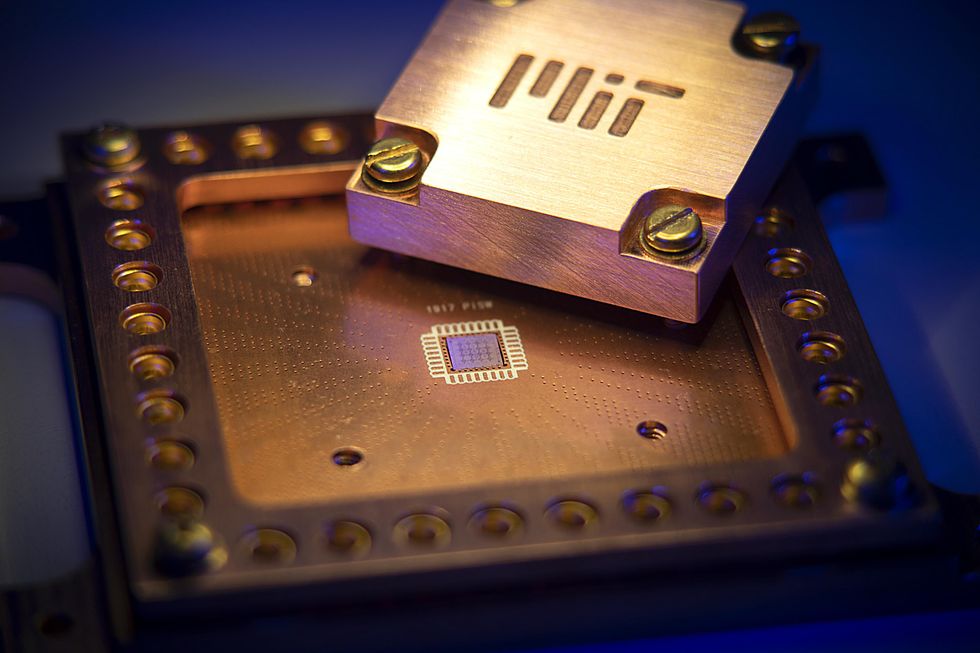

In this issue, we see engineers grappling with challenges of scale in modern problems. Consider the semiconductor technology supported by the U.S. CHIPS and Science Act, a policy initiative aimed at bolstering domestic chip production. Beyond funding manufacturing, it also provides US $11 billion for R&D, including three national centers where companies can test and pilot new technologies. As one startup tells the tale, this infrastructure will drastically speed up the lab-to-fab process.

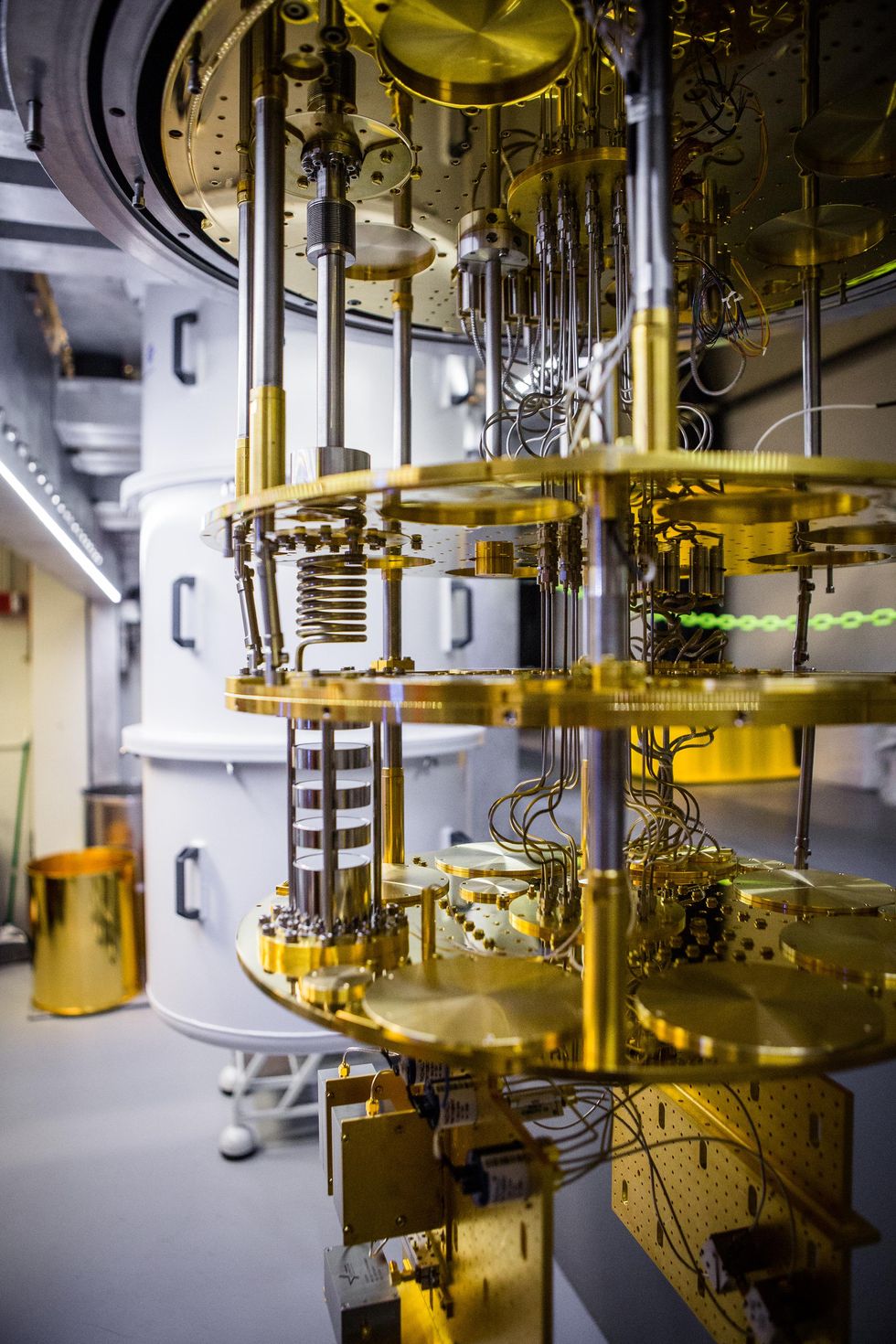

And then there are atomic clocks, the epitome of precision timekeeping. When researchers decided to build a commercial version, they had to shift their perspective, taking a sprawling laboratory setup and reimagining it as a portable unit fit for mass production and the rigors of the real world. They had to stop optimizing for precision and instead choose the most robust laser, and the atom that would go along with it.

These technology efforts benefit from infrastructure, brainpower, and cutting-edge new tools. One tool that may become ubiquitous across industries is artificial intelligence—and it’s a tool that could further expand access to the invention arena.

What if you had a team of indefatigable assistants at your disposal, ready to scour the world’s technical literature for material that could spark an idea, or to iterate on a concept 100 times before breakfast? That’s the promise of today’s generative AI. The Swiss company Iprova is exploring whether its AI tools can automate “eureka” moments for its clients, corporations that are looking to beat their competitors to the next big idea. The serial entrepreneur Steve Blank similarly advises young startup founders to embrace AI’s potential to accelerate product development; he even imagines testing product ideas on digital twins of customers. Although it’s still early days, generative AI offers inventors tools that have never been available before.

Measuring an Invention’s Impact

If AI accelerates the discovery process, and many more patentable ideas come to light as a result, then what? As it is, more than a million patents are granted every year, and we struggle to identify the ones that will make a lasting impact. Bryan Kelly, an economist at the Yale School of Management, and his collaborators made an attempt to quantify the impact of patents by doing a technology-assisted deep dive into U.S. patent records dating back to 1840. Using natural language processing, they identified patents that introduced novel phrasing that was then repeated in subsequent patents—an indicator of radical breakthroughs. For example, Elias Howe Jr.’s 1846 patent for a sewing machine wasn’t closely related to anything that came before but quickly became the basis of future sewing-machine patents.
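The idea of scoring a patent by phrasing that is new when it appears and then reused later can be illustrated with a toy sketch. This is not the method Kelly and his collaborators used; it is a simplified stand-in that assumes word bigrams approximate "phrasing," and the four-patent corpus, the function names, and the scoring rule are all hypothetical.

```python
import re


def terms(text):
    """Return the set of lowercase word bigrams, a crude proxy for 'phrasing'."""
    words = re.findall(r"[a-z]+", text.lower())
    return {" ".join(pair) for pair in zip(words, words[1:])}


def score_patents(patents):
    """Score each patent on novelty and later reuse.

    patents: list of (year, patent_id, text) tuples.
    Returns {patent_id: (n_novel, n_reused)}, where n_novel counts bigrams
    that no earlier patent used, and n_reused counts later patents that
    repeat at least one of those novel bigrams.
    """
    ordered = sorted(patents)  # chronological order
    term_sets = [terms(text) for _, _, text in ordered]
    seen = set()               # every bigram used by earlier patents
    scores = {}
    for i, (_, patent_id, _) in enumerate(ordered):
        novel = term_sets[i] - seen
        reused = sum(1 for later in term_sets[i + 1:] if novel & later)
        scores[patent_id] = (len(novel), reused)
        seen |= term_sets[i]
    return scores


# Hypothetical toy corpus, invented purely for illustration.
corpus = [
    (1840, "A", "improved plough blade for tilling soil"),
    (1846, "B", "sewing machine with lock stitch needle"),
    (1850, "C", "sewing machine with improved feed"),
    (1852, "D", "lock stitch sewing machine for leather"),
]

scores = score_patents(corpus)
for patent_id, (n_novel, n_reused) in scores.items():
    print(patent_id, n_novel, n_reused)
```

In this toy corpus, patent B introduces five new bigrams and two later patents reuse some of them, so it stands out in the way the article describes for Howe's sewing machine. A real analysis would need far more careful text processing and normalization across a very large corpus.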

Another foundational patent was the one awarded to an English bricklayer in 1824 for the invention of Portland cement, which is still the key ingredient in most of the world’s concrete. As Ted C. Fishman describes in his fascinating inquiry into the state of concrete today, this seemingly stable industry is in upheaval because of its heavy carbon emissions. The AI boom is fueling a construction boom in data centers, and all those buildings require billions of tons of concrete. Fishman takes readers into labs and startups where researchers are experimenting with climate-friendly formulations of cement and concrete. Who knows which of those experiments will result in a patent that echoes down the ages?

Some engineers start their invention process by thinking about the impact they want to make on the world. The eminent Indian technologist Raghunath Anant Mashelkar, who has popularized the idea of “Gandhian engineering,” advises inventors to work backward from “what we want to achieve for the betterment of humanity,” and to create problem-solving technologies that are affordable, durable, and not only for the elite.

Durability matters: Invention isn’t just about creating something brand new. It’s also about coming up with clever ways to keep an existing thing going. Such is the case with the Hubble Space Telescope. Originally designed to last 15 years, it’s been in orbit for twice that long and has actually gotten better with age, because engineers designed the satellite to be fixable and upgradable in space.

For all the invention activity around the globe—the World Intellectual Property Organization says that 3.5 million applications for patents were filed in 2022—it may be harder to invent something useful than it used to be. Not because “everything that can be invented has been invented,” as in the apocryphal quote attributed to the unfortunate head of the U.S. patent office in 1889. Rather, because so much education and experience are required before an inventor can even understand all the dimensions of the door they’re trying to crack open, much less come up with a strategy for doing so. Ben Jones, an economist at Northwestern’s Kellogg School of Management, has shown that the average age of great technological innovators rose by about six years over the course of the 20th century. “Great innovation is less and less the provenance of the young,” Jones concluded.

Consider designing something as complex as a nuclear fusion reactor, as Tom Clynes describes in “An Off-the-Shelf Stellarator.” Fusion researchers have spent decades trying to crack the code of commercially viable fusion—it’s more akin to a calling than a career. If they succeed, they will unlock essentially limitless clean energy with no greenhouse gas emissions or meltdown danger. That’s the dream that the physicists in a lab in Princeton, N.J., are chasing. But before they even started, they first had to gain an intimate understanding of all the wrong ways to build a fusion reactor. Once the team was ready to proceed, what they created was an experimental reactor that accelerates the design-build-test cycle. With new AI tools and unprecedented computational power, they’re now searching for the best ways to create the magnetic fields that will confine the plasma within the reactor. Already, two startups have spun out of the Princeton lab, both seeking a path to commercial fusion.

The stellarator story and many other articles in this issue showcase how one innovation leads to the next, and how one invention can enable many more. The legendary Dean Kamen, best known for mechanical devices like the Segway and the prosthetic “Luke” arm, is now trying to push forward the squishy world of biological manufacturing. In an interview, Kamen explains how his nonprofit is working on the infrastructure—bioreactors, sensors, and controls—that will enable companies to explore the possibilities of growing replacement organs. You could say that he’s inventing the launchpad so others can invent the rockets.

Sometimes everyone in a research field knows where the breakthrough is needed, but that doesn’t make it any easier to achieve. Case in point: the quest for a household humanoid robot that can perform domestic chores, switching effortlessly from frying an egg to folding laundry. Roboticists need better learning software that will enable their bots to navigate the uncertainties of the real world, and they also need cheaper and lighter actuators. Major advances in these two areas would unleash a torrent of creativity and may finally bring robot butlers into our homes.

And maybe the future roboticists who make those breakthroughs will have cause to thank Marina Umaschi Bers, a technologist at Boston College who cocreated the ScratchJr programming language and the KIBO robotics kit to teach kids the basics of coding and robotics in entertaining ways. She sees engineering as a playground, a place for children to explore and create, to be goofy or grandiose. If today’s kindergartners learn to think of themselves as inventors, who knows what they’ll create tomorrow?

-

Honor a Loved One With an IEEE Foundation Memorial Fund

by IEEE Foundation on 31. October 2024. at 18:00

As the philanthropic partner of IEEE, the IEEE Foundation expands the organization’s charitable body of work by inspiring philanthropic engagement that ignites a donor’s innermost interests and values.

One way the Foundation does so is by partnering with IEEE units to create memorial funds, which pay tribute to members, family, friends, teachers, professors, students, and others. This type of giving honors someone special while also supporting future generations of engineers and celebrating innovation.

Below are three recently created memorial funds that not only have made an impact on their beneficiaries and perpetuated the legacy of the namesake but also have a deep meaning for those who launched them.

EPICS in IEEE Fischer Mertel Community of Projects

The EPICS in IEEE Fischer Mertel Community of Projects was established to support projects “designed to inspire multidisciplinary teams of engineering students to collaborate and engineer solutions to address local community needs.”

The fund was created by the children of Joe Fischer and Herb Mertel to honor their fathers’ passion for mentoring students. Longtime IEEE members, Fischer and Mertel were active with the IEEE Electromagnetic Compatibility Society. Fischer was the society’s 1972 president and served on its board of directors for six years. Mertel served on the society’s board from 1979 to 1983 and again from 1989 to 1993.

“The EPICS in IEEE Fischer Mertel Community of Projects was established to inspire and support outstanding engineering ideas and efforts that help communities worldwide,” says Tina Mertel, Herb’s daughter. “Joe Fischer and my father had a lifelong friendship and excelled as engineering leaders and founders of their respective companies [Fischer Custom Communications and EMACO]. I think that my father would have been proud to know that their friendship and work are being honored in this way.”

The nine projects supported thus far have the potential to impact more than 104,000 people because of the work and collaboration of 190 students worldwide. The projects funded are intended to represent at least two of the EPICS in IEEE’s focus categories: education and outreach; human services; environmental; and access and abilities.

Here are a few of the projects:

- The Engineering Outreach at San Diego K–12 Schools project aims to bridge the city’s STEM education gap by sending IEEE members to schools to teach project-based lessons in mechanical, aerospace, electrical and computer engineering, as well as computer science. The project is led by the IEEE–Eta Kappa Nu honor society’s Kappa Psi chapter at the University of California San Diego and the San Diego Unified School District.

- Students from the Sahrdaya College of Engineering and Technology student branch in Kodakara, India, are developing an exoskeleton for nurses to support their lumbar spine region.

- Volunteers from the IEEE Uganda Section and local engineering students are designing and fabricating a stationary bicycle to act as a generator, providing power to families living in underserved communities. The goal of the project is to reduce air pollution caused by generators and supply reliable, affordable power to people in need.

- The IEEE Colombian Caribbean Section’s Increasing Inclusion of Visually Impaired People with a Mobile Application for English Learning project aims to ensure visually impaired students can learn to read, write, and speak English alongside their peers. The section’s members are developing a mobile app to help accomplish their goal.

IEEE AESS Michael C. Wicks Radar Student Travel Grant

The IEEE Michael C. Wicks Radar Student Travel Grant was established by IEEE Fellow Michael Wicks prior to his death in 2022. The grant provides travel support for graduate students who are the primary authors on a paper being presented at the annual IEEE Radar Conference. Wicks was an electronics engineer and a radio industry leader who was known for developing knowledge-based space-time adaptive processing. He believed in investing in the next generation and he wanted to provide an opportunity for that to happen.

Ten graduate students have been awarded the Wicks grant to date. This year two students from Region 8 (Africa, Europe, Middle East) and two students from Region 10 (Asia and Pacific) were able to travel to Denver to attend the IEEE Radar Conference and present their research. The papers they presented are “Target Shape Reconstruction From Multi-Perspective Shadows in Drone-Borne SAR Systems” and “Design of Convolutional Neural Networks for Classification of Ships from ISAR Images.”

Life Fellow Fumio Koyama and IEEE Fellow Constance J. Chang-Hasnain proudly display their IEEE Nick Holonyak, Jr. Medal for Semiconductor Optoelectronic Technologies at this year’s IEEE Honors Ceremony. They are accompanied by IEEE President-Elect Kathleen Kramer and IEEE President Tom Coughlin. Robb Cohen

IEEE Nick Holonyak Jr. Medal for Semiconductor Optoelectronic Technologies

The IEEE Nick Holonyak Jr. Medal for Semiconductor Optoelectronic Technologies was created with a memorial fund supported by some of Holonyak’s former graduate students to honor his work as a professor and mentor. Presented on behalf of the IEEE Board of Directors, the medal recognizes outstanding contributions to semiconductor optoelectronic devices and systems including high-energy-efficiency semiconductor devices and electronics.

Holonyak was a prolific inventor and longtime professor of electrical engineering and physics. In 1962, while working as a scientist at General Electric’s Advanced Semiconductor Laboratory in Syracuse, N.Y., he invented the first practical visible-spectrum LED and laser diode. His innovations are the basis of the devices now used in high-efficiency light bulbs and laser diodes. He left GE in 1963 to join the University of Illinois Urbana-Champaign as a professor of electrical engineering and physics at the invitation of John Bardeen, his Ph.D. advisor and a two-time Nobel Prize winner in physics. Holonyak retired from UIUC in 2013 but continued research collaborations at the university with young faculty members.

“In addition to his remarkable technical contributions, he was an excellent teacher and mentor to graduate students and young electrical engineers,” says Russell Dupuis, one of his doctoral students. “The impact of his innovations has improved the lives of most people on the earth, and this impact will only increase with time. It was my great honor to be one of his students and to help create this important IEEE medal to ensure that his work will be remembered in the future.”

The award was presented for the first time at this year’s IEEE Honors Ceremony, in Boston, to IEEE Fellow Constance Chang-Hasnain and Life Fellow Fumio Koyama for “pioneering contributions to vertical cavity surface-emitting laser (VCSEL) and VCSEL-based photonics for optical communications and sensing.”

Establishing a memorial fund through the IEEE Foundation is a gratifying way to recognize someone who has touched your life while also advancing technology for humanity. If you are interested in learning more about memorial and tribute funds, reach out to the IEEE Foundation team: donate@ieee.org.

-

New Carrier Fluid Makes Hydrogen Way Easier to Transport

by Willie D. Jones on 31. October 2024. at 12:00

Imagine pulling up to a refueling station and filling your vehicle’s tank with liquid hydrogen, as safe and convenient to handle as gasoline or diesel, without the need for high-pressure tanks or cryogenic storage. This vision of a sustainable future could become a reality if a Calgary, Canada–based company, Ayrton Energy, can scale up its innovative method of hydrogen storage and distribution. Ayrton’s technology could make hydrogen a viable, one-to-one replacement for fossil fuels in existing infrastructure like pipelines, fuel tankers, rail cars, and trucks.

The company’s approach is to use liquid organic hydrogen carriers (LOHCs) to make it easier to transport and store hydrogen. The method chemically bonds hydrogen to carrier molecules, which absorb hydrogen molecules and make them more stable—kind of like hydrogenating cooking oil to produce margarine.

A researcher pours a sample of Ayrton’s LOHC fluid into a vial. Ayrton Energy

The approach would allow liquid hydrogen to be transported and stored in ambient conditions, rather than in the high-pressure, cryogenic tanks (to hold it at temperatures below -252 ºC) currently required for keeping hydrogen in liquid form. It would also be a big improvement on gaseous hydrogen, which is highly volatile and difficult to keep contained.

Founded in 2021, Ayrton is one of several companies across the globe developing LOHCs, including Japan’s Chiyoda and Mitsubishi, Germany’s Covalion, and China’s Hynertech. But toxicity, energy density, and input energy issues have limited LOHCs as contenders for making liquid hydrogen feasible. Ayrton says its formulation eliminates these trade-offs.

Safe, Efficient Hydrogen Fuel for Vehicles

Conventional LOHC technologies used by most of the aforementioned companies rely on substances such as toluene, which forms methylcyclohexane when hydrogenated. These carriers pose safety risks due to their flammability and volatility. Hydrogenious LOHC Technologies in Erlangen, Germany, and other hydrogen fuel companies have shifted toward dibenzyltoluene, a more stable carrier that holds more hydrogen per unit volume than methylcyclohexane, though it requires higher temperatures (and thus more energy) to bind and release the hydrogen. Dibenzyltoluene hydrogenation occurs at between 3 and 10 megapascals (30 and 100 bar) and 200–300 ºC, compared with 10 MPa (100 bar) and just under 200 ºC for methylcyclohexane.

Ayrton’s proprietary oil-based hydrogen carrier not only captures and releases hydrogen with less input energy than is required for other LOHCs, but also stores more hydrogen than methylcyclohexane can—55 kilograms per cubic meter compared with methylcyclohexane’s 50 kg/m³. Dibenzyltoluene holds more hydrogen per unit volume (up to 65 kg/m³), but Ayrton’s approach to infusing the carrier with hydrogen atoms promises to cost less. Hydrogenation or dehydrogenation with Ayrton’s carrier fluid occurs at 0.1 megapascal (1 bar) and about 100 ºC, says founder and CEO Natasha Kostenuk. And as with the other LOHCs, after hydrogenation it can be transported and stored at ambient temperatures and pressures.
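To put the volumetric figures above in practical terms, here is a rough sketch of how much carrier fluid each option would need to hold the same amount of hydrogen. The densities are the ones quoted in the article (the dibenzyltoluene value is its "up to" figure); the 1-tonne payload and the function name are assumptions chosen only for illustration.

```python
# Volumetric hydrogen density, in kg of H2 per cubic meter of carrier,
# as quoted in the article (dibenzyltoluene is the "up to" figure).
DENSITY_KG_PER_M3 = {
    "methylcyclohexane": 50,
    "Ayrton carrier": 55,
    "dibenzyltoluene": 65,
}

PAYLOAD_KG = 1000  # hypothetical delivery of 1 tonne of hydrogen


def carrier_volume_m3(hydrogen_kg, density_kg_per_m3):
    """Volume of hydrogenated carrier needed to hold the given mass of H2."""
    return hydrogen_kg / density_kg_per_m3


volumes = {
    name: carrier_volume_m3(PAYLOAD_KG, density)
    for name, density in DENSITY_KG_PER_M3.items()
}

for name, volume in volumes.items():
    print(f"{name}: {volume:.1f} m3 of carrier per tonne of H2")
```

Under these assumptions, a tonne of hydrogen takes about 20 cubic meters of methylcyclohexane, roughly 18 of Ayrton's carrier, and roughly 15 of dibenzyltoluene. The sketch ignores the energy and pressure needed to load and unload the hydrogen, which is where Ayrton says its advantage lies.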

“Judges described [Ayrton’s approach] as a critical technology for the deployment of hydrogen at large scale.” —Katie Richardson, National Renewable Energy Lab

Ayrton’s LOHC fluid is as safe to handle as margarine, but it’s still a chemical, says Kostenuk. “I wouldn’t drink it. If you did, you wouldn’t feel very good. But it’s not lethal,” she says.

Kostenuk and fellow Ayrton cofounder Brandy Kinkead (who serves as the company’s chief technical officer) were originally trying to bring hydrogen generators to market to fill gaps in the electrical grid. “We were looking for fuel cells and hydrogen storage. Fuel cells were easy to find, but we couldn’t find a hydrogen storage method or medium that would be safe and easy to transport to fuel our vision of what we were trying to do with hydrogen generators,” Kostenuk says. During the search, they came across LOHC technology but weren’t satisfied with the trade-offs demanded by existing liquid hydrogen carriers. “We had the idea that we could do it better,” she says. The duo pivoted, adjusting their focus from hydrogen generators to hydrogen storage solutions.

“Everybody gets excited about hydrogen production and hydrogen end use, but they forget that you have to store and manage the hydrogen,” Kostenuk says. Incompatibility with current storage and distribution has been a barrier to adoption, she says. “We’re really excited about being able to reuse existing infrastructure that’s in place all over the world.” Ayrton’s hydrogenated liquid has fuel-cell-grade (99.999 percent) hydrogen purity, so there’s no advantage in using pure liquid hydrogen with its need for subzero temperatures, according to the company.

The main challenge the company faces is the set of issues that come along with any technology scaling up from pilot-stage production to commercial manufacturing, says Kostenuk. “A crucial part of that is aligning ourselves with the right manufacturing partners along the way,” she notes.

Asked about how Ayrton is dealing with some other challenges common to LOHCs, Kostenuk says Ayrton has managed to sidestep them. “We stayed away from materials that are expensive and hard to procure, which will help us avoid any supply chain issues,” she says. By performing the reactions at such low temperatures, Ayrton can get its carrier fluid to withstand 1,000 hydrogenation-dehydrogenation cycles before it no longer holds enough hydrogen to be useful. Conventional LOHCs are limited to a couple of hundred cycles before the high temperatures required for bonding and releasing the hydrogen break down the fluid and diminish its storage capacity, Kostenuk says.

Breakthrough in Hydrogen Storage Technology

In acknowledgement of what Ayrton’s nontoxic, oil-based carrier fluid could mean for the energy and transportation sectors, the U.S. National Renewable Energy Lab (NREL) at its annual Industry Growth Forum in May named Ayrton an “outstanding early-stage venture.” A selection committee of more than 180 climate tech and cleantech investors and industry experts chose Ayrton from a pool of more than 200 initial applicants, says Katie Richardson, group manager of NREL’s Innovation and Entrepreneurship Center, which organized the forum. The committee based its decision on the company’s innovation, market positioning, business model, team, next steps for funding, technology, capital use, and quality of pitch presentation. “Judges described Ayrton’s approach as a critical technology for the deployment of hydrogen at large scale,” Richardson says.

As a next step toward enabling hydrogen to push gasoline and diesel aside, “we’re talking with hydrogen producers who are right now offering their customers cryogenic and compressed hydrogen,” says Kostenuk. “If they offered LOHC, it would enable them to deliver across longer distances, in larger volumes, in a multimodal way.” The company is also talking to some industrial site owners who could use the hydrogenated LOHC for buffer storage to hold onto some of the energy they’re getting from clean, intermittent sources like solar and wind. Another natural fit, she says, is energy service providers that are looking for a reliable method of seasonal storage beyond what batteries can offer. The goal is to eventually scale up enough to become the go-to alternative (or perhaps the standard) fuel for cars, trucks, trains, and ships.

-

The AI Boom Rests on Billions of Tonnes of Concrete

by Ted C. Fishman on 30. October 2024. at 13:00

Along the country road that leads to ATL4, a giant data center going up east of Atlanta, dozens of parked cars and pickups lean tenuously on the narrow dirt shoulders. The many out-of-state plates are typical of the phalanx of tradespeople who muster for these massive construction jobs. With tech giants, utilities, and governments budgeting upwards of US $1 trillion for capital expansion to join the global battle for AI dominance, data centers are the bunkers, factories, and skunkworks—and concrete and electricity are the fuel and ammunition.

To the casual observer, the data industry can seem incorporeal, its products conjured out of weightless bits. But as I stand beside the busy construction site for DataBank’s ATL4, what impresses me most is the gargantuan amount of material—mostly concrete—that gives shape to the goliath that will house, secure, power, and cool the hardware of AI. Big data is big concrete. And that poses a big problem.

Concrete is not just a major ingredient in data centers and the power plants being built to energize them. As the world’s most widely manufactured material, concrete—and especially the cement within it—is also a major contributor to climate change, accounting for around 6 percent of global greenhouse gas emissions. Data centers use so much concrete that the construction boom is wrecking tech giants’ commitments to eliminate their carbon emissions. Even though Google, Meta, and Microsoft have touted goals to be carbon neutral or negative by 2030, and Amazon by 2040, the industry is now moving in the wrong direction.

Last year, Microsoft’s carbon emissions jumped by over 30 percent, primarily due to the materials in its new data centers. Google’s greenhouse emissions are up by nearly 50 percent over the past five years. As data centers proliferate worldwide, Morgan Stanley projects that data centers will release about 2.5 billion tonnes of CO2 each year by 2030—or about 40 percent of what the United States currently emits from all sources.

But even as innovations in AI and the big-data construction boom are boosting emissions for the tech industry’s hyperscalers, the reinvention of concrete could also play a big part in solving the problem. Over the last decade, there’s been a wave of innovation, some of it profit-driven, some of it from academic labs, aimed at fixing concrete’s carbon problem. Pilot plants are being fielded to capture CO2 from cement plants and sock it safely away. Other projects are cooking up climate-friendlier recipes for cements. And AI and other computational tools are illuminating ways to drastically cut carbon by using less cement in concrete and less concrete in data centers, power plants, and other structures.

Demand for green concrete is clearly growing. Amazon, Google, Meta, and Microsoft recently joined an initiative led by the Open Compute Project Foundation to accelerate testing and deployment of low-carbon concrete in data centers, for example. Supply is increasing, too—though it’s still minuscule compared to humanity’s enormous appetite for moldable rock. But if the green goals of big tech can jump-start innovation in low-carbon concrete and create a robust market for it as well, the boom in big data could eventually become a boon for the planet.

Hyperscaler Data Centers: So Much Concrete

At the construction site for ATL4, I’m met by Tony Qoori, the company’s big, friendly, straight-talking head of construction. He says that this giant building and four others DataBank has recently built or is planning in the Atlanta area will together add 133,000 square meters (1.44 million square feet) of floor space.

They all follow a universal template that Qoori developed to optimize the construction of the company’s ever-larger centers. At each site, trucks haul in more than a thousand prefabricated concrete pieces: wall panels, columns, and other structural elements. Workers quickly assemble the precision-measured parts. Hundreds of electricians swarm the building to wire it up in just a few days. Speed is crucial when construction delays can mean losing ground in the AI battle.

The ATL4 data center outside Atlanta is one of five being built by DataBank. Together they will add over 130,000 square meters of floor space. DataBank

That battle can be measured in new data centers and floor space. The United States is home to more than 5,000 data centers today, and the Department of Commerce forecasts that number to grow by around 450 a year through 2030. Worldwide, the number of data centers now exceeds 10,000, and analysts project another 26.5 million m² of floor space over the next five years. Here in metro Atlanta, developers broke ground last year on projects that will triple the region’s data-center capacity. Microsoft, for instance, is planning a 186,000-m² complex; big enough to house around 100,000 rack-mounted servers, it will consume 324 megawatts of electricity.

The velocity of the data-center boom means that no one is pausing to await greener cement. For now, the industry’s mantra is “Build, baby, build.”

“There’s no good substitute for concrete in these projects,” says Aaron Grubbs, a structural engineer at ATL4. The latest processors going on the racks are bigger, heavier, hotter, and far more power hungry than previous generations. As a result, “you add a lot of columns,” Grubbs says.

1,000 Companies Working on Green Concrete

Concrete may not seem an obvious star in the story of how electricity and electronics have permeated modern life. Other materials—copper and silicon, aluminum and lithium—get higher billing. But concrete provides the literal, indispensable foundation for the world’s electrical workings. It is the solid, stable, durable, fire-resistant stuff that makes power generation and distribution possible. It undergirds nearly all advanced manufacturing and telecommunications. What was true in the rapid build-out of the power industry a century ago remains true today for the data industry: Technological progress begets more growth—and more concrete. Although each generation of processor and memory squeezes more computing onto each chip, and advances in superconducting microcircuitry raise the tantalizing prospect of slashing the data center’s footprint, Qoori doesn’t think his buildings will shrink to the size of a shoebox anytime soon. “I’ve been through that kind of change before, and it seems the need for space just grows with it,” he says.

By weight, concrete is not a particularly carbon-intensive material. Creating a kilogram of steel, for instance, releases about 2.4 times as much CO2 as a kilogram of cement does. But the global construction industry consumes about 35 billion tonnes of concrete a year. That’s about 4 tonnes for every person on the planet and twice as much as all other building materials combined. It’s that massive scale—and the associated cost and sheer number of producers—that creates both a threat to the climate and inertia that resists change.
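The per-person figure follows from simple division. As a rough check, assuming a world population of about 8 billion (a round number that the article does not state):

```latex
\frac{35 \times 10^{9}\ \text{tonnes per year}}{8 \times 10^{9}\ \text{people}} \approx 4.4\ \text{tonnes per person per year}
```

That is consistent with the "about 4 tonnes" cited above.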

At its Edmonton, Alberta, plant [above], Heidelberg Materials is adding systems to capture carbon dioxide produced by the manufacture of Portland cement.Heidelberg Materials North America

Yet change is afoot. When I visited the innovation center operated by the Swiss materials giant Holcim, in Lyon, France, research executives told me about the database they’ve assembled of nearly 1,000 companies working to decarbonize cement and concrete. None yet has enough traction to measurably reduce global concrete emissions. But the innovators hope that the boom in data centers—and in associated infrastructure such as new nuclear reactors and offshore wind farms, where each turbine foundation can use up to 7,500 cubic meters of concrete—may finally push green cement and concrete beyond labs, startups, and pilot plants.

Why cement production emits so much carbon

Though the terms “cement” and “concrete” are often conflated, they are not the same thing. A popular analogy in the industry is that cement is the egg in the concrete cake. Here’s the basic recipe: Blend cement with larger amounts of sand and other aggregates. Then add water, to trigger a chemical reaction with the cement. Wait a while for the cement to form a matrix that pulls all the components together. Let sit as it cures into a rock-solid mass.

Portland cement, the key binder in most of the world’s concrete, was serendipitously invented in England by William Aspdin, while he was tinkering with earlier mortars that his father, Joseph, had patented in 1824. More than a century of science has revealed the essential chemistry of how cement works in concrete, but new findings are still leading to important innovations, as well as insights into how concrete absorbs atmospheric carbon as it ages.

As in the Aspdins’ day, the process to make Portland cement still begins with limestone, a sedimentary rock made from crystalline forms of calcium carbonate. Most of the limestone quarried for cement originated hundreds of millions of years ago, when ocean creatures mineralized calcium and carbonate in seawater to make shells, bones, corals, and other hard bits.

Cement producers often build their large plants next to limestone quarries that can supply decades’ worth of stone. The stone is crushed and then heated in stages as it is combined with lesser amounts of other minerals that typically include calcium, silicon, aluminum, and iron. What emerges from the mixing and cooking are small, hard nodules called clinker. A bit more processing, grinding, and mixing turns those pellets into powdered Portland cement, which accounts for about 90 percent of the CO2 emitted by the production of conventional concrete [see infographic, “Roads to Cleaner Concrete”].

Karen Scrivener, shown in her lab at EPFL, has developed concrete recipes that reduce emissions by 30 to 40 percent.Stefan Wermuth/Bloomberg/Getty Images

Decarbonizing Portland cement is often called heavy industry’s “hard problem” because of two processes fundamental to its manufacture. The first process is combustion: To coax limestone’s chemical transformation into clinker, large heaters and kilns must sustain temperatures around 1,500 °C. Currently that means burning coal, coke, fuel oil, or natural gas, often along with waste plastics and tires. The exhaust from those fires generates 35 to 50 percent of the cement industry’s emissions. Most of the remaining emissions result from gaseous CO2 liberated by the chemical transformation of the calcium carbonate (CaCO3) into calcium oxide (CaO), a process called calcination. That gas also usually heads straight into the atmosphere.
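The calcination step can be written as a balanced reaction. As a rough sketch, assuming pure calcium carbonate and complete conversion (real limestone carries impurities), standard molar masses give the share of the stone's mass that leaves as gas:

```latex
\mathrm{CaCO_3} \;\xrightarrow{\ \text{heat}\ }\; \mathrm{CaO} + \mathrm{CO_2}

\frac{M(\mathrm{CO_2})}{M(\mathrm{CaCO_3})} \approx \frac{44.0\ \mathrm{g/mol}}{100.1\ \mathrm{g/mol}} \approx 0.44
```

Under those assumptions, fully calcining a tonne of calcium carbonate frees roughly 0.44 tonne of CO2, whatever fuel heats the kiln.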

Concrete production, in contrast, is mainly a business of mixing cement powder with other ingredients and then delivering the slurry speedily to its destination before it sets. Most concrete in the United States is prepared to order at batch plants—souped-up materials depots where the ingredients are combined, dosed out from hoppers into special mixer trucks, and then driven to job sites. Because concrete grows too stiff to work after about 90 minutes, concrete production is highly local. There are more ready-mix batch plants in the United States than there are Burger King restaurants.

Batch plants can offer thousands of potential mixes, customized to fit the demands of different jobs. Concrete in a hundred-story building differs from that in a swimming pool. With flexibility to vary the quality of sand and the size of the stone—and to add a wide variety of chemicals—batch plants have more tricks for lowering carbon emissions than any cement plant does.

Cement plants that capture carbon

China accounts for more than half of the concrete produced and used in the world, but companies there are hard to track. Outside of China, the top three multinational cement producers—Holcim, Heidelberg Materials in Germany, and Cemex in Mexico—have launched pilot programs to snare CO2 emissions before they escape and then bury the waste deep underground. To do that, they’re taking carbon capture and storage (CCS) technology already used in the oil and gas industry and bolting it onto their cement plants.

These pilot programs will need to scale up without eating profits, something that eluded the coal industry when it tried CCS decades ago. Tough questions also remain about where exactly to store billions of tonnes of CO2 safely, year after year.

The appeal of CCS for cement producers is that they can continue using existing plants while still making progress toward carbon neutrality, which trade associations have committed to reach by 2050. But with well over 3,000 plants around the world, adding CCS to all of them would take enormous investment. Currently less than 1 percent of the global supply is low-emission cement. Accenture, a consultancy, estimates that outfitting the whole industry for carbon capture could cost up to $900 billion.

“The economics of carbon capture is a monster,” says Rick Chalaturnyk, a professor of geotechnical engineering at the University of Alberta, in Edmonton, Canada, who studies carbon capture in the petroleum and power industries. He sees incentives for the early movers on CCS, however. “If Heidelberg, for example, wins the race to the lowest carbon, it will be the first [cement] company able to supply those customers that demand low-carbon products”—customers such as hyperscalers.

Though cement companies seem unlikely to invest their own billions in CCS, generous government subsidies have enticed several to begin pilot projects. Heidelberg has announced plans to start capturing CO2 from its Edmonton operations in late 2026, transforming it into what the company claims would be “the world’s first full-scale net-zero cement plant.” Exhaust gas will run through stations that purify the CO2 and compress it into a liquid, which will then be transported to chemical plants to turn it into products or to depleted oil and gas reservoirs for injection underground, where hopefully it will stay put for an epoch or two.

Chalaturnyk says that the scale of the Edmonton plant, which aims to capture a million tonnes of CO2 a year, is big enough to give CCS technology a reasonable test. Proving the economics is another matter. Half the $1 billion cost for the Edmonton project is being paid by the governments of Canada and Alberta.

ROADS TO CLEANER CONCRETE

As the big-data construction boom boosts the tech industry’s emissions, the reinvention of concrete could play a major role in solving the problem.

• CONCRETE TODAY Most of the greenhouse emissions from concrete come from the production of Portland cement, which requires high heat and releases carbon dioxide (CO2) directly into the air.

• CONCRETE TOMORROW At each stage of cement and concrete production, advances in ingredients, energy supplies, and uses of concrete promise to reduce waste and pollution.

The U.S. Department of Energy has similarly offered Heidelberg up to $500 million to help cover the cost of attaching CCS to its Mitchell, Ind., plant and burying up to 2 million tonnes of CO2 per year below the plant. And the European Union has gone even bigger, allocating nearly €1.5 billion ($1.6 billion) from its Innovation Fund to support carbon capture at cement plants in seven of its member nations.

These tests are encouraging, but they are all happening in rich countries, where demand for concrete peaked decades ago. Even in China, concrete production has started to flatten. All the growth in global demand through 2040 is expected to come from less-affluent countries, where populations are still growing and quickly urbanizing. According to projections by the Rhodium Group, cement production in those regions is likely to rise from around 30 percent of the world’s supply today to 50 percent by 2050 and 80 percent before the end of the century.

So will rich-world CCS technology translate to the rest of the world? I asked Juan Esteban Calle Restrepo, the CEO of Cementos Argos, the leading cement producer in Colombia, about that when I sat down with him recently at his office in Medellín. He was frank. “Carbon capture may work for the U.S. or Europe, but countries like ours cannot afford that,” he said.

Better cement through chemistry

As long as cement plants run limestone through fossil-fueled kilns, they will generate excessive amounts of carbon dioxide. But there may be ways to ditch both the limestone and the kilns. Labs and startups have been finding replacements for limestone, such as calcined kaolin clay and fly ash, that don’t release CO2 when heated. Kaolin clays are abundant around the world and have been used for centuries in Chinese porcelain and more recently in cosmetics and paper. Fly ash (a messy, toxic by-product of coal-fired power plants) is cheap and still widely available, even as coal power dwindles in many regions.

At the Swiss Federal Institute of Technology Lausanne (EPFL), Karen Scrivener and colleagues developed cements that blend calcined kaolin clay and ground limestone with a small portion of clinker. Calcining clay can be done at temperatures low enough that electricity from renewable sources can do the job. Various studies have found that the blend, known as LC3, can reduce overall emissions by 30 to 40 percent compared to those of Portland cement.

LC3 is also cheaper to make than Portland cement and performs as well for nearly all common uses. As a result, calcined clay plants have popped up across Africa, Europe, and Latin America. In Colombia, Cementos Argos is already producing more than 2 million tonnes of the stuff annually. The World Economic Forum’s Centre for Energy and Materials counts LC3 among the best hopes for the decarbonization of concrete. Wide adoption by the cement industry, the centre reckons, “can help prevent up to 500 million tonnes of CO2 emissions by 2030.”

In a win-win for the environment, fly ash can also be used as a building block for low- and even zero-emission concrete, and the high heat of processing neutralizes many of the toxins it contains. Ancient Romans used volcanic ash to make slow-setting but durable concrete: The Pantheon, built nearly two millennia ago with ash-based cement, is still in great shape.

Coal fly ash is a cost-effective ingredient that has reactive properties similar to those of Roman cement and Portland cement. Many concrete plants already add fresh fly ash to their concrete mixes, replacing 15 to 35 percent of the cement. The ash improves the workability of the concrete, and though the resulting concrete is not as strong for the first few months, it grows stronger than regular concrete as it ages, like the Pantheon.

University labs have tested concretes made entirely with fly ash and found that some actually outperform the standard variety. More than 15 years ago, researchers at Montana State University used concrete made with 100 percent fly ash in the floors and walls of a credit union and a transportation research center. But performance depends greatly on the chemical makeup of the ash, which varies from one coal plant to the next, and on following a tricky recipe. The decommissioning of coal-fired plants has also been making fresh fly ash scarcer and more expensive.

At Sublime Systems’ pilot plant in Massachusetts, the company is using electrochemistry instead of heat to produce lime silicate cements that can replace Portland cement.Tony Luong

That has spurred new methods to treat and use fly ash that’s been buried in landfills or dumped into ponds. Such industrial burial grounds hold enough fly ash to make concrete for decades, even after every coal plant shuts down. Utah-based Eco Material Technologies is now producing cements that include both fresh and recovered fly ash as ingredients. The company claims it can replace up to 60 percent of the Portland cement in concrete—and that a new variety, suitable for 3D printing, can substitute entirely for Portland cement.

Hive 3D Builders, a Houston-based startup, has been feeding that low-emissions concrete into robots that are printing houses in several Texas developments. “We are 100 percent Portland cement–free,” says Timothy Lankau, Hive 3D’s CEO. “We want our homes to last 1,000 years.”

Sublime Systems, a startup spun out of MIT by battery scientists, uses electrochemistry rather than heat to make low-carbon cement from rocks that don’t contain carbon. Similar to a battery, Sublime’s process uses a voltage between an anode and a cathode to create a pH gradient that isolates silicates and reactive calcium, in the form of lime (CaO). The company mixes those ingredients together to make a cement with no fugitive carbon, no kilns or furnaces, and binding power comparable to that of Portland cement. With the help of $87 million from the U.S. Department of Energy, Sublime is building a plant in Holyoke, Mass., that will be powered almost entirely by hydroelectricity. Recently the company was tapped to provide concrete for a major offshore wind farm planned off the coast of Martha’s Vineyard.

Software takes on the hard problem of concrete

It is unlikely that any one innovation will allow the cement industry to hit its target of carbon neutrality before 2050. New technologies take time to mature, scale up, and become cost-competitive. In the meantime, says Philippe Block, a structural engineer at ETH Zurich, smart engineering can reduce carbon emissions through the leaner use of materials.

His research group has developed digital design tools that make clever use of geometry to maximize the strength of concrete structures while minimizing their mass. The team’s designs start with the soaring architectural elements of ancient temples, cathedrals, and mosques—in particular, vaults and arches—which they miniaturize and flatten and then 3D print or mold inside concrete floors and ceilings. The lightweight slabs, suitable for the upper stories of apartment and office buildings, use much less concrete and steel reinforcement and have a CO2 footprint that’s reduced by 80 percent.

There’s hidden magic in such lean design. In multistory buildings, much of the mass of concrete is needed just to hold the weight of the material above it. The carbon savings of Block’s lighter slabs thus compound, because the size, cost, and emissions of a building’s conventional-concrete elements are slashed.

Vaulted, a Swiss startup, uses digital design tools to minimize the concrete in floors and ceilings, cutting their CO2 footprint by 80 percent.Vaulted

In Dübendorf, Switzerland, a wildly shaped experimental building has floors, roofs, and ceilings created by Block’s structural system. Vaulted, a startup spun out of ETH, is engineering and fabricating the lighter floors of a 10-story office building under construction in Zug, Switzerland.

That country has also been a leader in smart ways to recycle and reuse concrete, rather than simply landfilling demolition rubble. This is easier said than done—concrete is tough stuff, riddled with rebar. But there’s an economic incentive: Raw materials such as sand and limestone are becoming scarcer and more costly. Some jurisdictions in Europe now require that new buildings be made from recycled and reused materials. The new addition of the Kunsthaus Zürich museum, a showcase of exquisite Modernist architecture, uses recycled material for all but 2 percent of its concrete.

As new policies goose demand for recycled materials and threaten to restrict future use of Portland cement across Europe, Holcim has begun building recycling plants that can reclaim cement clinker from old concrete. It recently turned the demolition rubble from some 1960s apartment buildings outside Paris into part of a 220-unit housing complex—touted as the first building made from 100 percent recycled concrete. The company says it plans to build concrete recycling centers in every major metro area in Europe and, by 2030, to include 30 percent recycled material in all of its cement.

Further innovations in low-carbon concrete are certain to come, particularly as the powers of machine learning are applied to the problem. Over the past decade, the number of research papers reporting on computational tools to explore the vast space of possible concrete mixes has grown exponentially. Much as AI is being used to accelerate drug discovery, the tools learn from huge databases of proven cement mixes and then apply their inferences to evaluate untested mixes.

Researchers from the University of Illinois and Chicago-based Ozinga, one of the largest private concrete producers in the United States, recently worked with Meta to feed 1,030 known concrete mixes into an AI. The project yielded a novel mix that will be used for sections of a data-center complex in DeKalb, Ill. The AI-derived concrete has a carbon footprint 40 percent lower than the conventional concrete used on the rest of the site. Ryan Cialdella, Ozinga’s vice president of innovation, smiles as he notes the virtuous circle: AI systems that live in data centers can now help cut emissions from the concrete that houses them.

A sustainable foundation for the information age

Cheap, durable, and abundant yet unsustainable, concrete made with Portland cement has been one of modern technology’s Faustian bargains. The built world is on track to double in floor space by 2060, adding 230,000 km2, or more than half the area of California. Much of that will house the 2 billion more people we are likely to add to our numbers. As global transportation, telecom, energy, and computing networks grow, their new appendages will rest upon concrete. But if concrete doesn’t change, we will perversely be forced to produce even more concrete to protect ourselves from the coming climate chaos, with its rising seas, fires, and extreme weather.

The AI-driven boom in data centers is a strange bargain of its own. In the future, AI may help us live even more prosperously, or it may undermine our freedoms, civilities, employment opportunities, and environment. But solutions to the bad climate bargain that AI’s data centers foist on the planet are at hand, if there’s a will to deploy them. Hyperscalers and governments are among the few organizations with the clout to rapidly change what kinds of cement and concrete the world uses, and how those are made. With a pivot to sustainability, concrete’s unique scale makes it one of the few materials that could do most to protect the world’s natural systems. We can’t live without concrete—but with some ambitious reinvention, we can thrive with it.

-

Teens Gain Experience at IEEE’s TryEngineering Summer Institute

by Robert Schneider on 29. October 2024. at 19:00

The future of engineering is bright, and it’s being shaped by the young minds at the TryEngineering Summer Institute (TESI), a program administered by IEEE Educational Activities. This year more than 300 students attended TESI to fuel their passion for engineering and prepare for higher education and careers. Sessions were held from 30 June through 2 August on the campuses of Rice University, the University of Pennsylvania, and the University of San Diego.

The program is an immersive experience designed for students ages 13 to 17. It offers hands-on projects, interactive workshops, field trips, and insights into the profession from practicing engineers. Participants get to stay on a college campus, providing them with a preview of university life.

Student turned instructor

One future innovator is Natalie Ghannad, who participated in the program as a student in 2022 and was a member of this year’s instructional team in Houston at Rice University. Ghannad is in her second year as an electrical engineering student at the University of San Francisco. University students join forces with science and engineering teachers at each TESI location to serve as instructors.

For many years, Ghannad wanted to follow in her mother’s footsteps and become a pediatric neurosurgeon. As a high school junior in Houston in 2022, however, she had a change of heart and decided to pursue engineering after participating in the TESI at Rice. She received a full scholarship from the IEEE Foundation TESI Scholarship Fund, supported by IEEE societies and councils.

“I really liked that it was hands-on,” Ghannad says. “From the get-go, we were introduced to 3D printers and laser cutters.”

The benefit of participating in the program, she says, was “having the opportunity to not just do the academic side of STEM but also to really get to play around, get your hands dirty, and figure out what you’re doing.”

“Looking back,” she adds, “there are so many parallels between what I’ve actually had to do as a college student, and having that knowledge from the Summer Institute has really been great.”

She was inspired to volunteer as a teaching assistant because, she says, “I know I definitely want to teach, have the opportunity to interact with kids, and also be part of the future of STEM.”

More than 90 students attended the program at Rice. They visited Space Center Houston, where former astronauts talked to them about the history of space exploration.

Participants also were treated to presentations by guest speakers including IEEE Senior Member Phil Bautista, the founder of Bull Creek Data, a consulting company that provides technical solutions; IEEE Senior Member Christopher Sanderson, chair of the IEEE Region 5 Houston Section; and James Burroughs, a standards manager for Siemens in Atlanta. Burroughs, who spoke at all three TESI events this year, provided insight on overcoming barriers to do the important work of an engineer.

Learning about transit systems and careers

The University of Pennsylvania, in Philadelphia, hosted the East Coast TESI event this year. Students were treated to a field trip to the Southeastern Pennsylvania Transportation Authority (SEPTA), one of the largest transit systems in the country. Engineers from AECOM, a global infrastructure consulting firm with offices in Philadelphia that worked closely with SEPTA on its most recent station renovation, collaborated with IEEE to host the trip.

Participants also heard from guest speakers including Api Appulingam, chief development officer of the Philadelphia International Airport, who told the students the inspiring story of her career.

Guest speakers from Google and Meta

Students who attended the TESI camp at the University of San Diego visited Qualcomm. Hosted by the IEEE Region 6 director, Senior Member Kathy Herring Hayashi, they learned about cutting-edge technology and toured the Qualcomm Museum.

Students also heard from guest speakers including IEEE Member Andrew Saad, an engineer at Google; Gautam Deryanni, a silicon validation engineer at Meta; Kathleen Kramer, 2025 IEEE president and a professor of electrical engineering at the University of San Diego; as well as Burroughs.

“I enjoyed the opportunity to meet new, like-minded people and enjoy fun activities in the city, as well as get a sense of the dorm and college life,” one participant said.

Hands-on projects

In addition to field trips and guest speakers, participants at each location worked on several hands-on projects highlighting the engineering design process. In the toxic popcorn challenge, the students designed a process to safely remove harmful kernels. Students tackling the bridge challenge designed and built a span out of balsa wood and glue, then tested its strength by gradually adding weight until it failed. The glider challenge gave participants the tools and knowledge to build and test their aircraft designs.

One participant applauded the hands-on activities, saying, “All of them gave me a lot of experience and helped me have a better idea of what engineering field I want to go in. I love that we got to participate in challenges and not just listen to lectures—which can be boring.”

The students also worked on a weeklong sparking solutions challenge. Small teams identified a societal problem, such as a lack of clean water or limited mobility for senior citizens, then designed a solution to address it. On the last day of camp, they pitched their prototypes to a team of IEEE members that judged the projects based on their originality and feasibility. Each student on the winning team at each location was awarded the programmable Mech-5 robot.

Twenty-nine scholarships were awarded with funding from the IEEE Foundation. IEEE societies that donated to the cause were the IEEE Computational Intelligence Society, the IEEE Computer Society, the IEEE Electronics Packaging Society, the IEEE Industry Applications Society, the IEEE Oceanic Engineering Society, the IEEE Power & Energy Society, the IEEE Power Electronics Society, the IEEE Signal Processing Society, and the IEEE Solid-State Circuits Society.

-

Principles of PID Controllers

by Zurich Instruments on 29. October 2024. at 17:37

Thanks to their ability to adjust the system’s output accurately and quickly without detailed knowledge about its dynamics, PID control loops stand as a powerful and widely used tool for maintaining a stable and predictable output in a variety of applications. In this paper, we review the fundamental principles and characteristics of these control systems, providing insight into their functioning, tuning strategies, advantages, and trade-offs.

As a result of their integrated architecture, Zurich Instruments’ lock-in amplifiers allow users to make the most of all the advantages of digital PID control loops, so that their operation can be adapted to match the needs of different use cases.
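For readers new to the idea, a PID loop can be sketched in a few lines. The following is a minimal textbook discrete-time controller, not Zurich Instruments' implementation; the class name `PID`, the `simulate` helper, the gain values, and the first-order plant with its time constant `tau` are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook discrete-time PID controller (illustrative, not vendor code)."""
    kp: float  # proportional gain
    ki: float  # integral gain
    kd: float  # derivative gain
    integral: float = 0.0    # running integral of the error
    prev_error: float = 0.0  # error from the previous update

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        """Return the control output for one time step of length dt."""
        error = setpoint - measured
        self.integral += error * dt                  # accumulate past error
        derivative = (error - self.prev_error) / dt  # rate of change of error
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(steps: int = 1000, dt: float = 0.01, setpoint: float = 1.0) -> float:
    """Drive a hypothetical first-order plant dy/dt = (u - y) / tau to the setpoint."""
    tau = 0.5  # assumed plant time constant, in seconds
    pid = PID(kp=2.0, ki=4.0, kd=0.05)  # hand-picked gains, not a tuning recommendation
    y = 0.0
    for _ in range(steps):
        u = pid.update(setpoint, y, dt)
        y += dt * (u - y) / tau  # forward-Euler step of the plant model
    return y


if __name__ == "__main__":
    print(f"plant output after 10 s: {simulate():.4f}")
```

The controller never sees the plant's equation, only the measured output, which is the sense in which a PID loop works without detailed knowledge of the system's dynamics. The integral term is what removes the steady-state offset that a purely proportional controller would leave on this plant.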

-

The Unlikely Inventor of the Automatic Rice Cooker

by Allison Marsh on 29. October 2024. at 14:00

“Cover, bring to a boil, then reduce heat. Simmer for 20 minutes.” These directions seem simple enough, and yet I have messed up many, many pots of rice over the years. My sympathies to anyone who’s ever had to boil rice on a stovetop, cook it in a clay pot over a kerosene or charcoal burner, or prepare it in a cast-iron cauldron. All hail the 1955 invention of the automatic rice cooker!

How the automatic rice cooker was invented

It isn’t often that housewives get credit in the annals of invention, but in the story of the automatic rice cooker, a woman takes center stage. That happened only after the first attempts at electrifying rice cooking, starting in the 1920s, turned out to be utter failures. Matsushita, Mitsubishi, and Sony all experimented with variations of placing electric heating coils inside wooden tubs or aluminum pots, but none of these cookers automatically switched off when the rice was done. The human cook—almost always a wife or daughter—still had to pay attention to avoid burning the rice. These electric rice cookers didn’t save any real time or effort, and they sold poorly.

But Shogo Yamada, the energetic development manager of the electric appliance division for Toshiba, became convinced that his company could do better. In post–World War II Japan, he was demonstrating and selling electric washing machines all over the country. When he took a break from his sales pitch and actually talked to women about their daily household labors, he discovered that cooking rice—not laundry—was their most challenging chore. Rice was a mainstay of the Japanese diet, and women had to prepare it up to three times a day. It took hours of work, starting with getting up by 5:00 am to fan the flames of a kamado, a traditional earthenware stove fueled by charcoal or wood on which the rice pot was heated. The inability to properly mind the flame could earn a woman the label of “failed housewife.”

In 1951, Yamada became the cheerleader of the rice cooker within Toshiba, which was understandably skittish given the past failures of other companies. To develop the product, he turned to Yoshitada Minami, the manager of a small family factory that produced electric water heaters for Toshiba. The water-heater business wasn’t great, and the factory was on the brink of bankruptcy.

How Sources Influence the Telling of History

As someone who does a lot of research online, I often come across websites that tell very interesting histories, but without any citations. It takes only a little bit of digging before I find entire passages copied and pasted from one site to another, and so I spend a tremendous amount of time trying to track down the original source. Accounts of popular consumer products, such as the rice cooker, are particularly prone to this problem. That’s not to say that popular accounts are necessarily wrong; plus they are often much more engaging than boring academic pieces. This is just me offering a note of caution because every story offers a different perspective depending on its sources.

For example, many popular blogs sing the praises of Fumiko Minami and her tireless contributions to the development of the rice maker. But in my research, I found no mention of Minami before Helen Macnaughtan’s 2012 book chapter, “Building up Steam as Consumers: Women, Rice Cookers and the Consumption of Everyday Household Goods in Japan,” which itself was based on episode 42 of the Project X: Challengers documentary series that was produced by NHK and aired in 2002.

If instead I had relied solely on the description of the rice cooker’s early development provided by the Toshiba Science Museum (here’s an archived page from 2007), this month’s column would have offered a detailed technical description of how uncooked rice has a crystalline structure, but as it cooks, it becomes a gelatinized starch. The museum’s website notes that few engineers had ever considered the nature of cooking rice before the rice-cooker project, and it refers simply to the “project team” that discovered the process. There’s no mention of Fumiko.

Both stories are factually correct, but they emphasize different details. Sometimes it’s worth asking who is part of the “project team” because the answer might surprise you. —A.M.

Although Minami understood the basic technical principles for an electric rice cooker, he didn’t know or appreciate the finer details of preparing perfect rice. And so Minami turned to his wife, Fumiko.

Fumiko, the mother of six children, spent five years researching and testing to document the ideal recipe. She continued to make rice three times a day, carefully measuring water-to-rice ratios, noting temperatures and timings, and prototyping rice-cooker designs. Conventional wisdom was that the heat source needed to be adjusted continuously to guarantee fluffy rice, but Fumiko found that heating the water and rice to a boil and then cooking for exactly 20 minutes produced consistently good results.

But how would an automatic rice cooker know when the 20 minutes was up? A suggestion came from Toshiba engineers. A working model based on a double boiler (a pot within a pot for indirect heating) used evaporation to mark time. While the rice cooked in the inset pot, a bimetallic switch measured the temperature in the external pot. Boiling water would hold at a constant 100 °C, but once it had evaporated, the temperature would soar. When the internal temperature of the double boiler surpassed 100 °C, the switch would bend and cut the circuit. One cup of boiling water in the external pot took 20 minutes to evaporate. The same basic principle is still used in modern cookers.
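The evaporation timer described above can be sketched as a small simulation. This is only an illustrative model, not Toshiba's design: the 200 g mass for "one cup" of water, the 105 °C cutoff threshold, the heat capacity of the dry pot, and all function names are assumptions made for the example. It shows the two ideas in the paragraph: the temperature is pinned at 100 °C while water remains, and the cooking time is set by how much water is in the outer pot.

```python
from dataclasses import dataclass

LATENT_HEAT_J_PER_G = 2257.0  # approximate latent heat of vaporization of water
BOIL_C = 100.0                # boiling point at sea level


@dataclass
class OuterPot:
    water_g: float                            # water left in the outer pot
    temp_c: float = BOIL_C                    # assume the water is already boiling
    dry_heat_capacity_j_per_c: float = 500.0  # hypothetical value for the empty pot


def heater_power_w(water_g, minutes):
    """Power needed to boil away water_g grams in the given number of minutes."""
    return water_g * LATENT_HEAT_J_PER_G / (minutes * 60.0)


def step(pot, heater_w, dt_s):
    """Advance the pot by dt_s seconds of heating."""
    energy_j = heater_w * dt_s
    if pot.water_g > 0:
        # While water remains, heat goes into evaporation and the temperature holds.
        boiled_g = min(pot.water_g, energy_j / LATENT_HEAT_J_PER_G)
        pot.water_g -= boiled_g
        energy_j -= boiled_g * LATENT_HEAT_J_PER_G
        pot.temp_c = BOIL_C
    if pot.water_g <= 0 and energy_j > 0:
        # Once the pot is dry, the same heat raises the temperature quickly.
        pot.temp_c += energy_j / pot.dry_heat_capacity_j_per_c


def seconds_until_cutoff(water_g, heater_w, cutoff_c=105.0, dt_s=1.0):
    """Time until the (assumed) bimetallic switch trips above cutoff_c."""
    if heater_w <= 0:
        raise ValueError("heater_w must be positive")
    pot = OuterPot(water_g=water_g)
    elapsed_s = 0.0
    while pot.temp_c <= cutoff_c:
        step(pot, heater_w, dt_s)
        elapsed_s += dt_s
    return elapsed_s


power = heater_power_w(200.0, 20.0)
print(round(power))                               # about 376 W under these assumptions
print(seconds_until_cutoff(200.0, power) / 60.0)  # a little over 20 minutes
```

In this toy model, halving the water in the outer pot roughly halves the time before the switch trips, which is why the amount of water works as the timer setting.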

Yamada wanted to ensure that the rice cooker worked in all climates, so Fumiko tested various prototypes in extreme conditions: on her rooftop in cold winters and scorching summers and near steamy bathrooms to mimic high humidity. When Fumiko became ill from testing outside, her children pitched in to help. None of the aluminum and glass prototypes, it turned out, could maintain their internal temperature in cold weather. The final design drew inspiration from the Hokkaidō region, Japan’s northernmost prefecture. Yamada had seen insulated cooking pots there, so the Minami family tried covering the rice cooker with a triple-layered iron exterior. It worked.

How Toshiba sold its automatic rice cooker

Toshiba’s automatic rice cooker went on sale on 10 December 1955, but initially, sales were slow. It didn’t help that the rice cooker was priced at 3,200 yen, about a third of the average Japanese monthly salary. It took some salesmanship to convince women they needed the new appliance. This was Yamada’s time to shine. He demonstrated using the rice cooker to prepare takikomi gohan, a rice dish seasoned with dashi, soy sauce, and a selection of meats and vegetables. When the dish was cooked in a traditional kamado, the soy sauce often burned, making the rather simple dish difficult to master. Women who saw Yamada’s demo were impressed with the ease offered by the rice cooker.

Another clever sales technique was to get electricity companies to serve as Toshiba distributors. At the time, Japan was facing a national power surplus stemming from the widespread replacement of carbon-filament lightbulbs with more efficient tungsten ones. The energy savings were so remarkable that operations at half of the country’s power plants had to be curtailed. But with utilities distributing Toshiba rice cookers, increased demand for electricity was baked in.

Within a year, Toshiba was selling more than 200,000 rice cookers a month. Many of them came from the Minamis’ factory, which was rescued from near-bankruptcy in the process.

How the automatic rice cooker conquered the world

From there, the story becomes an international one with complex localization issues. Japanese sushi rice is not the same as Thai sticky rice, which is not the same as Persian tahdig, Indian basmati, Italian risotto, or Spanish paella. You see where I’m going with this. Nearly every culture with a signature rice dish uses its own regional rice and has its own preparation preferences. And so countries wanted their own type of automatic electric rice cooker (although some rejected automation in favor of traditional cooking methods).

Yoshiko Nakano, a professor at the University of Hong Kong, wrote a book in 2009 about the localized/globalized nature of rice cookers. Where There Are Asians, There Are Rice Cookers traces the popularization of the rice cooker from Japan to China and then the world by way of Hong Kong. One of the key differences between Japanese and Chinese rice cookers is that the latter have a glass lid, which Chinese cooks demanded so they could see when to add sausage. More innovation and diversification followed. Modern rice cookers have settings that give Iranians crispy rice at the bottom of the pot, let Thai customers cook noodles, make perfect rice porridge, and cook steel-cut oats.

My friend Hyungsub Choi, in his 2022 article “Before Localization: The Story of the Electric Rice Cooker in South Korea,” pushes back a bit on Nakano’s argument that countries were insistent on tailoring cookers to their tastes. From 1965, when the first domestic rice cooker appeared in South Korea, to the early 1990s, Korean manufacturers engaged in “conscious copying,” Choi argues. That is, they didn’t bother with either innovation or adaptation. As a result, most Koreans had to put up with inferior domestic models. Even after the Korean government made it a national goal to build a better rice cooker, manufacturers failed to deliver one, perhaps because none of the engineers involved knew how to cook rice. It’s a good reminder that the history of technology is not always the story of innovation and progress.

Eventually, the Asian diaspora brought the rice cooker to all parts of the globe, including South Carolina, where I now live and which coincidentally has a long history of rice cultivation. I bought my first rice cooker on a whim, but not for its rice-cooking ability. I was intrigued by the yogurt-making function. Similar to rice, yogurt requires a constant temperature over a specific length of time. Although successful, my yogurt experiment was fleeting—store-bought was just too convenient. But the rice cooking blew my mind. Perfect rice. Every. Single. Time. I am never going back to overflowing pots of starchy water.

Part of a continuing series looking at historical artifacts that embrace the boundless potential of technology.

An abridged version of this article appears in the November 2024 print issue as “The Automatic Rice Cooker’s Unlikely Inventor.”

References

Helen Macnaughtan’s 2012 book chapter, “Building up Steam as Consumers: Women, Rice Cookers and the Consumption of Everyday Household Goods in Japan,” was a great resource in understanding the development of the Toshiba ER-4. The chapter appeared in The Historical Consumer: Consumption and Everyday Life in Japan, 1850-2000, edited by Penelope Francks and Janet Hunter (Palgrave Macmillan).

Yoshiko Nakano’s book Where There Are Asians, There Are Rice Cookers (Hong Kong University Press, 2009) takes the story much further, focusing on the National (Panasonic) rice cooker and its adaptation and adoption around the world.

The Toshiba Science Museum, in Kawasaki, Japan, where we sourced our main image of the original ER-4, closed to the public in June. I do not know what the future holds for its collections, but luckily some of its Web pages have been archived to continue to help researchers like me.

-

Multiband Antenna Simulation and Wireless KPI Extraction

by Ansys on 29. October 2024. at 13:07

In this upcoming webinar, explore how to leverage the state-of-the-art high-frequency simulation capabilities of Ansys HFSS to innovate and develop advanced multiband antenna systems.

Overview

This webinar will explore how to leverage the state-of-the-art high-frequency simulation capabilities of Ansys HFSS to innovate and develop advanced multiband antenna systems. Attendees will learn how to optimize antenna performance and analyze installed performance within wireless networks. The session will also demonstrate how this approach enables users to extract valuable wireless and network KPIs, providing a comprehensive toolset for enhancing antenna design, optimizing multiband communication, and improving overall network performance. Join us to discover how Ansys HFSS can transform your approach to wireless system design and network efficiency.

What Attendees Will Learn

- How to design interleaved multiband antenna systems using the latest capabilities in HFSS

- How to extract Network Key Performance Indicators

- How to run simulations and extract RF channels for dynamic environments

Who Should Attend

This webinar is valuable to anyone involved in antenna design, R&D, product design, or wireless networks.

-

For this Stanford Engineer, Frugal Invention Is a Calling

by Greg Uyeno on 29. October 2024. at 13:00

Manu Prakash spoke with IEEE Spectrum shortly after returning to Stanford University from a month aboard a research vessel off the coast of California, where he was testing tools to monitor oceanic carbon sequestration. The associate professor conducts fieldwork around the world to better understand the problems he’s working on, as well as the communities that will be using his inventions.

Prakash develops imaging instruments and diagnostic tools, often for use in global health and environmental sciences. His devices typically cost radically less than conventional equipment—he aims for reductions of two or more orders of magnitude. Whether he’s working on pocketable microscopes, mosquito or plankton monitors, or an autonomous malaria diagnostic platform, Prakash always includes cost and access as key aspects of his engineering. He calls this philosophy “frugal science.”

Why should we think about science frugally?

Manu Prakash: To me, when we are trying to ask and solve problems and puzzles, it becomes important: In whose hands are we putting these solutions? A frugal approach to solving the problem is the difference between 1 percent of the population or billions of people having access to that solution.

Lack of access creates these kinds of barriers in people’s minds, where they think they can or cannot approach a kind of problem. It’s important that we as scientists or just citizens of this world create an environment that feels that anybody has a chance to make important inventions and discoveries if they put their heart to it. The entrance to all that is dependent on tools, but those tools are just inaccessible.

How did you first encounter the idea of “frugal science”?

Prakash: I grew up in India and lived with very little access to things. And I got my Ph.D. at MIT. I was thinking about this stark difference in worlds that I had seen and lived in, so when I started my lab, it was almost a commitment to [asking]: What does it mean when we make access one of the critical dimensions of exploration? So, I think a lot of the work I do is primarily driven by curiosity, but access brings another layer of intellectual curiosity.

How do you identify a problem that might benefit from frugal science?

Prakash: Frankly, it’s hard to find a problem that would not benefit from access. The question to ask is “Where are the neglected problems that we as a society have failed to tackle?” We do a lot of work in diagnostics. A lot [of our solutions] beat the conventional methods that are neither cost effective nor any good. It’s not about cutting corners; it’s about deeply understanding the problem—better solutions at a fraction of the cost. It does require invention. For that order of magnitude change, you really have to start fresh.

Where does your involvement with an invention end?

Prakash: Inventions are part of our soul. Your involvement never ends. I just designed the 415th version of Foldscope [a low-cost “origami” microscope]. People only know it as version 3. We created Foldscope a long time ago; then I realized that nobody was going to provide access to it. So we went back and invented the manufacturing process for Foldscope to scale it. We made the first 100,000 Foldscopes in the lab, which led to millions of Foldscopes being deployed.

So it’s continuous. If people are scared of this, they should never invent anything [laughs], because once you invent something, it’s a lifelong project. You don’t put it aside; the project doesn’t put you aside. You can try to, but that’s not really possible if your heart is in it. You always see problems. Nothing is ever perfect. That can be ever consuming. It’s hard. I don’t want to minimize this process in any way or form.

-

The Patent Battle That Won’t Quit

by Harry Goldstein on 28. October 2024. at 21:00