IEEE Spectrum

-

The Forgotten History of Chinese Keyboards

by Thomas S. Mullaney on 28 May 2024 at 19:00

Today, typing in Chinese works by converting QWERTY keystrokes into Chinese characters via a software interface, known as an input method editor. But this was not always the case. Thomas S. Mullaney’s new book, The Chinese Computer: A Global History of the Information Age, published by the MIT Press, unearths the forgotten history of Chinese input in the 20th century. In this article, which was adapted from an excerpt of the book, he details the varied Chinese input systems of the 1960s and ’70s that renounced QWERTY altogether.

“This will destroy China forever,” a young Taiwanese cadet thought as he sat in rapt attention. The renowned historian Arnold J. Toynbee was on stage, delivering a lecture at Washington and Lee University on “A Changing World in Light of History.” The talk plowed the professor’s favorite field of inquiry: the genesis, growth, death, and disintegration of human civilizations, immortalized in his magnum opus A Study of History. Tonight’s talk threw the spotlight on China.

China was Toynbee’s outlier: Ancient as Egypt, it was a civilization that had survived the ravages of time. The secret to China’s continuity, he argued, was character-based Chinese script. Character-based script served as a unifying medium, placing guardrails against centrifugal forces that might otherwise have ripped this grand and diverse civilization apart. This millennial integrity was now under threat. Indeed, as Toynbee spoke, the government in Beijing was busily deploying Hanyu pinyin, a Latin alphabet–based Romanization system.

The Taiwanese cadet listening to Toynbee was Chan-hui Yeh, a student of electrical engineering at the nearby Virginia Military Institute (VMI). That evening with Arnold Toynbee forever altered the trajectory of his life. It changed the trajectory of Chinese computing as well, triggering a cascade of events that later led to the formation of arguably the first successful Chinese IT company in history: Ideographix, founded by Yeh 14 years after Toynbee stepped offstage.

During the late 1960s and early 1970s, Chinese computing underwent multiple sea changes. No longer limited to small-scale laboratories and solo inventors, the challenge of Chinese computing was taken up by engineers, linguists, and entrepreneurs across Asia, the United States, and Europe—including Yeh’s adoptive home of Silicon Valley.

Chan-hui Yeh’s IPX keyboard featured 160 main keys, with 15 characters each. A peripheral keyboard of 15 keys was used to select the character on each key. Separate “shift” keys were used to change all of the character assignments of the 160 keys. Computer History Museum

The design of Chinese computers also changed dramatically. None of the competing designs that emerged in this era employed a QWERTY-style keyboard. Instead, one of the most successful and celebrated systems—the IPX, designed by Yeh—featured an interface with 120 levels of “shift,” packing nearly 20,000 Chinese characters and other symbols into a space only slightly larger than a QWERTY interface. Other systems featured keyboards with anywhere from 256 to 2,000 keys. Still others dispensed with keyboards altogether, employing a stylus and touch-sensitive tablet, or a grid of Chinese characters wrapped around a rotating cylindrical interface. It’s as if every kind of interface imaginable was being explored except QWERTY-style keyboards.

IPX: Yeh’s 120-dimensional hypershift Chinese keyboard

Yeh graduated from VMI in 1960 with a B.S. in electrical engineering. He went on to Cornell University, receiving his M.S. in nuclear engineering in 1963 and his Ph.D. in electrical engineering in 1965. Yeh then joined IBM, not to develop Chinese text technologies but to draw upon his background in automatic control to help develop computational simulations for large-scale manufacturing plants, like paper mills, petrochemical refineries, steel mills, and sugar mills. He was stationed in IBM’s relatively new offices in San Jose, Calif.

Toynbee’s lecture stuck with Yeh, though. While working at IBM, he spent his spare time exploring the electronic processing of Chinese characters. He felt convinced that the digitization of Chinese must be possible, that Chinese writing could be brought into the computational age. Doing so, he felt, would safeguard Chinese script against those like Chairman Mao Zedong, who seemed to equate Chinese modernization with the Romanization of Chinese script. The belief was so powerful that Yeh eventually quit his good-paying job at IBM to try and save Chinese through the power of computing.

Yeh started with the most complex parts of the Chinese lexicon and worked back from there. He fixated on one character in particular: ying 鷹 (“eagle”), an elaborate graph that requires 24 brushstrokes to compose. If he could determine an appropriate data structure for such a complex character, he reasoned, he would be well on his way. Through careful analysis, he determined that a bitmap comprising 24 vertical dots and 20 horizontal dots would do the trick, taking up 60 bytes of memory, excluding metadata. By 1968, Yeh felt confident enough to take the next big step—to patent his project, nicknamed “Iron Eagle.” The Iron Eagle project quickly garnered the interest of the Taiwanese military. Four years later, with the promise of Taiwanese government funding, Yeh founded Ideographix, in Sunnyvale, Calif.
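The 60-byte figure follows directly from the grid size: 24 × 20 = 480 dots, at one bit per dot, is 480 / 8 = 60 bytes. The short Python sketch below checks that arithmetic. The row-major, most-significant-bit-first packing and the function names are assumptions made for illustration; the article does not describe how Yeh actually laid out the bits in memory.

```python
ROWS = 24  # vertical dots, per the article
COLS = 20  # horizontal dots, per the article


def bitmap_bytes(rows: int = ROWS, cols: int = COLS) -> int:
    """Bytes needed for a 1-bit-per-dot bitmap, rounded up to whole bytes."""
    return (rows * cols + 7) // 8


def pack_bitmap(grid) -> bytes:
    """Pack a grid of 0/1 dots into bytes.

    Assumed layout (not from the source): row-major order,
    most significant bit first within each byte.
    """
    bits = [dot for row in grid for dot in row]
    out = bytearray((len(bits) + 7) // 8)
    for i, bit in enumerate(bits):
        if bit:
            out[i // 8] |= 0x80 >> (i % 8)
    return bytes(out)


blank = [[0] * COLS for _ in range(ROWS)]
print(bitmap_bytes())            # 60
print(len(pack_bitmap(blank)))   # 60
```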

A single key of the IPX keyboard contained 15 characters. This key contains the character zhong (中 “central”), which is necessary to spell “China.” MIT Press

The flagship product of Ideographix was the IPX, a computational typesetting and transmission system for Chinese built upon the complex orchestration of multiple subsystems.

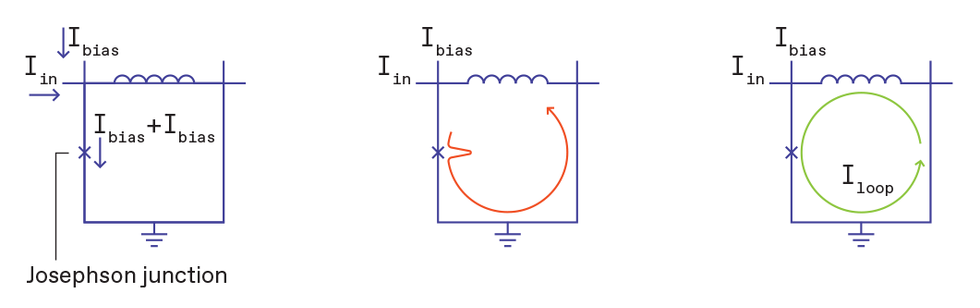

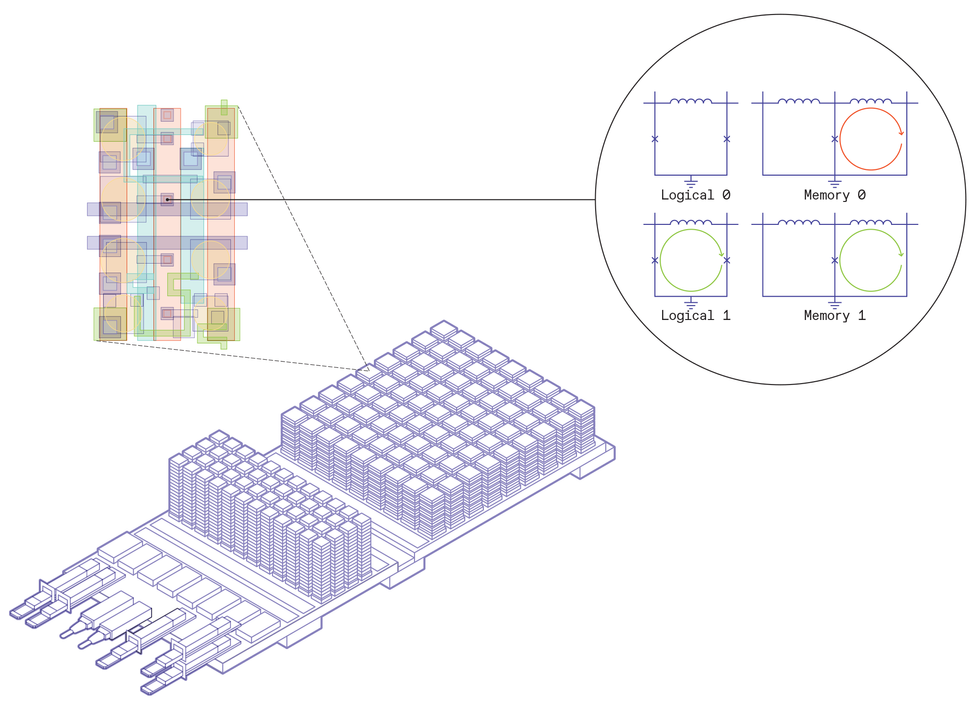

The marvel of the IPX system was the keyboard subsystem, which enabled operators to enter a theoretical maximum of 19,200 Chinese characters despite its modest size: 59 centimeters wide, 37 cm deep, and 11 cm tall. To achieve this remarkable feat, Yeh and his colleagues decided to treat the keyboard not merely as an electronic peripheral but as a full-fledged computer unto itself: a microprocessor-controlled “intelligent terminal” completely unlike conventional QWERTY-style devices.

Seated in front of the IPX interface, the operator looked down on 160 keys arranged in a 16-by-10 grid. Each key contained not a single Chinese character but a cluster of 15 characters arranged in a miniature 3-by-5 array. Those 160 keys with 15 characters on each key yielded 2,400 Chinese characters.

Typing on the IPX keyboard involved pressing on a booklet of characters to depress one of 160 keys, selecting one of 15 numbers to pick a character within the key, and using separate “shift” keys to indicate when a page of the booklet was flipped. MIT Press

Chinese characters were not printed on the keys, the way that letters and numbers are emblazoned on the keys of QWERTY devices. The 160 keys themselves were blank. Instead, the 2,400 Chinese characters were printed on laminated paper, bound together in a spiral-bound booklet that the operator laid down flat atop the IPX interface.

The IPX keys weren’t buttons, as on a QWERTY device, but pressure-sensitive pads. An operator would push down on the spiral-bound booklet to depress whichever key pad was directly underneath.

To reach characters 2,401 through 19,200, the operator simply turned the spiral-bound booklet to whichever page contained the desired character. The booklets contained up to eight pages—and each page contained 2,400 characters—so the total number of potential symbols came to just shy of 20,000.
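The capacity arithmetic can be made concrete with a small Python sketch. It treats each character as a triple of booklet page, key pad, and position within the key's 3-by-5 cluster. The ordering of those three fields and the name `ipx_index` are assumptions for illustration only; the article does not give the IPX's internal encoding. Reading the 120 “shift” levels as 8 pages times 15 positions is likewise one plausible interpretation of the numbers, not something the source states outright.

```python
KEYS = 160     # 16-by-10 grid of pressure-sensitive pads
PER_KEY = 15   # 3-by-5 cluster of characters on each key
PAGES = 8      # maximum number of booklet pages


def ipx_index(page: int, key: int, slot: int) -> int:
    """Map zero-based (page, key, slot) to a character index in 0..19199.

    The field ordering here is hypothetical, chosen only to show
    that the three selections together identify one character.
    """
    if not (0 <= page < PAGES and 0 <= key < KEYS and 0 <= slot < PER_KEY):
        raise ValueError("selection out of range")
    return (page * KEYS + key) * PER_KEY + slot


capacity = PAGES * KEYS * PER_KEY   # characters reachable in total
shift_levels = PAGES * PER_KEY      # selections available per key pad

print(capacity)                 # 19200
print(shift_levels)             # 120
print(ipx_index(7, 159, 14))    # 19199
```

Under this reading, each of the 160 pads carries 120 possible meanings, and 160 × 120 gives the same 19,200 total.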

For the first seven years of its existence, the use of IPX was limited to the Taiwanese military. As years passed, the exclusivity relaxed, and Yeh began to seek out customers in both the private and public sectors. Yeh’s first major nonmilitary clients included Taiwan’s telecommunication administration and the National Taxation Bureau of Taipei. For the former, the IPX helped process and transmit millions of phone bills. For the latter, it enabled the production of tax return documents at unprecedented speed and scale. But the IPX wasn’t the only game in town.

Loh Shiu-chang, a professor at the Chinese University of Hong Kong, developed what he called “Loh’s keyboard” (Le shi jianpan 樂氏鍵盤), featuring 256 keys. Loh Shiu-chang

Mainland China’s “medium-sized” keyboards

By the mid-1970s, the People’s Republic of China was far more advanced in the arena of mainframe computing than most outsiders realized. In July 1972, just months after the famed tour by U.S. president Richard Nixon, a veritable blue-ribbon committee of prominent American computer scientists visited the PRC. The delegation visited China’s main centers of computer science at the time, and upon learning what their counterparts had been up to during the many years of Sino-American estrangement, the delegation was stunned.

But there was one key arena of computing that the delegation did not bear witness to: the computational processing of Chinese characters. It was not until October 1974 that mainland Chinese engineers began to dive seriously into this problem. Soon after, in 1975, the newly formed Chinese Character Information Processing Technology Research Office at Peking University set out upon the goal of creating a “Chinese Character Information Processing and Input System” and a “Chinese Character Keyboard.”

The group evaluated more than 10 proposals for Chinese keyboard designs. The designs fell into three general categories: a large-keyboard approach, with one key for every commonly used character; a small-keyboard approach, like the QWERTY-style keyboard; and a medium-size keyboard approach, which attempted to tread a path between these two poles.

Peking University’s medium-sized keyboard design included a combination of Chinese characters and character components, as shown in this explanatory diagram. Public Domain

The team leveled two major criticisms against QWERTY-style small keyboards. First, there were just too few keys, which meant that many Chinese characters were assigned identical input sequences. What’s more, QWERTY keyboards did a poor job of using keys to their full potential. For the most part, each key on a QWERTY keyboard was assigned only two symbols, one of which required the operator to depress and hold the shift key to access. A better approach, they argued, was the technique of “one key, many uses” (yijian duoyong): assigning each key a larger number of symbols to make the most of interface real estate.

The team also examined the large-keyboard approach, in which 2,000 or more commonly used Chinese characters were assigned to a tabletop-size interface. Several teams across China worked on various versions of these large keyboards. The Peking team, however, regarded the large-keyboard approach as excessive and unwieldy. Their goal was to exploit each key to its maximum potential, while keeping the number of keys to a minimum.

After years of work, the team in Beijing settled upon a keyboard with 256 keys, 29 of which would be dedicated to various functions, such as carriage return and spacing, and the remaining 227 used to input text. Each keystroke generated an 8-bit code, stored on punched paper tape (hence the choice of 256, or 2^8, keys). These 8-bit codes were then translated into a 14-bit internal code, which the computer used to retrieve the desired character.

In their assignment of multiple characters to individual keys, the team’s design was reminiscent of Ideographix’s IPX machine. But there was a twist. Instead of assigning only full-bodied, stand-alone Chinese characters to each key, the team assigned a mixture of both Chinese characters and character components. Specifically, each key was associated with up to four symbols, divided among three varieties:

- full-body Chinese characters (limited to no more than two per key)

- partial Chinese character components (no more than three per key)

- the uppercase symbol, reserved for switching to other languages (limited to one per key)

In all, the keyboard contained 423 full-body Chinese characters and 264 character components. When arranging these 264 character components on the keyboard, the team hit upon an elegant and ingenious way to help operators remember the location of each: They treated the keyboard as if it were a Chinese character itself. The team placed each of the 264 character components in the regions of the keyboard that corresponded to the areas where they usually appeared in Chinese characters.

In its final design, the Peking University keyboard was capable of inputting a total of 7,282 Chinese characters, which in the team’s estimation would account for more than 90 percent of all characters encountered on an average day. Within this character set, the 423 most common characters could be produced via one keystroke; 2,930 characters could be produced using two keystrokes; and a further 3,106 characters could be produced using three keystrokes. The remaining 823 characters required four or five keystrokes.
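The keystroke breakdown can be checked with a few lines of Python. The sketch below confirms that the four groups add up to 7,282 and estimates the mean number of keystrokes per character. Two caveats apply: the average is unweighted, treating every character as equally likely, which the article does not claim, and because the last group is given only as “four or five keystrokes,” the result is a range rather than a single number. The variable names are illustrative.

```python
# Characters reachable at each keystroke count, per the article.
one_stroke = 423
two_strokes = 2930
three_strokes = 3106
four_or_five = 823   # the article does not split this group further

total = one_stroke + two_strokes + three_strokes + four_or_five
print(total)  # 7282

# Keystrokes needed to type every character once, excluding the last group.
base = one_stroke * 1 + two_strokes * 2 + three_strokes * 3

# Bounds: assume the last group needs 4 keystrokes (low) or 5 (high).
low_avg = (base + four_or_five * 4) / total
high_avg = (base + four_or_five * 5) / total
print(f"unweighted mean keystrokes: {low_avg:.2f} to {high_avg:.2f}")
```

Weighting by real character frequency would pull the average well below this range, since the 423 single-keystroke characters were chosen as the most common ones.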

The Peking University keyboard was just one of many medium-size designs of the era. IBM created its own 256-key keyboard for Chinese and Japanese. In a design reminiscent of the IPX system, this 1970s-era keyboard included a 12-digit keypad with which the operator could “shift” between the 12 full-body Chinese characters outfitted on each key (for a total of 3,072 characters in all). In 1980, Chinese University of Hong Kong professor Loh Shiu-chang developed what he called “Loh’s keyboard” (Le shi jianpan 樂氏鍵盤), which also featured 256 keys.

But perhaps the strangest Chinese keyboard of the era was designed in England.

The cylindrical Chinese keyboard

On a winter day in 1976, a young boy in Cambridge, England, searched for his beloved Meccano set. A predecessor of the American Erector set, the popular British toy offered aspiring engineers hours of modular possibility. Andrew had played with the gears, axles, and metal plates recently, but today they were nowhere to be found.

Wandering into the kitchen, he caught the thief red-handed: his father, the Cambridge University researcher Robert Sloss. For three straight days and nights, Sloss had commandeered his son’s toy, engrossed in the creation of a peculiar gadget that was cylindrical and rotating. It riveted the young boy’s attention—and then the attention of the Telegraph-Herald, which dispatched a journalist to see it firsthand. Ultimately, it attracted the attention and financial backing of the U.K. telecommunications giant Cable & Wireless.

Robert Sloss was building a Chinese computer.

The elder Sloss was born in 1927 in Scotland. He joined the British navy, and was subjected to a series of intelligence tests that revealed a proclivity for foreign languages. In 1946 and 1947, he was stationed in Hong Kong. Sloss went on to join the civil service as a teacher and later, in the British air force, became a noncommissioned officer. Owing to his pedagogical experience, his knack for language, and his background in Asia, he was invited to teach Chinese at Cambridge and appointed to a lectureship in 1972.

At Cambridge, Sloss met Peter Nancarrow. Twelve years Sloss’s junior, Nancarrow trained as a physicist but later found work as a patent agent. The bearded 38-year-old then taught himself Norwegian and Russian as a “hobby” before joining forces with Sloss in a quest to build an automatic Chinese-English translation machine.

In 1976, Robert Sloss and Peter Nancarrow designed the Ideo-Matic Encoder, a Chinese input keyboard with a grid of 4,356 keys wrapped around a cylinder. PK Porthcurno

They quickly found that the choke point in their translator design was character input: namely, how to get handwritten Chinese characters, definitions, and syntax data into a computer.

Over the following two years, Sloss and Nancarrow dedicated their energy to designing a Chinese computer interface. It was this effort that led Sloss to steal and tinker with his son’s Meccano set. Sloss’s tinkering soon bore fruit: a working prototype that the duo called the “Binary Signal Generator for Encoding Chinese Characters into Machine-compatible form”—also known as the Ideo-Matic Encoder and the Ideo-Matic 66 (named after the machine’s 66-by-66 grid of characters).

Each cell in the machine’s grid was assigned a binary code corresponding to the X-column and the Y-row values. In terms of total space, each cell was 7 millimeters squared, with 3,500 of the 4,356 cells dedicated to Chinese characters. The rest were assigned to Japanese syllables or left blank.

The distinguishing feature of Sloss and Nancarrow’s interface was not the grid, however. Rather than arranging their 4,356 cells across a rectangular interface, the pair decided to wrap the grid around a rotating, tubular structure. The typist used one hand to rotate the cylindrical grid and the other hand to move a cursor left and right to indicate one of the 4,356 cells. The depression of a button produced a binary signal that corresponded to the selected Chinese character or other symbol.
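A grid-coordinate code of this kind is easy to sketch in Python. The article says only that each cell's binary code corresponded to its X-column and Y-row values; the 7-bit width per coordinate, the column-first ordering, and the function names below are assumptions for illustration. Seven bits is simply the smallest width that can count to 66.

```python
GRID = 66                         # 66-by-66 grid, per the article
BITS = (GRID - 1).bit_length()    # 7 bits cover coordinates 0..65 (assumed width)


def encode_cell(col: int, row: int) -> int:
    """Combine zero-based column and row into one binary code.

    Assumed layout (not from the source): column in the high bits,
    row in the low bits.
    """
    if not (0 <= col < GRID and 0 <= row < GRID):
        raise ValueError("cell outside the grid")
    return (col << BITS) | row


def decode_cell(code: int) -> tuple:
    """Recover (col, row) from a code produced by encode_cell."""
    return code >> BITS, code & ((1 << BITS) - 1)


print(GRID * GRID)                           # 4356
print(format(encode_cell(65, 65), "014b"))   # 10000011000001
print(decode_cell(encode_cell(12, 40)))      # (12, 40)
```

Under these assumptions, every one of the 4,356 cells fits in a 14-bit code, with the cylinder's rotation supplying one coordinate and the cursor position the other.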

The Ideo-Matic Encoder was completed and delivered to Cable & Wireless in the closing years of the 1970s. Weighing in at 7 kilograms and measuring 68 cm wide, 57 cm deep, and 23 cm tall, the machine garnered industry and media attention. Cable & Wireless purchased rights to the machine in hopes of mass-manufacturing it for the East Asian market.

QWERTY’s comeback

The IPX, the Ideo-Matic 66, Peking University’s medium-size keyboards, and indeed all of the other custom-built devices discussed here would soon meet exactly the same fate—oblivion. There were changes afoot. The era of custom-designed Chinese text-processing systems was coming to an end. A new era was taking shape, one that major corporations, entrepreneurs, and inventors were largely unprepared for. This new age has come to be known by many names: the software revolution, the personal-computing revolution, and less rosily, the death of hardware.

From the late 1970s onward, custom-built Chinese interfaces steadily disappeared from marketplaces and laboratories alike, displaced by wave upon wave of Western-built personal computers crashing on the shores of the PRC. With those computers came the resurgence of QWERTY for Chinese input, along the same lines as the systems used by Sinophone computer users today—ones mediated by a software layer to transform the Latin alphabet into Chinese characters. This switch to typing mediated by an input method editor, or IME, did not lead to the downfall of Chinese civilization, as the historian Arnold Toynbee may have predicted. However, it did fundamentally change the way Chinese speakers interact with the digital world and their own language.

This article appears in the June 2024 print issue.

-

Physics Nobel Laureate Herbert Kroemer Dies at 95

by Amanda Davis on 28 May 2024 at 18:00

Herbert Kroemer

Nobel Laureate

Life Fellow, 95; died 8 March

Kroemer, a pioneering physicist, received the 2000 Nobel Prize in Physics for developing semiconductor heterostructures used in high-speed electronics and optoelectronics. The devices laid the foundation for the modern era of microchips, computers, and information technology. Heterostructures are interfaces between two different semiconductors, and they serve as the building blocks for more elaborate nanostructures.

He also received the 2002 IEEE Medal of Honor for “contributions to high-frequency transistors and hot-electron devices, especially heterostructure devices from heterostructure bipolar transistors to lasers, and their molecular beam epitaxy technology.”

Kroemer was professor emeritus of electrical and computer engineering at the University of California, Santa Barbara, when he died.

He began his career in 1952 at the telecommunications research laboratory of the German Postal Service, in Darmstadt. The postal service also ran the telephone system and had a small semiconductor research group, which included Kroemer and about nine other scientists, according to IEEE Spectrum.

In the mid-1950s, he took a research position at RCA Laboratories, in Princeton, N.J. There, Kroemer originated the concept of the heterostructure bipolar transistor (HBT), a device that contains differing semiconductor materials for the emitter and base regions, creating a heterojunction. HBTs can handle high-frequency signals (up to several thousand gigahertz) and are commonly used in radio frequency systems, including RF power amplifiers in cell phones.

In 1957, he returned to Germany to research potential uses of gallium arsenide at Philips Research Laboratory, in Hamburg. Two years later, Kroemer moved back to the United States to join Varian Associates, an electronics company in Palo Alto, Calif., where he invented the double heterostructure laser. It was the first laser to operate continuously at room temperature. The innovation paved the way for semiconductor lasers used in CD players, fiber optics, and other applications.

In 1964, Kroemer became the first researcher to publish an explanation of the Gunn Effect, a high-frequency oscillation of electrical current flowing through certain semiconducting solids. The effect, first observed by J.B. Gunn in the early 1960s, produces short radio waves called microwaves.

Kroemer taught electrical engineering at the University of Colorado, Boulder, from 1968 to 1976 before joining UCSB, where he led the university’s semiconductor research program. With his colleague Charles Kittel, Kroemer co-authored the 1980 textbook Thermal Physics. He also wrote Quantum Mechanics for Engineering, Materials Science, and Applied Physics, published in 1994.

He was a Fellow of the American Physical Society and a foreign associate of the U.S. National Academy of Engineering.

Born and educated in Germany, Kroemer received a bachelor’s degree from the University of Jena, and master’s and doctoral degrees from the University of Göttingen, all in physics.

Vladimir G. “Walt” Gelnovatch

Past president of the IEEE Microwave Theory and Technology Society

Life Fellow, 86; died 1 March

Gelnovatch served as 1989 president of the IEEE Microwave Theory and Technology Society (formerly the IEEE Microwave Theory and Techniques Society). He was an electrical engineer for nearly 40 years at the Signal Corps Laboratories, in Fort Monmouth, N.J.

Gelnovatch served in the U.S. Army from 1956 to 1959. While stationed in Germany, he helped develop a long-line microwave radiotelephone network, a military telecommunications network that spanned most of Western Europe.

As an undergraduate student at Monmouth University, in West Long Branch, N.J., he founded the school’s first student chapter of the Institute of Radio Engineers, an IEEE predecessor society. After graduating with a bachelor’s degree in electronics engineering, Gelnovatch earned a master’s degree in electrical engineering in 1967 from New York University, in New York City.

Following a brief stint as a professor of electrical engineering at the University of Virginia, in Charlottesville, Gelnovatch joined the Signal Corps Engineering Laboratory (SCEL) as a research engineer. His initial work focused on developing CAD programs to help researchers design microwave circuits and communications networks. He then shifted his focus to developing mission electronics. Over the next four years, he studied vacuum technology, germanium, silicon, and semiconductors.

He also spearheaded the U.S. Army’s research on monolithic microwave-integrated circuits. The integrated circuit devices operate at microwave frequencies and typically perform functions such as power amplification, low-noise amplification, and high-frequency switching.

Gelnovatch retired in 1997 as director of the U.S. Army Electron Devices and Technology Laboratory, the successor to SCEL.

During his career, Gelnovatch published 50 research papers and was granted eight U.S. patents. He also served as associate editor and contributor to the Microwave Journal for more than 20 years.

Gelnovatch received the 1997 IEEE MTT-S Distinguished Service Award. The U.S. Army also honored him in 1990 with its highest civilian award—the Exceptional Service Award.

Adolf Goetzberger

Solar energy pioneer

Life Fellow, 94; died 24 February

Goetzberger founded the Fraunhofer Institute for Solar Energy Systems (ISE), a solar energy R&D company in Freiburg, Germany. He is known for pioneering the concept of agrivoltaics—the dual use of land for solar energy production and agriculture.

After earning a Ph.D. in physics in 1955 from the University of Munich, Goetzberger moved to the United States. He joined Shockley Semiconductor Laboratory in Palo Alto, Calif., in 1956 as a researcher. The semiconductor manufacturer was founded by Nobel laureate William Shockley. Goetzberger later left Shockley to join Bell Labs, in Murray Hill, N.J.

He moved back to Germany in 1968 and was appointed director of the Fraunhofer Institute for Applied Solid-State Physics, in Breisgau. There, he founded a solar energy working group and pushed for an independent institute dedicated to the field, which became ISE in 1981.

In 1983, Goetzberger became the first German national to receive the J.J. Ebers Award from the IEEE Electron Devices Society. It honored him for developing a silicon field-effect transistor. Goetzberger also received the 1997 IEEE William R. Cherry Award, the 1989 Medal of the Merit of the State of Baden-Württemberg, and the 1992 Order of Merit First Class of the Federal Republic of Germany.

Michael Barnoski

Fiber optics pioneer

Life senior member, 83; died 23 February

Barnoski founded two optics companies and codeveloped the optical time domain reflectometer, a device that detects breaks in fiber optic cables.

After receiving a bachelor’s degree in electrical engineering from the University of Dayton, in Ohio, Barnoski joined Honeywell in Boston. After 10 years at the company, he left to work at Hughes Research Laboratories, in Malibu, Calif. For a decade, he led all fiber optics–related activities for Hughes Aircraft and managed a global team of scientists, engineers, and technicians.

In 1976, Barnoski collaborated with Corning Glass Works, a materials science company in New York, to develop the optical time domain reflectometer.

Three years later, Theodore Maiman, inventor of the laser, recruited Barnoski to join TRW, an electronics company in Euclid, Ohio. In 1980, Barnoski founded PlessCor Optronics laboratory, an integrated electrical-optical interface supplier, in Chatsworth, Calif. He served as president and CEO until 1990, when he left and began consulting.

In 2002, Barnoski founded Nanoprecision Products Inc., a company that specialized in ultraprecision 3D stamping, in El Segundo, Calif.

In addition to his work in the private sector, Barnoski taught summer courses at the University of California, Santa Barbara, for 20 years. He also wrote and edited three books on the fundamentals of optical fiber communications. He retired in 2018.

For his contributions to fiber optics, he received the 1988 John Tyndall Award, jointly presented by the IEEE Photonics Society and the Optical Society of America.

Barnoski also earned a master’s degree in microwave electronics and a Ph.D. in electrical engineering and applied physics, both from Cornell.

Kanaiyalal R. Shah

Founder of Shah and Associates

Senior member, 84; died 6 December

Shah was founder and president of Shah and Associates (S&A), an electrical systems consulting firm, in Gaithersburg, Md.

Shah received a bachelor’s degree in electrical engineering in 1961 from the Baroda College (now the Maharaja Sayajirao University of Baroda), in India. After earning a master’s degree in electrical machines in 1963 from Gujarat University, in India, Shah emigrated to the United States. Two years later, he received a master’s degree in electrical engineering from the University of Missouri in Rolla.

In 1967, he moved to Virginia and joined the Virginia Military Institute’s electrical engineering faculty, in Lexington. He left to move to Missouri, earning a Ph.D. in EE from the University of Missouri in Columbia, in 1969. He then moved back to Virginia and taught electrical engineering for two years at Virginia Tech.

From 1971 to 1973, Shah worked as a research engineer at Hughes Research Laboratories, in Malibu, Calif. He left to manage R&D at engineering services company Gilbert/Commonwealth International, in Jackson, Mich.

Around this time, Shah founded S&A, where he designed safe and efficient electrical systems. He developed novel approaches to ensuring safety in electrical power transmission and distribution, including patenting a UV lighting power system. He also served as an expert witness in electrical safety injury lawsuits.

He later returned to academia, lecturing at George Washington University and Ohio State University. Shah also wrote a series of short courses on power engineering. In 2005, he funded the construction and running of the Dr. K.R. Shah Higher Secondary School and the Smt. D.K. Shah Primary School in his hometown of Bhaner, Gujarat, in India.

John Brooks Slaughter

First African American director of the National Science Foundation

Life Fellow, 89; died 6 December

Slaughter, who directed the NSF in the early 1980s, was a passionate advocate for providing opportunities for underrepresented minorities and women in the science, technology, engineering, and mathematics fields.

Later in his career, he was a distinguished professor of engineering and education at the University of Southern California Viterbi School of Engineering, in Los Angeles. He helped found the school’s Center for Engineering Diversity, which was renamed the John Brooks Slaughter Center for Engineering Diversity in 2023, as a tribute to his efforts.

After earning a bachelor’s degree in engineering in 1956 from Kansas State University, in Manhattan, Slaughter developed military aircraft at General Dynamics’ Convair division in San Diego. From there, he moved on to the information systems technology department in the U.S. Navy Electronics Laboratory, also located in the city. He earned a master’s degree in engineering in 1961 from the University of California, Los Angeles.

Slaughter earned his Ph.D. from the University of California, San Diego, in 1971 and was promoted to director of the Navy Electronics Laboratory on the same day he defended his dissertation, according to The Institute.

In 1975, he left the organization to become director of the Applied Physics Laboratory at the University of Washington, in Seattle. Two years later, Slaughter was appointed assistant director in charge of the NSF’s Astronomical, Atmospheric, Earth and Ocean Sciences Division (now called the Division of Atmospheric and Geospace Sciences), in Washington, D.C.

In 1979, he accepted the position of academic vice president and provost of Washington State University, in Pullman. The following year, he was appointed director of the NSF by U.S. President Jimmy Carter’s administration. Under Slaughter’s leadership, the organization bolstered funding for science programs at historically Black colleges and universities, including Howard University, in Washington, D.C. While Harvard, Stanford, and Caltech traditionally received preference from the NSF for funding new facilities and equipment, Slaughter encouraged less prestigious universities to apply and compete for those grants.

He resigned just two years after accepting the post because he could not publicly support President Ronald Reagan’s initiatives to eradicate funding for science education, he told The Institute in a 2023 interview.

In 1982, Slaughter was appointed chancellor of the University of Maryland, in College Park. He left in 1988 to become president of Occidental College, in Los Angeles, where he helped transform the school into one of the country’s most diverse liberal arts colleges.

In 2000, Slaughter became CEO and president of the National Action Council for Minorities in Engineering, the largest provider of college scholarships for underrepresented minorities pursuing degrees at engineering schools, in Alexandria, Va.

Slaughter left the council in 2010 and joined USC. He taught courses on leadership, diversity, and technological literacy at Rossier Graduate School of Education until retiring in 2022.

Slaughter received the 2022 IEEE Founders Medal for “leadership and administration significantly advancing inclusion and racial diversity in the engineering profession across government, academic, and nonprofit organizations.”

Don Bramlett

Former IEEE Region 4 Director

Life senior member, 73; died 2 December

Bramlett served as 2009–2010 director of IEEE Region 4. He was an active volunteer with the IEEE Southeastern Michigan Section.

He worked as a senior project manager for 35 years at DTE Energy, an energy services company, in Detroit.

Bramlett was also active in the Boy Scouts of America (which will be known as Scouting America beginning in 2025). He served as leader of his local troop and was a council member. The Boy Scouts honored him with a Silver Beaver award recognizing his “exceptional character and distinguished service.”

Bramlett earned a bachelor’s degree in electrical engineering from the University of Detroit Mercy.

-

Will Scaling Solve Robotics?

by Nishanth J. Kumar on 28. May 2024. at 10:00

This post was originally published on the author’s personal blog.

Last year’s Conference on Robot Learning (CoRL) was the biggest CoRL yet, with over 900 attendees, 11 workshops, and almost 200 accepted papers. While there were a lot of cool new ideas (see this great set of notes for an overview of technical content), one particular debate seemed to be front and center: Is training a large neural network on a very large dataset a feasible way to solve robotics? [1]

Of course, some version of this question has been on researchers’ minds for a few years now. However, in the aftermath of the unprecedented success of ChatGPT and other large-scale “foundation models” on tasks that were thought to be unsolvable just a few years ago, the question was especially topical at last year’s CoRL. Developing a general-purpose robot, one that can competently and robustly execute a wide variety of tasks of interest in any home or office environment that humans can, has been perhaps the holy grail of robotics since the inception of the field. And given the recent progress of foundation models, it seems possible that scaling existing network architectures by training them on very large datasets might actually be the key to that grail.

Given how timely and significant this debate seems to be, I thought it might be useful to write a post centered around it. My main goal here is to try to present the different sides of the argument as I heard them, without bias towards any side. Almost all the content is taken directly from talks I attended or conversations I had with fellow attendees. My hope is that this serves to deepen people’s understanding around the debate, and maybe even inspire future research ideas and directions.

I want to start by presenting the main arguments I heard in favor of scaling as a solution to robotics.

Why Scaling Might Work

- It worked for Computer Vision (CV) and Natural Language Processing (NLP), so why not robotics? This was perhaps the most common argument I heard, and the one that seemed to excite most people given recent models like GPT-4V and SAM. The point here is that training a large model on an extremely large corpus of data has recently led to astounding progress on problems thought to be intractable just three or four years ago. Moreover, doing this has led to a number of emergent capabilities, where trained models are able to perform well at a number of tasks they weren’t explicitly trained for. Importantly, the fundamental method here of training a large model on a very large amount of data is general and not somehow unique to CV or NLP. Thus, there seems to be no reason why we shouldn’t observe the same incredible performance on robotics tasks.

- We’re already starting to see some evidence that this might work well: Chelsea Finn, Vincent Vanhoucke, and several others pointed to the recent RT-X and RT-2 papers from Google DeepMind as evidence that training a single model on large amounts of robotics data yields promising generalization capabilities. Russ Tedrake of Toyota Research Institute (TRI) and MIT pointed to the recent Diffusion Policies paper as showing a similar surprising capability. Sergey Levine of UC Berkeley highlighted recent efforts and successes from his group in building and deploying a robot-agnostic foundation model for navigation. All of these works are somewhat preliminary in that they train a relatively small model with a paltry amount of data compared to something like GPT-4V, but they do suggest that scaling up these models and datasets could yield impressive results in robotics.

- Progress in data, compute, and foundation models are waves that we should ride: This argument is closely related to the above one, but distinct enough that I think it deserves to be discussed separately. The main idea here comes from Rich Sutton’s influential essay: The history of AI research has shown that relatively simple algorithms that scale well with data always outperform more complex/clever algorithms that do not. A nice analogy from Karol Hausman’s early career keynote is that improvements to data and compute are like a wave that is bound to happen given the progress and adoption of technology. Whether we like it or not, there will be more data and better compute. As AI researchers, we can either choose to ride this wave, or we can ignore it. Riding this wave means recognizing all the progress that’s happened because of large data and large models, and then developing algorithms, tools, datasets, etc. to take advantage of this progress. It also means leveraging large pre-trained models from vision and language that currently exist or will exist for robotics tasks.

- Robotics tasks of interest lie on a relatively simple manifold, and training a large model will help us find it: This was something rather interesting that Russ Tedrake pointed out during a debate in the workshop on robustly deploying learning-based solutions. The manifold hypothesis as applied to robotics roughly states that, while the space of possible tasks we could conceive of having a robot do is impossibly large and complex, the tasks that actually occur practically in our world lie on some much lower-dimensional and simpler manifold of this space. By training a single model on large amounts of data, we might be able to discover this manifold. If we believe that such a manifold exists for robotics — which certainly seems intuitive — then this line of thinking would suggest that robotics is not somehow different from CV or NLP in any fundamental way. The same recipe that worked for CV and NLP should be able to discover the manifold for robotics and yield a shockingly competent generalist robot. Even if this doesn’t exactly happen, Tedrake points out that attempting to train a large model for general robotics tasks could teach us important things about the manifold of robotics tasks, and perhaps we can leverage this understanding to solve robotics.

- Large models are the best approach we have to get at “common sense” capabilities, which pervade all of robotics: Another thing Russ Tedrake pointed out is that “common sense” pervades almost every robotics task of interest. Consider the task of having a mobile manipulation robot place a mug onto a table. Even if we ignore the challenging problems of finding and localizing the mug, there are a surprising number of subtleties to this problem. What if the table is cluttered and the robot has to move other objects out of the way? What if the mug accidentally falls on the floor and the robot has to pick it up again, re-orient it, and place it on the table? And what if the mug has something in it, so it’s important it’s never overturned? These “edge cases” are actually much more common than it might seem, and often are the difference between success and failure for a task. Moreover, these seem to require some sort of “common sense” reasoning to deal with. Several people argued that large models trained on a large amount of data are the best way we know of to yield some aspects of this “common sense” capability. Thus, they might be the best way we know of to solve general robotics tasks.

As you might imagine, there were a number of arguments against scaling as a practical solution to robotics. Interestingly, almost no one directly disputes that this approach could work in theory. Instead, most arguments fall into one of two buckets: (1) arguing that this approach is simply impractical, and (2) arguing that even if it does kind of work, it won’t really “solve” robotics.

Why Scaling Might Not Work

It’s impractical

- We currently just don’t have much robotics data, and there’s no clear way we’ll get it: This is the elephant in pretty much every large-scale robot learning room. The Internet is chock-full of data for CV and NLP, but not at all for robotics. Recent efforts to collect very large datasets have required tremendous amounts of time, money, and cooperation, yet have yielded a very small fraction of the amount of vision and text data on the Internet. CV and NLP got so much data because they had an incredible “data flywheel”: tens of millions of people connecting to and using the Internet. Unfortunately for robotics, there seems to be no reason why people would upload a bunch of sensory input and corresponding action pairs. Collecting a very large robotics dataset seems quite hard, and given that we know that a lot of important “emergent” properties only showed up in vision and language models at scale, the inability to get a large dataset could render this scaling approach hopeless.

- Robots have different embodiments: Another challenge with collecting a very large robotics dataset is that robots come in a large variety of different shapes, sizes, and form factors. The output control actions that are sent to a Boston Dynamics Spot robot are very different from those sent to a KUKA iiwa arm. Even if we ignore the problem of finding some kind of common output space for a large trained model, the variety in robot embodiments means we’ll probably have to collect data from each robot type, and that makes the above data-collection problem even harder.

- There is extremely large variance in the environments we want robots to operate in: For a robot to really be “general purpose,” it must be able to operate in any practical environment a human might want to put it in. This means operating in any possible home, factory, or office building it might find itself in. Collecting a dataset that has even just one example of every possible building seems impractical. Of course, the hope is that we would only need to collect data in a small fraction of these, and the rest will be handled by generalization. However, we don’t know how much data will be required for this generalization capability to kick in, and it very well could also be impractically large.

- Training a model on such a large robotics dataset might be too expensive/energy-intensive: It’s no secret that training large foundation models is expensive, both in terms of money and in energy consumption. GPT-4V, OpenAI’s biggest foundation model at the time of this writing, reportedly cost over US $100 million and 50 million kWh of electricity to train. This is well beyond the budget and resources that any academic lab can currently spare, so a larger robotics foundation model would need to be trained by a company or a government of some kind. Additionally, depending on how large the dataset and the model for such an endeavor are, the costs may balloon by another order of magnitude or more, which might make it completely infeasible.

Even if it works as well as in CV/NLP, it won’t solve robotics

- The 99.X problem and long tails: Vincent Vanhoucke of Google Robotics started a talk with a provocative assertion: Most, if not all, robot learning approaches cannot be deployed for any practical task. The reason? Real-world industrial and home applications typically require 99.X percent or higher accuracy and reliability. What exactly that means varies by application, but it’s safe to say that robot learning algorithms aren’t there yet. Most results presented in academic papers top out at an 80 percent success rate. While that might seem quite close to the 99.X percent threshold, people trying to actually deploy these algorithms have found that it isn’t so: getting higher success rates requires increasingly more effort as we get closer to 100 percent. That means going from 85 to 90 percent might require as much effort as going from 40 to 80 percent, if not more. Vanhoucke asserted in his talk that getting up to 99.X percent is a fundamentally different beast than getting even up to 80 percent, one that might require a whole host of new techniques beyond just scaling.

- Existing big models don’t get to 99.X percent even in CV and NLP: As impressive and capable as current large models like GPT-4V and DETIC are, even they don’t achieve 99.X percent or higher success rate on previously-unseen tasks. Current robotics models are very far from this level of performance, and I think it’s safe to say that the entire robot learning community would be thrilled to have a general model that does as well on robotics tasks as GPT-4V does on NLP tasks. However, even if we had something like this, it wouldn’t be at 99.X percent, and it’s not clear that it’s possible to get there by scaling either.

- Self-driving car companies have tried this approach, and it doesn’t fully work (yet): This is closely related to the above point, but important and subtle enough that I think it deserves to stand on its own. A number of self-driving car companies, most notably Tesla and Wayve, have tried training such an end-to-end big model on large amounts of data to achieve Level 5 autonomy. Not only do these companies have the engineering resources and money to train such models, but they also have the data. Tesla in particular has a fleet of over 100,000 cars deployed in the real world from which it is constantly collecting and annotating data. These cars are being teleoperated by experts, making the data ideal for large-scale supervised learning. And despite all this, Tesla has so far been unable to produce a Level 5 autonomous driving system. That’s not to say its approach doesn’t work at all. It competently handles a large number of situations, especially highway driving, and serves as a useful Level 2 (i.e., driver assist) system. However, it’s far from 99.X percent performance. Moreover, data seems to suggest that Tesla’s approach is faring far worse than those of Waymo or Cruise, which both use much more modular systems. While it isn’t inconceivable that Tesla’s approach could end up catching up to and surpassing its competitors’ performance in a year or so, the fact that it hasn’t worked yet should perhaps serve as evidence that the 99.X percent problem is hard to overcome for a large-scale ML approach. Moreover, given that self-driving is a special case of general robotics, Tesla’s case should give us reason to doubt the large-scale model approach as a full solution to robotics, especially in the medium term.

- Many robotics tasks of interest are quite long-horizon: Accomplishing any task requires taking a number of correct actions in sequence. Consider the relatively simple problem of making a cup of tea given an electric kettle, water, a box of tea bags, and a mug. Success requires pouring the water into the kettle, turning it on, then pouring the hot water into the mug, and placing a tea bag inside it. If we want to solve this with a model trained to output motor torque commands given pixels as input, we’ll need to send torque commands to all 7 motors at around 40 Hz. Let’s suppose that this tea-making task requires 5 minutes. That requires 7 * 40 * 60 * 5 = 84,000 correct torque commands. This is all just for a stationary robot arm; things get much more complicated if the robot is mobile, or has more than one arm. It is well known that error tends to compound over longer horizons for most tasks. This is one reason why, despite their ability to produce long sequences of text, even LLMs cannot yet produce completely coherent novels or long stories: small deviations from a true prediction tend to add up over time and yield extremely large deviations over long horizons. Given that most, if not all, robotics tasks of interest require sending at least thousands, if not hundreds of thousands, of torques in just the right order, even a fairly well-performing model might really struggle to fully solve these robotics tasks.
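To make the long-horizon arithmetic above concrete, here is a minimal sketch in Python. It assumes, purely for illustration, that every torque command is independently correct with some fixed probability and that a single wrong command fails the task. Real controllers can often recover from small errors, so treat this as a pessimistic toy model rather than a measurement. The function names and the per-command accuracies are made up for this example.

```python
def torque_commands(num_motors: int, rate_hz: float, duration_s: float) -> int:
    """Total number of per-motor torque commands sent during a task."""
    return round(num_motors * rate_hz * duration_s)


def task_success_probability(per_command_accuracy: float, num_commands: int) -> float:
    """Chance that every command is correct.

    Toy assumption: errors are independent and any single error is fatal.
    """
    return per_command_accuracy ** num_commands


if __name__ == "__main__":
    # The tea-making example: 7 motors, 40 Hz, 5 minutes.
    n = torque_commands(num_motors=7, rate_hz=40, duration_s=5 * 60)
    print(n)  # 84000

    # Hypothetical per-command accuracies, chosen only to show the trend.
    for accuracy in (0.999, 0.9999, 0.99999):
        print(accuracy, task_success_probability(accuracy, n))
```

Under these assumptions, even a per-command accuracy of 99.999 percent gives only about a 43 percent chance of finishing the task without a single mistake, and 99.99 percent drops that to roughly 0.02 percent. That is the sense in which small errors compound over long horizons.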

Okay, now that we’ve sketched out all the main points on both sides of the debate, I want to spend some time diving into a few related points. Many of these are responses to the above points on the ‘against’ side, and some of them are proposals for directions to explore to help overcome the issues raised.

Miscellaneous Related Arguments

We can probably deploy learning-based approaches robustly

One point that gets brought up a lot against learning-based approaches is the lack of theoretical guarantees. At the time of this writing, we know very little about neural network theory: we don’t really know why they learn well, and more importantly, we don’t have any guarantees on what values they will output in different situations. On the other hand, most classical control and planning approaches that are widely used in robotics have various theoretical guarantees built-in. These are generally quite useful when certifying that systems are safe.

However, there seemed to be general consensus amongst a number of CoRL speakers that this point is perhaps given more significance than it should be. Sergey Levine pointed out that most of the guarantees from controls aren’t really that useful for a number of real-world tasks we’re interested in. As he put it: “self-driving car companies aren’t worried about controlling the car to drive in a straight line, but rather about a situation in which someone paints a sky onto the back of a truck and drives in front of the car,” thereby confusing the perception system. Moreover, Scott Kuindersma of Boston Dynamics talked about how they’re deploying RL-based controllers on their robots in production, and are able to get the confidence and guarantees they need via rigorous simulation and real-world testing. Overall, I got the sense that while people feel that guarantees are important, and encouraged researchers to keep trying to study them, they don’t think that the lack of guarantees for learning-based systems means that they cannot be deployed robustly.

What if we strive to deploy Human-in-the-Loop systems?

In one of the organized debates, Emo Todorov pointed out that existing successful ML systems, like Codex and ChatGPT, work well only because a human interacts with and sanitizes their output. Consider the case of coding with Codex: it isn’t intended to directly produce runnable, bug-free code, but rather to act as an intelligent autocomplete for programmers, thereby making the overall human-machine team more productive than either alone. In this way, these models don’t have to achieve the 99.X percent performance threshold, because a human can help correct any issues during deployment. As Emo put it: “humans are forgiving, physics is not.”

Chelsea Finn responded to this by largely agreeing with Emo. She strongly agreed that all successfully-deployed and useful ML systems have humans in the loop, and so this is likely the setting that deployed robot learning systems will need to operate in as well. Of course, having a human operate in the loop with a robot isn’t as straightforward as in other domains, since having a human and robot inhabit the same space introduces potential safety hazards. However, it’s a useful setting to think about, especially if it can help address issues brought on by the 99.X percent problem.

Maybe we don’t need to collect that much real world data for scaling

A number of people at the conference were thinking about creative ways to overcome the real-world data bottleneck without actually collecting more real-world data. Quite a few of these people argued that fast, realistic simulators could be vital here, and there were a number of works that explored creative ways to train robot policies in simulation and then transfer them to the real world. Another set of people argued that we can leverage existing vision, language, and video data and then just “sprinkle in” some robotics data. Google’s recent RT-2 model showed how taking a large model trained on Internet-scale vision and language data, and then just fine-tuning it on a much smaller set of robotics data, can produce impressive performance on robotics tasks. Perhaps through a combination of simulation and pretraining on general vision and language data, we won’t actually have to collect too much real-world robotics data to get scaling to work well for robotics tasks.

Maybe combining classical and learning-based approaches can give us the best of both worlds

As with any debate, there were quite a few people advocating the middle path. Scott Kuindersma of Boston Dynamics titled one of his talks “Let’s all just be friends: model-based control helps learning (and vice versa).” Throughout his talk, and the subsequent debates, he expressed his strong belief that in the short to medium term, the best path towards reliable real-world systems involves combining learning with classical approaches. In her keynote speech for the conference, Andrea Thomaz talked about how such a hybrid system (using learning for perception and a few skills, and classical SLAM and path-planning for the rest) is what powers a real-world robot that’s deployed in tens of hospital systems in Texas (and growing!). Several papers explored how classical controls and planning, together with learning-based approaches, can enable much more capability than any system on its own. Overall, most people seemed to argue that this “middle path” is extremely promising, especially in the short to medium term, but perhaps in the long term either pure learning or an entirely different set of approaches might be best.

What Can/Should We Take Away From All This?

If you’ve read this far, chances are that you’re interested in some set of takeaways/conclusions. Perhaps you’re thinking “this is all very interesting, but what does all this mean for what we as a community should do? What research problems should I try to tackle?” Fortunately for you, there seemed to be a number of interesting suggestions that had some consensus on this.

We should pursue the direction of trying to just scale up learning with very large datasets

Despite the various arguments against scaling solving robotics outright, most people seem to agree that scaling in robot learning is a promising direction to be investigated. Even if it doesn’t fully solve robotics, it could lead to a significant amount of progress on a number of hard problems we’ve been stuck on for a while. Additionally, as Russ Tedrake pointed out, pursuing this direction carefully could yield useful insights about the general robotics problem, as well as current learning algorithms and why they work so well.

We should also pursue other existing directions

Even the most vocal proponents of the scaling approach were clear that they don’t think everyone should be working on this. It’s likely a bad idea for the entire robot learning community to put its eggs in the same basket, especially given all the reasons to believe scaling won’t fully solve robotics. Classical robotics techniques have gotten us quite far, and led to many successful and reliable deployments: pushing forward on them or integrating them with learning techniques might be the right way forward, especially in the short to medium term.

We should focus more on real-world mobile manipulation and easy-to-use systems

Vincent Vanhoucke observed that most papers at last year’s CoRL were limited to tabletop manipulation settings. While there are plenty of hard tabletop problems, things generally get a lot more complicated when the robot, and consequently its camera view, moves. Vanhoucke speculated that it’s easy for the community to fall into a local minimum where we make a lot of progress that’s specific to the tabletop setting and therefore not generalizable. A similar thing could happen if we work predominantly in simulation. Avoiding these local minima by working on real-world mobile manipulation seems like a good idea.

Separately, Sergey Levine observed that a big reason why LLMs have seen so much excitement and adoption is that they’re extremely easy to use, especially by non-experts. One doesn’t have to know about the details of training an LLM, or perform any tough setup, to prompt and use these models for one’s own tasks. Most robot learning approaches are currently far from this. They often require significant knowledge of their inner workings to use, and involve very significant amounts of setup. Perhaps thinking more about how to make robot learning systems easier to use and widely applicable could help improve adoption and potentially scalability of these approaches.

We should be more forthright about things that don’t work

There seemed to be a broadly-held complaint that many robot learning approaches don’t adequately report negative results, and this leads to a lot of unnecessary repeated effort. Additionally, perhaps patterns might emerge from consistent failures of things that we expect to work but don’t actually work well, and this could yield novel insight into learning algorithms. There is currently no good incentive for researchers to report such negative results in papers, but most people seemed to be in favor of designing one.

We should try to do something totally new

There were a few people who pointed out that all current approaches, be they learning-based or classical, are unsatisfying in a number of ways. There seem to be a number of drawbacks with each of them, and it’s very conceivable that there is a completely different set of approaches that ultimately solves robotics. Given this, it seems useful to try to think outside the box. After all, every one of the current approaches that’s part of the debate was only made possible because the few researchers who introduced them dared to think against the popular grain of their times.

Acknowledgements: Huge thanks to Tom Silver and Leslie Kaelbling for providing helpful comments, suggestions, and encouragement on a previous draft of this post.

—

[1] In fact, this was the topic of a popular debate hosted at a workshop on the first day; many of the points in this post were inspired by the conversation during that debate.

-

Do We Dare Use Generative AI for Mental Health?

by Aaron Pavez on 26. May 2024. at 15:00

The mental-health app Woebot launched in 2017, back when “chatbot” wasn’t a familiar term and someone seeking a therapist could only imagine talking to a human being. Woebot was something exciting and new: a way for people to get on-demand mental-health support in the form of a responsive, empathic, AI-powered chatbot. Users found that the friendly robot avatar checked in on them every day, kept track of their progress, and was always available to talk something through.

Today, the situation is vastly different. Demand for mental-health services has surged while the supply of clinicians has stagnated. There are thousands of apps that offer automated support for mental health and wellness. And ChatGPT has helped millions of people experiment with conversational AI.

But even as the world has become fascinated with generative AI, people have also seen its downsides. As a company that relies on conversation, Woebot Health had to decide whether generative AI could make Woebot a better tool, or whether the technology was too dangerous to incorporate into our product.

Woebot is designed to have structured conversations through which it delivers evidence-based tools inspired by cognitive behavioral therapy (CBT), a technique that aims to change behaviors and feelings. Throughout its history, Woebot Health has used technology from a subdiscipline of AI known as natural-language processing (NLP). The company has used AI artfully and by design—Woebot uses NLP only in the service of better understanding a user’s written texts so it can respond in the most appropriate way, thus encouraging users to engage more deeply with the process.

Woebot, which is currently available in the United States, is not a generative-AI chatbot like ChatGPT. The differences are clear in both the bot’s content and structure. Everything Woebot says has been written by conversational designers trained in evidence-based approaches who collaborate with clinical experts; ChatGPT generates all sorts of unpredictable statements, some of which are untrue. Woebot relies on a rules-based engine that resembles a decision tree of possible conversational paths; ChatGPT uses statistics to determine what its next words should be, given what has come before.
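As a rough illustration of the rules-based side of that contrast, here is a toy conversation engine in Python. It is not Woebot’s actual implementation: the node names, prompts, and choices are invented placeholders. The only point is that every line the bot can say is written in advance, and user input merely selects a path through the tree.

```python
# Hypothetical conversation tree: every utterance is scripted in advance.
# Node names, prompts, and choices are illustrative placeholders only.
TREE = {
    "start": {
        "say": "How are you feeling right now?",
        "next": {"okay": "wrap_up", "stressed": "explore"},
    },
    "explore": {
        "say": "Would you like to look at the thought behind that feeling?",
        "next": {"yes": "reframe", "no": "wrap_up"},
    },
    "reframe": {
        "say": "Let's write the thought down and examine it together.",
        "next": {},
    },
    "wrap_up": {
        "say": "Thanks for checking in. I'm here whenever you want to talk.",
        "next": {},
    },
}


def run_conversation(tree, choices, node="start"):
    """Walk the tree and return the scripted lines the bot would say."""
    transcript = [tree[node]["say"]]
    for choice in choices:
        next_node = tree[node]["next"].get(choice)
        if next_node is None:
            # Unrecognized choice or end of a path: say nothing unscripted.
            break
        node = next_node
        transcript.append(tree[node]["say"])
    return transcript


if __name__ == "__main__":
    for line in run_conversation(TREE, ["stressed", "yes"]):
        print(line)
```

Because the engine can only emit strings that appear in the tree, its output is fully predictable and reviewable ahead of time. A generative model, by contrast, samples each next word from a learned probability distribution, so its output cannot be enumerated in advance.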

The rules-based approach has served us well, protecting Woebot’s users from the types of chaotic conversations we observed from early generative chatbots. Prior to ChatGPT, open-ended conversations with generative chatbots were unsatisfying and easily derailed. One famous example is Microsoft’s Tay, a chatbot that was meant to appeal to millennials but turned lewd and racist in less than 24 hours.

But with the advent of ChatGPT in late 2022, we had to ask ourselves: Could the new large language models (LLMs) powering chatbots like ChatGPT help our company achieve its vision? Suddenly, hundreds of millions of users were having natural-sounding conversations with ChatGPT about anything and everything, including their emotions and mental health. Could this new breed of LLMs provide a viable generative-AI alternative to the rules-based approach Woebot has always used? The AI team at Woebot Health, including the authors of this article, was asked to find out.

Woebot, a mental-health chatbot, deploys concepts from cognitive behavioral therapy to help users. This demo shows how users interact with Woebot using a combination of multiple-choice responses and free-written text.

The Origin and Design of Woebot

Woebot got its start when the clinical research psychologist Alison Darcy, with support from the AI pioneer Andrew Ng, led the build of a prototype intended as an emotional support tool for young people. Darcy and another member of the founding team, Pierre Rappolt, took inspiration from video games as they looked for ways for the tool to deliver elements of CBT. Many of their prototypes contained interactive fiction elements, which then led Darcy to the chatbot paradigm. The first version of the chatbot was studied in a randomized controlled trial that offered mental-health support to college students. Based on the results, Darcy raised US $8 million from New Enterprise Associates and Andrew Ng’s AI Fund.

The Woebot app is intended to be an adjunct to human support, not a replacement for it. It was built according to a set of principles that we call Woebot’s core beliefs, which were shared on the day it launched. These tenets express a strong faith in humanity and in each person’s ability to change, choose, and grow. The app does not diagnose, it does not give medical advice, and it does not force its users into conversations. Instead, the app follows a Buddhist principle that’s prevalent in CBT of “sitting with open hands”—it extends invitations that the user can choose to accept, and it encourages process over results. Woebot facilitates a user’s growth by asking the right questions at optimal moments, and by engaging in a type of interactive self-help that can happen anywhere, anytime.

A Convenient Companion

Users interact with Woebot either by choosing prewritten responses or by typing in whatever text they’d like, which Woebot parses using AI techniques. Woebot deploys concepts from cognitive behavioral therapy to help users change their thought patterns. Here, it first asks a user to write down negative thoughts, then explains the cognitive distortions at work. Finally, Woebot invites the user to recast a negative statement in a positive way. (Not all exchanges are shown.)

These core beliefs strongly influenced both Woebot’s engineering architecture and its product-development process. Careful conversational design is crucial for ensuring that interactions conform to our principles. Test runs through a conversation are read aloud in “table reads,” and then revised to better express the core beliefs and flow more naturally. The user side of the conversation is a mix of multiple-choice responses and “free text,” or places where users can write whatever they wish.

Building an app that supports human health is a high-stakes endeavor, and we’ve taken extra care to adopt the best software-development practices. From the start, enabling content creators and clinicians to collaborate on product development required custom tools. An initial system using Google Sheets quickly became unscalable, and the engineering team replaced it with a proprietary Web-based “conversational management system” written in the JavaScript library React.

Within the system, members of the writing team can create content, play back that content in a preview mode, define routes between content modules, and find places for users to enter free text, which our AI system then parses. The result is a large rules-based tree of branching conversational routes, all organized within modules such as “social skills training” and “challenging thoughts.” These modules are translated from psychological mechanisms within CBT and other evidence-based techniques.
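To make the idea of a rules-based tree of conversational routes concrete, here is a minimal sketch in Python. It is not Woebot's code: the `Node` structure, the node names, and the sample wording are all hypothetical, and a real system would hold far more content. The point it illustrates is that every bot message is prewritten and the user's input only selects a route.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """One scripted message plus the routes leading out of it."""
    text: str
    # Maps a multiple-choice label to the id of the next node.
    choices: dict = field(default_factory=dict)
    # If set, the node accepts free text and continues to this node id.
    free_text_next: Optional[str] = None


# Hypothetical module content; every line is prewritten, none is generated.
MODULE = {
    "start": Node("Want to work through a thought together?",
                  {"Sure": "ask_thought", "Not now": "goodbye"}),
    "ask_thought": Node("Write down the thought that's bothering you.",
                        free_text_next="explain"),
    "explain": Node("Thanks for sharing. Let's look at it more closely.",
                    {"OK": "goodbye"}),
    "goodbye": Node("No problem. I'm here whenever you'd like to talk."),
}


def step(node_id: str, user_input: str) -> str:
    """Return the id of the next node, or stay put on unrecognized input."""
    node = MODULE[node_id]
    if user_input in node.choices:
        return node.choices[user_input]
    if node.free_text_next is not None:
        return node.free_text_next
    return node_id


def run(inputs: list, start: str = "start") -> list:
    """Replay a list of user inputs and collect what the bot says."""
    node_id = start
    transcript = [MODULE[node_id].text]
    for user_input in inputs:
        node_id = step(node_id, user_input)
        transcript.append(MODULE[node_id].text)
    return transcript
```

Calling `run(["Sure", "I always mess up", "OK"])` walks from the opening question through the free-text node to the closing message. In this sketch the free text is simply accepted; in a fuller design it would be parsed to choose among several next nodes.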

How Woebot Uses AI

While everything Woebot says is written by humans, NLP techniques are used to help understand the feelings and problems users are facing; then Woebot can offer the most appropriate modules from its deep bank of content. When users enter free text about their thoughts and feelings, we use NLP to parse these text inputs and route the user to the best response.

In Woebot’s early days, the engineering team used regular expressions, or “regexes,” to understand the intent behind these text inputs. Regexes are a text-processing method that relies on pattern matching within sequences of characters. Woebot’s regexes were quite complicated in some cases, and were used for everything from parsing simple yes/no responses to learning a user’s preferred nickname.
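As an illustration of this kind of pattern matching, the following Python sketch handles the two tasks mentioned above: yes/no answers and nicknames. The patterns are our own simplified examples, not the expressions Woebot used, and they cover only a handful of phrasings.

```python
import re

# Simplified, hypothetical patterns; production regexes would cover many more cases.
YES = re.compile(r"^\s*(y|yes|yeah|yep|sure|ok|okay)\b", re.IGNORECASE)
NO = re.compile(r"^\s*(n|no|nope|nah|not really)\b", re.IGNORECASE)
NICKNAME = re.compile(r"\b(?:call me|my name is)\s+([A-Za-z][A-Za-z'-]*)",
                      re.IGNORECASE)


def parse_yes_no(text: str):
    """Return 'yes', 'no', or None when the reply matches neither pattern."""
    if YES.search(text):
        return "yes"
    if NO.search(text):
        return "no"
    return None


def parse_nickname(text: str):
    """Return the name following 'call me' or 'my name is', if any."""
    match = NICKNAME.search(text)
    return match.group(1) if match else None
```

Even this small example hints at why such patterns grow complicated: "Nothing much" must not be read as "no," and every new phrasing of a name introduction needs its own alternative.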

Later in Woebot’s development, the AI team replaced regexes with classifiers trained with supervised learning. The process for creating AI classifiers that comply with regulatory standards was involved—each classifier required months of effort. Typically, a team of internal-data labelers and content creators reviewed examples of user messages (with all personally identifiable information stripped out) taken from a specific point in the conversation. Once the data was placed into categories and labeled, classifiers were trained that could take new input text and place it into one of the existing categories.

This process was repeated many times, with the classifier repeatedly evaluated against a test dataset until its performance satisfied us. As a final step, the conversational-management system was updated to “call” these AI classifiers (essentially activating them) and then to route the user to the most appropriate content. For example, if a user wrote that he was feeling angry because he got in a fight with his mom, the system would classify this response as a relationship problem.
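The classify-then-route step can be sketched in a few lines of Python. This is a toy stand-in under loud assumptions: a bag-of-words Naive Bayes model replaces the production classifiers, and the training sentences, category names, and module names are invented for illustration.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labeled examples; real training data would be far larger.
TRAIN = [
    ("i had a fight with my mom", "relationship"),
    ("my boyfriend and i keep arguing", "relationship"),
    ("my sister is not talking to me", "relationship"),
    ("i have too much work and a deadline", "work"),
    ("my boss is unhappy with my project", "work"),
    ("i can not sleep at night", "sleep"),
    ("i keep waking up tired", "sleep"),
]

# Hypothetical mapping from predicted category to a content module.
ROUTES = {
    "relationship": "relationship_module",
    "work": "work_stress_module",
    "sleep": "sleep_module",
}


def train(examples):
    """Count words per label; these counts are the whole 'model'."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    vocab = set()
    for text, label in examples:
        words = text.lower().split()
        word_counts[label].update(words)
        label_counts[label] += 1
        vocab.update(words)
    return word_counts, label_counts, vocab


def classify(text, model):
    """Pick the label with the highest Naive Bayes log-probability."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total)
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            if word in vocab:  # words never seen in training are ignored
                # Add-one smoothing keeps unseen label/word pairs nonzero.
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


def route(text, model):
    """Classify the message, then look up the module to send the user to."""
    return ROUTES[classify(text, model)]
```

With `model = train(TRAIN)`, the message "i am angry because i got in a fight with my mom" is classified as a relationship problem and routed to the relationship module, mirroring the example in the text.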

The technology behind these classifiers is constantly evolving. In the early days, the team used an open-source library for text classification called fastText, sometimes in combination with regular expressions. As AI continued to advance and new models became available, the team was able to train new models on the same labeled data for improvements in both accuracy and recall. For example, when the early transformer model BERT was released in October 2018, the team rigorously evaluated its performance against the fastText version. BERT was superior in both precision and recall for our use cases, and so the team replaced all fastText classifiers with BERT and launched the new models in January 2019. We immediately saw improvements in classification accuracy across the models.
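For readers unfamiliar with the two metrics, this short Python sketch shows how precision and recall can be computed for one category and used to compare two models on the same labeled test set. The labels and predictions are made up for illustration and do not reflect Woebot's results.

```python
def precision_recall(y_true, y_pred, positive):
    """Precision and recall for one category, treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical test labels and predictions from an older and a newer model.
y_true = ["rel", "rel", "work", "sleep", "rel", "work"]
older = ["rel", "work", "work", "rel", "rel", "work"]
newer = ["rel", "rel", "work", "sleep", "rel", "rel"]

old_p, old_r = precision_recall(y_true, older, "rel")   # 2/3 and 2/3
new_p, new_r = precision_recall(y_true, newer, "rel")   # 3/4 and 1.0
```

Precision asks how many of the messages a model placed in a category truly belong there; recall asks how many of the messages that belong there the model found. A replacement model is an easy choice when, as in this toy case, it is better on both.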

Woebot and Large Language Models

When ChatGPT was released in November 2022, Woebot was more than 5 years old. The AI team faced the question of whether LLMs like ChatGPT could be used to meet Woebot’s design goals and enhance users’ experiences, putting them on a path to better mental health.

We were excited by the possibilities, because ChatGPT could carry on fluid and complex conversations about millions of topics, far more than we could ever include in a decision tree. However, we had also heard about troubling examples of chatbots providing responses that were decidedly not supportive, including advice on how to maintain and hide an eating disorder and guidance on methods of self-harm. In one tragic case in Belgium, a grieving widow accused a chatbot of being responsible for her husband’s suicide.

The first thing we did was try out ChatGPT ourselves, and we quickly became experts in prompt engineering. For example, we prompted ChatGPT to be supportive and played the roles of different types of users to explore the system’s strengths and shortcomings. We described how we were feeling, explained some problems we were facing, and even explicitly asked for help with depression or anxiety.

A few things stood out. First, ChatGPT quickly told us we needed to talk to someone else—a therapist or doctor. ChatGPT isn’t intended for medical use, so this default response was a sensible design decision by the chatbot’s makers. But it wasn’t very satisfying to constantly have our conversation aborted. Second, ChatGPT’s responses were often bulleted lists of encyclopedia-style answers. For example, it would list six actions that could be helpful for depression. We found that these lists of items told the user what to do but didn’t explain how to take these steps. Third, in general, the conversations ended quickly and did not allow a user to engage in the psychological processes of change.

It was clear to our team that an off-the-shelf LLM would not deliver the psychological experiences we were after. LLMs are based on reward models that value the delivery of correct answers; they aren’t given incentives to guide a user through the process of discovering those results themselves. Instead of “sitting with open hands,” the models make assumptions about what the user is saying to deliver a response with the highest assigned reward.

To see if LLMs could be used within a mental-health context, we investigated ways of expanding our proprietary conversational-management system. We looked into frameworks and open-source techniques for managing prompts and prompt chains—sequences of prompts that ask an LLM to achieve a task through multiple subtasks. In January of 2023, a platform called LangChain was gaining in popularity and offered techniques for calling multiple LLMs and managing prompt chains. However, LangChain lacked some features that we knew we needed: It didn’t provide a visual user interface like our proprietary system, and it didn’t provide a way to safeguard the interactions with the LLM. We needed a way to protect Woebot users from the common pitfalls of LLMs, including hallucinations (where the LLM says things that are plausible but untrue) and simply straying off topic.
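A prompt chain can be sketched without any particular framework. In the Python below, the `llm` argument is any function that maps a prompt string to a reply string; the templates and the `fake_llm` stand-in are hypothetical, and nothing here reflects LangChain's API or our own system.

```python
from typing import Callable, List

# Any function that takes a prompt and returns the model's text reply.
LLM = Callable[[str], str]

# Hypothetical three-step chain; each step may use the previous step's output.
CHAIN = [
    "Summarize the user's concern in one sentence: {user_text}",
    "Name the main emotion in this summary: {previous}",
    "Write one open-ended question about feeling {previous}.",
]


def run_chain(templates: List[str], user_text: str, llm: LLM) -> str:
    """Run the prompts in order, feeding each reply into the next prompt."""
    previous = ""
    for template in templates:
        prompt = template.format(user_text=user_text, previous=previous)
        previous = llm(prompt)
    return previous


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return f"<reply to: {prompt[:30]}>"
```

Calling `run_chain(CHAIN, "I argued with my mom", fake_llm)` issues three prompts in sequence. Breaking a task into subtasks this way makes each prompt simpler, but it also means an error in an early step carries into every later one.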

Ultimately, we decided to expand our platform by implementing our own LLM prompt-execution engine, which gave us the ability to inject LLMs into certain parts of our existing rules-based system. The engine allows us to support concepts such as prompt chains while also providing integration with our existing conversational routing system and rules. As we developed the engine, we were fortunate to be invited into the beta programs of many new LLMs. Today, our prompt-execution engine can call more than a dozen different LLMs, including variously sized OpenAI models, Microsoft Azure versions of OpenAI models, Anthropic’s Claude, Google Bard (now Gemini), and open-source models running on the Amazon Bedrock platform, such as Meta’s Llama 2. We use this engine exclusively for exploratory research that’s been approved by an institutional review board, or IRB.
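One way to picture an LLM being injected into a single step of a rules-based flow is the Python sketch below. It is an assumption-laden illustration, not a description of our engine: the prompt wording, the blocklist, the length limit, and the function names are all hypothetical, and real safeguards would need to be far more thorough.

```python
from typing import Callable

# Hypothetical checks; substrings that suggest diagnosis or medical advice.
BLOCKED_SUBSTRINGS = ("diagnos", "medication", "prescri")
MAX_CHARS = 300


def passes_checks(reply: str) -> bool:
    """Accept only short, nonempty replies that avoid the blocked substrings."""
    lowered = reply.lower()
    if not 0 < len(reply) <= MAX_CHARS:
        return False
    return not any(fragment in lowered for fragment in BLOCKED_SUBSTRINGS)


def llm_step(user_text: str, llm: Callable[[str], str],
             scripted_fallback: str) -> str:
    """Try the LLM for this one step; fall back to prewritten text otherwise."""
    prompt = ("Reflect this message back with empathy in one sentence: "
              + user_text)
    try:
        reply = llm(prompt)
    except Exception:
        # Any model or network failure returns control to the scripted path.
        return scripted_fallback
    return reply if passes_checks(reply) else scripted_fallback
```

The design choice worth noting is that the surrounding conversation never depends on the model: if the call fails or the reply does not pass the checks, the user sees the prewritten message and the rules-based routing continues as before.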

It took us about three months to develop the infrastructure and tooling support for LLMs. Our platform allows us to package features into different products and experiments, which in turn lets us maintain control over software versions and manage our research efforts while ensuring that our commercially deployed products are unaffected. We’re not using LLMs in any of our products; the LLM-enabled features can be used only in a version of Woebot for exploratory studies.

A Trial for an LLM-Augmented Woebot

We had some false starts in our development process. We first tried creating an experimental chatbot that was almost entirely powered by generative AI; that is, the chatbot directly used the text responses from the LLM. But we ran into a couple of problems. The first issue was that the LLMs were eager to demonstrate how smart and helpful they are! This eagerness was not always a strength, as it interfered with the user’s own process.

For example, the user might be doing a thought-challenging exercise, a common tool in CBT. If the user says, “I’m a bad mom,” a good next step in the exercise could be to ask if the user’s thought is an example of “labeling,” a cognitive distortion where we assign a negative label to ourselves or others. But LLMs were quick to skip ahead and demonstrate how to reframe this thought, saying something like “A kinder way to put this would be, ‘I don’t always make the best choices, but I love my child.’” CBT exercises like thought challenging are most helpful when the person does the work themselves, coming to their own conclusions and gradually changing their patterns of thinking.

A second difficulty with LLMs was in style matching. While social media is rife with examples of LLMs responding in a Shakespearean sonnet or a poem in the style of Dr. Seuss, this format flexibility didn’t extend to Woebot’s style. Woebot has a warm tone that has been refined for years by conversational designers and clinical experts. But even with careful instructions and prompts that included examples of Woebot’s tone, LLMs produced responses that didn’t “sound like Woebot,” maybe because a touch of humor was missing, or because the language wasn’t simple and clear.

The LLM-augmented Woebot was well-behaved, refusing to take inappropriate actions like diagnosing or offering medical advice.

However, LLMs truly shone on an emotional level. When coaxing someone to talk about their joys or challenges, LLMs crafted personalized responses that made people feel understood. Without generative AI, it’s impossible to respond in a novel way to every different situation, and the conversation feels predictably “robotic.”