IEEE Spectrum

-

Powering Planes With Microwaves Is Not the Craziest Idea

by Ian McKay on 24. June 2024. at 13:00

Imagine it’s 2050 and you’re on a cross-country flight on a new type of airliner, one with no fuel on board. The plane takes off, and you rise above the airport. Instead of climbing to cruising altitude, though, your plane levels out and the engines quiet to a low hum. Is this normal? No one seems to know. Anxious passengers crane their necks to get a better view out their windows. They’re all looking for one thing.

Then it appears: a massive antenna array on the horizon. It’s sending out a powerful beam of electromagnetic radiation pointed at the underside of the plane. After soaking in that energy, the engines power up, and the aircraft continues its climb. Over several minutes, the beam will deliver just enough energy to get you to the next ground antenna located another couple hundred kilometers ahead.

The person next to you audibly exhales. You sit back in your seat and wait for your drink. Old-school EV-range anxiety is nothing next to this.

Electromagnetic waves on the fly

Beamed power for aviation is, I admit, an outrageous notion. If physics doesn’t forbid it, federal regulators or nervous passengers probably will. But compared with other proposals for decarbonizing aviation, is it that crazy?

Batteries, hydrogen, alternative carbon-based fuels—nothing developed so far can store energy as cheaply and densely as fossil fuels, or fully meet the needs of commercial air travel as we know it. So, what if we forgo storing all the energy on board and instead beam it from the ground? Let me sketch what it would take to make this idea fly.

[Illustration: Beamed Power for Aviation. Fly by Microwave: Warm up to a new kind of air travel]

For the wireless-power source, engineers would likely choose microwaves because this type of electromagnetic radiation can pass unruffled through clouds and because receivers on planes could absorb it completely, with nearly zero risk to passengers.

To power a moving aircraft, microwave radiation would need to be sent in a tight, steerable beam. This can be done using technology known as a phased array, which is commonly used to direct radar beams. With enough elements spread out sufficiently and all working together, phased arrays can also be configured to focus power on a point a certain distance away, such as the receiving antenna on a plane.

Phased arrays work on the principle of constructive and destructive interference. The radiation from the antenna elements will, of course, overlap. In some directions the radiated waves will interfere destructively and cancel out one another, and in other directions the waves will fall perfectly in phase, adding together constructively. Where the waves overlap constructively, energy radiates in that direction, creating a beam of power that can be steered electronically.
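To make the interference argument concrete, here is a minimal Python sketch of a uniform linear array. The 16 elements, half-wavelength spacing, and 20-degree steering angle are illustrative assumptions rather than figures from any real system, and `array_factor` and `beam_peak` are hypothetical helper names.

```python
import cmath
import math

def array_factor(theta_deg, steer_deg, n_elements=16, spacing_wavelengths=0.5):
    """Normalized field magnitude radiated toward theta_deg by a uniform
    linear array whose element phases are set to steer toward steer_deg."""
    # Phase difference between neighboring elements as seen from theta_deg,
    # after subtracting the phase shift applied electronically for steering.
    psi = 2 * math.pi * spacing_wavelengths * (
        math.sin(math.radians(theta_deg)) - math.sin(math.radians(steer_deg))
    )
    # Sum the unit-amplitude contributions of all elements.
    total = sum(cmath.exp(1j * n * psi) for n in range(n_elements))
    return abs(total) / n_elements

def beam_peak(steer_deg):
    """Scan from -90 to +90 degrees in half-degree steps and return the
    direction in which the contributions add up most strongly."""
    angles = [a / 2 for a in range(-180, 181)]
    return max(angles, key=lambda a: array_factor(a, steer_deg))

if __name__ == "__main__":
    print("beam points toward", beam_peak(20), "degrees")
    print("relative field at -40 degrees:", round(array_factor(-40, 20), 3))
```

In the steered direction every element's contribution arrives in phase and the normalized sum is 1. Away from it the contributions largely cancel. Changing only the phase offsets, with no moving parts, moves the peak.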

How far we can send energy in a tight beam with a phased array is governed by physics—specifically, by something called the diffraction limit. There’s a simple way to calculate the optimal case for beamed power: D1 D2 > λ R. In this mathematical inequality, D1 and D2 are the diameters of the sending and receiving antennas, λ is the wavelength of the radiation, and R is the distance between those antennas.

Now, let me offer some ballpark numbers to figure out how big the transmitting antenna (D1) must be. The size of the receiving antenna on the aircraft is probably the biggest limiting factor. A medium-size airliner has a wing and body area of about 1,000 square meters, which should provide for the equivalent of a receiving antenna that’s 30 meters wide (D2) built into the underside of the plane.

Next, let’s guess how far we would need to beam the energy. The line of sight to the horizon for someone in an airliner at cruising altitude is about 360 kilometers long, assuming the terrain below is level. But mountains would interfere, plus nobody wants range anxiety, so let’s place our ground antennas every 200 km along the flight path, each beaming energy half of that distance. That is, set R to 100 km.
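The 360-km figure can be roughly checked with the standard geometric horizon formula. This sketch assumes a spherical Earth of radius 6,371 km, a cruising altitude of about 10 km, and no atmospheric refraction. Those values are my assumptions, not numbers from the article, and `horizon_distance_km` is a hypothetical helper name.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (assumed spherical)

def horizon_distance_km(altitude_km):
    """Straight-line distance from an observer at altitude_km to the
    geometric horizon over level terrain."""
    return math.sqrt(2 * EARTH_RADIUS_KM * altitude_km + altitude_km ** 2)

if __name__ == "__main__":
    d = horizon_distance_km(10.0)  # assumed cruising altitude
    print(f"line of sight to horizon: about {d:.0f} km")
    # Stations every 200 km, each covering 100 km ahead and behind,
    # stay well inside that line of sight.
    print(f"margin over the 100 km beaming range: about {d - 100:.0f} km")
```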

Finally, assume the microwave wavelength (λ) is 5 centimeters. This provides a happy medium between a wavelength that’s too small to penetrate clouds and one that’s too large to gather back together on a receiving dish. Plugging these numbers into the equation above shows that in this scenario the diameter of the ground antennas (D1) would need to be at least about 170 meters. That’s gigantic, but perhaps not unreasonable. Imagine a series of three or four of these antennas, each the size of a football stadium, spread along the route, say, between LAX and SFO or between AMS and BER.
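The arithmetic behind that estimate is short enough to write out. This is a sketch that rearranges the inequality D1 D2 > λR for D1 using the ballpark numbers above. The function names are hypothetical.

```python
import math

def equivalent_width_m(area_m2):
    """Side of a square with the given area, a rough stand-in for the
    width of the receiving antenna."""
    return math.sqrt(area_m2)

def min_transmitter_diameter_m(wavelength_m, range_m, receiver_diameter_m):
    """Smallest D1 satisfying D1 * D2 > wavelength * R."""
    return wavelength_m * range_m / receiver_diameter_m

if __name__ == "__main__":
    # About 1,000 square meters of wing and body area.
    print(f"receiver width: about {equivalent_width_m(1000.0):.0f} m")
    # Wavelength 5 cm, range 100 km, receiver rounded down to 30 m.
    d1 = min_transmitter_diameter_m(0.05, 100e3, 30.0)
    print(f"minimum ground antenna diameter: about {d1:.0f} m")
```

The result is just under 170 meters. It scales linearly with wavelength and range, so halving the station spacing would halve the required diameter.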

Power beaming in the real world

While what I’ve described is theoretically possible, in practice engineers have beamed only a fraction of the amount of power needed for an airliner, and they’ve done that only over much shorter distances.

NASA holds the record from an experiment in 1975, when it beamed 30 kilowatts of power over 1.5 km with a dish the size of a house. To achieve this feat, the team used an analog device called a klystron. The geometry of a klystron causes electrons to oscillate in a way that amplifies microwaves of a particular frequency—kind of like how the geometry of a whistle causes air to oscillate and produce a particular pitch.

Klystrons and their cousins, cavity magnetrons (found in ordinary microwave ovens), are quite efficient because of their simplicity. But their properties depend on their precise geometry, so it’s challenging to coordinate many such devices to focus energy into a tight beam.

In more recent years, advances in semiconductor technology have allowed a single oscillator to drive a large number of solid-state amplifiers in near-perfect phase coordination. This has allowed microwaves to be focused much more tightly than was possible before, enabling more-precise energy transfer over longer distances.

In 2022, the Auckland-based startup Emrod showed just how promising this semiconductor-enabled approach could be. Inside a cavernous hangar in Germany owned by Airbus, the researchers beamed 550 watts across 36 meters and kept over 95 percent of the energy flowing in a tight beam—far better than could be achieved with analog systems. In 2021, the U.S. Naval Research Laboratory showed that these techniques could handle higher power levels when it sent more than a kilowatt between two ground antennas over a kilometer apart. Other researchers have energized drones in the air, and a few groups even intend to use phased arrays to beam solar power from satellites to Earth.

A rectenna for the ages

So beaming energy to airliners might not be entirely crazy. But please remain seated with your seat belts fastened; there’s some turbulence ahead for this idea. A Boeing 737 aircraft at takeoff requires about 30 megawatts—a thousand times as much power as any power-beaming experiment has demonstrated. Scaling up to this level while keeping our airplanes aerodynamic (and flyable) won’t be easy.

Consider the design of the antenna on the plane, which receives the microwaves and converts them to an electric current to power the aircraft. This rectifying antenna, or rectenna, would need to be built onto the underside surfaces of the aircraft with aerodynamics in mind. Power transmission would be greatest when the plane is directly above the ground station and far more limited the rest of the time, when ground stations are far ahead of or behind the plane. At those angles, the beam would reach only the front or rear surfaces of the aircraft, making it especially hard to receive enough power.

With 30 MW blasting onto so small an area, power density will be an issue. If the aircraft is the size of a Boeing 737, the rectenna would have to cram about 25 W into each square centimeter. Because the solid-state elements of the array would be spaced about a half-wavelength (2.5 cm) apart, this translates to about 150 W per element, perilously close to the maximum power density of any solid-state power-conversion device. For comparison, the top mark in the 2016 IEEE/Google Little Box Challenge was about 150 W per cubic inch (less than 10 W per cubic centimeter).
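These power-density figures can be reproduced in a few lines. The sketch below uses only the numbers quoted in this paragraph plus the standard conversion of 16.387064 cubic centimeters per cubic inch. The variable names are hypothetical, and the "implied area" is simply what 30 MW at 25 W per square centimeter works out to, not a measured wing area.

```python
BEAM_POWER_W = 30e6          # takeoff power quoted in the text
DENSITY_W_PER_CM2 = 25.0     # power density quoted in the text
ELEMENT_PITCH_CM = 2.5       # half of the 5 cm wavelength
CM3_PER_IN3 = 16.387064      # exact cubic-inch conversion

# Rectenna area implied by the quoted power and density.
implied_area_m2 = BEAM_POWER_W / DENSITY_W_PER_CM2 / 1e4

# Each element occupies one pitch-by-pitch square of the surface.
watts_per_element = DENSITY_W_PER_CM2 * ELEMENT_PITCH_CM ** 2

# Little Box Challenge benchmark converted to metric units.
little_box_w_per_cm3 = 150.0 / CM3_PER_IN3

if __name__ == "__main__":
    print(f"implied rectenna area: {implied_area_m2:.0f} square meters")
    print(f"power per element: {watts_per_element:.0f} W")
    print(f"Little Box benchmark: {little_box_w_per_cm3:.1f} W per cubic cm")
```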

The rectenna will also have to weigh very little and minimize the disturbance to the airflow over the plane. Compromising the geometry of the rectenna for aerodynamic reasons might lower its efficiency. State-of-the-art power-transfer efficiencies are only about 30 percent, so the rectenna can’t afford to compromise too much.

And all of this equipment will have to work in an electric field of about 7,000 volts per meter—the strength of the power beam. The electric field inside a microwave oven, which is only about a third as strong, can create a corona discharge, or electric arc, between the tines of a metal fork, so just imagine what might happen inside the electronics of the rectenna.

And speaking of microwave ovens, I should mention that, to keep passengers from cooking in their seats, the windows on any beamed-power airplane would surely need the same wire mesh that’s on the doors of microwave ovens—to keep those sizzling fields outside the plane. Birds, however, won’t have that protection.

Fowl flying through our power beam near the ground might encounter a heating of more than 1,000 watts per square meter—stronger than the sun on a hot day. Up higher, the beam will narrow to a focal point with much more heat. But because that focal point would be moving awfully fast and located higher than birds typically fly, any roasted ducks falling from the sky would be rare in both senses of the word. Ray Simpkin, chief science officer at Emrod, told me it’d take “more than 10 minutes to cook a bird” with Emrod’s relatively low-power system.
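The near-ground figure follows from spreading the beam over the face of the transmitter. This sketch assumes the 30 MW leaves a circular, 170-meter-wide ground antenna with perfectly uniform illumination. That is a simplification of mine, and a real array would likely taper its output toward the edges.

```python
import math

BEAM_POWER_W = 30e6        # takeoff power quoted earlier
ANTENNA_DIAMETER_M = 170.0  # ground antenna size estimated earlier

def aperture_flux_w_per_m2(power_w, diameter_m):
    """Average power per unit area over a circular aperture,
    assuming uniform illumination."""
    area_m2 = math.pi * (diameter_m / 2) ** 2
    return power_w / area_m2

if __name__ == "__main__":
    flux = aperture_flux_w_per_m2(BEAM_POWER_W, ANTENNA_DIAMETER_M)
    print(f"flux just above the antenna: about {flux:.0f} W per square meter")
```

The result is a little over 1,300 watts per square meter, consistent with "more than 1,000" and somewhat above peak sunlight at the surface.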

Legal challenges would surely come, though, and not just from the National Audubon Society. Thirty megawatts beamed through the air would be about 10 billion times as strong as typical signals at 5-cm wavelengths (a band currently reserved for amateur radio and satellite communications). Even if the transmitter could successfully put 99 percent of the waves into a tight beam, the 1 percent that’s leaked would still be a hundred million times as strong as approved transmissions today.
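The two ratios in this paragraph are consistent with each other, as a quick calculation shows. The sketch takes the "10 billion times" figure at face value and works backward to the typical signal strength it implies. The decibel conversion is added for readers who think in those units, and the names are hypothetical.

```python
import math

BEAM_W = 30e6           # beamed power quoted in the text
STRENGTH_RATIO = 1e10   # "10 billion times as strong as typical signals"
LEAK_FRACTION = 0.01    # the 1 percent that escapes the tight beam

typical_signal_w = BEAM_W / STRENGTH_RATIO
leaked_w = LEAK_FRACTION * BEAM_W
leak_ratio = leaked_w / typical_signal_w

def to_db(ratio):
    """Express a power ratio in decibels."""
    return 10 * math.log10(ratio)

if __name__ == "__main__":
    print(f"implied typical signal: {typical_signal_w * 1e3:.0f} mW")
    print(f"leaked power: {leaked_w / 1e3:.0f} kW")
    print(f"leak vs. typical signal: {leak_ratio:.0e} ({to_db(leak_ratio):.0f} dB)")
```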

And remember that aviation regulators make us turn off our cellphones during takeoff to quiet radio noise, so imagine what they’ll say about subjecting an entire plane to electromagnetic radiation that’s substantially stronger than that of a microwave oven. All these problems are surmountable, perhaps, but only with some very good engineers (and lawyers).

Compared with the legal obstacles and the engineering hurdles we’d need to overcome in the air, the challenges of building transmitting arrays on the ground, huge as they would have to be, seem modest. The rub is the staggering number of them that would have to be built. Many flights occur over mountainous terrain, producing a line of sight to the horizon that is less than 100 km. So in real-world terrain we’d need more closely spaced transmitters. And for the one-third of airline miles that occur over oceans, we would presumably have to build floating arrays. Clearly, building out the infrastructure would be an undertaking on the scale of the Eisenhower-era U.S. interstate highway system.

Decarbonizing with the world’s largest microwave

People might be able to find workarounds for many of these issues. If the rectenna is too hard to engineer, for example, perhaps designers will find that they don’t have to turn the microwaves back into electricity—there are precedents for using heat to propel airplanes. A sawtooth flight path—with the plane climbing up as it approaches each emitter station and gliding down after it passes by—could help with the power-density and field-of-view issues, as could flying-wing designs, which have much more room for large rectennas. Perhaps using existing municipal airports or putting ground antennas near solar farms could reduce some of the infrastructure cost. And perhaps researchers will find shortcuts to radically streamline phased-array transmitters. Perhaps, perhaps.

To be sure, beamed power for aviation faces many challenges. But less-fanciful options for decarbonizing aviation have their own problems. Battery-powered planes don’t even come close to meeting the needs of commercial airlines. The best rechargeable batteries have about 5 percent of the effective energy density of jet fuel. At that figure, an all-electric airliner would have to fill its entire fuselage with batteries—no room for passengers, sorry—and it’d still barely make it a tenth as far as an ordinary jet. Given that the best batteries have improved by only threefold in the past three decades, it’s safe to say that batteries won’t power commercial air travel as we know it anytime soon.

Hydrogen isn’t much further along, despite early hydrogen-powered flights occurring nearly 40 years ago. And it’s potentially dangerous—enough that some designs for hydrogen planes have included two separate fuselages: one for fuel and one for people to give them more time to get away if the stuff gets explode-y. The same factors that have kept hydrogen cars off the road will probably keep hydrogen planes out of the sky.

Synthetic and biobased jet fuels are probably the most reasonable proposal. They’ll give us aviation just as we know it today, just at a higher cost—perhaps 20 to 50 percent more expensive per ticket. But fuels produced from food crops can be worse for the environment than the fossil fuels they replace, and fuels produced from CO2 and electricity are even less economical. Plus, all combustion fuels could still contribute to contrail formation, which makes up more than half of aviation’s climate impact.

The big problem with the “sane” approach for decarbonizing aviation is that it doesn’t present us with a vision of the future at all. At the very best, we’ll get a more expensive version of the same air travel experience the world has had since the 1970s.

True, beamed power is far less likely to work. But it’s good to examine crazy stuff like this from time to time. Airplanes themselves were a crazy idea when they were first proposed. If we want to clean up the environment and produce a future that actually looks like a future, we might have to take fliers on some unlikely sounding schemes.

-

Tsunenobu Kimoto Leads the Charge in Power Devices

by Willie D. Jones on 23. June 2024. at 18:00

Tsunenobu Kimoto, a professor of electronic science and engineering at Kyoto University, literally wrote the book on silicon carbide technology. Fundamentals of Silicon Carbide Technology, published in 2014, covers properties of SiC materials, processing technology, theory, and analysis of practical devices.

Kimoto, whose silicon carbide research has led to better fabrication techniques, improved the quality of wafers and reduced their defects. His innovations, which made silicon carbide semiconductor devices more efficient and more reliable and thus helped make them commercially viable, have had a significant impact on modern technology.

Tsunenobu Kimoto

Employer: Kyoto University

Title: Professor of electronic science and engineering

Member grade: Fellow

Alma mater: Kyoto University

For his contributions to silicon carbide material and power devices, the IEEE Fellow was honored with this year’s IEEE Andrew S. Grove Award, sponsored by the IEEE Electron Devices Society.

Silicon carbide’s humble beginnings

Decades before a Tesla Model 3 rolled off the assembly line with an SiC inverter, a small cadre of researchers, including Kimoto, foresaw the promise of silicon carbide technology. In obscurity they studied it and refined the techniques for fabricating power transistors with characteristics superior to those of the silicon devices then in mainstream use.

Today MOSFETs and other silicon carbide transistors greatly reduce on-state loss and switching losses in power-conversion systems, such as the inverters in an electric vehicle used to convert the battery’s direct current to the alternating current that drives the motor. Lower switching losses make the vehicles more efficient, reducing the size and weight of their power electronics and improving power-train performance. Silicon carbide–based chargers, which convert alternating current to direct current, provide similar improvements in efficiency.

But those tools didn’t just appear. “We had to first develop basic techniques such as how to dope the material to make n-type and p-type semiconductor crystals,” Kimoto says. N-type crystals’ atomic structures are arranged so that electrons, with their negative charges, move freely through the material’s lattice. Conversely, the atomic arrangement of p-type crystals contains positively charged holes.

Kimoto’s interest in silicon carbide began when he was working on his Ph.D. at Kyoto University in 1990.

“At that time, few people were working on silicon carbide devices,” he says. “And for those who were, the main target for silicon carbide was blue LED.

“There was hardly any interest in silicon carbide power devices, like MOSFETs and Schottky barrier diodes.”

Kimoto began by studying how SiC might be used as the basis of a blue LED. But then he read B. Jayant Baliga’s 1989 paper “Power Semiconductor Device Figure of Merit for High-Frequency Applications” in IEEE Electron Device Letters, and he attended a presentation by Baliga, the 2014 IEEE Medal of Honor recipient, on the topic.

“I was convinced that silicon carbide was very promising for power devices,” Kimoto says. “The problem was that we had no wafers and no substrate material,” without which it was impossible to fabricate the devices commercially.

In order to get silicon carbide power devices, “researchers like myself had to develop basic technology such as how to dope the material to make p-type and n-type crystals,” he says. “There was also the matter of forming high-quality oxides on silicon carbide.” Silicon dioxide is used in a MOSFET to isolate the gate and prevent electrons from flowing into it.

The first challenge Kimoto tackled was producing pure silicon carbide crystals. He decided to start with carborundum, a form of silicon carbide commonly used as an abrasive. Kimoto took some factory waste materials—small crystals of silicon carbide measuring roughly 5 millimeters by 8 mm—and polished them.

He found he had highly doped n-type crystals. But he realized having only highly doped n-type SiC would be of little use in power applications unless he also could produce lightly doped (high purity) n-type and p-type SiC.

Connecting the two material types creates a depletion region straddling the junction where the n-type and p-type sides meet. In this region, the free, mobile charges are lost because of diffusion and recombination with their opposite charges, and an electric field is established that can be exploited to control the flow of charges across the boundary.

By using an established technique, chemical vapor deposition, Kimoto was able to grow high-purity silicon carbide. The technique grows SiC as a layer on a substrate by introducing gases into a reaction chamber.

At the time, silicon carbide, gallium nitride, and zinc selenide were all contenders in the race to produce a practical blue LED. Silicon carbide, Kimoto says, had only one advantage: It was relatively easy to make a silicon carbide p-n junction. Creating p-n junctions was still difficult to do with the other two options.

By the early 1990s, it was starting to become clear that SiC wasn’t going to win the blue-LED sweepstakes, however. The inescapable reality of the laws of physics trumped the SiC researchers’ belief that they could somehow overcome the material’s inherent properties. SiC has what is known as an indirect band gap structure, so when charge carriers are injected, the probability of the charges recombining and emitting photons is low, leading to poor efficiency as a light source.

While the blue-LED quest was making headlines, many low-profile advances were being made using SiC for power devices. By 1993, a team led by Kimoto and Hiroyuki Matsunami demonstrated the first 1,100-volt silicon carbide Schottky diodes, which they described in a paper in IEEE Electron Device Letters. The diodes produced by the team and others yielded fast switching that was not possible with silicon diodes.

“With silicon p-n diodes,” Kimoto says, “we need about a half microsecond for switching. But with a silicon carbide, it takes only 10 nanoseconds.”

The ability to switch devices on and off rapidly makes power supplies and inverters more efficient because they waste less energy as heat. Higher efficiency and less heat also permit designs that are smaller and lighter. That’s a big deal for electric vehicles, where less weight means less energy consumption.
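A rough first-order estimate shows why switching time matters so much. The sketch below uses a common simplification: during each transition, voltage and current overlap for the full switching time, dissipating about half their product. It treats the two quoted switching times as that overlap interval. The 400-volt bus, 10-ampere load, and 20-kilohertz switching frequency are purely hypothetical operating conditions, not values from Kimoto or the article.

```python
def switching_loss_w(switch_time_s, volts=400.0, amps=10.0, freq_hz=20e3):
    """First-order switching loss: roughly 0.5 * V * I * t per transition,
    with two transitions (on and off) per switching cycle.
    The default operating point is hypothetical."""
    energy_per_transition_j = 0.5 * volts * amps * switch_time_s
    return 2 * energy_per_transition_j * freq_hz

if __name__ == "__main__":
    silicon_w = switching_loss_w(0.5e-6)  # about half a microsecond
    sic_w = switching_loss_w(10e-9)       # about 10 nanoseconds
    print(f"silicon: about {silicon_w:.1f} W lost to switching")
    print(f"silicon carbide: about {sic_w:.1f} W lost to switching")
    print(f"ratio: about {silicon_w / sic_w:.0f} to 1")
```

Under this model the loss is proportional to switching time, so the ratio between the two devices does not depend on the assumed voltage, current, or frequency. Real devices also have conduction and recovery losses that this sketch ignores.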

Kimoto’s second breakthrough was identifying which form of the silicon carbide material would be most useful for electronics applications.

“Silicon carbide is a family with many, many brothers,” Kimoto says, noting that more than 100 variants with different silicon-carbon atomic structures exist.

The 6H-type silicon carbide was the default standard phase used by researchers targeting blue LEDs, but Kimoto discovered that the 4H-type has much better properties for power devices, including high electron mobility. Now all silicon carbide power devices and wafer products are made with the 4H-type.

Silicon carbide power devices in electric vehicles can improve energy efficiency by about 10 percent compared with silicon, Kimoto says. In electric trains, he says, the power required to propel the cars can be cut by 30 percent compared with those using silicon-based power devices.

Challenges remain, he acknowledges. Although silicon carbide power transistors are used in Teslas, other EVs, and electric trains, their performance is still far from ideal because of defects present at the silicon dioxide–SiC interface, he says. The interface defects lower the performance and reliability of MOS-based transistors, so Kimoto and others are working to reduce the defects.

A career sparked by semiconductors

Kimoto grew up as an only child in Wakayama, Japan, near Osaka. His parents insisted he study medicine, and they expected him to live with them as an adult. His father was a garment factory worker; his mother was a homemaker. His move to Kyoto to study engineering “disappointed them on both counts,” he says.

His interest in engineering was sparked, he recalls, when he was in junior high school, and Japan and the United States were competing for semiconductor industry supremacy.

At Kyoto University, he earned bachelor’s and master’s degrees in electrical engineering, in 1986 and 1988. After graduating, he took a job at Sumitomo Electric Industries’ R&D center in Itami. He worked with silicon-based materials there but wasn’t satisfied with the center’s research opportunities.

He returned to Kyoto University in 1990 to pursue his doctorate. While studying power electronics and high-temperature devices, he also gained an understanding of material defects, breakdown, mobility, and luminescence.

“My experience working at the company was very valuable, but I didn’t want to go back to industry again,” he says. By the time he earned his doctorate in 1996, the university had hired him as a research associate.

He has been there ever since, turning out innovations that have helped make silicon carbide an indispensable part of modern life.

Growing the silicon carbide community at IEEE

Kimoto joined IEEE in the late 1990s. An active volunteer, he has helped grow the worldwide silicon carbide community.

He is an editor of IEEE Transactions on Electron Devices, and he has served on program committees for conferences including the International Symposium on Power Semiconductor Devices and ICs and the IEEE Workshop on Wide Bandgap Power Devices and Applications.

“Now when we hold a silicon carbide conference, more than 1,000 people gather,” he says. “At IEEE conferences like the International Electron Devices Meeting or ISPSD, we always see several well-attended sessions on silicon carbide power devices because more IEEE members pay attention to this field now.”

-

Video Friday: Morphy Drone

by Evan Ackerman on 21. June 2024. at 18:33

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We also post a weekly calendar of upcoming robotics events for the next few months. Please send us your events for inclusion.

RoboCup 2024: 17–22 July 2024, EINDHOVEN, NETHERLANDS

ICRA@40: 23–26 September 2024, ROTTERDAM, NETHERLANDS

IROS 2024: 14–18 October 2024, ABU DHABI, UAE

ICSR 2024: 23–26 October 2024, ODENSE, DENMARK

Cybathlon 2024: 25–27 October 2024, ZURICH

Enjoy today’s videos!

We present Morphy, a novel compliant and morphologically aware flying robot that integrates sensorized flexible joints in its arms, thus enabling resilient collisions at high speeds and the ability to squeeze through openings narrower than its nominal dimensions.

Morphy represents a new class of soft flying robots that can facilitate unprecedented resilience through innovations both in the “body” and “brain.” The novel soft body can, in turn, enable new avenues for autonomy. Collisions that previously had to be avoided have now become acceptable risks, while areas that are untraversable for a certain robot size can now be negotiated through self-squeezing. These novel bodily interactions with the environment can give rise to new types of embodied intelligence.

[ ARL ]

Thanks, Kostas!

Segments of daily training for robots driven by reinforcement learning. Multiple tests done in advance for friendly service humans. The training includes some extreme tests. Please do not imitate!

[ Unitree ]

Sphero is not only still around, it’s making new STEM robots!

[ Sphero ]

Googly eyes mitigate all robot failures.

[ WVUIRL ]

Here I am, without the ability or equipment (or desire) required to iron anything that I own, and Flexiv’s got robots out there ironing fancy leather car seats.

[ Flexiv ]

Thanks, Noah!

We unveiled a significant leap forward in perception technology for our humanoid robot GR-1. The newly adapted pure-vision solution integrates bird’s-eye view, transformer models, and an occupancy network for precise and efficient environmental perception.

[ Fourier ]

Thanks, Serin!

LimX Dynamics’ humanoid robot CL-1 was launched in December 2023. It climbed stairs based on real-time terrain perception, two steps per stair. Four months later, in April 2024, the second demo video showcased CL-1 in the same scenario. It had advanced to climb the same stair, one step per stair.

[ LimX Dynamics ]

Thanks, Ou Yan!

New research from the University of Massachusetts Amherst shows that programming robots to create their own teams and voluntarily wait for their teammates results in faster task completion, with the potential to improve manufacturing, agriculture, and warehouse automation.

[ HCRL ] via [ UMass Amherst ]

Thanks, Julia!

LASDRA (Large-size Aerial Skeleton with Distributed Rotor Actuation system, ICRA18) is a scalable and modular aerial robot. It can assume a very slender, long, and dexterous form factor and is very lightweight.

[ SNU INRoL ]

We propose augmenting initially passive structures built from simple repeated cells, with novel active units to enable dynamic, shape-changing, and robotic applications. Inspired by metamaterials that can employ mechanisms, we build a framework that allows users to configure cells of this passive structure to allow it to perform complex tasks.

[ CMU ]

Testing autonomous exploration at the Exyn Office using Spot from Boston Dynamics. In this demo, Spot autonomously explores our flight space while on the hunt for one of our engineers.

[ Exyn ]

Meet Heavy Picker, the strongest robot in bulky-waste sorting and an absolute pro at lifting and sorting waste. With skills that would make a concert pianist jealous and a work ethic that never needs coffee breaks, Heavy Picker was on the lookout for new challenges.

[ Zen Robotics ]

AI is the biggest and most consequential business, financial, legal, technological, and cultural story of our time. In this panel, you will hear from the underrepresented community of women scientists who have been leading the AI revolution—from the beginning to now.

[ Stanford HAI ]

-

Honoring the Legacy of Chip Design Innovator Lynn Conway

by Joanna Goodrich on 20. June 2024. at 18:00

Lynn Conway, codeveloper of very-large-scale integration, died on 9 June at the age of 86. The VLSI process, which creates integrated circuits by combining thousands of transistors into a single chip, revolutionized microchip design.

Conway, an IEEE Fellow, was transfeminine and was a transgender-rights activist who played a key role in updating the IEEE Code of Conduct to prohibit discrimination based on sexual orientation, gender identity, and gender expression.

She shared her experiences on a blog to help others considering or beginning to transition their gender identity. She also mentored many trans people through their transitioning.

“Lynn Conway’s example of engineering impact and personal courage has been a great source of inspiration for me and countless others,” Michael Wellman, a professor of computer science and engineering at the University of Michigan in Ann Arbor, told the Michigan Engineering News website. Conway was a professor emerita at the university.

The profile of Conway below is based on an interview The Institute conducted with her in December.

Some engineers dream their pioneering technologies will one day earn them a spot in history books. But what happens when your contributions are overlooked because of your gender identity?

If you’re like Lynn Conway—who faced that dilemma—you fight back.

Conway helped develop very-large-scale integration: the process of creating integrated circuits by combining thousands of transistors into a single chip. VLSI chips are at the core of electronic devices used today. The technology provides processing power, memory, and other functionalities to smartphones, laptops, smartwatches, televisions, and household appliances.

She and her research partner Carver Mead developed VLSI in the 1970s while she was working at Xerox’s Palo Alto Research Center, in California. Mead was an engineering professor at Caltech at the time. For years, Conway’s role was overlooked partly because she was a woman, she asserts, and partly because she was transfeminine.

Since coming out publicly in 1999, Conway has been fighting for her contributions to be recognized, and she’s succeeding. Over the years, the IEEE Fellow has been honored by a variety of organizations, most recently the National Inventors Hall of Fame, which inducted her last year, almost 15 years after it recognized Mead.

From budding physicist to electrical engineer

Conway initially was interested in studying physics because of the role it played in World War II.

“After the war ended, physicists became famous for blowing up the world in order to save it,” she says. “I was naive and saw physics as the source of all wisdom. I went off to MIT, not fully understanding the subject I chose to major in.”

She took many electrical engineering courses because, she says, they allowed her to be creative. It was through those classes that she found her calling.

She left MIT in 1957, then earned bachelor’s and master’s degrees in electrical engineering from Columbia in 1962 and 1963. While at Columbia, she conducted an independent study under the guidance of Herb Schorr, an adjunct professor and a researcher at IBM Research in Yorktown Heights, N.Y. The study involved installing a list-processing language on the IBM 1620 computer, “which was the most arcane machine to attempt to do that on,” she says, laughing. “It was a cool language that Maurice Wilkes from Cambridge had developed to experiment with self-compiling compilers.”

She must have made quite an impression on Schorr, she says, because after she earned her master’s degree, he recruited her to join him at the research center. While working on the advanced computing systems project there, she invented multiple-out-of-order dynamic instruction scheduling, a technique that allows a CPU to reorder instructions based on their availability and readiness instead of following the program order strictly.

That work led to the creation of the superscalar CPU, which manages multiple instruction pipelines to execute several instructions concurrently.
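To make the idea of dynamic instruction scheduling concrete, here is a minimal toy sketch in Python. It is not Conway's design or any real CPU's logic: the `Instr` record, the `schedule` function, the latencies, and the sample program are all hypothetical, and the model assumes each register is written only once (real hardware handles reuse with techniques such as register renaming). Each cycle it issues the earliest waiting instructions whose source operands are ready, instead of stalling behind the first one that is not.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    name: str      # label for the instruction (hypothetical)
    dest: str      # register the instruction writes
    srcs: tuple    # registers the instruction reads
    latency: int   # cycles until the result is available (>= 1)


def schedule(program, issue_width=1):
    """Toy out-of-order issue: return {cycle: [names issued that cycle]}."""
    produced = {ins.dest for ins in program}  # registers written by the program
    ready_at = {}                             # register -> cycle its value is ready
    waiting = list(program)                   # kept in program order
    issued = {}
    cycle = 0
    while waiting:
        picked = []
        for ins in waiting:
            if len(picked) == issue_width:
                break
            # A source is ready if it is an initial input, or if its
            # producer has issued and its result has arrived by now.
            if all(s not in produced or ready_at.get(s, math.inf) <= cycle
                   for s in ins.srcs):
                picked.append(ins)
                ready_at[ins.dest] = cycle + ins.latency
        for ins in picked:
            waiting.remove(ins)
        if picked:
            issued[cycle] = [ins.name for ins in picked]
        cycle += 1
    return issued


program = [
    Instr("load", "r1", ("a",), 3),       # slow memory load
    Instr("add", "r2", ("r1", "r0"), 1),  # must wait for the load
    Instr("mul", "r3", ("r4", "r5"), 2),  # independent of the load
    Instr("sub", "r6", ("r3", "r2"), 1),  # needs both add and mul
]
print(schedule(program))
```

In this sketch the independent `mul` issues in cycle 1 while `add` is still waiting on the load. A strictly in-order machine with the same latencies would hold `mul` back until `add` had issued.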

The company eventually transferred her to its offices in California’s Bay Area.

Although her career was thriving, Conway was struggling with gender dysphoria, the distress people experience when their gender identity differs from their sex assigned at birth. In 1967 she moved forward with gender-affirming care “to resolve the terrible existential situation I had faced since childhood,” she says.

She notified IBM of her intention to transition, with the hope the company would allow her to do so quietly. Instead, IBM fired her, convinced that her transition would cause “extreme emotional distress in fellow employees,” she says. (In 2020 the company issued an apology for terminating her.)

After completing her transition, at the end of 1968 Conway began her career anew as a contract programmer. By 1971 she was working as a computer architect at Memorex in Silicon Valley. She joined the company in what she calls “stealth mode.” No one other than close family members and friends knew she was transfeminine. Conway was afraid of discrimination and losing her job again, she says. Because of her decision to keep her transition a secret, she says, she could not claim credit for the techniques she had invented at IBM Research because they were credited to the name she had been assigned at birth, her “dead name.”

She was recruited in 1975 to join Xerox PARC as a research fellow and manager of its VLSI system design group.

It was there that she made history.

Conway was recruited in 1975 to join Xerox PARC as a research fellow. Lynn Conway

Starting the Mead and Conway Revolution

Concerned with how Moore’s Law would affect the performance of microelectronics, the Advanced Research Projects Agency (now known as the Defense Advanced Research Projects Agency) created a coalition of companies and research universities, including PARC and Caltech, to improve microchip design. After Conway joined PARC’s VLSI system design group, she worked closely with Carver Mead on chip design. Mead, now an IEEE Life Fellow, is credited with coining the term Moore’s Law.

Making chips at the time involved manually designing transistors and connecting them with circuits. The process was time-consuming and error-prone.

“A whole bunch of different pieces of design were being done at different abstraction levels, including the basic architecture, the logic design, the circuit design, and the layout design—all by different people,” Conway said in a 2023 IEEE Annals of the History of Computing interview. “And the various people in the different layers passed the design down in kind of a paternalistic top-down system. The people at any one layer may have no clue what the people at the other levels in that system are doing or what they know.”

Conway and Mead decided the best way to address that communication problem was to use CAD tools to automate the process.

The two also introduced the structured-design method of creating chips. It emphasized high-level abstraction and modular design, working with building blocks such as logic gates and modules, which made the process more efficient and scalable.

Conway also created a simplified set of rules for chip design that enabled the integrated circuits to be numerically encoded, scaled, and reused as Moore’s Law advanced.

The method was so radical, she says, that it needed help catching on. Conway and Mead wrote Introduction to VLSI Systems to take the new concepts straight to the next generation of engineers and programmers. The textbook included the basics of structured designs and how to validate and verify them. Before its publication in 1980, Conway tested how well it explained the method by teaching the first VLSI course in 1978 at MIT.

The textbook was successful, becoming the foundational resource for teaching the technology. By 1983 it was being used by nearly 120 universities.

Conway and Mead’s work resulted in what is known as the Mead and Conway Revolution, enabling faster, smaller, and more powerful devices to be developed.

Throughout the 1980s, Conway and Mead were known as the dynamic duo that created VLSI. They received multiple joint awards including the Electronics magazine 1981 Award for Achievement, the University of Pennsylvania’s 1984 Pender Award, and the Franklin Institute’s 1985 Wetherill Medal.

Conway left Xerox PARC in 1983 to join DARPA as assistant director for strategic computing. She led planning of the strategic computing initiative, an effort to expand the technology base for intelligent-weapons systems.

Two years later she began her academic career at the University of Michigan as a professor of electrical engineering and computer science. She was the university’s associate dean of engineering and taught there until 1998, when she retired.

Becoming an activist

In 1999 Conway decided to come out as a transfeminine engineer, knowing that not only would her previous work be credited to her again, she says, but also that she could be a source of strength and inspiration for others like her.

In the 2000s Conway’s honors began to dry up, while Mead continued to receive awards for VLSI, including a 2002 U.S. National Medal of Technology and Innovation.

After publicly coming out, she spoke openly about her experience and lobbied to be credited for her work.

Some organizations, including IEEE, began to recognize Conway. The IEEE Computer Society awarded her its 2009 Computer Pioneer Award. She received the 2015 IEEE/RSE Maxwell Medal, which honors contributions that had an exceptional impact on the development of electronics and electrical engineering.

-

Apple, Microsoft Shrink AI Models to Improve Them

by Shubham Agarwal on 20. June 2024. at 16:00

Tech companies have been caught up in a race to build the biggest large language models (LLMs). In April, for example, Meta announced the 400-billion-parameter Llama 3, which contains twice as many parameters (the variables that determine how the model responds to queries) as OpenAI’s original ChatGPT model from 2022. Although not confirmed, GPT-4 is estimated to have about 1.8 trillion parameters.

In the last few months, however, some of the largest tech companies, including Apple and Microsoft, have introduced small language models (SLMs). These models are a fraction of the size of their LLM counterparts and yet, on many benchmarks, can match or even outperform them in text generation.

On 10 June, at Apple’s Worldwide Developers Conference, the company announced its “Apple Intelligence” models, which have around 3 billion parameters. And in late April, Microsoft released its Phi-3 family of SLMs, featuring models housing between 3.8 billion and 14 billion parameters.

In a series of tests, the smallest of Microsoft’s models, Phi-3-mini, rivaled OpenAI’s GPT-3.5 (175 billion parameters), which powers the free version of ChatGPT, and outperformed Google’s Gemma (7 billion parameters). The tests evaluated how well a model understands language by prompting it with questions about mathematics, philosophy, law, and more. What’s more interesting, Microsoft’s Phi-3-small, with 7 billion parameters, fared remarkably better than GPT-3.5 in many of these benchmarks.

Aaron Mueller, who researches language models at Northeastern University in Boston, isn’t surprised SLMs can go toe-to-toe with LLMs in select functions. He says that’s because scaling the number of parameters isn’t the only way to improve a model’s performance: Training it on higher-quality data can yield similar results too.

Microsoft’s Phi models were trained on fine-tuned “textbook-quality” data, says Mueller, which have a more consistent style that’s easier to learn from than the highly diverse text from across the Internet that LLMs typically rely on. Similarly, Apple trained its SLMs exclusively on richer and more complex datasets.

The rise of SLMs comes at a time when the performance gap between LLMs is quickly narrowing and tech companies look to deviate from standard scaling laws and explore other avenues for performance upgrades. At an event in April, OpenAI’s CEO Sam Altman said he believes we’re at the end of the era of giant models. “We’ll make them better in other ways.”

Because SLMs don’t consume nearly as much energy as LLMs, they can also run locally on devices like smartphones and laptops (instead of in the cloud) to preserve data privacy and personalize them to each person. In March, Google rolled out Gemini Nano to the company’s Pixel line of smartphones. The SLM can summarize audio recordings and produce smart replies to conversations without an Internet connection. Apple is expected to follow suit later this year.

More importantly, SLMs can democratize access to language models, says Mueller. So far, AI development has been concentrated into the hands of a couple of large companies that can afford to deploy high-end infrastructure, while other, smaller operations and labs have been forced to license them for hefty fees.

Since SLMs can be easily trained on more affordable hardware, says Mueller, they’re more accessible to those with modest resources and yet still capable enough for specific applications.

In addition, while researchers agree there’s still a lot of work ahead to overcome hallucinations, carefully curated SLMs bring them a step closer toward building responsible AI that is also interpretable, which would potentially allow researchers to debug specific LLM issues and fix them at the source.

For researchers like Alex Warstadt, a computer science researcher at ETH Zurich, SLMs could also offer new, fascinating insights into a longstanding scientific question: how children acquire their first language. Warstadt, alongside a group of researchers including Northeastern’s Mueller, organizes BabyLM, a challenge in which participants optimize language-model training on small data.

Not only could SLMs potentially unlock new secrets of human cognition, but they also help improve generative AI. By the time children turn 13, they’re exposed to about 100 million words and are better than chatbots at language, with access to only 0.01 percent of the data. While no one knows what makes humans so much more efficient, says Warstadt, “reverse engineering efficient humanlike learning at small scales could lead to huge improvements when scaled up to LLM scales.”
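As a back-of-the-envelope check on those two figures, the short Python sketch below works out how much text the "0.01 percent" comparison implies a chatbot sees. The variable names are illustrative, and the result is only what the quoted numbers imply, not a measured training-set size for any particular model.

```python
from fractions import Fraction

# Figures quoted in the text above.
child_words = 100_000_000           # words a child is exposed to by age 13
child_share = Fraction(1, 10_000)   # 0.01 percent, written as a fraction

# If the child's exposure is 0.01 percent of the chatbot's data,
# the chatbot's data is the child's exposure divided by that share.
implied_chatbot_words = child_words / child_share

print(f"Implied chatbot training text: {int(implied_chatbot_words):,} words")
```

Under those assumptions the implied figure is on the order of a trillion words, or 10,000 times what the child hears and reads.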

-

Vodafone Launches Private 5G Tech to Compete With Wi-Fi

by Margo Anderson on 20. June 2024. at 15:01

As the world’s 5G rollout continues with its predictable fits and starts, the cellular technology is also starting to move into a space already dominated by another wireless tech: Wi-Fi. Private 5G networks, in which a person or company sets up their own facility-wide cellular network, are today finding applications where Wi-Fi was once the only viable game in town. This month, the Newbury, England–based telecom company Vodafone is releasing a Raspberry Pi–based private 5G base station that is aimed at developers, who might then jump-start a wave of private 5G innovation.

“The Raspberry Pi is the most affordable CPU[-based] computer that you can get,” says Santiago Tenorio, network architecture director at Vodafone. “Which means that what we build, in essence, has a similar bill of materials as a good quality Wi-Fi router.”

The company has teamed with the Surrey, England–based Lime Microsystems to release a crowd-funded range of private 5G base-station kits ranging in price from US $800 to $12,000.

“In a Raspberry Pi—in this case, a Raspberry Pi 4 is what we used—then you can be sure you can run that anywhere, because it’s the tiniest processor that you can have,” Tenorio says.

Santiago Tenorio holds one of Lime Microsystems’ private 5G base-station kits. Vodafone

Private 5G for Drones and Bakeries

There are a range of reasons, Tenorio says, why someone might want their own private 5G network. At the moment, the scenarios mostly concern companies and organizations—although individual expert users could still be drawn to, for instance, 5G’s relatively low latency and network flexibility.

Tenorio highlighted security and mobility as two big selling points for private 5G.

A commercial storefront business, for instance, might be attracted to the extra security protections that a SIM card can provide compared to password-based wireless network security. Each SIM card contains its own unique identifier and encryption keys, which enables a network to recognize and authorize each individual connection. That, Tenorio says, makes private 5G network security a considerable selling point.

Plus, Tenorio says, it’s simpler for customers to access. Envisioning a use case of a bakery with its own privately deployed 5G network, he says, “You don’t need a password. You don’t need a conversation [with a clerk behind a counter] or a QR code. You simply walk into the bakery, and you are into the bakery’s network.”

As to mobility, Tenorio suggests one emergency relief and rescue application that might rely on the presence of a nearby 5G station that causes devices in its range to ping.

Setting up a private 5G base station on a drone, Tenorio says, would enable that drone to fly over a disaster area and, via its airborne network, send a challenge signal to all devices in its coverage area to report in. Any device receiving that signal with a compatible SIM card then responds with its unique identification information.

“Then any phone would try to register,” Tenorio says. “And then you would know if there is someone [there].”

Not only that, but because the ping would be from a device with a SIM card, the private 5G rescue drone in the above scenario could potentially provide crucial information about each individual, just based on the device’s identifier alone. And that user-identifying feature of private 5G isn’t exactly irrelevant to a bakery owner—or to any other commercial customer—either, Tenorio says.

“If you are a bakery,” he says, “you could actually know who your customers are, because anyone walking into the bakery would register on your network and would leave their [International Mobile Subscriber Identity].”

Winning the Lag Race

According to Christian Wietfeld, professor of electrical engineering at the Technical University of Dortmund in Germany, private 5G networks also bring the possibility of less lag. His team has tested private 5G deployments (although, Wietfeld says, not yet the present Vodafone/Lime Microsystems base station) and has found private 5G to provide reliably better connectivity.

Wietfeld’s team will present their research at the IEEE International Symposium on Personal, Indoor and Mobile Radio Communications in September in Valencia, Spain. They found that, in networks with high loads, private 5G can deliver connections up to 10 times as fast as Wi-Fi (the IEEE 802.11 wireless standard).

“The additional cost and effort to operate a private 5G network pays off in lower downtimes of production and less delays in delivery of goods,” Wietfeld says. “Also, for safety-critical use cases such as on-campus teleoperated driving, private 5G networks provide the necessary reliability and predictability of performance.”

For Lime Microsystems, according to the company’s CEO and founder Ebrahim Bushehri, the challenge comes in developing a private 5G base station that maximizes versatility and openness to whatever kinds of applications developers could envision, while still being reasonably inexpensive and retaining a low-power envelope.

“The solution had to be ultraportable and with an optional battery pack which could be mounted on drones and autonomous robots, for remote and tactical deployments, such as emergency-response scenarios and temporary events,” Bushehri says.

Meanwhile, the crowdfunding behind the device’s rollout, via the website Crowd Supply, allows both companies to keep tabs on the kinds of applications the developer community is envisioning for this technology, he says.

“Crowdfunding,” Bushehri says, “is one of the key indicators of community interest and engagement. Hence the reason for launching the campaign on Crowd Supply to get feedback from early adopters.”

-

IEEE Educational Video for Kids Spotlights Climate Change

by Robert Schneider on 19. June 2024. at 18:00

When it comes to addressing climate change, the “in unity there’s strength” adage certainly applies.

To support IEEE’s climate change initiative, which highlights innovative solutions and approaches to the climate crisis, IEEE’s TryEngineering program has created a collection of lesson plans, activities, and events that cover electric vehicles, solar and wind power systems, and more.

TryEngineering, a program within IEEE Educational Activities, aims to foster the next generation of technology innovators by providing preuniversity educators and students with resources.

To help bring the climate collection to more students, TryEngineering has partnered with the Museum of Science in Boston. The museum, one of the world’s largest science centers, reaches nearly 5 million people annually through its physical location, nearby classrooms, and online platforms.

TryEngineering worked with the museum to distribute a nearly four-minute educational video created by Moment Factory, a multimedia studio specializing in immersive experiences. Using age-appropriate language, the video, which is posted on TryEngineering’s climate change page, explores the issue through visual models and scientific explanations.

“Since the industrial revolution, humans have been digging up fossil fuels and burning them, which releases CO2 into the atmosphere in unprecedented quantities,” the video says. It notes that in the past 60 years, atmospheric carbon dioxide increased at a rate 100 times faster than previous natural changes.

The video explains the impact of pollutants such as lead and ash, and it adds that “when we work together, we can change the global environment.” The video encourages students to contribute to a global solution by making small, personal changes.

“We’re thrilled to contribute to the IEEE climate change initiative by providing IEEE volunteers and educators access to TryEngineering’s collection, so they have resources to use with students,” says Debra Gulick, director of IEEE student and academic education programs.

“We are excited to partner with the Museum of Science to bring even more awareness and exposure of this important issue to the school setting,” Gulick says. “Working with prominent partners like the museum, we are committed to energizing students around important issues like climate change and helping them understand how engineering can make a difference.”

-

For EVs, Semi-Solid-State Batteries Offer a Step Forward

by Willie D. Jones on 19. June 2024. at 16:00

Earlier this month, China announced that it is pouring 6 billion yuan (about US $826 million) into a fund meant to spur the development of solid-state batteries by the nation’s leading battery manufacturers. Solid-state batteries use electrolytes of either glass, ceramic, or solid polymer material instead of the liquid lithium salts that are in the vast majority of today’s electric vehicle (EV) batteries. They’re greatly anticipated because they will have three or four times as much energy density as batteries with liquid electrolytes, offer more charge-discharge cycles over their lifetimes, and be far less susceptible to the thermal runaway reaction that occasionally causes lithium batteries to catch fire.

But China’s investment in the future of batteries won’t likely speed up the timetable for mass production and use in production vehicles. As IEEE Spectrum pointed out in January, it’s not realistic to look for solid-state batteries in production vehicles anytime soon. Experts Spectrum consulted at the time “noted a pointed skepticism toward the technical merits of these announcements. None could isolate anything on the horizon indicating that solid-state technology can escape the engineering and ‘production hell’ that lies ahead.”

Reaching scale production of solid-state batteries for EVs will first require validating existing solid-state battery technologies—now being used for other, less demanding applications—in terms of performance, life-span, and relative cost for vehicle propulsion. Researchers must still determine how those batteries take and hold a charge and deliver power as they age. They’ll also need to provide proof that a glass or ceramic battery can stand up to the jarring that comes with driving on bumpy roads and certify that it can withstand the occasional fender bender.

Here Come Semi-Solid-State Batteries

Meanwhile, as the world waits for solid electrolytes to shove liquids aside, Chinese EV manufacturer Nio and battery maker WeLion New Energy Technology Co. have partnered to stake a claim on the market for a third option that splits the difference: semi-solid-state batteries, with gel electrolytes.

CarNewsChina.com reported in April that the WeLion cells have an energy density of 360 watt-hours per kilogram. Fully packaged, the battery’s density rating is 260 Wh/kg. That’s still a significant improvement over lithium iron phosphate batteries, whose density tops out at 160 Wh/kg. In tests conducted last month with Nio’s EVs in Shanghai, Chengdu, and several other cities, the WeLion battery packs delivered more than 1,000 kilometers of driving range on a single charge. Nio says it plans to roll out the new battery type across its vehicle lineup beginning this month.
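The density figures in that report can be compared with a few lines of arithmetic. The Python sketch below uses only the three numbers quoted above. The 100-kilowatt-hour pack is a hypothetical size chosen for illustration, not a Nio specification, and the sketch assumes the 160 Wh/kg lithium iron phosphate figure is comparable to the pack-level rating, which the report does not state.

```python
# Energy-density figures quoted in the text, in watt-hours per kilogram.
CELL_WH_PER_KG = 360   # WeLion cell
PACK_WH_PER_KG = 260   # WeLion battery, fully packaged
LFP_WH_PER_KG = 160    # upper end for lithium iron phosphate batteries

# Share of cell-level density that survives packaging.
packaging_retention = PACK_WH_PER_KG / CELL_WH_PER_KG

# Pack-level advantage over lithium iron phosphate.
gain_over_lfp = PACK_WH_PER_KG / LFP_WH_PER_KG


def pack_mass_kg(energy_kwh, wh_per_kg):
    """Mass of a battery storing energy_kwh at the given density."""
    return energy_kwh * 1000 / wh_per_kg


HYPOTHETICAL_PACK_KWH = 100  # illustrative size only, not from the source

print(f"Packaging retains {packaging_retention:.0%} of cell density")
print(f"Pack density is {gain_over_lfp:.3f} times the LFP figure")
print(f"Semi-solid-state: {pack_mass_kg(HYPOTHETICAL_PACK_KWH, PACK_WH_PER_KG):.0f} kg")
print(f"LFP:              {pack_mass_kg(HYPOTHETICAL_PACK_KWH, LFP_WH_PER_KG):.0f} kg")
```

On those assumptions, the same stored energy weighs roughly 385 kg in the semi-solid-state pack versus 625 kg at the lithium iron phosphate figure.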

But the Beijing government’s largesse and the Nio-WeLion partnership’s attempt to be first to get semi-solid-state batteries into production vehicles shouldn’t be a temptation to call the EV propulsion game prematurely in China’s favor.

So says Steve W. Martin, a professor of materials science and engineering at Iowa State University, in Ames. Martin, whose research areas include glassy solid electrolytes for solid-state lithium batteries and high-capacity reversible anodes for lithium batteries, believes that solid-state batteries are the future and that hybrid semi-solid batteries will likely be a transition between liquid and solid-state batteries. However, he says, “to state at this point that any one battery and any one country’s investments in battery R&D will dominate in the future is simply incorrect.” Martin explains that “there are too many different kinds of solid-state batteries being developed right now and no one of these has a clear technological lead.”

The Advantages of Semi-Solid-State Batteries

The main innovation that gives semi-solid-state batteries an advantage over conventional batteries is the semisolid electrolyte from which they get their name. The gel electrolyte contains ionic conductors such as lithium salts just as liquid electrolytes do, but the way they are suspended in the gel matrix supports much more efficient ion conductivity. Enhanced transport of ions from one side of the battery to the other boosts the flow of current in the opposite direction, which completes the circuit. This is important during the charging phase because the process happens more rapidly than it can in a battery with a liquid electrolyte. The gel’s structure also resists the formation of dendrites, the needlelike structures that can form on the anode during charging and cause short circuits. Additionally, gels are less volatile than liquid electrolytes and are therefore less prone to catching fire.

Though semi-solid-state batteries won’t reach the energy densities and life-spans that are expected from those with solid electrolytes, they’re at an advantage in the short term because they can be made on conventional lithium-ion battery production lines. Just as important, they have been tested and are available now rather than at some as yet unknown date.

Several companies besides WeLion are actively developing semi-solid-state batteries. China’s prominent battery manufacturers, including CATL, BYD, and the state-owned automakers FAW Group and SAIC Group, are, like WeLion, beneficiaries of Beijing’s plans to advance next-generation battery technology domestically. Separately, the startup Farasis Energy, founded in Ganzhou, China, in 2009, is collaborating with Mercedes-Benz to commercialize advanced batteries.

The Road Forward to Solid-State Batteries

U.S. startup QuantumScape says the solid-state lithium metal batteries it’s developing will offer energy density of around 400 Wh/kg. The company notes that its cells eliminate the charging bottleneck that occurs in conventional lithium-ion cells, where lithium must diffuse into the carbon particles. QuantumScape’s advanced batteries will therefore allow fast charging from 10 to 80 percent in 15 minutes. That’s a ways off, but the Silicon Valley–based company announced in March that it had begun shipping its prototype Alpha-2 semi-solid-state cells to manufacturers for testing.
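To get a feel for what a 10-to-80-percent charge in 15 minutes demands, the Python sketch below converts that claim into an average charging rate. The function names and the 100-kilowatt-hour pack are hypothetical, and the calculation ignores charging losses and the tapering of current that real chargers apply, so treat the result as a rough lower bound on the power required.

```python
def average_c_rate(start_percent, end_percent, minutes):
    """Average charge rate in multiples of capacity per hour (C)."""
    fraction_added = (end_percent - start_percent) / 100
    hours = minutes / 60
    return fraction_added / hours


def average_power_kw(capacity_kwh, c_rate):
    """Average power needed to sustain c_rate on a pack of capacity_kwh."""
    return capacity_kwh * c_rate


# The fast-charging claim quoted in the text.
c_rate = average_c_rate(10, 80, 15)

HYPOTHETICAL_PACK_KWH = 100  # illustrative size only, not from the source

print(f"Average charge rate: {c_rate:.1f}C")
print(f"Average power for a {HYPOTHETICAL_PACK_KWH} kWh pack: "
      f"{average_power_kw(HYPOTHETICAL_PACK_KWH, c_rate):.0f} kW")
```

Adding 70 percent of capacity in a quarter of an hour works out to an average of about 2.8C, or roughly 280 kW for the hypothetical 100 kWh pack.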

Toyota is among a group of companies not looking to hedge their bets. The automaker, ignoring naysayers, aims to commercialize solid-state batteries by 2027 that it says will give an EV a range of 1,200 km on a single charge and allow 10-minute fast charging. It attributes its optimism to breakthroughs addressing durability issues. And for companies like Solid Power, it’s also solid-state or bust. Solid Power, which aims to commercialize a lithium battery with a proprietary sulfide-based solid electrolyte, has partnered with major automakers Ford and BMW. ProLogium Technology, which is also forging ahead with preparations for a solid-state battery rollout, claims that it will start delivering batteries this year that combine a ceramic oxide electrolyte with a lithium-free soft cathode (for energy density exceeding 500 Wh/kg). The company, which has teamed up with Mercedes-Benz, demonstrated confidence in its timetable by opening the world’s first giga-level solid-state lithium ceramic battery factory earlier this year in Taoyuan, Taiwan.

-

Here’s the Most Buglike Robot Bug Yet

by Evan Ackerman on 19. June 2024. at 11:00

Insects have long been an inspiration for robots. The insect world is full of things that are tiny, fully autonomous, highly mobile, energy efficient, multimodal, self-repairing, and I could go on and on but you get the idea—insects are both an inspiration and a source of frustration to roboticists because it’s so hard to get robots to have anywhere close to insect capability.

We’re definitely making progress, though. In a paper published last month in IEEE Robotics and Automation Letters, roboticists from Shanghai Jiao Tong University demonstrated the most buglike robotic bug I think I’ve ever seen.

Okay so it may not look the most buglike, but it can do many very buggy bug things, including crawling, taking off horizontally, flying around (with six degrees of freedom control), hovering, landing, and self-righting if necessary. JT-fly weighs about 35 grams and has a wingspan of 33 centimeters, using four wings at once to fly at up to 5 meters per second and six legs to scurry at 0.3 m/s. Its 380 milliampere-hour battery powers it for an actually somewhat useful 8-ish minutes of flying and about 60 minutes of crawling.

While that amount of endurance may not sound like a lot, robots like these aren’t necessarily intended to be moving continuously. Rather, they move a little bit, find a nice safe perch, and then do some sensing or whatever until you ask them to move to a new spot. Ideally, most of that movement would be crawling, but having the option to fly makes JT-fly exponentially more useful.

Or, potentially more useful, because obviously this is still very much a research project. It does seem like there’s a bunch more optimization that could be done here. For example, JT-fly uses completely separate systems for flying and crawling, with two motors powering the legs and two additional motors powering the wings—plus two wing servos for control. There’s currently a limited amount of onboard autonomy, with an inertial measurement unit, barometer, and wireless communication, but otherwise not much in the way of useful payload.

It won’t surprise you to learn that the researchers have disaster-relief applications in mind for this robot, suggesting that “after natural disasters such as earthquakes and mudslides, roads and buildings will be severely damaged, and in these scenarios, JT-fly can rely on its flight ability to quickly deploy into the mission area.” One day, robots like these will actually be deployed for disaster relief, and although that day is not today, we’re just a little bit closer than we were before.

“A Multi-Modal Tailless Flapping-Wing Robot Capable of Flying, Crawling, Self-Righting and Horizontal Takeoff,” by Chaofeng Wu, Yiming Xiao, Jiaxin Zhao, Jiawang Mou, Feng Cui, and Wu Liu from Shanghai Jiao Tong University, is published in the May issue of IEEE Robotics and Automation Letters.

-

Could Advanced Nuclear Reactors Fuel Terrorist Bombs?

by Glenn Zorpette on 18. June 2024. at 19:21

Various scenarios for getting to net zero carbon emissions from power generation by 2050 hinge on the success of some hugely ambitious initiatives in renewable energy, grid enhancements, and other areas. Perhaps none of these are more audacious than an envisioned renaissance of nuclear power, driven by advanced-technology reactors that are smaller than traditional nuclear power reactors.

What many of these reactors have in common is that they would use a kind of fuel called high-assay low-enriched uranium (HALEU). Its composition varies, but for power generation, a typical mix contains slightly less than 20 percent by mass of the highly fissionable isotope uranium-235 (U-235). That’s in contrast to traditional reactor fuels, which range from 3 percent to 5 percent U-235 by mass, and natural uranium, which is just 0.7 percent U-235.
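The enrichment levels quoted above are easier to compare as ratios. The Python sketch below is plain percentage arithmetic on those figures. It treats 20 percent as the upper bound for HALEU (the text says a typical mix is slightly below that), and the dictionary labels are illustrative names rather than official fuel categories.

```python
# U-235 content by mass, in percent, as quoted in the text.
ENRICHMENT_PERCENT = {
    "natural uranium": 0.7,
    "traditional fuel (low end)": 3.0,
    "traditional fuel (high end)": 5.0,
    "HALEU (upper bound)": 20.0,  # typical mixes are slightly below this
}


def u235_grams_per_kg(percent):
    """Grams of U-235 in one kilogram of uranium at this enrichment."""
    return percent / 100 * 1000


def enrichment_ratio(percent, baseline_percent):
    """How many times richer in U-235 one material is than another."""
    return percent / baseline_percent


haleu = ENRICHMENT_PERCENT["HALEU (upper bound)"]

for label, percent in ENRICHMENT_PERCENT.items():
    print(f"{label}: about {u235_grams_per_kg(percent):.0f} g of U-235 per kg, "
          f"HALEU bound is {enrichment_ratio(haleu, percent):.1f}x this")
```

On these figures, HALEU at its upper bound carries at least four times the U-235 fraction of traditional reactor fuel and nearly 29 times that of natural uranium.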

Now, though, a paper in Science magazine has identified a significant wrinkle in this nuclear option: HALEU fuel can theoretically be used to make a fission bomb—a fact that the paper’s authors use to argue for the tightening of regulations governing access to, and transportation of, the material. Among the five authors of the paper, which is titled “The Weapons Potential of High-Assay Low-Enriched Uranium,” is IEEE Life Fellow Richard L. Garwin. Garwin was the key figure behind the design of the thermonuclear bomb, which was tested in 1952.

The Science paper is not the first to argue for a reevaluation of the nuclear proliferation risks of HALEU fuel. A report published last year by the National Academies, “Merits and Viability of Different Nuclear Fuel Cycles and Technology Options and the Waste Aspects of Advanced Nuclear Reactors,” devoted most of a chapter to the risks of HALEU fuel. It reached similar technical conclusions to those of the Science article, but did not go as far in its recommendations regarding the need to tighten regulations.

Why is HALEU fuel concerning?

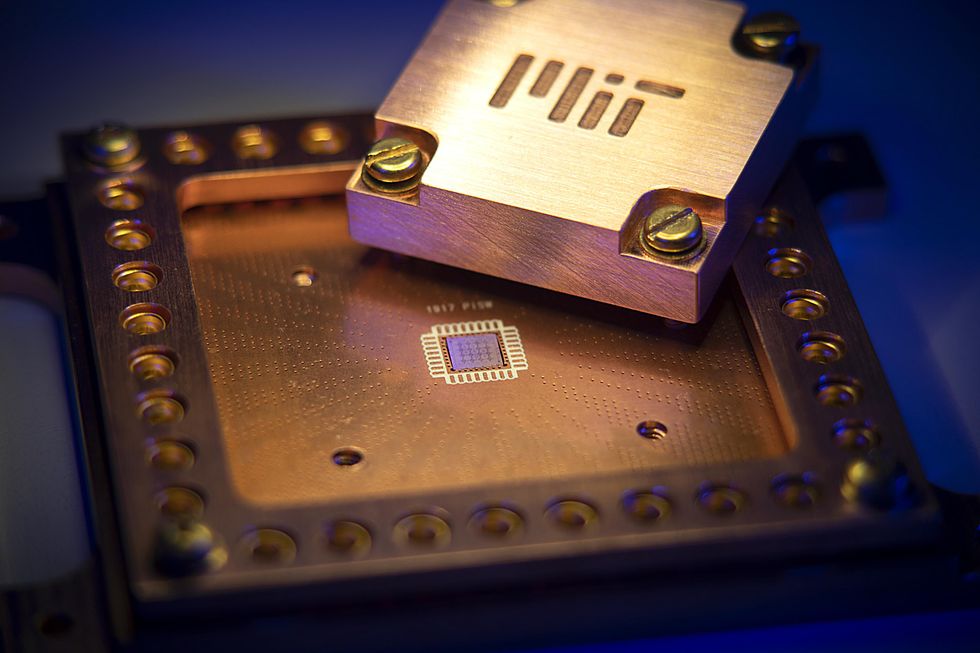

Conventional wisdom had it that U-235 concentrations below 20 percent were not usable for a bomb. But “we found this testimony in 1984 from the chief of the theoretical division of Los Alamos, who basically confirmed that, yes, indeed, it is usable down to 10 percent,” says R. Scott Kemp of MIT, another of the paper’s authors. “So you don’t even need centrifuges, and that’s what really is important here.”

Centrifuges arranged very painstakingly into cascades are the standard means of enriching uranium to bomb-grade material, and they require scarce and costly resources, expertise, and materials to operate. In fact, the difficulty of building and operating such cascades on an industrial scale has for decades served as an effective barrier to would-be builders of nuclear weapons. So any route to a nuclear weapon that bypassed enrichment would offer an undoubtedly easier alternative. The question now is, how much easier?

Adding urgency to that question is an anticipated gold rush in HALEU, after years of quiet U.S. government support. The U.S. Department of Energy is spending billions to expand production of the fuel, including US $150 million awarded in 2022 to a subsidiary of Centrus Energy Corp., the only private company in the United States enriching uranium to HALEU concentrations. (Outside of the United States, only Russia and China are producing HALEU in substantial quantities.) Government support also extends to the companies building the reactors that will use HALEU. Two of the largest reactor startups, TerraPower (backed in part by Bill Gates) and X-Energy, have designed reactors that run on forms of HALEU fuel, and have received billions in funding under a DOE program called Advanced Reactor Demonstration Projects.

The difficulty of building a bomb based on HALEU is a murky subject, because many of the specific techniques and practices of nuclear weapons design are classified. But basic information about the standard type of fission weapon, known as an implosion device, has long been known publicly. (The first two implosion devices were detonated in 1945, one in the Trinity test and the other over Nagasaki, Japan.) An implosion device is based on a hollow sphere of nuclear material. In a modern weapon this material is typically plutonium-239, but it can also be a mixture of uranium isotopes that includes a percentage of U-235 ranging from 100 percent all the way down to, apparently, around 10 percent. The sphere is surrounded by shaped chemical explosives that are exploded simultaneously, creating a shockwave that physically compresses the sphere, reducing the distance between its atoms and increasing the likelihood that neutrons emitted from their nuclei will encounter other nuclei and split them, releasing more neutrons. As the sphere shrinks it goes from a subcritical state, in which that chain reaction of neutrons splitting nuclei and creating other neutrons cannot sustain itself, to a critical state, in which it can. As the sphere continues to compress it achieves supercriticality, after which an injected flood of neutrons triggers the superfast, runaway chain reaction that is a fission explosion. All this happens in less than a millisecond.

The authors of the Science paper had to walk a fine line, withholding sensitive details about weapons design while still clearly indicating the scope of the challenge of building a bomb based on HALEU. They acknowledge that the amount of HALEU material needed for a 15-kiloton bomb (roughly as powerful as the one that destroyed Hiroshima during the Second World War) would be relatively large: in the hundreds of kilograms, but not more than 1,000 kg. For comparison, about 8 kg of Pu-239 is sufficient to build a fission bomb of modest sophistication. Any HALEU bomb would be commensurately larger, but still small enough to be deliverable “using an airplane, a delivery van, or a boat sailed into a city harbor,” the authors wrote.

They also acknowledged a key technical challenge for any would-be weapons makers seeking to use HALEU to make a bomb: preinitiation. The large amount of U-238 in the material would produce many neutrons, which would likely result in a nuclear chain reaction occurring too soon. That would sap energy from the subsequent triggered runaway chain reaction, limiting the explosive yield and producing what’s known in the nuclear bomb business as a “fizzle.” However, “although preinitiation may have a bigger impact on some designs than others, even those that are sensitive to it could still produce devastating explosive power,” the authors conclude.

In other words, “it’s not a very good bomb, but it could explode and wreak all kinds of havoc,” says John Lee, professor emeritus of nuclear engineering at the University of Michigan. Lee was a contributor to the 2023 National Academies report that also considered risks of HALEU fuel and made policy recommendations similar to those of the Science paper.

Critics of that paper argue that the challenges of building a HALEU bomb, while not insurmountable, would stymie a nonstate group. And a national weapons program, which would likely have the resources to surmount them, would not be interested in such a bomb, because of its limitations and relative unreliability.

“That’s why the IAEA [International Atomic Energy Agency], in their wisdom, said, ‘This is not a direct-use material,’” says Steven Nesbit, a nuclear-engineering consultant and past president of the American Nuclear Society, a professional organization. “It’s just not a realistic pathway to a nuclear weapon.”

The Science authors conclude their paper by recommending that the U.S. Congress direct the DOE’s National Nuclear Security Administration (NNSA) to conduct a “fresh review” of the risks posed by HALEU fuel. In response to an email inquiry from IEEE Spectrum, an NNSA spokesman, Craig Branson, replied: “To meet net-zero emissions goals, the United States has prioritized the design, development, and deployment of advanced nuclear technologies, including advanced and small modular reactors. Many will rely on HALEU to achieve smaller designs, longer operating cycles, and increased efficiencies over current technologies. They will be essential to our efforts to decarbonize while meeting growing energy demand. As these technologies move forward, the Department of Energy and NNSA have programs to work with willing industrial partners to assess the risk and enhance the safety, security, and safeguards of their designs.”

The Science authors also called on the U.S. Nuclear Regulatory Commission (NRC) and the IAEA to change the way they categorize HALEU fuel. Under the NRC’s current categorization, even large quantities of HALEU are now considered category II, which means that security measures focus on the early detection of theft. The authors want weapons-relevant quantities of HALEU reclassified as category I, the same as for quantities of weapons-grade plutonium or highly enriched uranium sufficient to make a bomb. Category I would require much tighter security, focusing on the prevention of theft.

Nesbit scoffs at the proposal, citing the difficulties of heisting perhaps a metric tonne of nuclear material. “Blindly applying all of the baggage that goes with protecting nuclear weapons to something like this is just way overboard,” he says.

But Lee, who performed experiments with HALEU fuel in the 1980s, agrees with his colleagues. “Dick Garwin and Frank von Hippel [and the other authors of the Science paper] have raised some proper questions,” he declares. “They’re saying the NRC should take more precautions. I’m all for that.”

-

Autonomous Vehicles Are Great at Driving Straight

by Matthew S. Smith on 18. June 2024. at 16:10

Autonomous vehicles (AVs) have made headlines in recent months, though often for all the wrong reasons. Cruise, Waymo, and Tesla are all under U.S. federal investigation for a variety of accidents, some of which caused serious injury or death.

A new paper published in Nature puts numbers to the problem. Its authors analyzed over 37,000 accidents involving autonomous and human-driven vehicles to gauge risk across several accident scenarios. The paper reports AVs were generally less prone to accidents than those driven by humans, but significantly underperformed humans in some situations.

“The conclusion may not be surprising given the technological context,” said Shengxuan Ding, an author on the paper. “However, challenges remain under specific conditions, necessitating advanced algorithms and sensors and updates to infrastructure to effectively support AV technology.”

The paper, authored by two researchers at the University of Central Florida, analyzed data from 2,100 accidents involving advanced driving systems (SAE Level 4) and advanced driver-assistance systems (SAE Level 2) alongside 35,113 accidents involving human-driven vehicles. The study pulled from publicly available data on human-driven vehicle accidents in the state of California and the AVOID autonomous vehicle operation incident dataset, which the authors made public last year.

While the breadth of the paper’s data is significant, the paper’s “matched case-control analysis” is what sets it apart. Autonomous and human-driven vehicles tend to encounter different roads in different conditions, which can skew accident data. The paper categorizes risks by the variables surrounding the accident, such as whether the vehicle was moving straight or turning, and the conditions of the road and weather.
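To make the idea of a matched comparison concrete, here is a minimal Python sketch. It is not the paper's actual model. It assumes the simplest textbook setup, in which each AV accident is paired one-to-one with a human-driven accident that occurred under similar conditions, and the odds ratio is estimated only from the pairs that disagree. The function name and every count below are hypothetical, chosen purely for illustration.

```python
from collections import Counter


def matched_pair_odds_ratio(pairs):
    """Estimate an odds ratio from 1:1 matched pairs.

    Each pair is (av_flag, human_flag): whether the AV accident and its
    matched human-driven accident had the characteristic of interest
    (for example, "happened during a turn").
    Only discordant pairs, where the two flags differ, carry information.
    """
    counts = Counter(pairs)
    av_only = counts[(True, False)]     # AV accident had it, matched one did not
    human_only = counts[(False, True)]  # matched accident had it, AV one did not
    if human_only == 0:
        raise ValueError("no discordant pairs of the second kind; ratio undefined")
    return av_only / human_only


# Hypothetical, invented data: 12 + 6 discordant pairs, 30 concordant pairs.
pairs = (
    [(True, False)] * 12
    + [(False, True)] * 6
    + [(True, True)] * 10
    + [(False, False)] * 20
)
print(matched_pair_odds_ratio(pairs))
```

Because the pairs are matched on road and weather conditions, differences in where and when the two kinds of vehicles drive drop out of the estimate, which is the point of the design.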

SAE Level 4 self-driving vehicles (those capable of full self-driving without a human at the wheel) performed especially well by several metrics. They were roughly 36 percent less likely to be involved in moderate-injury accidents and 90 percent less likely to be involved in a fatal accident. Compared to human-driven vehicles, the risk of rear-end collision was roughly halved, and the risk of a broadside collision was roughly one-fifth. Level 4 AVs were close to one-fiftieth as likely to run off the road.

The paper’s findings are generally favorable for Level 4 AVs, but they perform worse in turns, and at dawn and dusk. Nature

These figures look good for AVs. However, Missy Cummings, director of George Mason University’s Autonomy and Robotics Center and former safety advisor for the National Highway Traffic Safety Administration, was skeptical of the findings.

“The ground rules should be that when you analyze AV accidents, you cannot combine accidents with self-driving cars [SAE Level 4] with the accidents of Teslas [SAE Level 2],” said Cummings. She took issue with discussing them in tandem and pointed out that these categories of vehicles operate differently, so much so that Level 4 AVs aren’t legal in every state, while Level 2 AVs are.

Mohamed Abdel-Aty, an author on the paper and director of the Smart & Safe Transportation Lab at the University of Central Florida, said that while the paper touches on both levels of autonomy, the focus was on Level 4 autonomy. “The model which is the main contribution to this research compared only level 4 to human-driven vehicles,” he said.

And while many findings were generally positive, the authors highlighted two significant negative outcomes for Level 4 AVs. They found that these vehicles were over five times more likely to be involved in an accident at dawn and dusk. The vehicles were also relatively bad at navigating turns, with the odds of an accident during a turn almost doubled compared to those for human-driven vehicles.
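The figures quoted in this article mix two ways of saying the same thing: a ratio relative to human-driven vehicles ("roughly halved," "five times") and a percent change ("36 percent less likely"). The short Python sketch below converts one to the other. The ratios are approximations read off the article's wording, not values taken from the paper's tables, and the function name is hypothetical.

```python
def percent_change(ratio):
    """Convert a ratio (1.0 means no difference) into a signed percent change."""
    return (ratio - 1.0) * 100.0


# Approximate ratios implied by the article's phrasing (assumptions, not paper data).
approx_ratios = {
    "moderate-injury accident": 0.64,  # "36 percent less likely"
    "fatal accident": 0.10,            # "90 percent less likely"
    "rear-end collision": 0.50,        # "roughly halved"
    "broadside collision": 0.20,       # "roughly one-fifth"
    "accident during a turn": 2.0,     # "almost doubled"
    "accident at dawn or dusk": 5.0,   # "over five times"
}

for label, ratio in approx_ratios.items():
    print(f"{label}: ratio {ratio:g} -> {percent_change(ratio):+.0f}%")
```

Read this way, "five times more likely" corresponds to a 400 percent increase, which shows how much larger the dawn-and-dusk penalty is than any of the individual improvements expressed in percentage terms.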

More data required for AVs to be “reassuring”

The study’s finding of higher accident rates during turns and in unusual lighting conditions highlights two major categories of challenges facing self-driving vehicles: intelligence and data.

J. Christian Gerdes, codirector of the Center for Automotive Research at Stanford University, said turning through traffic is among the most demanding situations for an AV’s artificial intelligence. “That decision is based a lot on the actions of other road users around you, and you’re going to make the choice based on what you predict.”

Cummings agreed with Gerdes. “Any time uncertainty increases [for an AV], you’re going to see an increased risk of accident. Just by the fact you’re turning, that increases uncertainty, and increases risk.”

AVs’ dramatically higher risk of accidents at dawn and dusk, on the other hand, points towards issues with the data captured by a vehicle’s sensors. Most AVs use a combination of radar and visual sensor systems, and the latter is prone to error in difficult lighting.

It’s not all bad news for sensors, though. Level 4 AVs were drastically better in rain and fog, which suggests that the presence of radar and lidar systems gives AVs an advantage in weather conditions that reduce visibility. Gerdes also said AVs, unlike humans, don’t tire or become distracted when driving through weather that requires more vigilance.