IEEE Spectrum

-

IEEE Offers AI Training Courses and a Mini MBA Program

by Angelique Parashis on 21. February 2025. at 19:00

Artificial intelligence is changing the way business is conducted. Organizations that understand where to deploy AI strategically—whether through process improvements, more effective data use, or elsewhere—are expected to outperform their competition and have greater growth and efficiency.

In its annual report, “The Impact of Technology in 2025 and Beyond: An IEEE Global Study,” IEEE surveyed 350 global technology leaders including CIOs, CTOs, and IT directors. The survey revealed that more than half of the respondents ranked AI, which encompasses predictive and generative AI, machine learning, and natural language processing, as the most important technology coming into 2025.

The tech leaders said they were ready to adopt AI, with many already leveraging its benefits and planning further exploration. Specifically, 20 percent of respondents reported regular use of generative AI, noting that it added value to their operations. Additionally, 24 percent acknowledged the benefits of the technology and said they intended to explore its practical applications. More than 30 percent had high expectations for AI and planned to experiment with it on smaller projects.

The strategic importance of AI for companies

AI’s impact varies across industries, with technology leading the way in integration. Despite AI’s increasing presence in company operations around the globe, it remains a source of confusion for most employees. As AI usage continues to rise, businesses should invest in bringing their staff up to speed on how to integrate the technology to improve their operations.

In a survey last year by technology research and advisory company Valoir, 84 percent of employees reported being unclear about what generative AI is or how it works. Similarly, in Slingshot’s Digital Work Trends Report, 77 percent of employees surveyed said they didn’t have adequate training in AI tools or fully understand how AI related to their job.

The effective use of AI can help companies and their employees make informed, data-driven decisions, improve resource allocation, provide more targeted and personalized customer experiences, and streamline project management. Business leaders who have a firm grasp on what AI can deliver will be better positioned for success.

IEEE offers educational resources for AI training

For businesses that want to train their staff on the technology, IEEE offers a comprehensive education program designed to enhance knowledge and skills in the rapidly evolving field.

The resources, produced by IEEE Educational Activities, can ensure that employees are well-versed in the latest advancements and equipped with practical skills to drive innovation and efficiency within the organization.

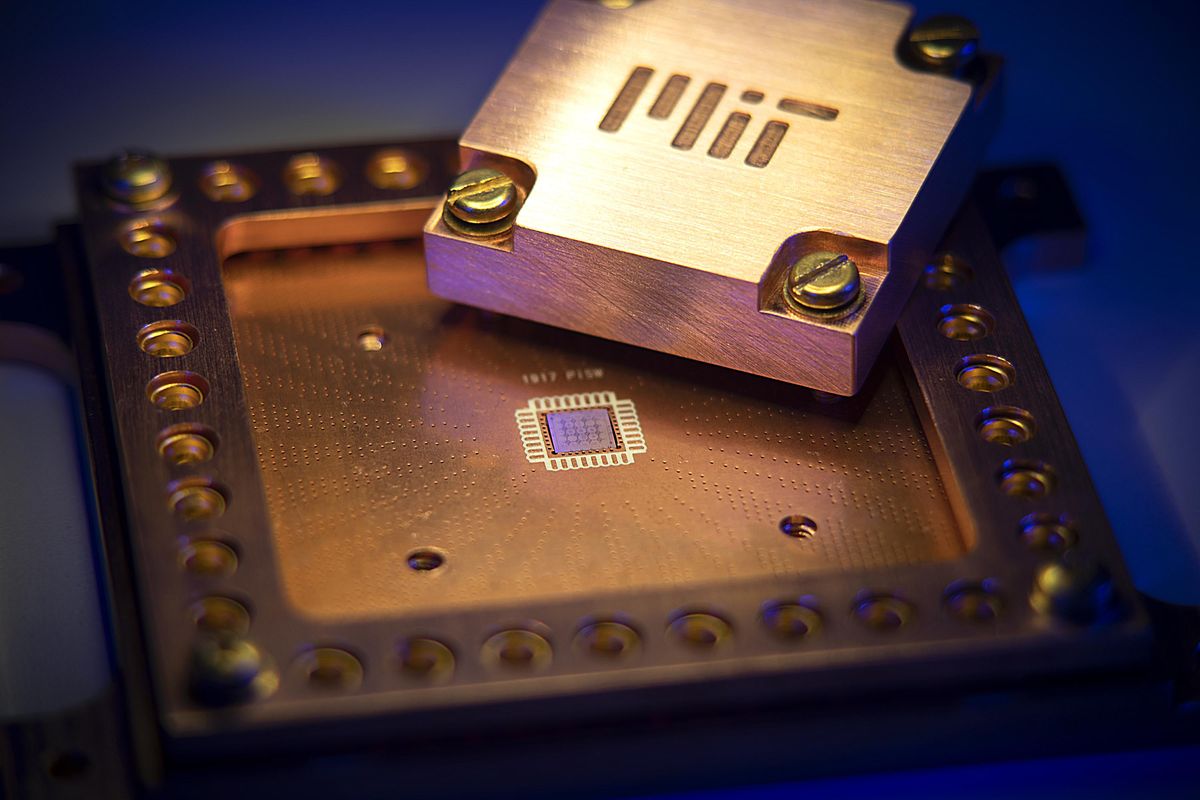

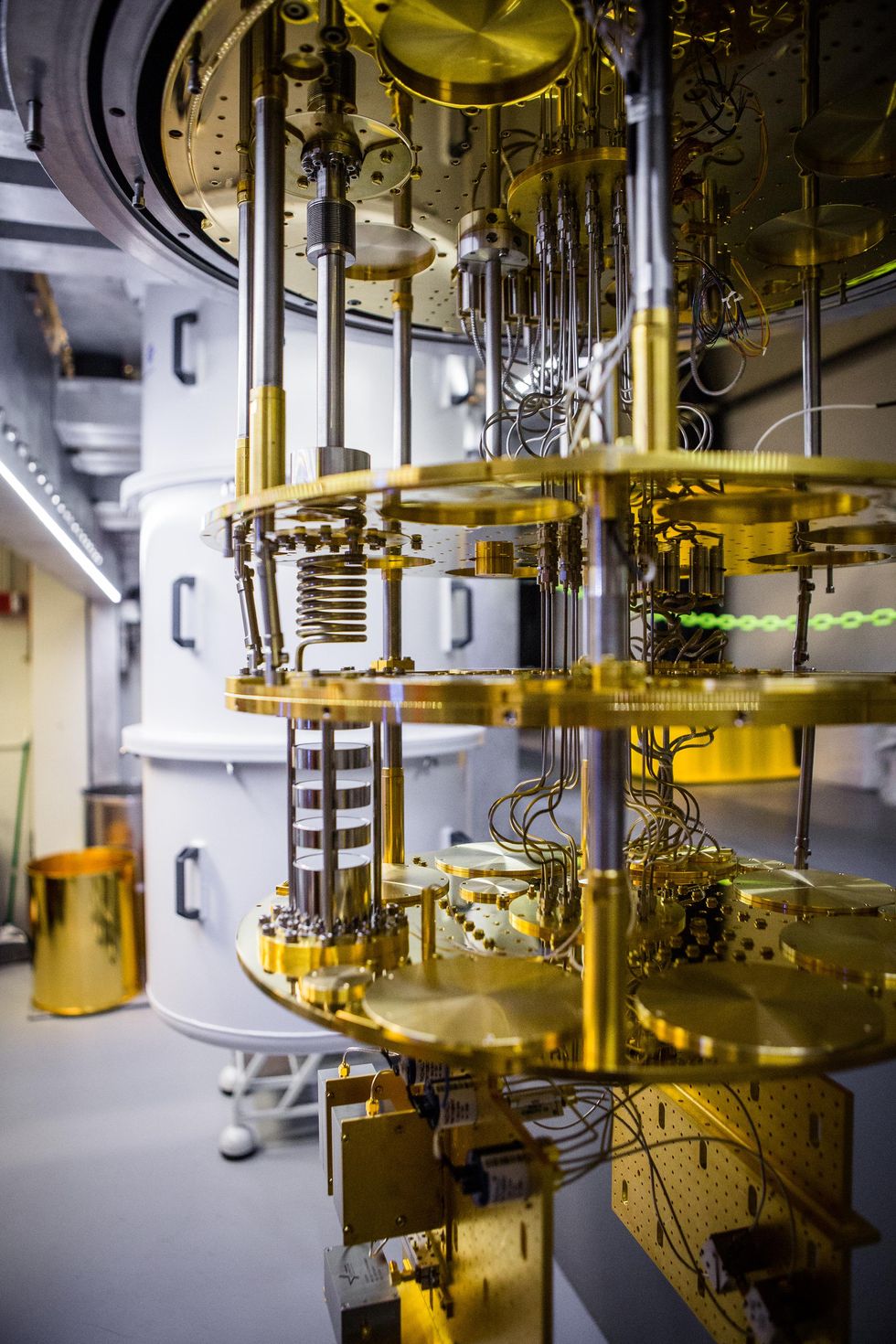

- Created in partnership with IEEE Future Directions, Artificial Intelligence and Machine Learning in Chip Design is a four-hour course series that covers applications in design automation and deployment strategies, as well as the future of design.

- Developed in partnership with the IEEE Computer Society, Integrating Edge AI and Advanced Nanotechnology in Semiconductor Applications is a five-hour course series that explores the intersection of AI, edge computing, and nanotechnology.

- The comprehensive AI Integration in Semiconductor Manufacturing five-hour course series, created in partnership with the IEEE Computer Society, covers how AI enhances production efficiency, optimizes processes, and improves product quality.

The courses are also available to individuals through the IEEE Learning Network.

Upon successfully completing each course, participants earn professional development credits including professional development hours (PDHs) and continuing education units (CEUs). Additionally, they receive a shareable digital badge highlighting their proficiency—which can be showcased on social media platforms.

IEEE and Rutgers offer a mini MBA

The new IEEE | Rutgers Online Mini-MBA: Artificial Intelligence program is designed to help organizations and their employees master AI for innovation. The program provides learners with an enhanced understanding of applications tailored to specific industries and job functions. Participants learn how to strategically leverage the technology to address business challenges, optimize processes, make more effective use of data, better serve customer needs, and improve overall organizational success.

For employers, the program is invaluable in training staff to stay ahead of the competition in a fast-evolving landscape. It offers individual access and company-specific cohorts, providing flexible learning options to meet your organization’s needs.

IEEE members receive a 10 percent discount.

Whether you’re an experienced professional or just starting out, IEEE’s education offerings can be invaluable for staying ahead. Learn more about IEEE’s corporate solutions, professional development programs, and individual eLearning courses.

-

Video Friday: Helix

by Evan Ackerman on 21. February 2025. at 17:00

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We also post a weekly calendar of upcoming robotics events for the next few months. Please send us your events for inclusion.

RoboCup German Open: 12–16 March 2025, NUREMBERG, GERMANY

German Robotics Conference: 13–15 March 2025, NUREMBERG, GERMANY

European Robotics Forum: 25–27 March 2025, STUTTGART, GERMANY

RoboSoft 2025: 23–26 April 2025, LAUSANNE, SWITZERLAND

ICUAS 2025: 14–17 May 2025, CHARLOTTE, N.C.

ICRA 2025: 19–23 May 2025, ATLANTA, GA.

London Humanoids Summit: 29–30 May 2025, LONDON

IEEE RCAR 2025: 1–6 June 2025, TOYAMA, JAPAN

2025 Energy Drone & Robotics Summit: 16–18 June 2025, HOUSTON

RSS 2025: 21–25 June 2025, LOS ANGELES

ETH Robotics Summer School: 21–27 June 2025, GENEVA

IAS 2025: 30 June–4 July 2025, GENOA, ITALY

ICRES 2025: 3–4 July 2025, PORTO, PORTUGAL

IEEE World Haptics: 8–11 July 2025, SUWON, KOREA

IFAC Symposium on Robotics: 15–18 July 2025, PARIS

RoboCup 2025: 15–21 July 2025, BAHIA, BRAZIL

Enjoy today’s videos!

We’re introducing Helix, a generalist Vision-Language-Action (VLA) model that unifies perception, language understanding, and learned control to overcome multiple longstanding challenges in robotics.

This is moderately impressive; my favorite part is probably the handoffs and that extra little bit of HRI with what we’d call eye contact if these robots had faces. But keep in mind that you’re looking at close to best case for robotic manipulation, and that if the robots had been given the bag instead of well-spaced objects on a single color background, or if the fridge had a normal human amount of stuff in it, they might be having a much different time of it. Also, is it just me, or is the sound on this video very weird? Like, some things make noise, some things don’t, and the robots themselves occasionally sound more like someone just added in some “soft actuator sound” or something. Also also, I’m of a suspicious nature, and when there is an abrupt cut between “robot grasps door” and “robot opens door,” I assume the worst.

[ Figure ]

Researchers at EPFL have developed a highly agile flat swimming robot. This robot is smaller than a credit card, and propels itself on the water surface using a pair of undulating soft fins. The fins are driven at resonance by artificial muscles, allowing the robot to perform complex maneuvers. In the future, this robot could be used to monitor water quality or to help measure fertilizer concentrations in rice fields.

[ Paper ] via [ Science Robotics ]

I don’t know about you, but I always dance better when getting beaten with a stick.

[ Unitree Robotics ]

This is big news, people: Sweet Bite Ham Ham, one of the greatest and most useless robots of all time, has a new treat.

All yours for about US $100, overseas shipping included.

[ Ham Ham ] via [ Robotstart ]

MagicLab has announced the launch of its first generation self-developed dexterous hand product, the MagicHand S01. The MagicHand S01 has 11 degrees of freedom in a single hand. The MagicHand S01 has a hand load capacity of up to 5 kilograms, and in work environments, can carry loads of over 20 kilograms.

[ MagicLab ]

Thanks, Ni Tao!

No, I’m not creeped out at all, why?

[ Clone Robotics ]

Happy 40th Birthday to the MIT Media Lab!

Since 1985, the MIT Media Lab has provided a home for interdisciplinary research, transformative technologies, and innovative approaches to solving some of humanity’s greatest challenges. As we celebrate our 40th anniversary year, we’re looking ahead to decades more of imagining, designing, and inventing a future in which everyone has the opportunity to flourish.

[ MIT Media Lab ]

While most soft pneumatic grippers that operate with a single control parameter (such as pressure or airflow) are limited to a single grasping modality, this article introduces a new method for incorporating multiple grasping modalities into vacuum-driven soft grippers. This is achieved by combining stiffness manipulation with a bistable mechanism. Adjusting the airflow tunes the energy barrier of the bistable mechanism, enabling changes in triggering sensitivity and allowing swift transitions between grasping modes. This results in an exceptionally versatile gripper, capable of handling a diverse range of objects with varying sizes, shapes, stiffness, and roughness, controlled by a single parameter, airflow, and its interaction with objects.

Thanks, Bram!

In this article, we present a design concept, in which a monolithic soft body is incorporated with a vibration-driven mechanism, called Leafbot. This proposed investigation aims to build a foundation for further terradynamics study of vibration-driven soft robots in a more complicated and confined environment, with potential applications in inspection tasks.

[ Paper ] via [ IEEE Transactions on Robotics ]

We present a hybrid aerial-ground robot that combines the versatility of a quadcopter with enhanced terrestrial mobility. The vehicle features a passive, reconfigurable single wheeled leg, enabling seamless transitions between flight and two ground modes: a stable stance and a dynamic cruising configuration.

[ Robotics and Intelligent Systems Laboratory ]

I’m not sure I’ve ever seen this trick performed by a robot with soft fingers before.

[ Paper ]

There are a lot of robots involved in car manufacturing. Like, a lot.

Steve Willits shows us some recent autonomous drone work being done at the AirLab at CMU’s Robotics Institute.

[ Carnegie Mellon University Robotics Institute ]

Somebody’s got to test all those luxury handbags and purses. And by somebody, I mean somerobot.

[ Qb Robotics ]

Do not trust people named Evan.

[ Tufts University Human-Robot Interaction Lab ]

Meet the Mind: MIT Professor Andreea Bobu.

[ MIT ]

-

Reinforcement Learning Triples Spot’s Running Speed

by Evan Ackerman on 21. February 2025. at 14:00

About a year ago, Boston Dynamics released a research version of its Spot quadruped robot, which comes with a low-level application programming interface (API) that allows direct control of Spot’s joints. Even back then, the rumor was that this API unlocked some significant performance improvements on Spot, including a much faster running speed. That rumor came from the Robotics and AI (RAI) Institute, formerly The AI Institute, formerly the Boston Dynamics AI Institute, and if you were at Marc Raibert’s talk at the ICRA@40 conference in Rotterdam last fall, you already know that it turned out not to be a rumor at all.

Today, we’re able to share some of the work that the RAI Institute has been doing to apply reality-grounded reinforcement learning techniques to enable much higher performance from Spot. The same techniques can also help highly dynamic robots operate robustly, and there’s a brand new hardware platform that shows this off: an autonomous bicycle that can jump.

See Spot Run

This video shows Spot running at a sustained speed of 5.2 meters per second (11.6 miles per hour). Out of the box, Spot’s top speed is 1.6 m/s, meaning that RAI’s Spot has more than tripled (!) the quadruped’s factory speed.
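The figures above can be checked with a few lines of arithmetic. This is only a minimal sketch: the two speeds come from the article, while the function and constant names are made up for illustration.

```python
METERS_PER_MILE = 1609.344  # exact, by definition of the international mile
SECONDS_PER_HOUR = 3600


def mps_to_mph(speed_mps: float) -> float:
    """Convert a speed from meters per second to miles per hour."""
    return speed_mps * SECONDS_PER_HOUR / METERS_PER_MILE


FACTORY_TOP_SPEED_MPS = 1.6  # out-of-the-box top speed quoted in the article
RL_TOP_SPEED_MPS = 5.2       # sustained RL-trained speed quoted in the article

# Ratio of the RL-trained speed to the factory speed.
speedup = RL_TOP_SPEED_MPS / FACTORY_TOP_SPEED_MPS

if __name__ == "__main__":
    print(f"{RL_TOP_SPEED_MPS} m/s = {mps_to_mph(RL_TOP_SPEED_MPS):.1f} mph")
    print(f"speedup over factory top speed: {speedup:.2f}x")
```

The ratio works out to 3.25, which is consistent with "more than tripled."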

If Spot running this quickly looks a little strange, that’s probably because it is strange, in the sense that the way this robot dog’s legs and body move as it runs is not very much like how a real dog runs at all. “The gait is not biological, but the robot isn’t biological,” explains Farbod Farshidian, roboticist at the RAI Institute. “Spot’s actuators are different from muscles, and its kinematics are different, so a gait that’s suitable for a dog to run fast isn’t necessarily best for this robot.”

The closest Farshidian can come to categorizing how Spot is moving is that it’s somewhat similar to a trotting gait, except with an added flight phase (with all four feet off the ground at once) that technically turns it into a run. This flight phase is necessary, Farshidian says, because the robot needs that time to successively pull its feet forward fast enough to maintain its speed. This is a “discovered behavior,” in that the robot was not explicitly programmed to “run,” but rather was just required to find the best way of moving as fast as possible.

Reinforcement Learning Versus Model Predictive Control

The Spot controller that ships with the robot when you buy it from Boston Dynamics is based on model predictive control (MPC), which involves creating a software model that approximates the dynamics of the robot as best you can, and then solving an optimization problem for the tasks that you want the robot to do in real time. It’s a very predictable and reliable method for controlling a robot, but it’s also somewhat rigid, because that original software model won’t be close enough to reality to let you really push the limits of the robot. And if you try to say, “Okay, I’m just going to make a superdetailed software model of my robot and push the limits that way,” you get stuck because the optimization problem has to be solved for whatever you want the robot to do, in real time, and the more complex the model is, the harder it is to do that quickly enough to be useful. Reinforcement learning (RL), on the other hand, learns offline. You can use as complex a model as you want, and then take all the time you need in simulation to train a control policy that can then be run very efficiently on the robot.

In simulation, a couple of Spots (or hundreds of Spots) can be trained in parallel for robust real-world performance. Credit: Robotics and AI Institute

In the example of Spot’s top speed, it’s simply not possible to model every last detail for all of the robot’s actuators within a model-based control system that would run in real time on the robot. So instead, simplified (and typically very conservative) assumptions are made about what the actuators are actually doing so that you can expect safe and reliable performance.

Farshidian explains that these assumptions make it difficult to develop a useful understanding of what performance limitations actually are. “Many people in robotics know that one of the limitations of running fast is that you’re going to hit the torque and velocity maximum of your actuation system. So, people try to model that using the data sheets of the actuators. For us, the question that we wanted to answer was whether there might exist some other phenomena that was actually limiting performance.”

Searching for these other phenomena involved bringing new data into the reinforcement learning pipeline, like detailed actuator models learned from the real-world performance of the robot. In Spot’s case, that provided the answer to high-speed running. It turned out that what was limiting Spot’s speed was not the actuators themselves, nor any of the robot’s kinematics: It was simply the batteries not being able to supply enough power. “This was a surprise for me,” Farshidian says, “because I thought we were going to hit the actuator limits first.”

Spot’s power system is complex enough that there’s likely some additional wiggle room, and Farshidian says the only thing that prevented them from pushing Spot’s top speed past 5.2 m/s is that they didn’t have access to the battery voltages so they weren’t able to incorporate that real-world data into their RL model. “If we had beefier batteries on there, we could have run faster. And if you model that phenomena as well in our simulator, I’m sure that we can push this farther.”

Farshidian emphasizes that RAI’s technique is about much more than just getting Spot to run fast—it could also be applied to making Spot move more efficiently to maximize battery life, or more quietly to work better in an office or home environment. Essentially, this is a generalizable tool that can find new ways of expanding the capabilities of any robotic system. And when real-world data is used to make a simulated robot better, you can ask the simulation to do more, with confidence that those simulated skills will successfully transfer back onto the real robot.

Ultra Mobility Vehicle: Teaching Robot Bikes to Jump

Reinforcement learning isn’t just good for maximizing the performance of a robot—it can also make that performance more reliable. The RAI Institute has been experimenting with a completely new kind of robot that it invented in-house: a little jumping bicycle called the Ultra Mobility Vehicle, or UMV, which was trained to do parkour using essentially the same RL pipeline for balancing and driving as was used for Spot’s high-speed running.

There’s no independent physical stabilization system (like a gyroscope) keeping the UMV from falling over; it’s just a normal bike that can move forward and backward and turn its front wheel. As much mass as possible is then packed into the top bit, which actuators can rapidly accelerate up and down. “We’re demonstrating two things in this video,” says Marco Hutter, director of the RAI Institute’s Zurich office. “One is how reinforcement learning helps make the UMV very robust in its driving capabilities in diverse situations. And second, how understanding the robots’ dynamic capabilities allows us to do new things, like jumping on a table which is higher than the robot itself.”

“The key of RL in all of this is to discover new behavior and make this robust and reliable under conditions that are very hard to model. That’s where RL really, really shines.” —Marco Hutter, The RAI Institute

As impressive as the jumping is, for Hutter, it’s just as difficult (if not more difficult) to do maneuvers that may seem fairly simple, like riding backwards. “Going backwards is highly unstable,” Hutter explains. “At least for us, it was not really possible to do that with a classical [MPC] controller, particularly over rough terrain or with disturbances.”

Getting this robot out of the lab and onto terrain to do proper bike parkour is a work in progress that the RAI Institute says it will be able to demonstrate in the near future, but it’s really not about what this particular hardware platform can do—it’s about what any robot can do through RL and other learning-based methods, says Hutter. “The bigger picture here is that the hardware of such robotic systems can in theory do a lot more than we were able to achieve with our classic control algorithms. Understanding these hidden limits in hardware systems lets us improve performance and keep pushing the boundaries on control.”

Teaching the UMV to drive itself down stairs in sim results in a real robot that can handle stairs at any angle. Credit: Robotics and AI Institute

Reinforcement Learning for Robots Everywhere

Just a few weeks ago, the RAI Institute announced a new partnership with Boston Dynamics “to advance humanoid robots through reinforcement learning.” Humanoids are just another kind of robotic platform, albeit a significantly more complicated one with many more degrees of freedom and things to model and simulate. But when considering the limitations of model predictive control for this level of complexity, a reinforcement learning approach seems almost inevitable, especially when such an approach is already streamlined due to its ability to generalize.

“One of the ambitions that we have as an institute is to have solutions which span across all kinds of different platforms,” says Hutter. “It’s about building tools, about building infrastructure, building the basis for this to be done in a broader context. So not only humanoids, but driving vehicles, quadrupeds, you name it. But doing RL research and showcasing some nice first proof of concept is one thing—pushing it to work in the real world under all conditions, while pushing the boundaries in performance, is something else.”

Transferring skills into the real world has always been a challenge for robots trained in simulation, precisely because simulation is so friendly to robots. “If you spend enough time,” Farshidian explains, “you can come up with a reward function where eventually the robot will do what you want. What often fails is when you want to transfer that sim behavior to the hardware, because reinforcement learning is very good at finding glitches in your simulator and leveraging them to do the task.”

Simulation has been getting much, much better, with new tools, more accurate dynamics, and lots of computing power to throw at the problem. “It’s a hugely powerful ability that we can simulate so many things, and generate so much data almost for free,” Hutter says. But the usefulness of that data is in its connection to reality, making sure that what you’re simulating is accurate enough that a reinforcement learning approach will in fact solve for reality. Bringing physical data collected on real hardware back into the simulation, Hutter believes, is a very promising approach, whether it’s applied to running quadrupeds or jumping bicycles or humanoids. “The combination of the two—of simulation and reality—that’s what I would hypothesize is the right direction.”

-

Saving Public Data Takes More Than Simple Snapshots

by Gwendolyn Rak on 19. February 2025. at 18:30

Shortly after the Trump administration took office in the United States in late January, more than 8,000 pages across several government websites and databases were taken down, the New York Times found. Though many of these have now been restored, thousands of pages were purged of references to gender and diversity initiatives, for example, and others including the U.S. Agency for International Development (USAID) website remain down.

By 11 February, a federal judge had ruled that the government agencies must restore public access to pages and datasets maintained by the Centers for Disease Control and Prevention (CDC) and the Food and Drug Administration (FDA). Ironically, while many scientists were turning to online archives in a panic, the Justice Department had argued that the physicians who brought the case were not harmed because the removed information was available on the Internet Archive’s Wayback Machine. In response, the judge wrote, “The Court is not persuaded,” noting that a user must know the original URL of an archived page in order to view it.

The administration’s legal argument “was a bit of an interesting accolade,” says Mark Graham, director of the Wayback Machine, who believes the judge’s ruling was “apropos.” Over the past few weeks, the Internet Archive and other archival sites have received attention for preserving government databases and websites. But these projects have been ongoing for years. The Internet Archive, for example, was founded as a nonprofit dedicated to providing universal access to knowledge nearly 30 years ago, and it now records more than a billion URLs every day, says Graham.

Since 2008, the Internet Archive has also hosted an accessible copy of the End of Term Web Archive, a collaboration that documents changes to federal government sites before and after administration changes. In the most recent collection, it has already archived more than 500 terabytes of material.

Complementary Crawls

The Internet Archive’s strength is scale, Graham says. “We can often [preserve] things quickly, at scale. But we don’t have deep experience in analysis.” Meanwhile, groups like the Environmental Data and Governance Initiative and the Association of Health Care Journalists provide help for activists and academics identifying and documenting changes.

The Library Innovation Lab at Harvard Law School has also joined the efforts with its archive of data.gov, a 16 TB collection that includes more than 311,000 public datasets and is being updated daily with new data. The project began in late 2024, when the library realized that datasets are often missed in other web crawls, says Jack Cushman, a software engineer and director of the Library Innovation Lab.

A typical crawl has no trouble capturing basic HTML, PDF, or CSV files. But archiving interactive web services that are driven by databases poses a challenge. It would be impossible to archive a site like Amazon, for example, says Graham.

The datasets the Library Innovation Lab (LIL) is working to archive are similarly tricky to capture. “If you’re doing a web crawl and just clicking from link to link, as the End of Term archive does, you can miss anything where you have to interact with JavaScript or with a button or with a form, where you have to ask for permission and then register or download something,” explains Cushman.

“We wanted to do something that was complementary to existing web crawls, and the way we did that was to go into APIs,” he says. By going into the APIs, which bypass web pages to access data directly, the LIL’s program could fetch a complete catalog of the datasets (whether CSV, Excel, XML, or other file types) and pull the associated URLs to create an archive. In the case of data.gov, Cushman and his colleagues wrote a script to send the 300 queries needed, each fetching 1,000 items, and then go through the 300,000 total items to gather the data. “What we’re looking for is areas where some automation will unlock a lot of new data that wouldn’t otherwise be unlocked,” says Cushman.
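The query pattern described here (300 requests of 1,000 items each, covering 300,000 catalog entries) is ordinary offset-based pagination. The sketch below is not the LIL’s actual script, which the article does not show. `fetch_page` is a hypothetical callable standing in for one API request, and `fake_fetch_page` is an offline stub with made-up example.org URLs so the code runs without network access.

```python
from typing import Callable, Iterator

PAGE_SIZE = 1000  # items per query, as described in the article


def page_offsets(total_items: int, page_size: int = PAGE_SIZE) -> list[int]:
    """Return the start offset of every page needed to cover total_items."""
    return list(range(0, total_items, page_size))


def iter_catalog(
    fetch_page: Callable[[int, int], list[dict]],
    total_items: int,
    page_size: int = PAGE_SIZE,
) -> Iterator[dict]:
    """Yield every catalog entry, issuing one query per page.

    fetch_page(start, rows) is a hypothetical callable that performs one
    API request and returns up to `rows` entries beginning at `start`.
    """
    for start in page_offsets(total_items, page_size):
        yield from fetch_page(start, page_size)


def fake_fetch_page(start: int, rows: int, total: int = 300_000) -> list[dict]:
    """Offline stand-in for a real API call, used only for illustration."""
    stop = min(start + rows, total)
    return [
        {"id": i, "url": f"https://example.org/dataset/{i}"}
        for i in range(start, stop)
    ]


if __name__ == "__main__":
    entries = list(iter_catalog(fake_fetch_page, total_items=300_000))
    print(len(page_offsets(300_000)), "queries,", len(entries), "entries")
```

A real implementation would replace the stub with an HTTP request to the catalog API, whose endpoint and parameter names are not given in the article, and would then download the file behind each collected URL.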

The other important factor for the LIL archive was to make sure the data was in a usable format. “You might get something in a web crawl where [the data] is there across 100,000 web pages, but it’s very hard to get it back out into a spreadsheet or something that you can analyze,” Cushman says. Making it usable, both in the data format and user interface, helps create a sustainable archive.

Lots Of Copies Keep Stuff Safe

The key to preserving the internet’s data is a principle that goes by the acronym LOCKSS: Lots Of Copies Keep Stuff Safe.

When the Internet Archive suffered a cyberattack last October, the Archive took down the site for a three-and-a-half-week period to audit the entire site and implement security upgrades. “Libraries have traditionally always been under attack, so this is no different,” Graham says. As part of its defense, the Archive now has several copies of the materials in disparate physical locations, both inside and outside the U.S.

“The US government is the world’s largest publisher,” Graham notes. It publishes material on a wide range of topics, and “much of it is beneficial to people, not only in this country, but throughout the world, whether that is about energy or health or agriculture or security.” And the fact that many individuals and organizations are contributing to preservation of the digital world is actually a good thing.

“The goal is for those copies to be diverse across every metric that you can think of. They should be on different kinds of media. They should be controlled by different people, with different funding sources, in different formats,” says Cushman. “Every form of similarity between your backups creates a risk of loss.” The data.gov archive has its primary copy stored through a cloud service with others as backup. The archive also includes open source software to make it easy to replicate.

In addition to maintaining copies, Cushman says it’s important to include cryptographic signatures and timestamps. Each time an archive is created, it’s signed with cryptographic proof of the creator’s email address and time, which can help verify the validity of an archive.
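To make the idea concrete, here is a minimal sketch of a signed manifest that ties an archive’s contents to a creator and a timestamp. The article does not say which signing scheme the data.gov archive uses, so this example swaps in an HMAC with a shared secret from Python’s standard library as a stand-in; a public archive would more plausibly use public-key signatures and a trusted timestamping service. All names, the email address, and the key are hypothetical.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone


def build_manifest(data: bytes, creator_email: str, created_at: str) -> dict:
    """Describe an archive by its SHA-256 digest, creator, and creation time."""
    return {
        "sha256": hashlib.sha256(data).hexdigest(),
        "creator": creator_email,
        "created_at": created_at,
    }


def sign_manifest(manifest: dict, key: bytes) -> str:
    """Return an HMAC-SHA256 tag over a canonical JSON encoding of the manifest."""
    payload = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()


def verify(data: bytes, manifest: dict, tag: str, key: bytes) -> bool:
    """Check that the data matches the manifest and the manifest matches its tag."""
    actual_digest = hashlib.sha256(data).hexdigest()
    digest_ok = hmac.compare_digest(actual_digest, manifest["sha256"])
    tag_ok = hmac.compare_digest(sign_manifest(manifest, key), tag)
    return digest_ok and tag_ok


if __name__ == "__main__":
    key = b"demo-key-not-for-real-use"  # hypothetical shared secret
    archive = b"example archive contents"
    now = datetime.now(timezone.utc).isoformat()
    manifest = build_manifest(archive, "archivist@example.org", now)
    tag = sign_manifest(manifest, key)
    print(verify(archive, manifest, tag, key))         # untouched archive
    print(verify(archive + b"!", manifest, tag, key))  # modified archive
```

Because the tag covers the digest, the creator, and the timestamp together, changing any one of them (or the archived bytes themselves) causes verification to fail.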

An Ongoing Challenge

Since President Trump took office, a lot of material has been removed from U.S. federal websites, quantifiably more than under previous new administrations, says Graham. On a global scale, however, this isn’t unprecedented, he adds.

In the U.S., official government websites have been changed with each new administration since Bill Clinton’s, notes Jason Scott, a “free range archivist” at the Internet Archive and co-founder of digital preservation site Archive Team. “This one’s more chaotic,” Scott says. But “the web is a very high entropy entity ... Google is an archive like a supermarket is a food museum.”

The job of digital archivists is a difficult one, especially with a backlog of sites that have existed across the evolution of internet standards. But these efforts are not new. “The ramping up will only be in terms of disk space and bandwidth resources, not the process that has been ongoing,” says Scott.

For Cushman, working on this project has underscored the value of public data. “The government data that we have is like a GPS signal,” he says. “It doesn’t tell us where to go, but it tells us what’s around us, so that we can make decisions. Engaging with it for the first time this way has really helped me appreciate what a treasure we have.”

-

A Rover Race on Mojave Desert Sands

by Joanna Goodrich on 18. February 2025. at 19:00

With NASA working on sending humans to Mars starting in the 2030s, colonizing the Red Planet seems more achievable than ever. The space agency is already leading yearlong simulated missions to better understand how living on Mars could affect humans.

Because of the planet’s thin atmosphere, high radiation levels, and abrasive dust, people would need to live in specialized dwellings and use robots to perform outdoor tasks.

With hopes of inspiring the next generation of engineers and scientists to develop space robots, IEEE held its first Robopalooza, a telepresence competition with robotic demonstrations, in November in Lucerne Valley, Calif. The competition is expected to become an annual event.

The contest and demonstrations were held in conjunction with the IEEE Conference on Telepresence at Caltech. The events were organized by IEEE Telepresence, an IEEE Future Directions initiative that aims to advance telepresence technology to help redefine how people live and work.

Seven teams from universities and robotics companies worldwide remotely operated a Helelani rover through an obstacle course inspired by the game Capture the Flag. The 318-kilogram vehicle was provided by the Pacific International Space Center for Exploration Systems (PISCES), an aerospace research center at the University of Hawaii in Hilo. The team that took the least time to retrieve the flag—located on a small hill in the middle of the 400-meter-long course—received US $5,000.

Companies and university labs developing space robots demonstrated some of their creations to the more than 300 conference attendees including local preuniversity students.

This year’s conference and competition are scheduled to be held in Leiden, Netherlands, from 8 to 10 September.

Why humans need robots on Mars

Science fiction writers have explored the idea of people living on another planet since long before astronauts landed on the moon. It’s still a staple of popular series including the Dune, Red Rising, and Star Wars franchises, whose main characters don’t just reside in a galaxy far, far away. Paul Atreides, Darrow O’Lykos, and Luke Skywalker grew up or live on a desert planet much like Mars.

Settling the Red Planet is not likely to be easy. Before sending people there, robots would need to build housing. The planet’s atmosphere is 95 percent carbon dioxide. The radiation there would kill human inhabitants in a few months if they weren’t adequately shielded from it. Also, according to NASA, Mars is covered in fine dust particles; breathing in the sharp-edged fragments could damage lungs.

Once people inhabit the robot-built dwellings, they would need to use robots to complete outdoor tasks such as geological research, building maintenance, and water mining.

Spacecraft aren’t immune to Mars’s dangers, either. The thin atmosphere makes it difficult for rovers to land, as there is minimal air resistance to slow down their descent. The planet’s radiation levels, up to 50 times higher than on Earth, gradually degrade a rover’s erosion-resistant coating, electronic systems, and other components. The abrasive dust also can damage spacecraft.

Today’s rovers are slow-moving, averaging a ground speed of about 150 meters per hour on a flat surface, in part because of the 20- to 40-minute delay in communications between Earth and Mars, says Robert Mueller, who organized the telepresence competition. And rovers are expensive: NASA’s latest, Perseverance, cost around $1.7 billion to design and build.
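For a sense of where a delay of that size comes from: radio signals travel at the speed of light, so the lag scales directly with the Earth-Mars distance. A small Python sketch, assuming approximate minimum and maximum separations of about 55 million and 400 million kilometers (round figures supplied here for illustration, not taken from the article or from Mueller):

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # km/s, exact by definition

# Assumed, rounded Earth-Mars separations (illustrative only).
MIN_DISTANCE_KM = 55e6
MAX_DISTANCE_KM = 400e6


def one_way_delay_minutes(distance_km):
    """Minutes for a radio signal to cover distance_km at light speed."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60.0


def round_trip_delay_minutes(distance_km):
    """Minutes for a command to go out and its confirmation to come back."""
    return 2.0 * one_way_delay_minutes(distance_km)


nearest = round_trip_delay_minutes(MIN_DISTANCE_KM)
farthest = round_trip_delay_minutes(MAX_DISTANCE_KM)
print(f"round trip: {nearest:.1f} to {farthest:.1f} minutes")
```

Under those assumptions the round trip takes several minutes at closest approach and several tens of minutes at the greatest separation, which is why a Mars rover cannot be steered in real time from Earth.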

Racing robots in the desert

When choosing a location for the Robopalooza, Mueller found that California’s Mojave Desert, with its hills and soft sand, closely resembled Mars’s topography. Mueller, an IEEE member, is a senior technologist and principal investigator at NASA’s Kennedy Space Center, near Cape Canaveral in Florida.

The competing teams were located in Australia, Chile, and the United States.

A camera mounted on the Helelani rover live-streamed its view to the participants’ computers so they could remotely maneuver the vehicle. The route ended at the top of Peterman Hill. The teams tried to navigate the rover around 14 traffic cones placed randomly along the course. If the rover touched a cone, 10 seconds were added to the team’s final time. If a team wasn’t able to maneuver the rover around a cone, 20 seconds were added.
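The penalty rules above amount to simple arithmetic. A minimal sketch in Python, assuming a hypothetical `final_time_seconds` helper; the function name and the second sample run are illustrative and do not come from the competition's actual scoring:

```python
TOUCH_PENALTY_S = 10  # added for each cone the rover touched
MISS_PENALTY_S = 20   # added for each cone the team could not steer around


def final_time_seconds(raw_seconds, cones_touched=0, cones_missed=0):
    """Return the raw course time plus cone penalties, in seconds."""
    if min(raw_seconds, cones_touched, cones_missed) < 0:
        raise ValueError("times and counts must be non-negative")
    return (raw_seconds
            + cones_touched * TOUCH_PENALTY_S
            + cones_missed * MISS_PENALTY_S)


# The winning run reported in the article: 20 minutes 10 seconds, no penalties.
winning = final_time_seconds(20 * 60 + 10)

# A made-up run that was a minute faster but touched 3 cones and missed 2.
hypothetical = final_time_seconds(19 * 60 + 10, cones_touched=3, cones_missed=2)

print(winning, hypothetical)
```

In this made-up comparison the faster raw run ends up with the worse final time, which shows how clean driving could matter more than speed.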

Six of the seven teams competed remotely: North Dakota University; SK Godelius; the University of Adelaide, in Australia; the University of Alabama in Tuscaloosa; Virginia State University; and Western Australia Remote Operations (WARO32). The California State Polytechnic University, Pomona, team competed on-site from a trailer.

With a finishing time of 20 minutes and 10 seconds—and no penalties—WARO32 won the competition.

“The winning team operated the rover from Perth, Australia, which was 14,800 kilometers from the competition site. They were the team that was farthest away from the vehicle,” Mueller says. “This showcases that telepresence is achievable across Earth and that there is enormous potential for a variety of tasks to be performed using telepresence, such as telemedicine, remote machinery operation, and business and corporate communication.”

Hector, a lunar lander, wears toddler-size Crocs to give it traction and balance.

Preuniversity students try out space robots

At the IEEE robotic demonstrations, representatives from robotics companies including Honeybee, Cislune, and Neurospace showed off some of their creations. They included a robot that extracts water from rocky soil, a lunar soil excavator, and a cargo vehicle that can adapt to different terrains.

Mueller invited nearby teachers to bring their students to the IEEE event. More than 300 elementary, middle, and high school students attended.

They had the opportunity to see top robotics companies demonstrate their machines and to play with Hector, a bipedal lunar lander created by two doctoral students from the University of Southern California, in Los Angeles.

“Many students and other attendees were inspired by the potential of robotics and telepresence as they watched the robot racing in the Mojave Desert,” Mueller says. “The IEEE Telepresence Initiative is planning to make this competition an annual event, which will take place at remote locations across the world that have extreme conditions, mimicking extraterrestrial planetary surfaces.”

-

China Rescues Stranded Lunar Satellites

by Andrew Jones on 18. February 2025. at 12:00

China has managed to deliver a pair of satellites into lunar orbit despite the spacecraft initially being stranded in low Earth orbit following a rocket failure, using a mix of complex calculations, precise engine burns, and astrodynamic ingenuity.

China launched the DRO-A and B satellites on 13 March last year on a Long March 2C rocket, aiming to send the pair into a distant retrograde orbit (DRO) around the moon. However, the rocket’s Yuanzheng-1S upper stage—intended to fire the spacecraft into a transfer orbit to the moon—failed, leaving the pair marooned in low Earth orbit.

Little is known for certain about the satellites. They must be small, given the limited payload capabilities of the rocket used for the launch, and are thought to be for testing technology and the uses of the unusual retrograde orbit. (Such orbits could be handy for lunar communications and observation.) Critically, the spacecraft’s small size means they have little propellant, making reaching lunar orbit from low Earth orbit unassisted a very tall order. However, Microsat, the institute under the Chinese Academy of Sciences (CAS) behind the mission, got to work on a rescue, despite the daunting distance.

“Having to replan that in a hurry must be a nightmare, so it’s a very impressive achievement.” —Jonathan McDowell, Harvard-Smithsonian

What followed was a 167-day-long effort that first got the spacecraft out to well beyond lunar distance and then successfully inserted the satellites into their intended orbit. The operation included five orbital maneuvers, five further trajectory corrections to fine-tune the satellites’ course, and three gravity assists from the Earth and moon.

The first steps were small engine burns at perigee—the spacecraft’s closest orbital approach to Earth—which gradually raised the apogee—the farthest point of the orbit from Earth. Once the apogee was high enough, a larger burn put the spacecraft on an atypical course for the moon.
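Why a burn at perigee raises the apogee follows from the vis-viva equation for a two-body orbit, v² = μ(2/r − 1/a): adding speed at the lowest point enlarges the semi-major axis a while the perigee radius stays put. A rough sketch in Python under loudly stated assumptions: an idealized Earth-only two-body model, a hypothetical 200-kilometer circular starting orbit, and made-up burn sizes. None of these numbers come from the DRO-A and B mission.

```python
import math

MU_EARTH = 398_600.4418  # km^3/s^2, Earth's gravitational parameter
R_EARTH = 6_378.137      # km, Earth's equatorial radius


def apogee_after_perigee_burn(r_perigee, r_apogee, delta_v):
    """Apogee radius (km) after a prograde burn of delta_v (km/s) at perigee."""
    a = (r_perigee + r_apogee) / 2.0
    # Speed at perigee before the burn, from vis-viva.
    v = math.sqrt(MU_EARTH * (2.0 / r_perigee - 1.0 / a))
    v_new = v + delta_v
    # Invert vis-viva to get the new semi-major axis.
    inv_a = 2.0 / r_perigee - v_new ** 2 / MU_EARTH
    if inv_a <= 0:
        raise ValueError("burn reaches escape velocity in this model")
    a_new = 1.0 / inv_a
    # Perigee radius is unchanged, so the apogee absorbs the growth.
    return 2.0 * a_new - r_perigee


r_p = R_EARTH + 200.0  # assumed starting altitude of 200 km
r_a = r_p              # start from a circular orbit
history = []
for _ in range(5):     # five illustrative burns of 0.5 km/s each
    r_a = apogee_after_perigee_burn(r_p, r_a, 0.5)
    history.append(r_a - R_EARTH)

for i, altitude in enumerate(history, start=1):
    print(f"after burn {i}: apogee altitude {altitude:,.0f} km")
```

Each equal-size burn raises the apogee by more than the one before it, which is part of why a series of small perigee burns can be an economical way to climb.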

From the Earth to the Moon

Normally, spacecraft going to the moon follow the simplest trajectory, a so-called Hohmann transfer that burns a lot of propellant to get moving and then uses another big burn to drop into orbit once the spacecraft arrives at its destination after three to four days. Instead, the Chinese took advantage of a chaotic dynamical region around the Earth-moon system to save propellant. The Japanese Hiten probe had been rescued using a similar approach in 1990, but it was sent into a conventional lunar orbit. The calculations to reach DRO—a high-altitude, long-term stable orbit moving in a retrograde direction relative to the moon—would have been arduous.

“A small error will make you miss your target by a long way.” —Jonathan McDowell, Harvard-Smithsonian

“The astrodynamics of getting to the Moon is already much more complicated than just Earth orbit missions,” says Jonathan McDowell, a Harvard-Smithsonian astronomer and space activity tracker and analyst. “Involving so-called ‘weak capture’ and distant retrograde orbits is far more complicated still, and having to replan that in a hurry must be a nightmare, so it’s a very impressive achievement.”

Weak capture refers to a celestial body gravitationally capturing a spacecraft without the need for a significant propulsive maneuver. This technique, crucial for a fuel-efficient lunar orbit insertion, demands precise timing and fine trajectory adjustments, as McDowell explains.

“The way to think of these ‘modern’ and fancy orbit strategies is that you trade time for fuel. It takes much longer but you use less fuel. Once you get out to the apogee of the transfer trajectory—they don’t say how far out that was but I am guessing over a million kilometers—you can change your final destination a lot with just a small puff of the rockets. But by the same token, a small error will make you miss your target by a long way.”

Slides from an apparent Microsat presentation emerged on social media, illustrating the circuitous path taken to deliver the spacecraft to lunar orbit. The institute, however, did not respond to a request for comment on the mission.

DRO-A and B separated from each other after successfully entering their intended distant retrograde orbit. According to U.S. Space Force space domain awareness data, the pair have orbits with an apogee of around 580,000 kilometers relative to the Earth and a perigee of around 290,000 km. The moon orbits Earth at an average distance of 385,000 km, so those figures indicate a very high orbit above the moon.

There, the spacecraft are testing out the attributes of the unique orbit and testing technologies, including communications with another satellite, DRO-L, which was launched a month before DRO-A and B into low Earth orbit. Though the mission is not a major part of China’s lunar plans, the country is planning to establish lunar navigation and communications infrastructure to support lunar exploration, and the satellites could inform that work.

DRO-A, at least, also carries a science payload in the form of an all-sky monitor to detect gamma-ray bursts, particularly those associated with gravitational wave events, such as colliding black holes, neutron star collisions, and supernovae. The instrumentation is based on China’s earlier GECAM low Earth orbit gamma-ray-detecting mission, but with an unobstructed field of view in deep space and less interference.

The rescue, then, is a boost for China’s lunar plans and space science objectives, and demonstrates Chinese prowess in astrodynamics. McDowell notes the closest approximation to this rescue is the Asiasat 3 mission, renamed HGS-1, where the satellite bound for geostationary (GEO) orbit was stuck in an elliptical transfer orbit in 1997. The satellite’s apogee was raised to make a pair of lunar flybys to eventually deliver it to GEO with fuel remaining to operate for four years.

“[This] definitely shows that China is now on a par with the U.S. in its ability to manage complex astrodynamics,” McDowell says.

China also pulled off a complex lunar far side sample return mission last year, requiring five separate spacecraft, and next year plans a landing at the lunar south pole to seek out volatiles including water. The successful salvaging of the DRO-A and B mission reinforces China’s growing expertise in deep space navigation and complex orbital rescues. With plans to establish a permanent moon base in the 2030s, such capabilities will be crucial for maintaining and supporting long-term moon operations.

-

Willie Hobbs Moore: STEM Trailblazer

by Willie D. Jones on 16. February 2025. at 14:00

At a time in American history when even the most intelligent Black women were expected to become, at most, teachers or nurses, Willie Hobbs Moore broke with societal expectations to become a noted physicist and engineer.

Moore probably is best known for being the first Black woman to earn a Ph.D. in science (physics) in the United States, in 1972. She also is renowned for being an unwavering advocate for getting more Black people into science, technology, engineering, and mathematics. Her achievements have inspired generations of Black students, and women especially, to believe that they could pursue a STEM career.

Moore, who died in her Ann Arbor, Mich., home on 14 March 1994, two months shy of her 60th birthday, is the subject of the new book Willie Hobbs Moore—You’ve Got to Be Excellent! The biography, published by IEEE-USA, is the seventh in the organization’s Famous Women Engineers in History series.

Moore attended the University of Michigan in Ann Arbor, where she earned bachelor’s and master’s degrees in electrical engineering and, in 1972, her barrier-breaking doctorate in physics. In 2013, the University of Michigan Women in Science and Engineering unit created the Willie Hobbs Moore Awards to honor students, staff, and faculty members who “demonstrate excellence promoting equity” in STEM fields. The university held a symposium in 2022 to honor Moore’s work and celebrate the 50th anniversary of her achievement.

Physicist Donnell Walton, former director of the Corning West Technology Center in Silicon Valley and a National Society of Black Physicists board member, praised Moore, saying she indicated that what’s possible is not limited to what’s expected. Walton befriended Moore while he was pursuing his doctorate in applied physics at the university, he says, adding that he admired the strength and perseverance it took for her to thrive in academic and professional arenas where she was the only Black woman.

Despite ingrained social norms that tended to push women and minorities into lower-status occupations, Moore refused to be dissuaded from her career. She conducted physics research at the University of Michigan and held several positions in industry before joining Ford Motor Co. in Dearborn, Mich., in 1977. She became a U.S. expert in Japanese quality systems and engineering design, improving Ford’s production processes. She rose through the ranks at the automaker and served as an executive who oversaw the warranty department within the company’s automobile assembly operation.

An early trailblazer

Moore was born in 1934 in Atlantic City, N.J. According to a Physics Today article that delved into her background, her father was a plumber and her mother worked part time as a hotel chambermaid.

An A student throughout high school, Moore displayed a talent for science and mathematics. She became the first person in her family to earn a college degree.

She began her studies at the Michigan engineering college in 1954—the same year that the U.S. Supreme Court ruled against legally mandated segregation in public schools.

Moore was the only Black female undergraduate in the electrical engineering program. Her academic success makes it clear that being one of one was not an impediment. But race was occasionally an issue. In that same 2022 Physics Today article, Ronald E. Mickens, a physics professor at Clark Atlanta University, told a story about an incident from Moore’s undergraduate days that illustrates the point. One day she encountered the chairman of another engineering college department, and completely unprompted, he told her, “You don’t belong here. Even if you manage to finish, there is no place for you in the professional world you seek.”

“There will always be prejudiced people; you’ve got to be prepared to survive in spite of their attitudes.” —Willie Hobbs Moore

But she persevered, maintaining her standard of excellence in her academic pursuits. She earned a bachelor’s degree in EE in 1958, followed by an EE master’s degree in 1961. She was the first Black woman to earn those degrees at Michigan.

She worked as an engineer at several companies before returning to the university in 1966 to begin working toward a doctorate. She conducted her graduate research under the direction of Samuel Krimm, a noted infrared spectroscopist. Krimm’s work focused on analyzing materials using infrared so he could study their molecular structures. Moore’s dissertation was a theoretical analysis of secondary chlorides for polyvinyl chloride polymers. PVC, a type of plastic, is widely used in construction, health care, and packaging. Moore’s work led to the development of additives that gave PVC pipes greater thermal and mechanical stability and improved their durability.

Moore paid for her doctoral studies by working part time at the university, KMS Industries, and Datamax Corp., all in Ann Arbor. Joining KMS as a systems analyst, she supported the optics design staff and established computer requirements for the optics division. She left KMS in 1968 to become a senior analyst at Datamax. In that role, she headed the analytics group, which evaluated the company’s products.

After earning her Ph.D. in 1972, she spent the next five years as a postdoctoral fellow and lecturer with the university’s Macromolecular Research Center.

She authored more than a dozen papers on protein spectroscopy—the science of analyzing proteins’ structure, composition, and activity by measuring how they interact with electromagnetic radiation. Her work appeared in several prestigious publications including the Journal of Applied Physics, The Journal of Chemical Physics, and the Journal of Molecular Spectroscopy.

Despite a promising career in academia, Moore left to work in industry.

Ford’s quality control queen

Moore joined Ford in 1977 as an assembly engineer. In an interview with The Ann Arbor News, she recalled contending with racial hostility and overt accusations that she was underqualified and had been hired only to fill a quota that was part of the company’s affirmative action program.

She demonstrated her value to the organization and became an expert in Japanese methods of quality engineering and manufacturing, particularly those invented by Genichi Taguchi, a renowned engineer and statistician.

The Taguchi method emphasized continuous improvement, waste reduction, and employee involvement in projects. Moore pushed Ford to use the approach, which led to higher-quality products and better efficiency. The changes proved critical to boosting the company’s competitiveness against Japanese automakers, which had begun to dominate the automobile market in the late 1970s and early 1980s.

Eventually, Moore rose to the company’s executive ranks, overseeing the warranty department of Ford’s assembly operation.

In 1985 Moore co-wrote the book Quality Engineering Products and Process Design Optimization with Yuin Wu, vice president of Taguchi Methods Training at ASI Consulting Group in Bingham Farms, Mich. ASI helps businesses develop strategies for improving productivity, engineering, and product quality. In their book, Moore and Wu wrote, “Philosophically, the Taguchi approach is technology rather than theory. It is inductive rather than deductive. It is an engineering tool. The Taguchi approach is concerned with productivity enhancement and cost-effectiveness.”

Encouraging more Blacks to study STEM

Moore was active in STEM education for minorities, as explored in an article about her published by the American Physical Society. She brought her skills and experience to volunteer activities, intending to produce more STEM professionals who looked like her. She was involved in community science and math programs in Ann Arbor, sponsored by The Links, a service organization for Black women. She also was active with Delta Sigma Theta, a historically Black, service-oriented sorority. She volunteered with the Saturday Academy, a community mentoring program that focuses on developing college-bound students’ life skills. Volunteers also provide subject matter instruction.

She advised minority engineering students: “There will always be prejudiced people; you’ve got to be prepared to survive in spite of their attitudes.” Black students she encountered recall her oft-repeated mantra: “You’ve got to be excellent!”

In a posthumous tribute essay about Moore, Walton recalled befriending her at the Saturday Academy while tutoring middle and high school students in science and mathematics.

“Don Coleman, the former associate provost at Howard University and a good friend of mine,” Walton wrote, “noted that Dr. Hobbs Moore had tutored him when he was an engineering student at the University of Michigan. [Coleman] recalled that she taught the fundamentals and always made him feel as though she was merely reminding him of what he already knew rather than teaching him unfamiliar things.”

Walton recalled how dedicated Moore was to ensuring Black students were prepared to follow in her footsteps. He said she was a mainstay at the Saturday Academy until her 24-year battle with cancer made it impossible for her to continue.

She was posthumously honored with the Bouchet Award at the National Conference of Black Physics Students in 1995. Edward A. Bouchet was the first Black person to earn a Ph.D. in a science (physics) in the United States.

Walton, who said he admired Moore for her determination to light the way for succeeding generations, says the programs that helped him as a young student are no longer being pursued with the fervor they once were.

“Particularly right now,” he told the American Institute of Physics in 2024, “we’re seeing a retrenchment, a backlash against programs and initiatives that deal with the historical underrepresentation of women and other people who we know have a history in the United States of being excluded. And if we don’t have interventions in place, there’s nothing to say that it won’t continue.” In the interview, Walton said he is concerned that instead of there being more STEM professionals like Moore, there might be fewer.

A lasting legacy

Moore’s life is a testament to perseverance, excellence, and the power of mentorship. Her achievements prove that it’s possible to overcome the inertia of low societal expectations and improve the world.

The biography Willie Hobbs Moore—You’ve Got to Be Excellent! is available for free to members. The nonmember price is US $2.99.

-

Video Friday: PARTNR

by Evan Ackerman on 14. February 2025. at 17:00

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We also post a weekly calendar of upcoming robotics events for the next few months. Please send us your events for inclusion.

RoboCup German Open: 12–16 March 2025, NUREMBERG, GERMANY

German Robotics Conference: 13–15 March 2025, NUREMBERG, GERMANY

European Robotics Forum: 25–27 March 2025, STUTTGART, GERMANY

RoboSoft 2025: 23–26 April 2025, LAUSANNE, SWITZERLAND

ICUAS 2025: 14–17 May 2025, CHARLOTTE, NC

ICRA 2025: 19–23 May 2025, ATLANTA, GA

London Humanoids Summit: 29–30 May 2025, LONDON

IEEE RCAR 2025: 1–6 June 2025, TOYAMA, JAPAN

2025 Energy Drone & Robotics Summit: 16–18 June 2025, HOUSTON, TX

RSS 2025: 21–25 June 2025, LOS ANGELES

ETH Robotics Summer School: 21–27 June 2025, GENEVA

IAS 2025: 30 June–4 July 2025, GENOA, ITALY

ICRES 2025: 3–4 July 2025, PORTO, PORTUGAL

IEEE World Haptics: 8–11 July 2025, SUWON, KOREA

Enjoy today’s videos!

There is an immense amount of potential for innovation and development in the field of human-robot collaboration — and we’re excited to release Meta PARTNR, a research framework that includes a large-scale benchmark, dataset and large planning model to jump start additional research in this exciting field.

[ Meta PARTNR ]

Humanoid is the first AI and robotics company in the UK, creating the world’s leading, commercially scalable, and safe humanoid robots.

[ Humanoid ]

To complement our review paper, “Grand Challenges for Burrowing Soft Robots,” we present a compilation of soft burrowers, both organic and robotic. Soft organisms use specialized mechanisms for burrowing in granular media, which have inspired the design of many soft robots. To improve the burrowing efficacy of soft robots, there are many grand challenges that must be addressed by roboticists.

[ Faboratory Research ] at [ Yale University ]

Three small lunar rovers were packed up at NASA’s Jet Propulsion Laboratory for the first leg of their multistage journey to the Moon. These suitcase-size rovers, along with a base station and camera system that will record their travels on the lunar surface, make up NASA’s CADRE (Cooperative Autonomous Distributed Robotic Exploration) technology demonstration.

[ NASA ]

MenteeBot V3.0 is a fully vertically integrated humanoid robot, with full-stack AI and proprietary hardware.

[ Mentee Robotics ]

What do assistance robots look like? From robotic arms attached to a wheelchair to autonomous robots that can pick up and carry objects on their own, assistive robots are making a real difference to the lives of people with limited motor control.

[ Cybathlon ]

Robots cannot perform reactive manipulation, and they mostly operate in open loop while interacting with their environment. Consequently, current manipulation algorithms either are very inefficient in performance or can work only in highly structured environments. In this paper, we present closed-loop control of a complex manipulation task where a robot uses a tool to interact with objects.

[ Paper ] via [ Mitsubishi Electric Research Laboratories ]

Thanks, Yuki!

When the future becomes the present, anything is possible. In our latest campaign, “The New Normal,” we highlight the journey our riders experience from first seeing Waymo to relishing in the magic of their first ride. How did your first-ride feeling change the way you think about the possibilities of AVs?

[ Waymo ]

One of a humanoid robot’s unique advantages lies in its bipedal mobility, allowing it to navigate diverse terrains with efficiency and agility. This capability enables Moby to move freely through various environments and assist with high-risk tasks in critical industries like construction, mining, and energy.

[ UCR ]

Although robots are just tools to us, it’s still important to make them somewhat expressive so they can better integrate into our world. So, we created a small animation of the robot waking up—one that it executes all by itself!

[ Pollen Robotics ]

In this live demo, an OTTO AMR expert will walk through the key differences between AGVs and AMRs, highlighting how OTTO AMRs address challenges that AGVs cannot.

[ OTTO ] by [ Rockwell Automation ]

This Carnegie Mellon University Robotics Institute Seminar is from CMU’s Aaron Johnson, on “Uncertainty and Contact with the World.”

As robots move out of the lab and factory and into more challenging environments, uncertainty in the robot’s state, dynamics, and contact conditions becomes a fact of life. In this talk, I’ll present some recent work in handling uncertainty in dynamics and contact conditions, in order to both reduce that uncertainty where we can but also generate strategies that do not require perfect knowledge of the world state.

[ CMU RI ]

-

Are You Ready to Let an AI Agent Use Your Computer?

by Eliza Strickland on 13. February 2025. at 14:00

Two years after the generative AI boom really began with the launch of ChatGPT, it no longer seems that exciting to have a phenomenally helpful AI assistant hanging around in your web browser or phone, just waiting for you to ask it questions. The next big push in AI is for AI agents that can take action on your behalf. But while agentic AI has already arrived for power users like coders, everyday consumers don’t yet have these kinds of AI assistants.

That will soon change. Anthropic, Google DeepMind, and OpenAI have all recently unveiled experimental models that can use computers the way people do—searching the web for information, filling out forms, and clicking buttons. With a little guidance from the human user, they can do things like order groceries, call an Uber, hunt for the best price for a product, or find a flight for your next vacation. And while these early models have limited abilities and aren’t yet widely available, they show the direction that AI is going.

“This is just the AI clicking around,” said OpenAI CEO Sam Altman in a demo video as he watched the OpenAI agent, called Operator, navigate to OpenTable, look up a San Francisco restaurant, and check for a table for two at 7 p.m.

Zachary Lipton, an associate professor of machine learning at Carnegie Mellon University, notes that AI agents are already being embedded in specialized software for different types of enterprise customers such as salespeople, doctors, and lawyers. But until now, we haven’t seen AI agents that can “do routine stuff on your laptop,” he says. “What’s intriguing here is the possibility of people starting to hand over the keys.”

AI Agents from Anthropic, Google DeepMind, and OpenAI

Anthropic was the first to unveil this new functionality, with an announcement in October that its Claude chatbot can now “use computers the way humans do.” The company stressed that it was giving the models this capability as a public beta test, and that it’s only available to developers who are building tools and products on top of Anthropic’s large language models. Claude navigates by viewing screenshots of what the user sees and counting the pixels required to move the cursor to a certain spot for a click. A spokesperson for Anthropic says that Claude can do this work on any computer and within any desktop application.

Next out of the gate was Google DeepMind with its Project Mariner, built on top of Google’s Gemini 2 language model. The company showed Mariner off in December but called it an “early research prototype” and said it’s only making the tool available to “trusted testers” for now. As another precaution, Mariner currently only operates within the Chrome browser, and only within an active tab, meaning that it won’t run in the background while you work on other tasks. While this requirement seems to somewhat defeat the purpose of having a time-saving AI helper, it’s likely just a temporary condition for this early stage of development.

Finally, in January OpenAI launched its computer-use agent (CUA), called Operator. OpenAI called it a “research preview” and made it available only to users who pay US $200 per month for OpenAI’s premium service, though the company said it’s working toward broader release. Yash Kumar, an engineer on the Operator team, says the tool can work with essentially any website. “We’re starting with the browser because this is where the majority of work happens,” Kumar says. But he notes that “the CUA model is also trained to use a computer, so it’s possible we could expand it” to work with other desktop apps.

Like the others, Operator relies on chain-of-thought reasoning to take instructions and break them down into a series of tasks that it can complete. If it needs more information to complete a task—like, for example, if you prefer to buy red or yellow onions—it will pause and ask for input. It also asks for confirmation before taking a final step, like booking the restaurant table or putting in the grocery order.
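The behavior described above can be pictured as a simple control loop: break an instruction into steps, pause when a detail is missing, and ask for confirmation before a final action. The sketch below is purely illustrative Python. `Step`, `run_agent`, and the callback names are hypothetical, and it does not reflect how Operator, Claude, or Mariner are actually implemented.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Step:
    description: str
    needs_input: Optional[str] = None  # question to put to the user, if any
    is_final: bool = False             # consequential action, e.g. placing an order


def run_agent(steps: List[Step],
              ask_user: Callable[[str], str],
              confirm: Callable[[str], bool]) -> List[str]:
    """Walk through planned steps, deferring to the human where required."""
    log = []
    for step in steps:
        if step.needs_input is not None:
            # Pause and ask instead of guessing a preference.
            answer = ask_user(step.needs_input)
            log.append(f"asked: {step.needs_input} -> {answer}")
        if step.is_final and not confirm(step.description):
            # The user declined, so stop before the consequential step.
            log.append(f"stopped before: {step.description}")
            break
        log.append(f"done: {step.description}")
    return log


# Hypothetical plan for the grocery example; a real agent would derive
# these steps from the user's instruction rather than hard-code them.
plan = [
    Step("open the grocery site"),
    Step("add onions to the cart", needs_input="Red or yellow onions?"),
    Step("place the order", is_final=True),
]
log = run_agent(plan, ask_user=lambda q: "yellow", confirm=lambda d: False)
for line in log:
    print(line)
```

Here the stand-in user answers the onion question but declines the final confirmation, so the loop stops without placing the order.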

Safety Concerns for Computer-Use Agents

Here are some things that computer-use agents can’t yet do: log in to sites, agree to terms of service, solve captchas, and enter credit card or other payment details. If an agent comes up against one of these roadblocks, it hands the steering wheel back to the human user. OpenAI notes that Operator doesn’t take screenshots of the browser while the user is entering login or payment information.

The three companies have all noted that putting an AI in charge of your computer could pose safety risks. Anthropic has specifically raised the concern of prompt injection attacks, or ways in which malicious actors can add something to the user’s prompt to make the model take an unexpected action. “Since Claude can interpret screenshots from computers connected to the internet, it’s possible that it may be exposed to content that includes prompt injection attacks,” Anthropic wrote in a blog post.

CMU’s Lipton says that the companies haven’t revealed much information about the computer-use agents and how they work, so it’s hard to assess the risks. “If someone is getting your computer operator to do something nefarious, does that mean they already have access to your computer?” he wonders, and if so, why wouldn’t the miscreant just take action directly?

Still, Lipton says, with all the actions we take and purchases we make online, “It doesn’t require a wild leap of imagination to imagine actions that would leave the user in a pickle.” For example, he says, “Who will be the first person who wakes up and says, ‘My [agent] bought me a fleet of cars?’”

The Future of Computer-Use Agents

While none of the companies have revealed a timeline for making their computer-use agents broadly available, it seems likely that consumers will begin to get access to them this year—either through the big AI companies or through startups creating cheaper knockoffs.

OpenAI’s Kumar says it’s an exciting time, and that Operator marks a step toward a more collaborative future for humans and AI. “It’s a stepping stone on our path to AGI,” he says, referring to the long-promised dream/nightmare of artificial general intelligence. “The ability to use the same interfaces and tools that humans interact with on a daily basis broadens the utility of AI, helping people save time on everyday tasks.”

If you remember the prescient 2013 movie Her, it seems like we’re edging toward the world that existed at the beginning of the film, before the sultry-voiced Samantha began speaking into the protagonist’s ear. It’s a world in which everyone has a boring and neutral AI to help them read and respond to messages and take care of other mundane tasks. Once the AI companies solidly achieve that goal, they’ll no doubt start working on Samantha.

-

IEEE Unveils the 2025–2030 Strategic Plan

by IEEE on 12. February 2025. at 19:00

IEEE’s 2020–2025 strategic plan set direction for the organization and informed its efforts over the last four years. The IEEE Board of Directors, supported by the IEEE Strategy and Alignment Committee, has updated the goals of the plan, which now covers 2025 through 2030. Even though the goals have been updated, IEEE’s mission and vision remain constant.

The 2025–2030 IEEE Strategic Plan’s six new goals focus on furthering the organization’s role as a leading trusted source, driving technological innovation ethically and with integrity, enabling interdisciplinary opportunities, inspiring future generations of technologists, further engaging the public, and empowering technology professionals throughout their careers.

Together with IEEE’s steadfast mission, vision, and core values, the plan will guide the organization’s priorities.

“The IEEE Strategic Plan provides the ‘North Star’ for IEEE activities,” says Kathleen Kramer, 2025 IEEE president and CEO. “It offers aspirational, guiding priorities to steer us for the near future. IEEE organizational units are aligning their initiatives to these goals so we may move forward as One IEEE.”

Input from a variety of groups

To gain input for the new strategic plan from the IEEE community, in-depth stakeholder interviews were conducted with the Board of Directors, senior professional staff leadership, young professionals, students, and others. IEEE also surveyed more than 35,000 individuals including volunteers; members and nonmembers; IEEE young professionals and student members; and representatives from industry. In-person focus groups were conducted in eight locations around the globe.

The goals were ideated through working sessions with the IEEE directors, directors-elect, senior professional staff leadership, and the IEEE Strategy and Alignment Committee, culminating with the Board approving them at its November 2024 meeting.

These six new goals will guide IEEE through the near future:

- Advance science and technology as a leading trusted source of information for research, development, standards, and public policy

- Drive technological innovation while promoting scientific integrity and the ethical development and use of technology

- Provide opportunities for technology-related interdisciplinary collaboration, research, and knowledge sharing across industry, academia, and government

- Inspire intellectual curiosity and support discovery and invention to engage the next generation of technology innovators

- Expand public awareness of the significant role that engineering, science, and technology play across the globe

- Empower technology professionals in their careers through ongoing education, mentoring, networking, and lifelong engagement

Work on the next phase is ongoing. It is designed to guide the organization in cascading the goals into tactical objectives, so that each organizational unit’s efforts align with the holistic IEEE strategy. Aligning organizational unit strategic planning with the broader IEEE Strategic Plan is an important next step.

In delivering on its strategic plan, IEEE will continue to foster a collaborative environment that is open, inclusive, and free of bias. The organization also will continue to sustain its strength, reach, and vitality for future generations and ensure its role as a 501(c)(3) public charity.

-

Dual-Arm HyQReal Puts Powerful Telepresence Anywhere

by Evan Ackerman on 11. February 2025. at 16:00

In theory, one of the main applications for robots should be operating in environments that (for whatever reason) are too dangerous for humans. I say “in theory” because in practice it’s difficult to get robots to do useful stuff in semi-structured or unstructured environments without direct human supervision. This is why there’s been some emphasis recently on teleoperation: Human software teaming up with robot hardware can be a very effective combination.

For this combination to work, you need two things. First, an intuitive control system that lets the user embody themselves in the robot to pilot it effectively. And second, a robot that can deliver on the kind of embodiment that the human pilot needs. The second bit is the more challenging, because humans have very high standards for mobility, strength, and dexterity. But researchers at the Italian Institute of Technology (IIT) have a system that manages to check both boxes, thanks to its enormously powerful quadruped, which now sports a pair of massive arms on its head.

“The primary goal of this project, conducted in collaboration with INAIL, is to extend human capabilities to the robot, allowing operators to perform complex tasks remotely in hazardous and unstructured environments to mitigate risks to their safety by exploiting the robot’s capabilities,” explains Claudio Semini, who leads the Robot Teleoperativo project at IIT. The project is based around the HyQReal hydraulic quadruped, the most recent addition to IIT’s quadruped family.

Hydraulics have been very visibly falling out of favor in robotics, because they’re complicated and messy, and in general robots don’t need the absurd power density that comes with hydraulics. But there are still a few robots in active development that use hydraulics specifically because of all that power. If your robot needs to be highly dynamic or lift really heavy things, hydraulics are, at least for now, where it’s at.

IIT’s HyQReal quadruped is one of those robots. If you need something that can carry a big payload, like a pair of massive arms, this is your robot. Back in 2019, we saw HyQReal pulling a three-tonne airplane. HyQReal itself weighs 140 kilograms, and its knee joints can output up to 300 newton-meters of torque. The hydraulic system is powered by onboard batteries and can provide up to 4 kilowatts of power. It also uses some of Moog’s lovely integrated smart actuators, which sadly don’t seem to be in development anymore. Beyond just lifting heavy things, HyQReal’s mass and power make it a very stable platform, and its aluminum roll cage and Kevlar skin ensure robustness.

The HyQReal hydraulic quadruped is tethered for power during experiments at IIT, but it can also run on battery power. IIT

The arms that HyQReal is carrying are IIT-INAIL arms, which weigh 10 kg each and have a payload of 5 kg per arm. To put that in perspective, the maximum payload of a Boston Dynamics Spot robot is only 14 kg. The head-mounted configuration of the arms means they can reach the ground, and they also have an overlapping workspace to enable bimanual manipulation, which is enhanced by HyQReal’s ability to move its body to assist the arms with their reach. “The development of core actuation technologies with high power, low weight, and advanced control has been a key enabler in our efforts,” says Nikos Tsagarakis, head of the HHCM Lab at IIT. “These technologies have allowed us to realize a low-weight bimanual manipulation system with high payload capacity and large workspace, suitable for integration with HyQReal.”

Maximizing reachable space is important, because the robot will be under the remote control of a human, who probably has no particular interest in or care for mechanical or power constraints—they just want to get the job done.

To get the job done, IIT has developed a teleoperation system, which is weird-looking because it features a very large workspace that allows the user to leverage more of the robot’s full range of motion. Having tried a bunch of different robotic telepresence systems, I can vouch for how important this is: It’s super annoying to be doing some task through telepresence, and then hit a joint limit on the robot and have to pause to reset your arm position. “That is why it is important to study operators’ quality of experience. It allows us to design the haptic and teleoperation systems appropriately because it provides insights into the levels of delight or frustration associated with immersive visualization, haptic feedback, robot control, and task performance,” confirms Ioannis Sarakoglou, who is responsible for the development of the haptic teleoperation technologies in the HHCM Lab. The whole thing takes place in a fully immersive VR environment, of course, with some clever bandwidth optimization inspired by the way humans see that transmits higher-resolution images only where the user is looking.

HyQReal’s telepresence control system offers haptic feedback and a large workspace. IIT

Telepresence Robots for the Real World

The system is designed to be used in hazardous environments where you wouldn’t want to send a human. That’s why IIT’s partner on this project is INAIL, Italy’s National Institute for Insurance Against Accidents at Work, which is understandably quite interested in finding ways in which robots can be used to keep humans out of harm’s way.

In tests with Italian firefighters in 2022 (using an earlier version of the robot with a single arm), operators were able to use the system to extinguish a simulated tunnel fire. It’s a good first step, but Semini has plans to push the system to handle “more complex, heavy, and demanding tasks, which will better show its potential for real-world applications.”

As always with robots and real-world applications, there’s still a lot of work to be done, Semini says. “The reliability and durability of the systems in extreme environments have to be improved,” he says. “For instance, the robot must endure intense heat and prolonged flame exposure in firefighting without compromising its operational performance or structural integrity.” There’s also managing the robot’s energy consumption (which is not small) to give it a useful operating time, and managing the amount of information presented to the operator to boost situational awareness while still keeping things streamlined and efficient. “Overloading operators with too much information increases cognitive burden, while too little can lead to errors and reduce situational awareness,” says Yonas Tefera, who led the development of the immersive interface. “Advances in immersive mixed-reality interfaces and multimodal controls, such as voice commands and eye tracking, are expected to improve efficiency and reduce fatigue in the future.”

There’s a lot of crossover here with the goals of the DARPA Robotics Challenge for humanoid robots, except IIT’s system is arguably much more realistically deployable than any of those humanoids are, at least in the near term. While we’re just starting to see the potential of humanoids in carefully controlled environments, quadrupeds are already out there in the world, proving how reliable their four-legged mobility is. Manipulation is the obvious next step, but it has to be more than just opening doors. We need it to use tools, lift debris, and all that other DARPA Robotics Challenge stuff that will keep humans safe. That’s what Robot Teleoperativo is trying to make real.

You can find more detail about the Robot Teleoperativo project in this paper, presented in November at the 2024 IEEE Conference on Telepresence, in Pasadena, Calif.

-

Celebrating Steve Jobs’s Impact on Consumer Tech and Design

by San Murugesan on 10. February 2025. at 19:00

Although Apple cofounder Steve Jobs died on 5 October 2011 at age 56, his legacy endures. His name remains synonymous with innovation, creativity, and the relentless pursuit of excellence. As a pioneer in technology and design, Jobs dared to imagine the impossible, transforming industries and reshaping human interaction with technology. His work continues to inspire engineers, scientists, and technologists worldwide. His contributions to technology, design, and human-centric innovation shape the modern world.

On the eve of what would have been his 70th birthday, 24 February, I examine his legacy, its contemporary relevance, and the enduring lessons that can guide us toward advancing technology for the benefit of humanity.

Jobs’s lasting impact: A revolution in technology

Jobs was more than a successful tech entrepreneur; he was a visionary who changed the world through his unyielding drive for innovation. He revolutionized many areas including computing, telecommunications, entertainment, and design. The products and services he pioneered have become integral to modern life and form the foundation for further technological advancements.

Celebrated for his vision, he also was criticized for his short temper, impatience, and lack of empathy. His autocratic, demanding leadership alienated colleagues and caused conflicts. But those traits also fueled innovation and offered lessons in both leadership pitfalls and aspirations.

Here are some of what I consider to be his most iconic innovations and contributions.