IEEE Spectrum

-

Solar-Powered Tech Transforms Remote Learning

by Maurizio Arseni on 19. April 2025. at 13:00

When Marc Alan Sperber, of Arizona State University’s Education for Humanity initiative, arrived at a refugee camp along the Thai-Myanmar border, the scene was typical of many crisis zones: no internet, unreliable power, and few resources. But within minutes, he and local NGO partners were able to set up a full-featured digital classroom using nothing more than a solar panel and a yellow device the size of a soup can.

Students equipped with only basic smartphones and old tablets were accessing content through Beekee, a Swiss-built lightweight standalone microserver that can turn any location into an offline-first, pop-up digital classroom.

While international initiatives like Giga try to connect every school to the Internet, the timeline and cost remain hard to predict. And even then, according to UNESCO’s Global Education Monitoring Report, keeping schools in low-income countries online could run up to a billion dollars a day.

Beekee, founded by Vincent Widmer and Sergio Estupiñán during their PhD studies at the educational technology department of the University of Geneva, seeks to bridge the connectivity gap through its easy-to-deploy device.

At the core of Beekee’s box is a Raspberry Pi-based microserver, enclosed in a ventilated 3D-printed, thermoresistant, plastic shell. Optimized for passive and active cooling, weather resilience, and field repairs, it can withstand heat in arid climates like that of Jordan and northern Kenya.

With its devices often deployed in remote regions, where repair options are few, Beekee supplies 3D print-friendly STL and G-code files to partners, enabling them to fabricate replacement parts on a 3D printer. “We’ve seen them use recycled plastic filament in Kenya and Lebanon to print replacement parts within days,” says Estupiñán.

The device consumes less than 10 watts of power, making it easy to run for over 12 hours on an inexpensive 20,000 milliamp-hour (mAh) power bank. Alternatively, Beekee can run on compact solar panels, where battery backup can provide up to two hours even on a cloudy day or at night. “This kind of energy efficiency is essential,” says Marcel Hertel of GIZ, the German development agency that uses Beekee in Indonesia as a digital learning platform, accessible to farmers in remote areas for training. “We work where even charging a phone is a challenge,” he says.
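To see how the power-bank figures relate, here is a rough back-of-the-envelope sketch. The 3.7-volt nominal cell voltage and the 85 percent conversion efficiency are assumptions for illustration, not Beekee specifications, and the function name is hypothetical.

```python
def runtime_hours(capacity_mah: float, avg_draw_w: float,
                  cell_voltage_v: float = 3.7,   # assumed nominal Li-ion cell voltage
                  efficiency: float = 0.85) -> float:  # assumed conversion efficiency
    """Estimate how long a power bank can feed a constant load."""
    energy_wh = capacity_mah / 1000 * cell_voltage_v  # energy stored in the cells
    usable_wh = energy_wh * efficiency                # energy left after conversion losses
    return usable_wh / avg_draw_w

# Compare a few hypothetical average draws against the 20,000 mAh bank.
for draw_w in (10.0, 7.5, 5.0):
    print(f"{draw_w:4.1f} W -> {runtime_hours(20_000, draw_w):.1f} h")
```

Under these assumptions, a runtime of more than 12 hours corresponds to an average draw of roughly 5 watts, comfortably below the 10-watt ceiling quoted above.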

The device runs on a custom Linux distribution and open-source software stack. Its Wi-Fi hotspot has a 40-meter range, providing coverage enough for two adjacent classrooms or a small courtyard. Up to 40 learners can connect simultaneously using their smartphones, tablets, or laptops, without apps or internet access needed. Beekee’s interface is browser-based.

However, the yellow box isn’t meant to replace the internet. It’s designed to complement it, syncing over 3G or 4G connections whenever bandwidth is available.

In many deployment zones, 3G/4G connectivity exists but is fragile. Mobile networks suffer from speed caps, high data costs, and congestion. Streaming educational content or relying on cloud platforms becomes impractical. But satellite-based internet connectivity, including emerging LEO satellite providers like Starlink, still provides windows of opportunity to download and upload content on the yellow box.

Beekee’s replacement part 3D design files can be used for remotely repairing the organization’s rugged e-learning boxes, using only a screwdriver and a 3D printer. Beekee

Offline Moodle for E-Learning

Beekee hosts e-learning tools for teachers and students, offering an offline instance of Moodle, an open-source learning management system. Via Moodle, educators can use SCORM packages and H5P modules, technical standards commonly used to package and deliver e-learning material.

“Beekee is designed to interoperate with existing training platforms,” says Estupiñán. “We sync learner progress, content updates, and analytics without changing how an organization already works.”

Beekee also comes with Open Educational Resources (OER), including Offline Wikipedia, Khan Academy videos in multiple languages, and curated instructional content. “We don’t want just to deliver content,” says Estupiñán, “but also create a collaborative, engaging learning environment.”

Before turning to Beekee, some organizations attempted to create their own offline learning platforms or worked with third-party developers.

Some of them overlooked realities like extreme heat, power outages, and near-zero internet bandwidth—while others tried solutions that were essentially file libraries masquerading as learning platforms.

“Most standalone systems don’t support remote updates or syncing of learner data and analytics,” says Sperber. “They delivered PDFs, not actual learning experiences that include interactive practice, assessment, feedback, or anything of the like.”

Additionally, many of the systems lacked sustainable maintenance strategies and devices broke down under field conditions. “The tech might have looked sleek, but when things failed, there was no repair plan,” says Estupiñán. “We designed Beekee so that even non-specialist users could fix things with a screwdriver and a local 3D printer.”

Beekee runs its own production line using a 3D printer farm in Geneva, capable of producing up to 30 custom units per day. But it doesn’t make only hardware. It also offers training, instructional design support, and ongoing technical help. “The real challenge isn’t just getting technology into the field, it’s keeping it running,” says Estupiñán.

The Next Frontier: Offline AI

Future plans include integrating small language models (SLMs) directly into the box. A lightweight AI engine could automate tasks like grading, flagging conceptual errors, or supporting teachers with localized lesson plans.

“Offline AI is the next big step,” says Estupiñán. “It lets us bring intelligent support to teachers who may be isolated, undertrained, or overwhelmed.”

Beekee has partnered with more than 40 organizations across nearly 30 countries. Founded five years ago, the company now has a team of seven. It recently joined UNESCO’s Global Education Coalition alongside Coursera, Microsoft, and Google. Even though Beekee is primarily used in low-resource environments, its offline-first design is now drawing interest in broader contexts.

In France and Switzerland, secondary schools are beginning to use Beekee devices to give students digital access without exposing them fully to the internet during class. Teachers use them for outdoor projects, such as biology fieldwork, allowing students to share photos and notes over a local network. “The system is also being considered for secure, offline learning in correction facilities, and companies are exploring its potential for training in isolated, privacy-sensitive settings,” says Widmer.

-

Video Friday: Robot Boxing

by Evan Ackerman on 18. April 2025. at 16:00

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We also post a weekly calendar of upcoming robotics events for the next few months. Please send us your events for inclusion.

RoboSoft 2025: 23–26 April 2025, LAUSANNE, SWITZERLAND

ICUAS 2025: 14–17 May 2025, CHARLOTTE, NC

ICRA 2025: 19–23 May 2025, ATLANTA, GA

London Humanoids Summit: 29–30 May 2025, LONDON

IEEE RCAR 2025: 1–6 June 2025, TOYAMA, JAPAN

2025 Energy Drone & Robotics Summit: 16–18 June 2025, HOUSTON, TX

RSS 2025: 21–25 June 2025, LOS ANGELES

ETH Robotics Summer School: 21–27 June 2025, GENEVA

IAS 2025: 30 June–4 July 2025, GENOA, ITALY

ICRES 2025: 3–4 July 2025, PORTO, PORTUGAL

IEEE World Haptics: 8–11 July 2025, SUWON, KOREA

IFAC Symposium on Robotics: 15–18 July 2025, PARIS

RoboCup 2025: 15–21 July 2025, BAHIA, BRAZIL

RO-MAN 2025: 25–29 August 2025, EINDHOVEN, THE NETHERLANDS

CLAWAR 2025: 5–7 September 2025, SHENZHEN

CoRL 2025: 27–30 September 2025, SEOUL

IEEE Humanoids: 30 September–2 October 2025, SEOUL

World Robot Summit: 10–12 October 2025, OSAKA, JAPAN

IROS 2025: 19–25 October 2025, HANGZHOU, CHINA

Enjoy today’s videos!

Let’s step into a new era of Sci-Fi, join the fun together! Unitree will be livestreaming robot combat in about a month, stay tuned!

[ Unitree ]

A team of scientists and students from Delft University of Technology in the Netherlands (TU Delft) has taken first place at the A2RL Drone Championship in Abu Dhabi, an international race that pushes the limits of physical artificial intelligence, challenging teams to fly fully autonomous drones using only a single camera. The TU Delft drone competed against 13 autonomous drones and even human drone racing champions, using innovative methods to train deep neural networks for high-performance control.

[ TU Delft ]

RAI’s Ultra Mobile Vehicle (UMV) is learning some new tricks!

[ RAI Institute ]

With 28 moving joints (20 QDD actuators + 8 servo motors), Cosmo can walk with its two feet with a speed of up to 1 m/s (0.5 m/s nominal) and balance itself even when pushed. Coordinated with the motion of its head, fingers, arms and legs, Cosmo has a loud and expressive voice for effective interaction with humans. Cosmo speaks in canned phrases from the 90’s cartoon he originates from and his speech can be fully localized in any language.

[ RoMeLa ]

We wrote about Parallel Systems back in January of 2022, and it’s good to see that their creative take on autonomous rail is still moving along.

[ Parallel Systems ]

RoboCake is ready. This edible robotic cake is the result of a collaboration between researchers from EPFL (the Swiss Federal Institute of Technology in Lausanne), the Istituto Italiano di Tecnologia (IIT-Italian Institute of Technology) and pastry chefs and food scientists from EHL in Lausanne. It takes the form of a robotic wedding cake, decorated with two gummy robotic bears and edible dark chocolate batteries that power the candles.

[ EPFL ]

ROBOTERA’s fully self-developed five-finger dexterous hand has upgraded its skills, transforming into an esports hand in the blink of an eye! The XHAND1 features 12 active degrees of freedom, pioneering an industry-first fully direct-drive joint design. It offers exceptional flexibility and sensitivity, effortlessly handling precision tasks like finger opposition, picking up soft objects, and grabbing cards. Additionally, it delivers powerful grip strength with a maximum payload of nearly 25 kilograms, making it adaptable to a wide range of complex application scenarios.

[ ROBOTERA ]

Witness the future of industrial automation as Extend Robotics trials their cutting-edge humanoid robot in Leyland factories. In this groundbreaking video, see how the robot skillfully connects a master service disconnect unit—a critical task in factory operations. Watch onsite workers seamlessly collaborate with the robot using an intuitive XR (extended reality) interface, blending human expertise with robotic precision.

[ Extend Robotics ]

I kind of like the idea of having a mobile robot that lives in my garage and manages the charging and cleaning of my car.

[ Flexiv ]

How can we ensure robots using foundation models, such as large language models (LLMs), won’t “hallucinate” when executing tasks in complex, previously unseen environments? Our Safe and Assured Foundation Robots for Open Environments (SAFRON) Advanced Research Concept (ARC) seeks ideas to make sure robots behave only as directed & intended.

[ DARPA ]

What if doing your chores were as easy as flipping a switch? In this talk and live demo, roboticist and founder of 1X Bernt Børnich introduces NEO, a humanoid robot designed to help you out around the house. Watch as NEO shows off its ability to vacuum, water plants and keep you company, while Børnich tells the story of its development — and shares a vision for robot helpers that could free up your time to focus on what truly matters.

Rodney Brooks gave a keynote at the Stanford HAI spring conference on Robotics in a Human-Centered World.

There are a bunch of excellent talks from this conference on YouTube at the link below, but I think this panel is especially good, as a discussion of going from research to real-world impact.

[ YouTube ] via [ Stanford HAI ]

Wing CEO Adam Woodworth discusses consumer drone delivery with Peter Diamandis at Abundance 360.

[ Wing ]

This CMU RI Seminar is from Sangbae Kim, who was until very recently at MIT but is now the Robotics Architect at Meta’s Robotics Studio.

[ CMU RI ]

-

Bell Labs Turns 100, Plans to Leave Its Old Headquarters

by Dina Genkina on 18. April 2025. at 13:00

This year, Bell Labs celebrates its hundredth birthday. In a centennial celebration held last week at the Murray Hill, New Jersey campus, the lab’s impressive technological history was celebrated with talks, panels, demos, and over a half dozen gracefully aging Nobel laureates.

During its 100-year tenure, Bell Labs scientists invented the transistor, laid down the theoretical grounding for the digital age, discovered radio astronomy, which led to the first evidence in favor of the big bang theory, contributed to the invention of the laser, developed the Unix operating system, invented the charge-coupled device (CCD) camera, and made many more scientific and technological contributions that have earned Bell Labs ten Nobel Prizes and five Turing Awards.

“I normally tell people, this is the ‘Bell Labs invented everything’ tour,” said Nokia Bell Labs archivist Ed Eckert as he led a tour through the lab’s history exhibit.

The lab is smaller than it once was. The main campus in Murray Hill, New Jersey, looks a bit like a ghost town, with empty cubicles and offices lining the halls. Now, it’s planning a move to a smaller facility in New Brunswick, New Jersey, sometime in 2027. In its heyday, Bell Labs boasted around 6,000 workers at the Murray Hill location. Although that number has now dwindled to about 1,000, more work at other locations around the world.

The Many Accomplishments of Bell Labs

Despite its somewhat diminished size, Bell Labs, now owned by Nokia, is alive and kicking.

“As Nokia Bell Labs, we have a dual mission,” says Bell Labs president Peter Vetter. “On the one hand, we need to support the longevity of the core business. That is networks, mobile networks, optical networks, the networking at large, security, device research, ASICs, optical components that support that network system. And then we also have the second part of the mission, which is help the company grow into new areas.”

Some of the new areas for growth were represented in live demonstrations at the centennial.

A team at Bell Labs is working on establishing the first cellular network on the moon. In February, Intuitive Machines sent their second lunar mission, Athena, with Bell Labs’ technology on board. The team fit two full cellular networks into a briefcase-sized box, the most compact networking system ever made. This cell network was self-deploying: Nobody on Earth needed to tell it what to do. Although the lunar lander tipped on its side upon landing and quickly went offline due to lack of solar power, Bell Labs’ networking module had enough time to power up and transmit data back to Earth.

Another Bell Labs group is focused on monitoring the world’s vast network of undersea fiber-optic cables. Undersea cables are subject to interruptions, be it from adversarial sabotage, natural events like earthquakes or tsunamis, or fishing nets and ship anchors. The team wants to turn these cables into a sensor network, capable of monitoring the environment around a cable for possible damage. The team has developed a real-time technique for monitoring mild changes in cable length, so sensitive that the lab-based demo was able to pick up tiny vibrations from the presenter’s speaking voice. This technique can pin changes down to a 10-kilometer interval of cable, greatly simplifying the search for affected regions.

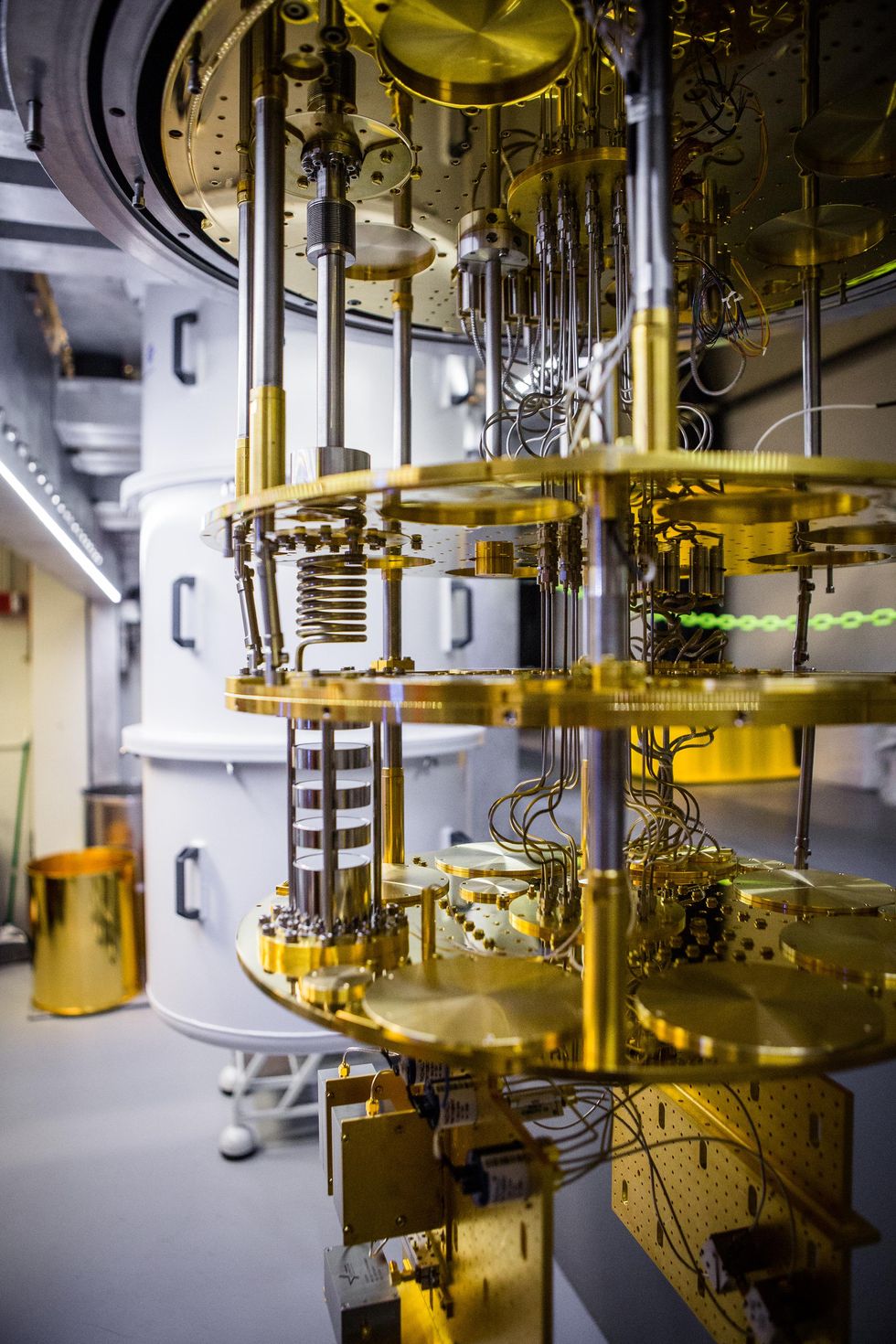

Nokia is taking the path less traveled when it comes to quantum computing, pursuing so-called topological quantum bits. These qubits, if made, would be much more robust to noise than other approaches, and are more readily amenable to scaling. However, building even a single qubit of this kind has been elusive. Nokia Bell Labs’ Robert Willett has been at it since his graduate work in 1988, and the team expects to demonstrate the first NOT gate with this architecture later this year.

Beam-steering antennas for point-to-point fixed wireless are normally made on printed circuit boards. But as the world goes to higher frequencies, toward 6G, conventional printed circuit board materials are no longer cutting it—the signal loss makes them economically unviable. That’s why a team at Nokia Bell Labs has developed a way to print circuit boards on glass instead. The result is a small glass chip that has 64 integrated circuits on one side and the antenna array on the other. A 100 gigahertz link using the tech was deployed at the Paris Olympics in 2024, and a commercial product is on the roadmap for 2027.

Mining, particularly autonomous mining that avoids putting humans in harm’s way, relies heavily on networking. That’s why Nokia has entered the mining business, developing smart digital twin technology that models the mine and the autonomous trucks that work on it. Their robo-truck system features two cellular modems, three Wi-Fi cards, and twelve Ethernet ports. The system collects different types of sensor data and correlates them on a virtual map of the mine (the digital twin). Then, it uses AI to suggest necessary maintenance and to optimize scheduling.

The lab is also dipping into AI. One team is working on integrating large language models with robots for industrial applications. These robots have access to a digital twin model of the space they are in and have a semantic representation of certain objects in their surroundings. In a demo, a robot was verbally asked to identify missing boxes in a rack, and it successfully pointed out which box wasn’t found in its intended place and, when prompted, traveled to the storage area and identified the replacement. The key is to build robots that can “reason about the physical world,” says Matthew Andrews, a researcher in the AI lab. A test system will be deployed in a warehouse in the United Arab Emirates in the next six months.

Despite impressive scientific demonstrations, there was an air of apprehension about the event. In a panel discussion about the future of innovation, Princeton engineering dean Andrea Goldsmith said, “I’ve never been more worried about the innovation ecosystem in the US.” Former Google CEO Eric Schmidt said in a keynote that “The current administration seems to be trying to destroy university R&D.” Nevertheless, Schmidt and others expressed optimism about the future of innovation at Bell Labs and the US more generally. “We will win, because we are right and R&D is the foundation of economic growth,” he said.

-

The Future of AI and Robotics Is Being Led by Amazon’s Next-Gen Warehouses

by Dexter Johnson on 17. April 2025. at 11:09

This is a sponsored article brought to you by Amazon.

The cutting edge of robotics and artificial intelligence (AI) isn’t found only at NASA or in the top university labs. Increasingly, it is being developed in the warehouses of the e-commerce company Amazon. As online shopping continues to grow, companies like Amazon are pushing the boundaries of these technologies to meet consumer expectations.

Warehouses, the backbone of the global supply chain, are undergoing a transformation driven by technological innovation. Amazon, at the forefront of this revolution, is leveraging robotics and AI to shape the warehouses of the future. Far from being just a logistics organization, Amazon is positioning itself as a leader in technological innovation, making it a prime destination for engineers and scientists seeking to shape the future of automation.

Amazon: A Leader in Technological Innovation

Amazon’s success in e-commerce is built on a foundation of continuous technological innovation. Its fulfillment centers are increasingly becoming hubs of cutting-edge technology where robotics and AI play a pivotal role. Heath Ruder, Director of Product Management at Amazon, explains how Amazon’s approach to integrating robotics with advanced material handling equipment is shaping the future of its warehouses.

“We’re integrating several large-scale products into our next-generation fulfillment center in Shreveport, Louisiana,” says Ruder. “It’s our first opportunity to get our robotics systems combined under one roof and understand the end-to-end mechanics of how a building can run with incorporated autonomation.” Ruder is referring to the facility’s deployment of its Automated Storage and Retrieval Systems (ASRS), called Sequoia, as well as robotic arms like “Robin” and “Cardinal” and Amazon’s proprietary autonomous mobile robot, “Proteus”.

Amazon has already deployed “Robin”, a robotic arm that sorts packages for outbound shipping by transferring packages from conveyors to mobile robots. This system is already in use across various Amazon fulfillment centers and has completed over three billion successful package moves. “Cardinal” is another robotic arm system that efficiently packs packages into carts before the carts are loaded onto delivery trucks.

“Proteus” is Amazon’s autonomous mobile robot designed to work around people. Unlike traditional robots confined to a restricted area, Proteus is fully autonomous and navigates through fulfillment centers using sensors and a mix of AI-based and ML systems. It works with human workers and other robots to transport carts full of packages more efficiently.

The integration of these technologies is estimated to increase operational efficiency by 25 percent. “Our goal is to improve speed, quality, and cost. The efficiency gains we’re seeing from these systems are substantial,” says Ruder. However, the real challenge is scaling this technology across Amazon’s global network of fulfillment centers. “Shreveport was our testing ground and we are excited about what we have learned and will apply at our next building launching in 2025.”

Amazon’s investment in cutting-edge robotics and AI systems is not just about operational efficiency. It underscores the company’s commitment to being a leader in technological innovation and workplace safety, making it a top destination for engineers and scientists looking to solve complex, real-world problems.

How AI Models Are Trained: Learning from the Real World

One of the most complex challenges Amazon’s robotics team faces is how to make robots capable of handling a wide variety of tasks that require discernment. Mike Wolf, a principal scientist at Amazon Robotics, plays a key role in developing AI models that enable robots to better manipulate objects, across a nearly infinite variety of scenarios.

“The complexity of Amazon’s product catalog—hundreds of millions of unique items—demands advanced AI systems that can make real-time decisions about object handling,” explains Wolf. But how do these AI systems learn to handle such an immense variety of objects? Wolf’s team is developing machine learning algorithms that enable robots to learn from experience.

“We’re developing the next generation of AI and robotics. For anyone interested in this field, Amazon is the place where you can make a difference on a global scale.” —Mike Wolf, Amazon Robotics

In fact, robots at Amazon continuously gather data from their interactions with objects, refining their ability to predict how items will be affected when manipulated. Every interaction a robot has—whether it’s picking up a package or placing it into a container—feeds back into the system, refining the AI model and helping the robot to improve. “AI is continually learning from failure cases,” says Wolf. “Every time a robot fails to complete a task successfully, that’s actually an opportunity for the system to learn and improve.” This data-centric approach supports the development of state-of-the-art AI systems that can perform increasingly complex tasks, such as predicting how objects are affected when manipulated. This predictive ability will help robots determine the best way to pack irregularly shaped objects into containers or handle fragile items without damaging them.

“We want AI that understands the physics of the environment, not just basic object recognition. The goal is to predict how objects will move and interact with one another in real time,” Wolf says.

What’s Next in Warehouse Automation

Valerie Samzun, Senior Technical Product Manager at Amazon, leads a cutting-edge robotics program that aims to enhance workplace safety and make jobs more rewarding, fulfilling, and intellectually stimulating by allowing robots to handle repetitive tasks.

“The goal is to reduce certain repetitive and physically demanding tasks from associates,” explains Samzun. “This allows them to focus on higher-value tasks in skilled roles.” This shift not only makes warehouse operations more efficient but also opens up new opportunities for workers to advance their careers by developing new technical skills.

“Our research combines several cutting-edge technologies,” Samzun shared. “The project uses robotic arms equipped with compliant manipulation tools to detect the amount of force needed to move items without damaging them or other items.” This is an advancement that incorporates learnings from previous Amazon robotics projects. “This approach allows our robots to understand how to interact with different objects in a way that’s safe and efficient,” says Samzun. In addition to robotic manipulation, the project relies heavily on AI-driven algorithms that determine the best way to handle items and utilize space.

Samzun believes the technology will eventually expand to other parts of Amazon’s operations, finding multiple applications across its vast network. “The potential applications for compliant manipulation are huge,” she says.

Attracting Engineers and Scientists: Why Amazon is the Place to Be

As Amazon continues to push the boundaries of what’s possible with robotics and AI, it’s also becoming a highly attractive destination for engineers, scientists, and technical professionals. Both Wolf and Samzun emphasize the unique opportunities Amazon offers to those interested in solving real-world problems at scale.

For Wolf, who transitioned to Amazon from NASA’s Jet Propulsion Laboratory, the appeal lies in the sheer impact of the work. “The draw of Amazon is the ability to see your work deployed at scale. There’s no other place in the world where you can see your robotics work making a direct impact on millions of people’s lives every day,” he says. Wolf also highlights the collaborative nature of Amazon’s technical teams. Whether working on AI algorithms or robotic hardware, scientists and engineers at Amazon are constantly collaborating to solve new challenges.

Amazon’s culture of innovation extends beyond just technology. It’s also about empowering people. Samzun, who comes from a non-engineering background, points out that Amazon is a place where anyone with the right mindset can thrive, regardless of their academic background. “I came from a business management background and found myself leading a robotics project,” she says. “Amazon provides the platform for you to grow, learn new skills, and work on some of the most exciting projects in the world.”

For young engineers and scientists, Amazon offers a unique opportunity to work on state-of-the-art technology that has real-world impact. “We’re developing the next generation of AI and robotics,” says Wolf. “For anyone interested in this field, Amazon is the place where you can make a difference on a global scale.”

The Future of Warehousing: A Fusion of Technology and Talent

From Amazon’s leadership, it’s clear that the future of warehousing is about more than just automation. It’s about harnessing the power of robotics and AI to create smarter, more efficient, and safer working environments. But at its core it remains centered on people in its operations and those who make this technology possible—engineers, scientists, and technical professionals who are driven to solve some of the world’s most complex problems.

Amazon’s commitment to innovation, combined with its vast operational scale, makes it a leader in warehouse automation. The company’s focus on integrating robotics, AI, and human collaboration is transforming how goods are processed, stored, and delivered. And with so many innovative projects underway, the future of Amazon’s warehouses is one where technology and human ingenuity work hand in hand.

“We’re building systems that push the limits of robotics and AI,” says Wolf. “If you want to work on the cutting edge, this is the place to be.”

-

Future Chips Will Be Hotter Than Ever

by James Myers on 16. April 2025. at 13:30

For over 50 years now, egged on by the seeming inevitability of Moore’s Law, engineers have managed to double the number of transistors they can pack into the same area every two years. But while the industry was chasing logic density, an unwanted side effect became more prominent: heat.

In a system-on-chip (SoC) like today’s CPUs and GPUs, temperature affects performance, power consumption, and energy efficiency. Over time, excessive heat can slow the propagation of critical signals in a processor and lead to a permanent degradation of a chip’s performance. It also causes transistors to leak more current and as a result waste power. In turn, the increased power consumption cripples the energy efficiency of the chip, as more and more energy is required to perform the exact same tasks.

The root of the problem lies with the end of another law: Dennard scaling. This law states that as the linear dimensions of transistors shrink, voltage should decrease such that the total power consumption for a given area remains constant. Dennard scaling effectively ended in the mid-2000s at the point where any further reductions in voltage were not feasible without compromising the overall functionality of transistors. Consequently, while the density of logic circuits continued to grow, power density did as well, generating heat as a by-product.
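The scaling argument above can be checked with a few lines of arithmetic. This is an idealized sketch using the textbook dynamic-power relation P = C·V²·f with everything normalized to 1 before the shrink; the function name and the choice of shrink factors are illustrative assumptions, and real devices also have leakage and frequency limits that this ignores.

```python
def relative_power_density(k: float, scale_voltage: bool) -> float:
    """Power density after shrinking linear dimensions by a factor k (k > 1).

    All quantities are relative to the unscaled transistor (density = 1.0).
    """
    capacitance = 1.0 / k                         # capacitance shrinks with dimensions
    frequency = k                                 # smaller devices switch faster
    voltage = 1.0 / k if scale_voltage else 1.0   # Dennard scaling lowers V by k
    power_per_transistor = capacitance * voltage**2 * frequency
    area_per_transistor = 1.0 / k**2
    return power_per_transistor / area_per_transistor

for k in (1.4, 2.0):
    dennard = relative_power_density(k, scale_voltage=True)
    fixed_v = relative_power_density(k, scale_voltage=False)
    print(f"k={k}: with voltage scaling {dennard:.2f}, with fixed voltage {fixed_v:.2f}")
```

With voltage scaling the density stays at 1.0 for any k; once voltage stops shrinking, the same idealized shrink raises power density by a factor of k².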

As chips become increasingly compact and powerful, efficient heat dissipation will be crucial to maintaining their performance and longevity. To ensure this efficiency, we need a tool that can predict how new semiconductor technology—processes to make transistors, interconnects, and logic cells—changes the way heat is generated and removed. My research colleagues and I at Imec have developed just that. Our simulation framework uses industry-standard and open-source electronic design automation (EDA) tools, augmented with our in-house tool set, to rapidly explore the interaction between semiconductor technology and the systems built with it.

The results so far are inescapable: The thermal challenge is growing with each new technology node, and we’ll need new solutions, including new ways of designing chips and systems, if there’s any hope that they’ll be able to handle the heat.

The Limits of Cooling

Traditionally, an SoC is cooled by blowing air over a heat sink attached to its package. Some data centers have begun using liquid instead because it can absorb more heat than gas. Liquid coolants—typically water or a water-based mixture—may work well enough for the latest generation of high-performance chips such as Nvidia’s new AI GPUs, which reportedly consume an astounding 1,000 watts. But neither fans nor liquid coolers will be a match for the smaller-node technologies coming down the pipeline.

Heat follows a complex path as it’s removed from a chip, but 95 percent of it exits through the heat sink. Imec

Take, for instance, nanosheet transistors and complementary field-effect transistors (CFETs). Leading chip manufacturers are already shifting to nanosheet devices, which swap the fin in today’s fin field-effect transistors for a stack of horizontal sheets of semiconductor. CFETs take that architecture to the extreme, vertically stacking more sheets and dividing them into two devices, thus placing two transistors in about the same footprint as one. Experts expect the semiconductor industry to introduce CFETs in the 2030s.

In our work, we looked at an upcoming version of the nanosheet called A10 (referring to a node of 10 angstroms, or 1 nanometer) and a version of the CFET called A5, which Imec projects will appear two generations after the A10. Simulations of our test designs showed that the power density in the A5 node is 12 to 15 percent higher than in the A10 node. This increased density will, in turn, lead to a projected temperature rise of 9 °C for the same operating voltage.

Complementary field-effect transistors will stack nanosheet transistors atop each other, increasing density and temperature. To operate at the same temperature as nanosheet transistors (A10 node), CFETs (A5 node) will have to run at a reduced voltage. Imec

Nine degrees might not seem like much. But in a data center, where hundreds of thousands to millions of chips are packed together, it can mean the difference between stable operation and thermal runaway—that dreaded feedback loop in which rising temperature increases leakage power, which increases temperature, which increases leakage power, and so on until, eventually, safety mechanisms must shut down the hardware to avoid permanent damage.
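The feedback loop described above can be sketched as a fixed-point iteration. This is a toy model, not Imec's simulation framework: it assumes a single lumped thermal resistance and leakage power that doubles for every fixed rise in temperature. Every parameter value is hypothetical.

```python
def steady_state_temp(p_dynamic_w, r_th_c_per_w, t_ambient_c=25.0,
                      p_leak_ref_w=10.0, t_ref_c=25.0, leak_doubling_c=20.0,
                      t_shutdown_c=125.0, max_iters=1000, tol=1e-6):
    """Return the settled chip temperature in deg C, or None on thermal runaway.

    Assumed toy model (all values hypothetical):
      leakage(T) = p_leak_ref_w * 2 ** ((T - t_ref_c) / leak_doubling_c)
      T          = t_ambient_c + r_th_c_per_w * (p_dynamic_w + leakage(T))
    """
    t = t_ambient_c
    for _ in range(max_iters):
        p_leak = p_leak_ref_w * 2 ** ((t - t_ref_c) / leak_doubling_c)
        t_next = t_ambient_c + r_th_c_per_w * (p_dynamic_w + p_leak)
        if t_next >= t_shutdown_c:
            return None      # heating outpaces cooling: protection must trip
        if abs(t_next - t) < tol:
            return t_next    # the loop has settled at a stable temperature
        t = t_next
    return None              # no convergence within the iteration budget


print(steady_state_temp(p_dynamic_w=100.0, r_th_c_per_w=0.3))  # settles
print(steady_state_temp(p_dynamic_w=100.0, r_th_c_per_w=0.5))  # runs away
```

With the better cooler (lower thermal resistance), the loop settles at a stable temperature. With the worse one, each pass adds more leakage than the cooler can remove, and the model crosses its shutdown threshold.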

Researchers are pursuing advanced alternatives to basic liquid and air cooling that may help mitigate this kind of extreme heat. Microfluidic cooling, for instance, uses tiny channels etched into a chip to circulate a liquid coolant inside the device. Other approaches include jet impingement, which involves spraying a gas or liquid at high velocity onto the chip’s surface, and immersion cooling, in which the entire printed circuit board is dunked in the coolant bath.

But even if these newer techniques come into play, relying solely on coolers to dispense with extra heat will likely be impractical. That’s especially true for mobile systems, which are limited by size, weight, battery power, and the need to not cook their users. Data centers, meanwhile, face a different constraint: Because cooling is a building-wide infrastructure expense, it would cost too much and be too disruptive to update the cooling setup every time a new chip arrives.

Performance Versus Heat

Luckily, cooling technology isn’t the only way to stop chips from frying. A variety of system-level solutions can keep heat in check by dynamically adapting to changing thermal conditions.

One approach places thermal sensors around a chip. When the sensors detect a worrying rise in temperature, they signal a reduction in operating voltage and frequency—and thus power consumption—to counteract heating. But while such a scheme solves thermal issues, it might noticeably affect the chip’s performance. For example, the chip might always work poorly in hot environments, as anyone who’s ever left their smartphone in the sun can attest.
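A minimal sketch of such a sensor-driven scheme follows, assuming a small table of voltage and frequency operating points and two temperature thresholds with a gap between them to avoid rapid toggling. The thresholds, operating points, and function names are hypothetical rather than drawn from any real chip.

```python
# Hypothetical (voltage in volts, frequency in GHz) operating points, slowest first.
OPERATING_POINTS = [(0.60, 1.0), (0.70, 1.6), (0.80, 2.2), (0.90, 2.8)]


def next_level(level, sensor_temps_c, throttle_c=95.0, resume_c=80.0):
    """Pick the next operating-point index from the hottest sensor reading."""
    hottest = max(sensor_temps_c)
    if hottest >= throttle_c:
        return max(level - 1, 0)                          # too hot: step down
    if hottest <= resume_c:
        return min(level + 1, len(OPERATING_POINTS) - 1)  # cool: step back up
    return level                                          # in between: hold


def relative_dynamic_power(level):
    """Dynamic power relative to the fastest point, assuming P ~ V^2 * f."""
    v, f = OPERATING_POINTS[level]
    v_top, f_top = OPERATING_POINTS[-1]
    return (v ** 2 * f) / (v_top ** 2 * f_top)


level = next_level(3, [70.0, 96.5])  # one hot sensor forces a step down
print(level, round(relative_dynamic_power(level), 2))
```

Because one hot sensor lowers voltage and frequency for everything the controller governs, a single hot spot costs performance across the whole domain, which is the trade-off the paragraph above describes.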

Another approach, called thermal sprinting, is especially useful for multicore data-center CPUs. It is done by running a core until it overheats and then shifting operations to a second core while the first one cools down. This process maximizes the performance of a single thread, but it can cause delays when work must migrate between many cores for longer tasks. Thermal sprinting also reduces a chip’s overall throughput, as some portion of it will always be disabled while it cools.

System-level solutions thus require a careful balancing act between heat and performance. To apply them effectively, SoC designers must have a comprehensive understanding of how power is distributed on a chip and where hot spots occur, where sensors should be placed and when they should trigger a voltage or frequency reduction, and how long it takes parts of the chip to cool off. Even the best chip designers, though, will soon need even more creative ways of managing heat.

Making Use of a Chip’s Backside

A promising pursuit involves adding new functions to the underside, or backside, of a wafer. This strategy mainly aims to improve power delivery and computational performance. But it might also help resolve some heat problems.

New technologies can reduce the voltage that needs to be delivered to a multicore processor so that the chip maintains a minimum voltage while operating at an acceptable frequency. A backside power-delivery network does this by reducing resistance. Backside capacitors lower transient voltage losses. Backside integrated voltage regulators allow different cores to operate at different minimum voltages as needed. Imec

Imec foresees several backside technologies that may allow chips to operate at lower voltages, decreasing the amount of heat they generate. The first technology on the road map is the so-called backside power-delivery network (BSPDN), which does precisely what it sounds like: It moves power lines from the front of a chip to the back. All the advanced CMOS foundries plan to offer BSPDNs by the end of 2026. Early demonstrations show that they lessen resistance by bringing the power supply much closer to the transistors. Less resistance results in less voltage loss, which means the chip can run at a reduced input voltage. And when voltage is reduced, power density drops—and so, in turn, does temperature.
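The chain of reasoning above (less resistance, less voltage loss, lower input voltage, lower power) can be put in rough numbers. The sketch assumes a simple Ohm's-law voltage drop across the delivery network and dynamic power proportional to voltage squared at a fixed frequency. The resistances, current, and minimum voltage are hypothetical, not measured BSPDN figures.

```python
def required_supply_voltage(v_min, current_a, r_pdn_ohm):
    """Input voltage needed so the transistors still see v_min after the IR drop."""
    return v_min + current_a * r_pdn_ohm


def dynamic_power_ratio(v_new, v_old):
    """Ratio of dynamic power at two supply voltages, assuming P ~ V^2."""
    return (v_new / v_old) ** 2


V_MIN = 0.65       # hypothetical minimum voltage at the transistors (V)
CURRENT = 100.0    # hypothetical supply current (A)

frontside = required_supply_voltage(V_MIN, CURRENT, r_pdn_ohm=0.0005)
backside = required_supply_voltage(V_MIN, CURRENT, r_pdn_ohm=0.0002)

print(f"Frontside supply: {frontside:.2f} V")
print(f"Backside supply:  {backside:.2f} V")
print(f"Relative dynamic power: {dynamic_power_ratio(backside, frontside):.2f}")
```

Under these assumed values, a few tens of millivolts less voltage drop yields a power saving of several percent, because power falls with the square of voltage.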

By changing the materials within the path of heat removal, backside power-delivery technology could make hot spots on chips even hotter.

Imec

After BSPDNs, manufacturers will likely begin adding capacitors with high energy-storage capacity to the backside as well. Large voltage swings caused by inductance in the printed circuit board and chip package can be particularly problematic in high-performance SoCs. Backside capacitors should help with this issue because their closer proximity to the transistors allows them to absorb voltage spikes and fluctuations more quickly. This arrangement would therefore enable chips to run at an even lower voltage—and temperature—than with BSPDNs alone.

Finally, chipmakers will introduce backside integrated voltage-regulator (IVR) circuits. This technology aims to curtail a chip’s voltage requirements further still through finer voltage tuning. An SoC for a smartphone, for example, commonly has 8 or more compute cores, but there’s no space on the chip for each to have its own discrete voltage regulator. Instead, one off-chip regulator typically manages the voltage of four cores together, regardless of whether all four are facing the same computational load. IVRs, on the other hand, would manage each core individually through a dedicated circuit, thereby improving energy efficiency. Placing them on the backside would save valuable space on the frontside.

It is still unclear how backside technologies will affect heat management; demonstrations and simulations are needed to chart the effects. Adding new technology will often increase power density, and chip designers will need to consider the thermal consequences. In placing backside IVRs, for instance, will thermal issues improve if the IVRs are evenly distributed or if they are concentrated in specific areas, such as the center of each core and memory cache?

Recently, we showed that backside power delivery may introduce new thermal problems even as it solves old ones. The cause is the vanishingly thin layer of silicon that’s left when BSPDNs are created. In a frontside design, the silicon substrate can be as thick as 750 micrometers. Because silicon conducts heat well, this relatively bulky layer helps control hot spots by spreading heat from the transistors laterally. Adding backside technologies, however, requires thinning the substrate to about 1 micrometer to provide access to the transistors from the back. Sandwiched between two layers of wires and insulators, this slim silicon slice can no longer move heat effectively toward the sides. As a result, heat from hyperactive transistors can get trapped locally and forced upward toward the cooler, exacerbating hot spots.

Our simulation of an 80-core server SoC found that BSPDNs can raise hot-spot temperatures by as much as 14 °C. Design and technology tweaks—such as increasing the density of the metal on the backside—can improve the situation, but we will need more mitigation strategies to avoid it completely.

Preparing for “CMOS 2.0”

BSPDNs are part of a new paradigm of silicon logic technology that Imec is calling CMOS 2.0. This emerging era will also see advanced transistor architectures and specialized logic layers. The main purpose of these technologies is optimizing chip performance and power efficiency, but they might also offer thermal advantages, including improved heat dissipation.

In today’s CMOS chips, a single transistor drives signals to both nearby and faraway components, leading to inefficiencies. But what if there were two drive layers? One layer would handle long wires and buffer these connections with specialized transistors; the other would deal only with connections under 10 micrometers. Because the transistors in this second layer would be optimized for short connections, they could operate at a lower voltage, which again would reduce power density. How much, though, is still uncertain.

In the future, parts of chips will be made on their own silicon wafers using the appropriate process technology for each. They will then be 3D stacked to form SoCs that function better than those built using only one process technology. But engineers will have to carefully consider how heat flows through these new 3D structures.

Imec

What is clear is that solving the industry’s heat problem will be an interdisciplinary effort. It’s unlikely that any one technology alone—whether that’s thermal-interface materials, transistors, system-control schemes, packaging, or coolers—will fix future chips’ thermal issues. We will need all of them. And with good simulation tools and analysis, we can begin to understand how much of each approach to apply and on what timeline. Although the thermal benefits of CMOS 2.0 technologies—specifically, backside functionalization and specialized logic—look promising, we will need to confirm these early projections and study the implications carefully. With backside technologies, for instance, we will need to know precisely how they alter heat generation and dissipation—and whether that creates more new problems than it solves.

Chip designers might be tempted to adopt new semiconductor technologies assuming that unforeseen heat issues can be handled later in software. That may be true, but only to an extent. Relying too heavily on software solutions would have a detrimental impact on a chip’s performance because these solutions are inherently imprecise. Fixing a single hot spot, for example, might require reducing the performance of a larger area that is otherwise not overheating. It will therefore be imperative that SoCs and the semiconductor technologies used to build them are designed hand in hand.

The good news is that more EDA products are adding features for advanced thermal analysis, including during early stages of chip design. Experts are also calling for a new method of chip development called system technology co-optimization. STCO aims to dissolve the rigid abstraction boundaries between systems, physical design, and process technology by considering them holistically. Deep specialists will need to reach outside their comfort zone to work with experts in other chip-engineering domains. We may not yet know precisely how to resolve the industry’s mounting thermal challenge, but we are optimistic that, with the right tools and collaborations, it can be done.

-

Navigating the Angstrom Era

by Wiley on 16. April 2025. at 13:22

This is a sponsored article brought to you by Applied Materials.

The semiconductor industry is in the midst of a transformative era as it bumps up against the physical limits of making faster and more efficient microchips. As we progress toward the “angstrom era,” where chip features are measured in mere atoms, the challenges of manufacturing have reached unprecedented levels. Today’s most advanced chips, such as those at the 2nm node and beyond, are demanding innovations not only in design but also in the tools and processes used to create them.

At the heart of this challenge lies the complexity of defect detection. In the past, optical inspection techniques were sufficient to identify and analyze defects in chip manufacturing. However, as chip features have continued to shrink and device architectures have evolved from 2D planar transistors to 3D FinFET and Gate-All-Around (GAA) transistors, the nature of defects has changed.

Defects are often at scales so small that traditional methods struggle to detect them. No longer just surface-level imperfections, they are now commonly buried deep within intricate 3D structures. The result is an exponential increase in data generated by inspection tools, with defect maps becoming denser and more complex. In some cases, the number of defect candidates requiring review has increased 100-fold, overwhelming existing systems and creating bottlenecks in high-volume production.

The burden created by the surge in data is compounded by the need for higher precision. In the angstrom era, even the smallest defect — a void, residue, or particle just a few atoms wide — can compromise chip performance and the yield of the chip manufacturing process. Distinguishing true defects from false alarms, or “nuisance defects,” has become increasingly difficult.

Traditional defect review systems, while effective in their time, are struggling to keep pace with the demands of modern chip manufacturing. The industry is at an inflection point, where the ability to detect, classify, and analyze defects quickly and accurately is no longer just a competitive advantage — it’s a necessity.

Adding to the complexity of this process is the shift toward more advanced chip architectures. Logic chips at the 2nm node and beyond, as well as higher-density DRAM and 3D NAND memories, require defect review systems capable of navigating intricate 3D structures and identifying issues at the nanoscale. These architectures are essential for powering the next generation of technologies, from artificial intelligence to autonomous vehicles. But they also demand a new level of precision and speed in defect detection.

In response to these challenges, the semiconductor industry is witnessing a growing demand for faster and more accurate defect review systems. In particular, high-volume manufacturing requires solutions that can analyze exponentially more samples without sacrificing sensitivity or resolution. By combining advanced imaging techniques with AI-driven analytics, next-generation defect review systems are enabling chipmakers to separate the signal from the noise and accelerate the path from development to production.

eBeam Evolution: Driving the Future of Defect Detection

Electron beam (eBeam) imaging has long been a cornerstone of semiconductor manufacturing, providing the ultra-high resolution necessary to analyze defects that are invisible to optical techniques. Unlike light, which has a limited resolution due to its wavelength, electron beams can achieve resolutions at the sub-nanometer scale, making them indispensable for examining the tiniest imperfections in modern chips.

The journey of eBeam technology has been one of continuous innovation. Early systems relied on thermal field emission (TFE), which generates an electron beam by heating a filament to extremely high temperatures. While TFE systems are effective, they have known limitations. The beam is relatively broad, and the high operating temperatures can lead to instability and shorter lifespans. These constraints became increasingly problematic as chip features shrank and defect detection requirements grew more stringent.

Enter cold field emission (CFE) technology, a breakthrough that has redefined the capabilities of eBeam systems. Unlike TFE, CFE operates at room temperature, using a sharp, cold filament tip to emit electrons. This produces a narrower, more stable beam with a higher density of electrons that results in significantly improved resolution and imaging speed.

For decades, CFE systems were limited to lab usage because it was not possible to keep the tools up and running for adequate periods of time — primarily because at “cold” temperatures, contaminants inside the chambers adhere to the eBeam emitter and partially block the flow of electrons.

In December 2022, Applied Materials announced that it had solved the reliability issues with the introduction of its first two eBeam systems based on CFE technology. Applied is an industry leader at the forefront of defect detection innovation. It is a company that has consistently pushed the boundaries of materials engineering to enable the next wave of innovation in chip manufacturing. After more than 10 years of research across a global team of engineers, Applied mitigated the CFE stability challenge by developing multiple breakthroughs. These include new technology that delivers a vacuum orders of magnitude higher than in TFE systems, an eBeam column tailored with special materials that reduce contamination, and a novel chamber self-cleaning process that further keeps the tip clean.

CFE technology achieves sub-nanometer resolution, enabling the detection of defects buried deep within 3D device structures. This is a capability that is critical for advanced architectures like Gate-All-Around (GAA) transistors and 3D NAND memory. Additionally, CFE systems offer faster imaging speeds compared to traditional TFE systems, allowing chipmakers to analyze more defects in less time.

The Rise of AI in Semiconductor Manufacturing

While eBeam technology provides the foundation for high-resolution defect detection, the sheer volume of data generated by modern inspection tools has created a new challenge: how to process and analyze this data quickly and accurately. This is where artificial intelligence (AI) comes into play.

AI is transforming manufacturing processes across industries, and semiconductors are no exception. AI algorithms — particularly those based on deep learning — are being used to automate and enhance the analysis of defect inspection data. These algorithms can sift through massive datasets, identifying patterns and anomalies that would be impossible for human engineers to detect manually.

By training with real in-line data, AI models can learn to distinguish between true defects — such as voids, residues, and particles — and false alarms, or “nuisance defects.” This capability is especially critical in the angstrom era, where the density of defect candidates has increased exponentially.

Enabling the Next Wave of Innovation: The SEMVision H20

The convergence of AI and advanced imaging technologies is unlocking new possibilities for defect detection. AI-driven systems can classify defects with remarkable accuracy. Sorting defects into categories provides engineers with actionable insights. This not only speeds up the defect review process, but it also improves its reliability while reducing the risk of overlooking critical issues. In high-volume manufacturing, where even small improvements in yield can translate into significant cost savings, AI is becoming indispensable.

The transition to advanced nodes, the rise of intricate 3D architectures, and the exponential growth in data have created a perfect storm of manufacturing challenges, demanding new approaches to defect review. These challenges are being met with Applied’s new SEMVision H20.

By combining second-generation cold field emission (CFE) technology with advanced AI-driven analytics, the SEMVision H20 is not just a tool for defect detection - it’s a catalyst for change in the semiconductor industry.

A New Standard for Defect Review

The SEMVision H20 builds on the legacy of Applied’s industry-leading eBeam systems, which have long been the gold standard for defect review. This second-generation CFE has higher, sub-nanometer resolution and faster speed than both TFE and first-generation CFE because of increased electron flow through its filament tip. These innovative capabilities enable chipmakers to identify and analyze the smallest defects and buried defects within 3D structures. Precision at this level is essential for emerging chip architectures, where even the tiniest imperfection can compromise performance and yield.

But the SEMVision H20’s capabilities go beyond imaging. Its deep learning AI models are trained with real in-line customer data, enabling the system to automatically classify defects with remarkable accuracy. By distinguishing true defects from false alarms, the system reduces the burden on process control engineers and accelerates the defect review process. The result is a system that delivers 3X faster throughput while maintaining the industry’s highest sensitivity and resolution - a combination that is transforming high-volume manufacturing.

“One of the biggest challenges chipmakers often have with adopting AI-based solutions is trusting the model. The success of the SEMVision H20 validates the quality of the data and insights we are bringing to customers. The pillars of technology that comprise the product are what builds customer trust. It’s not just the buzzword of AI. The SEMVision H20 is a compelling solution that brings value to customers.”

Broader Implications for the Industry

The impact of the SEMVision H20 extends far beyond its technical specifications. By enabling faster and more accurate defect review, the system is helping chipmakers reduce factory cycle times, improve yields, and lower costs. In an industry where margins are razor-thin and competition is fierce, these improvements are not just incremental - they are game-changing.

Additionally, the SEMVision H20 is enabling the development of faster, more efficient, and more powerful chips. As the demand for advanced semiconductors continues to grow - driven by trends like artificial intelligence, 5G, and autonomous vehicles - the ability to manufacture these chips at scale will be critical. The system is helping to make this possible, ensuring that chipmakers can meet the demands of the future.

A Vision for the Future

Applied’s work on the SEMVision H20 is more than just a technological achievement; it’s a reflection of the company’s commitment to solving the industry’s toughest challenges. By leveraging cutting-edge technologies like CFE and AI, Applied is not only addressing today’s pain points but also shaping the future of defect review.

As the semiconductor industry continues to evolve, the need for advanced defect detection solutions will only grow. With the SEMVision H20, Applied is positioning itself as a key enabler of the next generation of semiconductor technologies, from logic chips to memory. By pushing the boundaries of what’s possible, the company is helping to ensure that the industry can continue to innovate, scale, and thrive in the angstrom era and beyond.

-

The Many Ways Tariffs Will Hit Your Electronics

by Samuel K. Moore on 16. April 2025. at 13:00

Like the industry he covers, Shawn DuBravac had already had quite a week by the time IEEE Spectrum spoke to him early last Thursday, 10 April 2025. As chief economist at IPC, the 3,000-member industry association for electronics manufacturers, he’s tasked with figuring out the impact of the tsunami of tariffs the U.S. government has planned, paused, or enacted. Earlier that morning he’d recalculated price changes for electronics in the U.S. market following a 90-day pause on steeper tariffs that had been unveiled the previous week, the implementation of universal 10 percent tariffs, and a 125 percent tariff on Chinese imports. A day after this interview, he was recalculating again, following an exemption on electronics of an unspecified duration. According to DuBravac, the effects of all this will likely include higher prices, less choice for consumers, stalled investment, and even stifled innovation.

How have you had to adjust your forecasts today [Thursday 10 April]?

Shawn DuBravac: I revised our forecasts this morning to take into account what the world would look like if the 90-day pause holds into the future and the 125 percent tariffs on China also hold. If you look at smartphones, it would be close to a 91 percent impact. But if all the tariffs are put back in place as they were specified on “Liberation Day,” then that would be 101 percent price impact.

The estimates become highly dependent on how influential China is for final assembly. So, if you look instead at something like TVs, 76 percent of televisions that are imported into the United States are coming from Mexico, where there has long been strong TV manufacturing because there were already tariffs in place on smart flat-panel televisions. The price impact I see for TVs is somewhere between 12 and 18 percent, as opposed to a near doubling for smartphones.

Video-game consoles are another story. In 2024, 86 percent of video-game consoles were coming into the United States from China. So the tariffs have a very big impact.

That said, the number of smartphones coming from China has actually declined pretty significantly in recent years. It was still about 72 percent in 2024, but Vietnam was 14 percent and India was 12 percent. Only a couple years ago the United States wasn’t importing any meaningful amount of smartphones from India, and it’s now become a very important hub.

It sounds like the supply chain started shifting well ahead of these tariffs.

DuBravac: Supply chains are really designed to be dynamic, adaptive, and resilient. So they’re constantly reoptimizing. I almost think of supply chains like living, breathing entities. If there is a disruption in one part, it’s like it lurches forward to figure out how to resolve the constraint, how to heal.

We make these estimates with the presumption that nothing changes, but everything would change if this 125 percent were to become permanent. You would see an acceleration of the decoupling from China that has been happening since 2017 and accelerated during the pandemic.

It’s also important to recognize that the United States isn’t the only buyer of smartphones. They’re produced in a global market, and so the supply chains are going to optimize based on that global-market dynamic. Maybe the rest of the chain could remain intact, and for example, China could continue to produce smartphones for Europe, Asia, and Latin America.

How can supply chains adapt in this constantly changing environment?

DuBravac: That, to me, is the most detrimental aspect of all of this. Supply chains want to adjust, but if they’re not sure what the environment is going to be in the future, they will be hesitant. If you were investing in a new factory—especially a modern, cutting-edge, semiautonomous factory—these are long-term investments. You’re looking at a 20- to 50-year time horizon, so you’re not going to make those types of investments in a geography if you’re not sure what the broader situation is.

I think one of the great ironies of all of this is that there was already a decoupling from China taking place, but because the tariff dynamics have been so fluid, it causes a pause in new business investment. As a result of that potential pause, the impact of tariffs could be more pronounced on U.S. consumers, because supply chains don’t adjust as quickly as they might have adjusted in a more certain environment.

A lot of damage was done because of the uncertainty that’s been created, and it’s not clear to me that any of that uncertainty has been resolved. Our 3,000 member companies express a tremendous amount of uncertainty about the current environment.

Lower-priced electronics have thin margins. What does that mean for the low-end consumer?

DuBravac: What I see there is the households that are constrained by financials, they’re often the consumers of low-price products, and they’re the ones that are most likely to see tariff cost pushed through. There’s just no margin along the way to absorb those higher costs, and so they might see the highest percentage pricing.

A low-price laptop would probably see a higher price increase in percentage terms. So I think the challenge there is the households least well positioned to handle the impact are the ones that will probably see the most impact.

For some products, we tend to have higher price elasticities at lower price points, which means that a small price change tends to have a big negative impact on demand. There could be other things happening in the background as well, but the net result is that U.S. consumers have less choice.

Some companies have already announced that they were going to cut out their lower-priced models, because it no longer makes economic sense to sell into the marketplace. That could happen on a company basis within their model selections, but it could also happen broadly, in an entire category where you might see the three or four lowest-priced options for a given category exit the market. So now you’re only left with more expensive options.
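To make the elasticity point concrete, here is a small worked example. Price elasticity of demand is the percentage change in quantity sold divided by the percentage change in price. The two elasticity values and the 10 percent price increase are hypothetical and are not IPC estimates.

```python
def demand_change_pct(price_change_pct, elasticity):
    """Approximate percent change in units sold for a given percent price change."""
    return elasticity * price_change_pct


PRICE_INCREASE_PCT = 10.0  # hypothetical tariff-driven price rise

# Hypothetical elasticities: budget buyers react more strongly to price.
budget_laptop = demand_change_pct(PRICE_INCREASE_PCT, elasticity=-2.0)
premium_laptop = demand_change_pct(PRICE_INCREASE_PCT, elasticity=-0.5)

print(f"Budget model demand:  {budget_laptop:+.0f}%")
print(f"Premium model demand: {premium_laptop:+.0f}%")
```

Under these assumed values, the same price increase cuts budget-model sales four times as much as premium-model sales, which is one reason a low-priced model can stop making economic sense to sell.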

What other effects are tariffs having?

DuBravac: Another long-term effect we’ve talked about is that as companies try to optimize the cost, they relocate engineering staff to address cost. They’re pulling that engineering staff from other problems that they were trying to solve, like the next cutting-edge innovation. So some of that loss is potentially a loss of innovation. Companies are going to worry about cost, and as a result, they’re not going to make the next iteration of product as innovative. It’s hard to measure, but I think that it is a potential negative by-product.

The other thing is tariffs generally allow domestic producers to raise their price as well. You’ve already seen that for steel manufacturers. Maybe that makes U.S. companies more solvent or more viable, but at the end of the day, it’s consumers and businesses that will be paying higher prices.

-

Meet the “First Lady of Engineering”

by Willie D. Jones on 15. April 2025. at 16:00

For more than a century, women and racial minorities have fought for access to education and employment opportunities once reserved exclusively for white men. The life of Yvonne Young “Y.Y.” Clark is a testament to the power of perseverance in that fight. As a smart Black woman who shattered the barriers imposed by race and gender, she made history multiple times during her career in academia and industry.

She is probably best known as the first woman to serve as a faculty member in the engineering college at Tennessee State University, in Nashville. Her pioneering spirit extended far beyond the classroom, however, as she continuously staked out new territory for women and Black professionals in engineering. She accomplished a lot before she died on 27 January 2019 at her home in Nashville at the age of 89.

Clark is the subject of the latest biography in IEEE-USA’s Famous Women Engineers in History series. “Don’t Give Up” was her mantra.

An early passion for technology

Born on 13 April 1929 in Houston, Clark moved with her family to Louisville, Ky., as a baby. She was raised in an academically driven household. Her father, Dr. Coleman M. Young Jr., was a surgeon. Her mother, Hortense H. Young, was a library scientist and journalist. Her mother’s “Tense Topics” column, published by the Louisville Defender newspaper, tackled segregation, housing discrimination, and civil rights issues, instilling awareness of social justice in Y.Y.

Clark’s passion for technology became evident at a young age. As a child, she secretly repaired her family’s malfunctioning toaster, surprising her parents. It was a defining moment, signaling to her family that she was destined for a career in engineering—not in education like her older sister, a high school math teacher.

“Y.Y.’s family didn’t create her passion or her talents. Those were her own,” said Carol Sutton Lewis, co-host and producer for the third season of the “Lost Women of Science” podcast, on which Clark was profiled. “What her family did do, and what they would continue to do, was make her interests viable in a world that wasn’t fair.”

Clark’s interest in studying engineering was precipitated by her passion for aeronautics. She said all the pilots she spoke with had studied engineering, so she was determined to do so. She joined the Civil Air Patrol and took simulated flying lessons. She then learned to fly an airplane with the help of a family friend.

Despite her academic excellence, though, racial barriers stood in her way. She graduated at age 16 from Louisville’s Central High School in 1945. Her parents, concerned that she was too young to attend college, sent her to Boston for two additional years at the Girls’ Latin School and Roxbury Memorial High School.

She then applied to the University of Louisville, where she was initially accepted and offered a full scholarship. When university administrators realized she was Black, however, they rescinded the scholarship and the admission, Clark said on the “Lost Women of Science” podcast, which included clips from when her daughter interviewed her in 2007. As Clark explained in the interview, the state of Kentucky offered to pay her tuition to attend Howard University, a historically Black college in Washington, D.C., rather than integrate its publicly funded university.

Breaking barriers in higher education

Although Howard provided an opportunity, it was not free of discrimination. Clark faced gender-based barriers, according to the IEEE-USA biography. She was the only woman among 300 mechanical engineering students, many of whom were World War II veterans.

Despite the challenges, she persevered and in 1951 became the first woman to earn a bachelor’s degree in mechanical engineering from the university. The school downplayed her historic achievement, however. In fact, she was not allowed to march with her classmates at graduation. Instead, she received her diploma during a private ceremony in the university president’s office.

A career defined by firsts

Determined to forge a career in engineering, Clark repeatedly encountered racial and gender discrimination. In a 2007 Society of Women Engineers (SWE) StoryCorps interview, she recalled that when she applied for an engineering position with the U.S. Navy, the interviewer bluntly told her, “I don’t think I can hire you.” When she asked why not, he replied, “You’re female, and all engineers go out on a shakedown cruise,” the trip during which the performance of a ship is tested before it enters service or after it undergoes major changes such as an overhaul. She said the interviewer told her, “The omen is: ‘No females on the shakedown cruise.’”

Clark eventually landed a job with the U.S. Army’s Frankford Arsenal gauge laboratories in Philadelphia, becoming the first Black woman hired there. She designed gauges and finalized product drawings for the small-arms ammunition and range-finding instruments manufactured there. Tensions arose, however, when some of her colleagues resented that she earned more money due to overtime pay, according to the IEEE-USA biography. To ease workplace tensions, the Army reduced her hours, prompting her to seek other opportunities.

Her future husband, Bill Clark, saw the difficulty she was having securing interviews, and suggested she use the gender-neutral name Y.Y. on her résumé.

The tactic worked. She became the first Black woman hired by RCA in 1955. She worked for the company’s electronic tube division in Camden, N.J.

Although she excelled at designing factory equipment, she encountered more workplace hostility.

“Sadly,” the IEEE-USA biography says, she “felt animosity from her colleagues and resentment for her success.”

When Bill, who had taken a faculty position as a biochemistry instructor at Meharry Medical College in Nashville, proposed marriage, she eagerly accepted. They married in December 1955, and she moved to Nashville.

In 1956 Clark applied for a full-time position at Ford Motor Co.’s Nashville glass plant, where she had interned during the summers while she was a Howard student. Despite her qualifications, she was denied the job due to her race and gender, she said.

She decided to pursue a career in academia, becoming in 1956 the first woman to teach mechanical engineering at Tennessee State University. In 1965 she became the first woman to chair TSU’s mechanical engineering department.

While teaching at TSU, she pursued further education, earning a master’s degree in engineering management from Nashville’s Vanderbilt University in 1972—another step in her lifelong commitment to professional growth.

After 55 years with the university, where she was also a freshman student advisor for much of that time, Clark retired in 2011 and was named professor emeritus.

A legacy of leadership and advocacy

Clark’s influence extended far beyond TSU. She was active in the Society of Women Engineers after becoming its first Black member in 1951.

Racism, however, followed her even within professional circles.

At the 1957 SWE conference in Houston, the event’s hotel initially refused her entry due to segregation policies, according to a 2022 profile of Clark. Under pressure from the society’s leadership, the hotel compromised; Clark could attend sessions but had to be escorted by a white woman at all times and was not allowed to stay in the hotel despite having paid for a room. She was reimbursed and instead stayed with relatives.

As a result of that incident, the SWE vowed never again to hold a conference in a segregated city.

Over the decades, Clark remained a champion for women in STEM. In one SWE interview, she advised future generations: “Prepare yourself. Do your work. Don’t be afraid to ask questions, and benefit by meeting with other women. Whatever you like, learn about it and pursue it.

“The environment is what you make it. Sometimes the environment is hostile, but don’t worry about it. Be aware of it so you aren’t blindsided.”

Her contributions earned her numerous accolades including the 1998 SWE Distinguished Engineering Educator Award and the 2001 Tennessee Society of Professional Engineers Distinguished Service Award.

A lasting impression

Clark’s legacy was not confined to engineering; she was deeply involved in Nashville community service. She served on the board of the 18th Avenue Family Enrichment Center and participated in the Nashville Area Chamber of Commerce. She was active in the Hendersonville Area chapter of The Links, a volunteer service organization for Black women, and the Nashville alumnae chapter of the Delta Sigma Theta sorority. She also mentored members of the Boy Scouts, many of whom went on to pursue engineering careers.

Clark spent her life knocking down barriers that tried to impede her. She didn’t just break the glass ceiling—she engineered a way through it for people who came after her.

-

What Engineers Should Know About AI Jobs in 2025

by Gwendolyn Rak on 15. April 2025. at 14:00

It seems AI jobs are here to stay, based on the latest data from the 2025 AI Index Report.

To better understand the current state of AI, the annual report from Stanford University’s Institute for Human-Centered Artificial Intelligence (HAI) collects a wide range of information on model performance, investment, public opinion, and more. Every year, IEEE Spectrum summarizes our top takeaways from the entire report by plucking out a series of charts, but here we zero in on the technology’s effect on the workforce. Many of the report’s findings about jobs are based on data from LinkedIn and Lightcast, a research firm that analyzes job postings from more than 51,000 websites.

Last year’s report showed signs that the AI hiring boom was quieting. But this year, AI job postings were back up in most places after the prior year’s lag. In the United States, for example, the percentage of all job postings demanding AI skills rose to 1.8 percent, up from 1.4 percent in 2023.
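To put the U.S. figures in perspective, it helps to separate the change in percentage points from the relative change. The short sketch below does that arithmetic using only the two shares quoted above; the function name is a hypothetical helper, not something from the AI Index Report.

```python
def posting_share_change(old_pct: float, new_pct: float) -> tuple[float, float]:
    """Return (change in percentage points, relative change in percent)."""
    points = new_pct - old_pct
    relative = points / old_pct * 100
    return points, relative


# Shares of U.S. job postings demanding AI skills, as quoted in the article.
points, relative = posting_share_change(1.4, 1.8)

print(f"{points:.1f} percentage points, {relative:.1f}% relative increase")
```

By this arithmetic, a rise of 0.4 percentage points corresponds to a relative increase of roughly 29 percent in the share of postings asking for AI skills.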

The AI Index Report/Stanford HAI

Will AI Create Job Disruptions?

Many people, including software engineers, fear that AI will make their jobs expendable—but others believe the technology will provide new opportunities. A McKinsey & Co. survey found that 28 percent of executives in software engineering expect generative AI to decrease their organizations’ workforces in the next three years, while 32 percent expect the workforce to increase. Overall, the portion of executives who anticipate a decrease in the workforce seems to be declining.

In fact, a separate study from LinkedIn and GitHub suggests that adoption of GitHub Copilot, the generative AI-powered coding assistant, is associated with a small increase in software-engineering hiring. The study also found these new hires were required to have fewer advanced programming skills, as Peter McCrory, an economist and labor researcher at LinkedIn, noted during a panel discussion on the AI Index Report last Thursday.

As tools like GitHub Copilot are adopted, the mix of required skills may shift. “Big picture, what we see on LinkedIn in recent years is that members are increasingly emphasizing a broader range of skills and increasingly uniquely human skills, like ethical reasoning or leadership,” McCrory said.

Python Remains a Top Skill

Still, programming skills remain central to AI jobs. In both 2023 and 2024, Python was the top specialized skill listed in U.S. AI job postings. The programming language also held onto its lead this year as the language of choice for many AI programmers.

Taking a broader look at AI-related skills, most were listed in a greater percentage of job postings in 2024 compared with those in 2023, with two exceptions: autonomous driving and robotics. Generative AI in particular saw a large increase, growing by nearly a factor of four.

AI’s Gender Gap

A gender gap is appearing in AI talent. According to LinkedIn’s research, women in most countries are less likely to list AI skills on their profiles, and it estimates that in 2024, nearly 70 percent of AI professionals on the platform were male. The ratio has been “remarkably stable over time,” the report states.

Academia and Industry

Although models are becoming more efficient, training AI is expensive. That expense is one of the primary reasons most of today’s notable AI advances are coming from industry instead of academia.

“Sometimes in academia, we make do with what we have, so you’re seeing a shift of our research toward topics that we can afford to do with the limited computing [power] that we have,” AI Index steering committee co-director Yolanda Gil said at last week’s panel discussion. “That is a loss in terms of advancing the field of AI,” said Gil.

Gil and others at the event emphasized the importance of investment in academia, as well as collaboration across sectors—industry, government, and education. Such partnerships can both provide needed resources to researchers and create a better understanding of the job market among educators, enabling them to prepare students to fill important roles.

-

Video Friday: Tiny Robot Bug Hops and Jumps

by Evan Ackerman on 11. April 2025. at 15:30

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We also post a weekly calendar of upcoming robotics events for the next few months. Please send us your events for inclusion.

RoboSoft 2025: 23–26 April 2025, LAUSANNE, SWITZERLAND

ICUAS 2025: 14–17 May 2025, CHARLOTTE, NC

ICRA 2025: 19–23 May 2025, ATLANTA, GA