IEEE Spectrum IEEE Spectrum

-

Transistor-like Qubits Hit Key Benchmark

by Dina Genkina on 11. September 2024. at 12:00

A team in Australia has recently demonstrated a key advance in metal-oxide-semiconductor-based (or MOS-based) quantum computers. They showed that their two-qubit gates—logical operations that involve more than one quantum bit, or qubit—perform without errors 99 percent of the time. This number is important, because it is the baseline necessary to perform error correction, which is believed to be necessary to build a large-scale quantum computer. What’s more, these MOS-based quantum computers are compatible with existing CMOS technology, which will make it more straightforward to manufacture a large number of qubits on a single chip than with other techniques.

“Getting over 99 percent is significant because that is considered by many to be the error correction threshold, in the sense that if your fidelity is lower than 99 percent, it doesn’t really matter what you’re going to do in error correction,” says Yuval Boger, CCO of quantum computing company QuEra and who wasn’t involved in the work. “You’re never going to fix errors faster than they accumulate.”

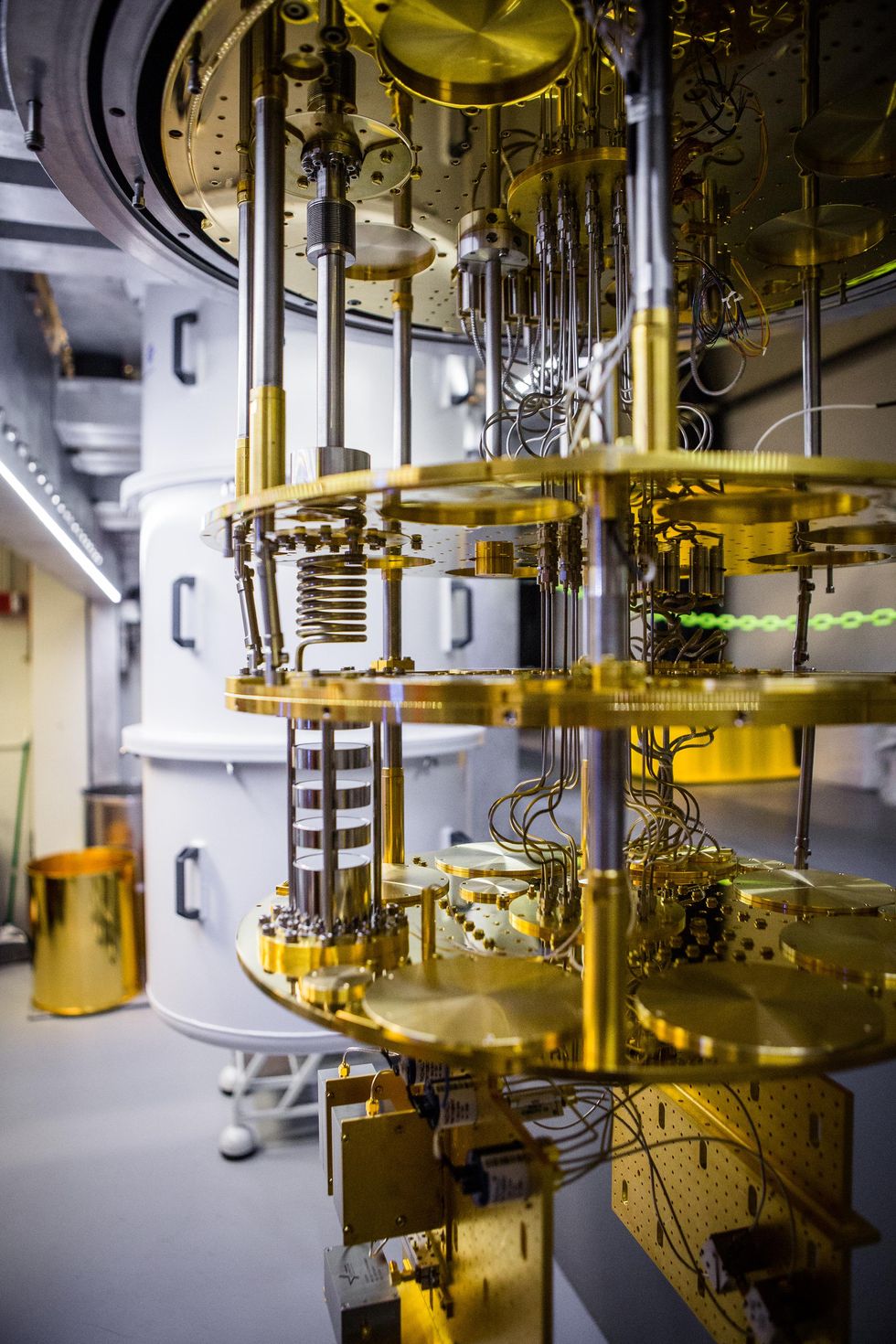

There are many contending platforms in the race to build a useful quantum computer. IBM, Google and others are building their machines out of superconducting qubits. Quantinuum and IonQ use individual trapped ions. QuEra and Atom Computing use neutrally-charged atoms. Xanadu and PsiQuantum are betting on photons. The list goes on.

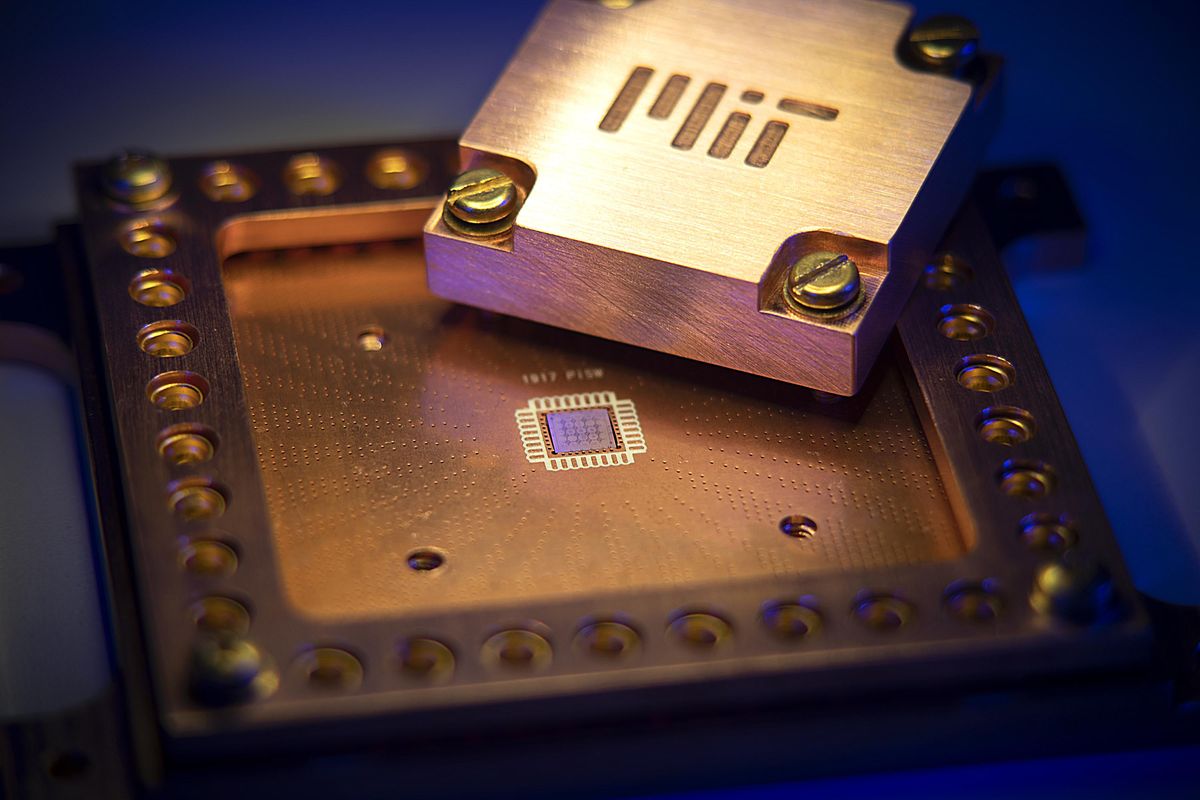

In the new result, a collaboration between the University of New South Whales (UNSW) and Sydney-based startup Diraq, with contributors from Japan, Germany, Canada, and the U.S., has taken yet another approach: trapping single electrons in MOS devices. “What we are trying to do is we are trying to make qubits that are as close to traditional transistors as they can be,” says Tuomo Tanttu, a research fellow at UNSW who led the effort.

Qubits That Act Like Transistors

These qubits are indeed very similar to a regular transistor, gated in such a way as to have only a single electron in the channel. The biggest advantage of this approach is that it can be manufactured using traditional CMOS technologies, making it theoretically possible to scale to millions of qubits on a single chip. Another advantage is that MOS qubits can be integrated on-chip with standard transistors for simplified input, output, and control, says Diraq CEO Andrew Dzurak.

The drawback of this approach, however, is that MOS qubits have historically suffered from device-to-device variability, causing significant noise on the qubits.

“The sensitivity in [MOS] qubits is going to be more than in transistors, because in transistors, you still have 20, 30, 40 electrons carrying the current. In a qubit device, you’re really down to a single electron,” says Ravi Pillarisetty, a senior device engineer for Intel quantum hardware who wasn’t involved in the work.

The team’s result not only demonstrated the 99 percent accurate functionality on two-qubit gates of the test devices, but also helped better understand the sources of device-to-device variability. The team tested three devices with three qubits each. In addition to measuring the error rate, they also performed comprehensive studies to glean the underlying physical mechanisms that contribute to noise.

The researchers found that one of the sources of noise was isotopic impurities in the silicon layer, which, when controlled, greatly reduced the circuit complexity necessary to run the device. The next leading cause of noise was small variations in electric fields, likely due to imperfections in the oxide layer of the device. Tanttu says this is likely to improve by transitioning from a laboratory clean room to a foundry environment.

“It’s a great result and great progress. And I think it’s setting the right direction for the community in terms of thinking less about one individual device, or demonstrating something on an individual device, versus thinking more longer term about the scaling path,” Pillarisetty says.

Now, the challenge will be to scale up these devices to more qubits. One difficulty with scaling is the number of input/output channels required. The quantum team at Intel, who are pursuing a similar technology, has recently pioneered a chip they call Pando Tree to try to address this issue. Pando Tree will be on the same substrate as the quantum processor, enabling faster inputs and outputs to the qubits. The Intel team hopes to use it to scale to thousands of qubits. “A lot of our approach is thinking about, how do we make our qubit processor look more like a modern CPU?” says Pillarisetty.

Similarly, Diraq CEO Dzurak says his team plan to scale their technology to thousands of qubits in the near future through a recently announced partnership with Global Foundries. “With Global Foundries, we designed a chip that will have thousands of these [MOS qubits]. And these will be interconnected by using classical transistor circuitry that we designed. This is unprecedented in the quantum computing world,” Dzurak says.

-

Where VR Gaming Took a Wrong Turn

by Marcus Carter on 10. September 2024. at 13:00

In 2017 Mark Zuckerberg stated a bold goal: He wanted one billion people to try virtual reality (VR) by 2027. While he still has a few years to pull it off, the target remains impossibly farfetched. The most recent estimates place total worldwide VR headset sales at only 34 million.

VR Gaming was expected to lead this uptake, but why hasn’t it? We believe that VR gaming has been held back by game developers who are committed to a fantasy. In this fantasy, VR games align with the values of “hardcore” gamer culture, with advanced graphics and wholly immersive play. Aspirational attempts to reach this flawed fantasy have squashed the true potential of VR for gaming.

VR Gaming’s Contemporary Emergence

The 1990s and 2000s saw several ill-fated attempts to launch VR gaming systems—including the Sega VR system, which the company promoted breathlessly but then never released, because it gave players motion sickness and headaches. But VR gaming’s contemporary emergence really began in August 2009, when then-17-year-old Palmer Luckey began posting on a VR enthusiasts forum about his plan to make a head-mounted VR gaming device. One early reader of Luckey’s posts was John Carmack, lead programmer for several of the most influential first-person shooter games, including Doom and Wolfenstein.

Palmer Luckey, shown here in 2013 at the age of 20, holds an early Oculus Rift virtual reality head-mounted display.Allen J. Schaben/Los Angeles Times/Getty Images

Palmer Luckey, shown here in 2013 at the age of 20, holds an early Oculus Rift virtual reality head-mounted display.Allen J. Schaben/Los Angeles Times/Getty Images

While working on the remaster of Doom 3—which included support for 3D displays—Carmack was experimenting with different VR headsets that were available at the time. The two connected through their forum posts, and Luckey sent one of his prototype VR headsets to Carmack. When Carmack took the prototype to the major gaming expo E3 in 2012, it catalyzed an avalanche of interest in the project.

Carmack’s involvement put Luckey’s newly formed company, Oculus VR, on a trajectory towards a particular kind of gaming: the hyper-violent games with high-fidelity graphics that hardcore gamers revere. Carmack, far beyond anyone else, pioneered the genre of hardcore games with his first-person shooter games.

Sega Visions Magazine promoted Sega VR in its August/September 1993 issue. Sega

Sega Visions Magazine promoted Sega VR in its August/September 1993 issue. Sega

Here’s how the gaming scholar Shira Chess sums up the genre: “Traditionally, ‘hardcore’ describes games that are difficult to learn, expensive, and unforgiving of mistakes and that must be played over longer periods of time. Conversely, casual games can be learned quickly, are forgiving of mistakes and cheap or free, and can be played for either longer or shorter periods of time, depending on one’s schedule.”

Oculus’s Kickstarter campaign in 2012 was proudly “designed for gamers, by gamers.” Soon after, Meta (then Facebook) acquired Oculus for US $3 billion in March 2014. The acquisition enraged many of those in the gaming community and those who had backed the original Kickstarter. Facebook was already an unpopular platform with the tech-enthusiast community, associated more closely with data collection and surveillance than gaming. If Facebook was associated with gaming, it was with casual social media games like Farmville and Bejeweled. But as it turns out, Meta went on to invest billions in VR, a level of investment highly unlikely if Oculus had remained independent.

The Three Wrong Assumptions of VR Gaming

VR’s origin in hardcore gaming culture resulted in VR game development being underpinned by three false assumptions about the types of experiences that would (or could) make VR gaming successful. These assumptions were that gamers wanted graphical realism and fast-paced violence, and that they didn’t want casual play experiences.

Over the past three decades, “AAA” game development—a term used in the games industry to signify high-budget games distributed by large publishers—has driven the massive expansion of computing power in consumer gaming devices. Particularly in PC gaming, part of what made a game hardcore was the computing power needed to run it at “maximum settings,” with the most detailed and textured graphics available.

The enormous advances in game graphics over the past 30 years contributed to significant improvements in player experience. This graphical realism became closely entwined with the concept of immersion.

For VR—which sold itself as “ truly immersive”—this meant that hardcore gamers expected graphically real VR experiences. But VR environments need to be rendered smoothly in order to not cause motion sickness, something made harder by a commitment to graphical realism. This aspiration saddling VR games with a nearly impossible compute burden.

One game that sidesteps this issue—and has subsequently become one of the most celebrated VR games—is Superhot VR, an action puzzle with basic graphics in which enemy avatars and their bullets only move when the player moves their body.

The video game Superhot VR remains one of the top-selling VR games years after its release due to its unique experience of time manipulation through body movements.Superhot VR

The video game Superhot VR remains one of the top-selling VR games years after its release due to its unique experience of time manipulation through body movements.Superhot VR

Play begins with the player surrounded by attacking enemies, with death immediately returning the player to the starting moment. Play thus involves discovering what sequence of movements and attacks can get the player out of this perilous situation. It’s a learning curve reminiscent of the 2014 science-fiction film Edge of Tomorrow, in which a hapless soldier (played by Tom Cruise) quicky becomes an elite, superhuman soldier while stuck in a time loop.

The attention in Superhot’s gameplay is not to visual fidelity or sensory immersion, but what genuinely makes VR distinct: embodiment. The effect of its conceit is a superhuman-like control of time manipulation, with players deftly contorting their bodies to evade slow moving bullets while dispatching enemies with an empowering ease. Superhot VR provides an experience worth donning a headset for, and it consequently remains one of VR gaming’s top selling titles eight years after its release.

When Immersion Is Too Much

John Carmack’ Doom and Wolfenstein, on which VR’s gaming fantasy was based, are first-person shooters that closely map to hardcore gaming ideals. They’re hyperviolent, fast-paced, and difficult; they have a limited focus on story; and they feature some of the goriest scenes in games. In the same way that VR gaming has been detrimentally entwined with the pursuit of photorealism, VR gaming has been co-opted by these hardcore values that ultimately limit the medium. They lack mainstream appeal and valorise experiences that simply aren’t as appealing in VR as it is in a flat screen.

In a discussion around the design of Half Life: Alyx—one of the only high-budget VR-only games—designers Greg Coomer and Robin Walker explain that VR changes the way that people interact with virtual environments. As Coomer says, “people are slower to traverse space, and they want to slow down and be more interactive with more things in each environment. It has affected, on a fundamental level, how we’ve constructed environments and put things together.” Walker adds that the changes aren’t “because of some constraint around how they move through the world, it’s just because they pay so much more attention to things and poke at things.” Environments in VR games are much denser; on PC they feel small, but in VR they feel big.

This in part explains why few games originally designed for flat screens and “ported” to VR have been successful. The rapidly paced hyperviolence best characterized by Doom is simply sensory overload in VR, and the “intensity of being there”—one of Carmack’s aspirations—is unappealing. In VR, unrelenting games are unpleasurable: Most of us aren’t that coordinated, and we can’t play for extended periods of time in VR. It’s physically exhausting.

Casual Virtual Reality?

Beat Saber is a prime example of a game that might be derided as casual, if it weren’t the bestselling VR game of all time. Beat Saber is a music rhythm-matching game, a hybrid of Dance Dance Revolution, Guitar Hero, and Fruit Ninja. In time with electronic music, a playlist of red or blue boxes streams towards the player. Armed with two neon swords—commonly described as light sabers—the player must strike these boxes in the correct direction, denoted by a subtle white arrow.

Striking a box releases a note in the accompanying song, resulting in an experience that is half playing an instrument, and half dance. Well patterned songs create sweeping movements and rhythms reminiscent of the exaggerated gestures used by Nintendo Wii players.

Beat Saber youtube

Beat Saber’s appeal is immersion-through-embodiment, also achieved by disregarding VR’s gaming fantasy of hardcore experiences. With each song being, well, song length, Beat Saber supports a shorter, casual mode of engagement that isn’t pleasurable because it is difficult or competitive, but simply because playing a song feels good.

Gaming in VR has been subjected to a vicious self-reinforcing cycle wherein VR developers create hardcore games, which appeal to a certain kind of hardcore gamer user, whose purchasing habits in turn drive further development of those kinds of games, and not others. Attempts to penetrate this feedback loop have been met with the hostility of VR’s online gaming culture, appropriated from gamer culture at large.

As a result, the scope of VR games remains narrow, and oblivious to the kinds of games that might take VR to its billionth user. Maybe then, the one thing that could save VR gaming is the one possibility that VR enthusiasts decried the most when Facebook purchased Oculus in 2014: Farmville VR.

-

Meet the Teens Whose Tech Reduces Drownings and Fights Air Pollution

by Elizabeth Fuscaldo on 09. September 2024. at 18:00

Drowning is the third leading cause of accidental deaths globally, according to the World Health Organization. The deaths disproportionately impact low- and middle-income communities, whose beaches tend to lack lifeguards because of limited funds. Last year 104 drownings of the 120 reported in the United States occurred on unstaffed beaches.

That fueled Angelina Kim’s drone project. Kim is a senior at the Bishop’s School in La Jolla, Calif. Her Autonomous Unmanned Aerial Vehicle (UAV) System for Ocean Hazard Recognition and Rescue: Scout and Rescue UAV Prototype project was showcased in May at Regeneron’s International Science and Engineering Fair (ISEF) in Los Angeles.

Kim project took first place in this year’s IEEE Presidents’ Scholarship competition: a prize of US $10,000 payable over four years of undergraduate university study. The IEEE Foundation established the award to acknowledge a deserving student whose project demonstrates an understanding of electrical or electronics engineering, computer science, or other IEEE field of interest. The scholarship is administered by IEEE Educational Activities.

Kim has long been motivated to help those in need, especially after her mother’s illness when Kim was a young child.

“I’ve been determined to find ways to create a safer community using technology my whole life,” she says. “I realized as I got more into robotics that technology can be used to protect those in my community.”

Kim and the second- and third-place scholarship winners also received a complimentary IEEE student membership.

In addition, to mark the scholarship’s quarter century, IEEE established a 25th anniversary award to honor a project that is likely to make a difference. It also was given out at ISEF.

Drones that can save swimmers’ lives

Angelina Kim’s autonomous drone system, consisting of a scout and rescue drone, aims to prevent drownings on beaches that have no lifeguards.Lynn Bowlby

Angelina Kim’s autonomous drone system, consisting of a scout and rescue drone, aims to prevent drownings on beaches that have no lifeguards.Lynn Bowlby The autonomous UAV lifeguard system consists of two types of drones: a scout craft and a rescue craft. The scout drone surveys approximately 1 kilometer of shoreline, taking photographs and analyzing them for rip currents, which can be deadly to swimmers.

The scout drone “implements a new version of differential frame displacement and a new depth-risk model,” Kim says. The displacement compares the previous image to the next one and notes in what direction a wave is moving. The algorithm detects rip currents and, using the depth-risk model, focuses on strong currents in the deep end—which are more dangerous. If the scout drone detects a swimmer caught in such a current, it summons the rescue drone. That drone drops a flotation device outfitted with a heaving rope and can pull the endangered swimmer to shore, Kim says.

The rescue drones operate with roll-and-pitch tilt rotors, allowing them to fly in whatever direction and orientation they need to.

Kim says she was shocked and ecstatic to receive the award: “It felt like a dream come true because IEEE has been the organization I’ve always wanted to be a part of.”

She presented her project at two IEEE gatherings this year: the IEEE International Conference on Control and Automation and the IEEE International Conference on Automatic Control and Intelligent Systems.

Kim says some of the roadblocks she encountered with her project have made her a better engineer.

“If everything goes perfectly, then there will not be much to learn,” she says. “But if you fail—like accidentally flying your drone into your parents’ house, like I did—you get opportunities to learn.”

She plans to study electrical or mechanical engineering in college. Eventually, she says, she would like to build and mass-produce technologies that help communities at a low cost.

Spotting carbon dioxide in soil

Sahiti Bulusu’s Carboflux device measures and tracks carbon levels in soil to collect data for climatologists. Lynn Bowlby

Sahiti Bulusu’s Carboflux device measures and tracks carbon levels in soil to collect data for climatologists. Lynn BowlbyExcessive carbon dioxide is a leading cause of global warming and climate change, according to NASA. Burning fossil fuels is a prominent source of carbon dioxide, but it also can be released from the ground.

“People don’t account for the large amounts of CO2 that come from soil,” second-place winner Sahiti Bulusu says. “Carbon flux is the rate at which CO2 is exchanged between the soil and the atmosphere, and can account for 80 percent of net ecosystem carbon exchange.”

Bulusu is a senior at the Basis Independent high school in Fremont, California.

Ecosystem carbon exchange is the transfer of carbon dioxide between the atmosphere and the physical environment. Because the exchange is not often accounted for, there’s a gap in data. To provide additional information, Bulusu created the Carboflux Network.

The sensor node is designed to measure enhanced flux CO2 and add to the Global Ecosystem Monitoring Network. The system contains three main subunits: a flux chamber, an under-soil sensor array, and a microcontroller with a data transmission unit. The enclosed flux chamber houses sensors. When CO2 accumulates in the chamber, the linear increase in carbon dioxide concentration is used to calculate carbon flux. The second subunit uses the gradient method, which measures flux using Fick’s law, looking at the CO2 gradient in the soil, and multiplying it with the soil diffusivity coefficient. Sensors are put in the ground at different depths to measure the gradient.

“Carboflux uses the gas concentration between the depths to predict what the soil carbon flux would be,” Bulusu says.

The system is automated and provides real-time data through microcontrollers and a modem. The device studies soil flux, both above and beneath the ground, she says.

“It is able to be scaled locally and globally,” she says, “helping to pinpoint local carbon sources and carbon sinks.” The data also can give scientists a large-scale global perspective.

Bulusu came up with her idea with help from mentors and professors Helen Dahlke and Elad Levintal.

“They told me about the lack of global carbon flux data, and the idea for a network of CO2 flux sensors,” Bulusu says. “This data is needed for climate modeling and mitigation strategies.

“I didn’t think I would be so passionate about a project. I love science, but I never thought I would be someone who would sit in the rain and cold for hours trying to figure out a problem. I was so invested in this project, and it has come so far.”

Bulusu plans to pursue a degree in computer science or environmental science. Whatever field she choses, she says, she wants to improve the environment with the technology she creates.

She was awarded a $600 scholarship for her project.

Accessible communication for ALS patients

Gaze Link, developed by Xiangzhou Sun, uses a mobile app, camera, and artificial intelligence to help those with ALS communicate through their eye movements. Lynn Bowlby

Gaze Link, developed by Xiangzhou Sun, uses a mobile app, camera, and artificial intelligence to help those with ALS communicate through their eye movements. Lynn BowlbyXiangzhou “Jonas” Sun has been volunteering to help people with amyotrophic lateral sclerosis, also known as Lou Gehrig’s disease. After Sun and his family spent time helping ALS patients and assisting caretakers in Hong Kong and the United States, he was inspired to create a mobile app to assist them. He is a senior at the Webb School of California, in Claremont.

While volunteering, he noticed that ALS patients had trouble communicating because of the disease.

“ALS damages neurons in the body, and patients gradually lose the ability to walk, move their hands, and speak,” he says. “My objective with Gaze Link was to build a mobile application that could help ALS patients to type sentences with only their eyes and without external assistance.”

The low-cost smartphone app uses eye-gesture recognition, AI sentence generation, and text-to-speech capabilities to allow people with disabilities linked to ALS to use their phone’s front-end camera to communicate.

Gaze Link now works with three languages: English, Mandarin, and Spanish.

Users’ eye gestures are mapped to words and pronunciations, and an AI next-word-prediction feature is incorporated.

Sun’s passion for his project is palpable.

“My favorite moment was when I brought a prototype of Gaze Link to a caretaker from the ALS Association, and they were overjoyed,” he says. “That moment was really emotional for me because I was motivated by these patients. It gave me a huge sense of achievement that I could finally help them.”

Sun received a $400 scholarship for placing third.

He says he plans to use his engineering know-how to help people with disabilities. He is passionate about design and visual arts as well, and says he hopes to combine them with his engineering skills.

Gaze Link is available through Google Play.

An inclusive way to code

Abhisek Shah’s AuralStudio provides a way for programmers with visual impairments to code using the Rattle language he wrote, which can be read aloud.Lynn Bowlby

Abhisek Shah’s AuralStudio provides a way for programmers with visual impairments to code using the Rattle language he wrote, which can be read aloud.Lynn Bowlby The 25th anniversary award, in the amount of $1,000, was given to Abishek Shah, a senior at Green Level High School, in Cary, N.C.

Last year Shah had a temporary vision issue, which made looking at a computer screen nearly impossible, even for a short time. After that, while visiting family back home in India, he got a chance to interact with some students at a school for blind girls.

During his interactions with the students, he says, he realized they were determined and driven and wanted to be financially independent. None had even considered a career in computer programming, however, believing it to be off-limits because of their visual impairment, he says.

He wondered if there was a solution that could help blind people code.

“How can we redesign and rethink the way we code today?” he asked himself before devising his AuralStudio. He realized he could put his passion for coding to good use and created a software application for those whose vision impairments are permanent.

His AuralStudio development environment allows programmers with a visual disability to write, build, run, and test prototypes.

“All this was built toward helping those with disabilities learn to code,” Shah says.

It eliminates the need for a keyboard and mouse in favor of a custom control pad. It includes a voice-only option for those who cannot use their hands.

The testbed uses Rattle, a programming language that Shah created to be read aloud, both from the computer and the programmer. AuralStudio also uses acyclic digraphs to render code, making it easier and more intuitive. Shah wrote a two-part autocorrect algorithm to prevent homophones and homonyms from causing errors. It was done by integrating AI into the application.

Shah used the programming languages Rust, C, JavaScript, and Python. Raspberry Pi was the primary hardware.

He worked with visually impaired students from the Governor Morehead School of Raleigh, N.C., teaching them programming basics.

“The students were so eager to learn,” he says. “Getting to see their eagerness, grit, and hard-working nature was so heartwarming and probably the best part of this project.”

Shah says he plans to study computer science and business.

“Anything I build will have the end goal of improving the lives of others,” he says.

-

Will the "AI Scientist" Bring Anything to Science?

by Eliza Strickland on 09. September 2024. at 14:41

When an international team of researchers set out to create an “AI scientist” to handle the whole scientific process, they didn’t know how far they’d get. Would the system they created really be capable of generating interesting hypotheses, running experiments, evaluating the results, and writing up papers?

What they ended up with, says researcher Cong Lu, was an AI tool that they judged equivalent to an early Ph.D. student. It had “some surprisingly creative ideas,” he says, but those good ideas were vastly outnumbered by bad ones. It struggled to write up its results coherently, and sometimes misunderstood its results: “It’s not that far from a Ph.D. student taking a wild guess at why something worked,” Lu says. And, perhaps like an early Ph.D. student who doesn’t yet understand ethics, it sometimes made things up in its papers, despite the researchers’ best efforts to keep it honest.

Lu, a postdoctoral research fellow at the University of British Columbia, collaborated on the project with several other academics, as well as with researchers from the buzzy Tokyo-based startup Sakana AI. The team recently posted a preprint about the work on the ArXiv server. And while the preprint includes a discussion of limitations and ethical considerations, it also contains some rather grandiose language, billing the AI scientist as “the beginning of a new era in scientific discovery,” and “the first comprehensive framework for fully automatic scientific discovery, enabling frontier large language models (LLMs) to perform research independently and communicate their findings.”

The AI scientist seems to capture the zeitgeist. It’s riding the wave of enthusiasm for AI for science, but some critics think that wave will toss nothing of value onto the beach.

The “AI for Science” Craze

This research is part of a broader trend of AI for science. Google DeepMind arguably started the craze back in 2020 when it unveiled AlphaFold, an AI system that amazed biologists by predicting the 3D structures of proteins with unprecedented accuracy. Since generative AI came on the scene, many more big corporate players have gotten involved. Tarek Besold, a SonyAI senior research scientist who leads the company’s AI for scientific discovery program, says that AI for science is “a goal behind which the AI community can rally in an effort to advance the underlying technology but—even more importantly—also to help humanity in addressing some of the most pressing issues of our times.”

Yet the movement has its critics. Shortly after a 2023 Google DeepMind paper came out claiming the discovery of 2.2 million new crystal structures (“equivalent to nearly 800 years’ worth of knowledge”), two materials scientists analyzed a random sampling of the proposed structures and said that they found “scant evidence for compounds that fulfill the trifecta of novelty, credibility, and utility.” In other words, AI can generate a lot of results quickly, but those results may not actually be useful.

How the AI Scientist Works

In the case of the AI scientist, Lu and his collaborators tested their system only on computer science, asking it to investigate topics relating to large language models, which power chatbots like ChatGPT and also the AI scientist itself, and the diffusion models that power image generators like DALL-E.

The AI scientist’s first step is hypothesis generation. Given the code for the model it’s investigating, it freely generates ideas for experiments it could run to improve the model’s performance, and scores each idea on interestingness, novelty, and feasibility. It can iterate at this step, generating variations on the ideas with the highest scores. Then it runs a check in Semantic Scholar to see if its proposals are too similar to existing work. It next uses a coding assistant called Aider to run its code and take notes on the results in the format of an experiment journal. It can use those results to generate ideas for follow-up experiments.

The AI scientist is an end-to-end scientific discovery tool powered by large language models. University of British Columbia

The AI scientist is an end-to-end scientific discovery tool powered by large language models. University of British ColumbiaThe next step is for the AI scientist to write up its results in a paper using a template based on conference guidelines. But, says Lu, the system has difficulty writing a coherent nine-page paper that explains its results—”the writing stage may be just as hard to get right as the experiment stage,” he says. So the researchers broke the process down into many steps: The AI scientist wrote one section at a time, and checked each section against the others to weed out both duplicated and contradictory information. It also goes through Semantic Scholar again to find citations and build a bibliography.

But then there’s the problem of hallucinations—the technical term for an AI making stuff up. Lu says that although they instructed the AI scientist to only use numbers from its experimental journal, “sometimes it still will disobey.” Lu says the model disobeyed less than 10 percent of the time, but “we think 10 percent is probably unacceptable.” He says they’re investigating a solution, such as instructing the system to link each number in its paper to the place it appeared in the experimental log. But the system also made less obvious errors of reasoning and comprehension, which seem harder to fix.

And in a twist that you may not have seen coming, the AI scientist even contains a peer review module to evaluate the papers it has produced. “We always knew that we wanted some kind of automated [evaluation] just so we wouldn’t have to pour over all the manuscripts for hours,” Lu says. And while he notes that “there was always the concern that we’re grading our own homework,” he says they modeled their evaluator after the reviewer guidelines for the leading AI conference NeurIPS and found it to be harsher overall than human evaluators. Theoretically, the peer review function could be used to guide the next round of experiments.

Critiques of the AI Scientist

While the researchers confined their AI scientist to machine learning experiments, Lu says the team has had a few interesting conversations with scientists in other fields. In theory, he says, the AI scientist could help in any field where experiments can be run in simulation. “Some biologists have said there’s a lot of things that they can do in silico,” he says, also mentioning quantum computing and materials science as possible fields of endeavor.

Some critics of the AI for science movement might take issue with that broad optimism. Earlier this year, Jennifer Listgarten, a professor of computational biology at UC Berkeley, published a paper in Nature Biotechnology arguing that AI is not about to produce breakthroughs in multiple scientific domains. Unlike the AI fields of natural language processing and computer vision, she wrote, most scientific fields don’t have the vast quantities of publicly available data required to train models.

Two other researchers who study the practice of science, anthropologist Lisa Messeri of Yale University and psychologist M.J. Crockett of Princeton University, published a 2024 paper in Nature that sought to puncture the hype surrounding AI for science. When asked for a comment about this AI scientist, the two reiterated their concerns over treating “AI products as autonomous researchers.” They argue that doing so risks narrowing the scope of research to questions that are suited for AI, and losing out on the diversity of perspectives that fuels real innovation. “While the productivity promised by ‘the AI Scientist’ may sound appealing to some,” they tell IEEE Spectrum, “producing papers and producing knowledge are not the same, and forgetting this distinction risks that we produce more while understanding less.”

But others see the AI scientist as a step in the right direction. SonyAI’s Besold says he believes it’s a great example of how today’s AI can support scientific research when applied to the right domain and tasks. “This may become one of a handful of early prototypes that can help people conceptualize what is possible when AI is applied to the world of scientific discovery,” he says.

What’s Next for the AI Scientist

Lu says that the team plans to keep developing the AI scientist, and he says there’s plenty of low-hanging fruit as they seek to improve its performance. As for whether such AI tools will end up playing an important role in the scientific process, “I think time will tell what these models are good for,” Lu says. It might be, he says, that such tools are useful for the early scoping stages of a research project, when an investigator is trying to get a sense of the many possible research directions—although critics add that we’ll have to wait for future studies to see if these tools are really comprehensive and unbiased enough to be helpful.

Or, Lu says, if the models can be improved to the point that they match the performance of “a solid third-year Ph.D. student,” they could be a force multiplier for anyone trying to pursue an idea (at least, as long as the idea is in an AI-suitable domain). “At that point, anyone can be a professor and carry out a research agenda,” says Lu. “That’s the exciting prospect that I’m looking forward to.”

-

Greener Steel Production Requires More Electrochemical Engineers

by Dan Steingart on 08. September 2024. at 13:00

In the 1800s, aluminum was considered more valuable than gold or silver because it was so expensive to produce the metal in any quantity. Thanks to the Hall-Héroult smelting process, which pioneered the electrochemical reduction of aluminum oxide in 1886, electrochemistry advancements made aluminum more available and affordable, rapidly transforming it into a core material used in the manufacturing of aircraft, power lines, food-storage containers and more.

As society mobilizes against the pressing climate crisis we face today, we find ourselves seeking transformative solutions to tackle environmental challenges. Much as electrochemistry modernized aluminum production, science holds the key to revolutionizing steel and iron manufacturing.

Electrochemistry can help save the planet

As the world embraces clean energy solutions such as wind turbines, electric vehicles, and solar panels to address the climate crisis, changing how we approach manufacturing becomes critical. Traditional steel production—which requires a significant amount of energy to burn fossil fuels at temperatures exceeding 1,600 °C to convert ore into iron—currently accounts for about 10 percent of the planet’s annual CO2 emissions. Continuing with conventional methods risks undermining progress toward environmental goals.

Scientists already are applying electrochemistry—which provides direct electrical control of oxidation-reduction reactions—to convert ore into iron. The conversion is an essential step in steel production and the most emissions-spewing part. Electrochemical engineers can drive the shift toward a cleaner steel and iron industry by rethinking and reprioritizing optimizations.

When I first studied engineering thermodynamics in 1998, electricity—which was five times the price per joule of heat—was considered a premium form of energy to be used only when absolutely required.

Since then the price of electricity has steadily decreased. But emissions are now known to be much more harmful and costly.

Engineers today need to adjust currently accepted practices to develop new solutions that prioritize mass efficiency over energy efficiency.

In addition to electrochemical engineers working toward a cleaner steel and iron industry, advancements in technology and cheaper renewables have put us in an “electrochemical moment” that promises change across multiple sectors.

The plummeting cost of photovoltaic panels and wind turbines, for example, has led to more affordable renewable electricity. Advances in electrical distribution systems that were designed for electric vehicles can be repurposed for modular electrochemical reactors.

Electrochemistry holds the potential to support the development of clean, green infrastructure beyond batteries, electrolyzers, and fuel cells. Electrochemical processes and methods can be scaled to produce metals, ceramics, composites, and even polymers at scales previously reserved for thermochemical processes. With enough effort and thought, electrochemical production can lead to billions of tons of metal, concrete, and plastic. And because electrochemistry directly accesses the electron transfer fundamental to chemistry, the same materials can be recycled using renewable energy.

As renewables are expected to account for more than 90 percent of global electricity expansion during the next five years, scientists and engineers focused on electrochemistry must figure out how best to utilize low-cost wind and solar energy.

The core components of electrochemical systems, including complex oxides, corrosion-resistant metals, and high-power precision power converters, are now an exciting set of tools for the next evolution of electrochemical engineering.

The scientists who came before have created a stable set of building blocks; the next generation of electrochemical engineers needs to use them to create elegant, reliable reactors and other systems to produce the processes of the future.

Three decades ago, electrochemical engineering courses were, for the most part, electives and graduate-level. Now almost every institutional top-ranked R&D center has full tracks of electrochemical engineering. Students interested in the field should take both electroanalytical chemistry and electrochemical methods classes and electrochemical energy storage and materials processing coursework.

Although scaled electrochemical production is possible, it is not inevitable. It will require the combined efforts of the next generation of engineers to reach its potential scale.

Just as scientists found a way to unlock the potential of the abundant, once-unattainable aluminum, engineers now have the opportunity to shape a cleaner, more sustainable future. Electrochemistry has the power to flip the switch to clean energy, paving the way for a world in which environmental harmony and industrial progress go hand in hand.

-

Get to Know the IEEE Board of Directors

by IEEE on 06. September 2024. at 18:00

The IEEE Board of Directors shapes the future direction of IEEE and is committed to ensuring IEEE remains a strong and vibrant organization—serving the needs of its members and the engineering and technology community worldwide—while fulfilling the IEEE mission of advancing technology for the benefit of humanity.

This article features IEEE Board of Directors members A. Matt Francis, Tom Murad, and Christopher Root.

IEEE Senior Member A. Matt Francis

Director, IEEE Region 5: Southwestern U.S.

Moriah Hargrove Anders

Moriah Hargrove AndersFrancis’s primary technology focus is extreme environment and high-temperature integrated circuits. His groundbreaking work has pushed the boundaries of electronics, leading to computers operating in low Earth orbit for more than a year on the International Space Station and on jet engines. Francis and his team have designed and built some of the world’s most rugged semiconductors and systems.

He is currently helping explore new computing frontiers in supersonic and hypersonic flight, geothermal energy exploration, and molten salt reactors. Well versed in shifting technology from idea to commercial application, Francis has secured and led projects with the U.S. Air Force, DARPA, NASA, the National Science Foundation, the U.S. Department of Energy, and private-sector customers.

Francis’s influence extends beyond his own ventures. He is a member of the IEEE Aerospace and Electronic Systems, IEEE Computer, and IEEE Electronics Packaging societies, demonstrating his commitment to industry and continuous learning.

He attended the University of Arkansas in Fayetteville for both his undergraduate and graduate degrees. He joined IEEE while at the university and was president of the IEEE–Eta Kappa Nu honor society’s Gamma Phi chapter. Francis’s other past volunteer roles include serving as chair of the IEEE Ozark Section, which covers Northwest Arkansas, and also as a member of the IEEE-USA Entrepreneurship Policy Innovation Committee.

His deep-rooted belief in the power of collaboration is evident in his willingness to share knowledge and support aspiring entrepreneurs. Francis is proud to have helped found a robotics club (an IEEE MGA Local Group) in his rural Elkins, Ark., community and to have served on steering committees for programs including IEEE TryEngineering and IEEE-USA’s Innovation, Research, and Workforce Conferences. He serves as an elected city council member for his town, and has cofounded two non-profits, supporting his community and the state of Arkansas.

Francis’s journey from entrepreneur to industry leader is a testament to his determination and innovative mindset. He has received numerous awards including the IEEE-USA Entrepreneur Achievement Award for Leadership in Entrepreneurial Spirit, IEEE Region 5 Directors Award, and IEEE Region 5 Outstanding Individual Member Achievement Award.

IEEE Senior Member Tom Murad

Director, IEEE Region 7: Canada

Siemens Canada

Siemens CanadaMurad is a respected technology leader, award-winning educator, and distinguished speaker on engineering, skills development, and education. Recently retired, he has 40 years of experience in professional engineering and technical operations executive management, including more than 10 years of academic and R&D work in industrial controls and automation.

He received his doctorate (Ph.D.) degree in power electronics and industrial controls from Loughborough University of Technology in the U.K.

Murad has held high-level positions in several international engineering and industrial organizations, and he contributed to many global industrial projects. His work on projects in power utilities, nuclear power, oil and gas, mining, automotive, and infrastructure industries has directly impacted society and positively contributed to the economy. He is a strong advocate of innovation and creativity, particularly in the areas of digitalization, smart infrastructure, and Industry 4.0. He continues his academic career as an adjunct professor at University of Guelph in Ontario, Canada.

His dedication to enhancing the capabilities of new generations of engineers is a source of hope and optimism. His work in significantly improving the quality and relevance of engineering and technical education in Canada is a testament to his commitment to the future of the engineering profession and community. For that he has been assigned by the Ontario Government to be a member of the board of directors of the Post Secondary Education Quality Assessment Board (PEQAB).

Murad is a member of the IEEE Technology and Engineering Management, IEEE Education, IEEE Intelligent Transportation Systems, and IEEE Vehicular Technology societies, the IEEE-Eta Kappa Nu honor society, and the Editorial Advisory Board Chair for the IEEE Canadian Review Magazine. His accomplishments show his passion for the engineering profession and community.

He is a member of the Order of Honor of the Professional Engineers of Ontario, Canada, Fellow of Engineers Canada, Fellow of Engineering Institutes of Canada (EIC), and received the IEEE Canada J.M. Ham Outstanding Engineering Educator Award, among other recognitions highlighting his impact on the field.

IEEE Senior Member Christopher Root

Director, Division VII

Vermont Electric Power Company and Shana Louiselle

Vermont Electric Power Company and Shana LouiselleRoot has been in the electric utility industry for more than 40 years and is an expert in power system operations, engineering, and emergency response. He has vast experience in the operations, construction, and maintenance of transmission and distribution utilities, including all phases of the engineering and design of power systems. He has shared his expertise through numerous technical presentations on utility topics worldwide.

Currently an industry advisor and consultant, Root focuses on the crucial task of decarbonizing electricity production. He is engaged in addressing the challenges of balancing an increasing electrical market and dependence on renewable energy with the need to provide low-cost, reliable electricity on demand.

Root’s journey with IEEE began in 1983 when he attended his first meeting as a graduate student at Rensselaer Polytechnic Institute, in Troy, N.Y. Since then, he has served in leadership roles such as treasurer, secretary, and member-at-large of the IEEE Power & Energy Society (PES). His commitment to the IEEE mission and vision is evident in his efforts to revitalize the dormant IEEE PES Boston Chapter in 2007 and his instrumental role in establishing the IEEE PES Green Mountain Section in Vermont in 2015. He also is a member of the editorial board of the IEEE Power & Energy Magazine and the IEEE–Eta Kappa Nu honor society.

Root’s contributions and leadership in the electric utility industry have been recognized with the IEEE PES Leadership in Power Award and the PES Meritorious Service Award. -

Video Friday: HAND to Take on Robotic Hands

by Evan Ackerman on 06. September 2024. at 15:53

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We also post a weekly calendar of upcoming robotics events for the next few months. Please send us your events for inclusion.

ICRA@40: 23–26 September 2024, ROTTERDAM, NETHERLANDS

IROS 2024: 14–18 October 2024, ABU DHABI, UAE

ICSR 2024: 23–26 October 2024, ODENSE, DENMARK

Cybathlon 2024: 25–27 October 2024, ZURICH

Enjoy today’s videos!

The National Science Foundation Human AugmentatioN via Dexterity Engineering Research Center (HAND ERC) was announced in August 2024. Funded for up to 10 years and $52 million, the HAND ERC is led by Northwestern University, with core members Texas A&M, Florida A&M, Carnegie Mellon, and MIT, and support from Wisconsin-Madison, Syracuse, and an innovation ecosystem consisting of companies, national labs, and civic and advocacy organizations. HAND will develop versatile, easy-to-use dexterous robot end effectors (hands).

[ HAND ]

The Environmental Robotics Lab at ETH Zurich, in partnership with Wilderness International (and some help from DJI and Audi), is using drones to sample DNA from the tops of trees in the Peruvian rainforest. Somehow, the treetops are where 60 to 90 percent of biodiversity is found, and these drones can help researchers determine what the heck is going on up there.

[ ERL ]

Thanks, Steffen!

1X introduces NEO Beta, “the pre-production build of our home humanoid.”

“Our priority is safety,” said Bernt Børnich, CEO at 1X. “Safety is the cornerstone that allows us to confidently introduce NEO Beta into homes, where it will gather essential feedback and demonstrate its capabilities in real-world settings. This year, we are deploying a limited number of NEO units in selected homes for research and development purposes. Doing so means we are taking another step toward achieving our mission.”

[ 1X ]

We love MangDang’s fun and affordable approach to robotics with Mini Pupper. The next generation of the little legged robot has just launched on Kickstarter, featuring new and updated robots that make it easy to explore embodied AI.

The Kickstarter is already fully funded after just a day or two, but there are still plenty of robots up for grabs.

[ Kickstarter ]

Quadrupeds in space can use their legs to reorient themselves. Or, if you throw one off a roof, it can learn to land on its feet.

To be presented at CoRL 2024.

[ ARL ]

HEBI Robotics, which apparently was once headquartered inside a Pittsburgh public bus, has imbued a table with actuators and a mind of its own.

[ HEBI Robotics ]

Carcinization is a concept in evolutionary biology where a crustacean that isn’t a crab eventually becomes a crab. So why not do the same thing with robots? Crab robots solve all problems!

[ KAIST ]

Waymo is smart, but also humans are really, really dumb sometimes.

[ Waymo ]

The Robotics Department of the University of Michigan created an interactive community art project. The group that led the creation believed that while roboticists typically take on critical and impactful problems in transportation, medicine, mobility, logistics, and manufacturing, there are many opportunities to find play and amusement. The final piece is a grid of art boxes, produced by different members of our robotics community, which offer an eight-inch-square view into their own work with robotics.

I appreciate that UBTECH’s humanoid is doing an actual job, but why would you use a humanoid for this?

[ UBTECH ]

I’m sure most actuators go through some form of life-cycle testing. But if you really want to test an electric motor, put it into a BattleBot and see what happens.

Yes, but have you tried fighting a BattleBot?

[ AgileX ]

In this video, we present collaboration aerial grasping and transportation using multiple quadrotors with cable-suspended payloads. Grasping using a suspended gripper requires accurate tracking of the electromagnet to ensure a successful grasp while switching between different slack and taut modes. In this work, we grasp the payload using a hybrid control approach that switches between a quadrotor position control and a payload position control based on cable slackness. Finally, we use two quadrotors with suspended electromagnet systems to collaboratively grasp and pick up a larger payload for transportation.

[ Hybrid Robotics ]

I had not realized that the floretizing of broccoli was so violent.

[ Oxipital ]

While the RoboCup was held over a month ago, we still wanted to make a small summary of our results, the most memorable moments, and of course an homage to everyone who is involved with the B-Human team: the team members, the sponsors, and the fans at home. Thank you so much for making B-Human the team it is!

[ B-Human ]

-

When "AI for Good" Goes Wrong

by Payal Arora on 05. September 2024. at 14:59

This guest article is adapted from the author’s new book From Pessimism to Promise: Lessons from the Global South on Designing Inclusive Tech, published by MIT Press.

What do AI-enabled rhino collars in South Africa, computer-vision pest-detection drones in the Punjab farmlands, and wearable health devices in rural Malawi have in common?

These initiatives are all part of the AI for Good movement, which aligns AI technologies with the United Nations sustainable development goals to find solutions for global challenges like poverty, health, education, and environmental sustainability.

MIT Press

MIT PressThe hunger for AI-based solutions is understandable. In 2023, 499 rhinos were killed by poachers in South Africa, an increase of more than 10 percent from 2022. Several farmers in Punjab lost about 90 percent of their cotton yield to the pink bollworm; if the pest had been detected in time, they could have saved their crops. As for healthcare, despite decades of effort to boost the numbers of healthcare practitioners in rural areas, they continue to migrate to cities.

What makes AI “good,” though? Why do we need to preface AI applications in the Global South with morality and charity? And will noble intent translate to making AI tools work for the majority of the world?

A Changed Reality

The fact is, the Global South of decades ago does not exist.

Today the countries in the Global South are more confident, more entrepreneurial, and are taking leadership to pioneer locally appropriate AI tools that work for their people. Startups understand that the success of new tech is contingent on leveraging local knowledge for meaningful adoption and scaling.

The old formula of “innovate in the West and disseminate to the rest” is out of sync with this new reality. While the West holds onto its old missionary zeal, the South-South collaboration continues to grow, sharing new tech and building AI governance. What’s more, some tech altruism initiatives have come under scrutiny as they obfuscate their data extraction activities, making them more transactional than charitable.

The Market for Tech Altruism

In August, the European Union’s legal framework on AI, the AI Act, entered into force. Its measures are meant to help citizens and stakeholders optimize these tools while mitigating the risks. There is little mention of AI for good in their documents; it’s simply the default. Yet, as we shift from the Global North to the South, morality kicks in.

Tech altruism underlines this shift. Many of the AI for Good initiatives are funded by tech philanthropists in partnership with global aid agencies. Doing good manifests in piloting tech solutions, with the Global South as a live laboratory. A running joke with development workers is that their field suffers from “pilotitis,” an acute syndrome of pilot projects that never scale. The Global South is typically viewed as a recipient, a market, a beneficiary for techno-solutionism.

Take AI collars for rhinos. The Conservation Collar initiative in South Africa, for example, detects abnormal behavior, and these signals are sent to an AI system that computes the probability of risk. If it determines that the animal is at urgent risk, the rangers can hypothetically act immediately to stop the poaching. But when my team investigated the ground realities, we found that rangers face numerous obstacles to fast action, including dirt roads, old vehicles, and long distances. Many rangers had not been paid in months, and their motivation was low. And to top all this, they faced an armed militia protecting a multibillion rhino trade business.

In Punjab, drones with computer vision can guide farmers to detect pests before they destroy crops. The Global Alliance for Climate-Smart Agriculture funds projects involving many such AI-enabled technologies as farmers face the vagaries of the climate crisis. However, detection is just one part of a larger problem. Farmers struggle with poor quality and unaffordable pesticides, loan sharks, the vulnerabilities brought on by monocropping, and water scarcity. Agricultural innovators complain that there are few early adopters of their tech, however good their tools may be. Afterall, young people in the Global South increasingly don’t see their future in farming.

Meanwhile, we’ve seen philanthropies such as the Bill & Melinda Gates Foundation launch grand challenges for AI to help alleviate burdens on African healthcare systems. This has resulted in winners such as IntelSurv in Malawi, an intelligent disease surveillance data feedback system that computes data from smart wearables. Yet, even with hundreds of patents for such devices being registered every year, they’re not yet capable of consistently capturing high-quality data. In places like Malawi, these devices may become the single source of training data for healthcare AI, amplifying errors in their healthcare system

The fact is, we can’t really solve problems with AI without accompanying social reforms. Building proper roads or paying your rangers on time is not an innovation, it’s common sense. Likewise, whether it’s in the healthcare or the agricultural sector, people need social incentives to adopt these technologies. Otherwise these AI tools will remain in the wild, and won’t be domesticated.

Data Is Currency

Tech altruism has increasingly become suspect as AI companies are now facing an acute data shortage. They’re scrambling for data in the Global South, where majority of tech users live. Take, for instance, the case of Worldcoin, co-founded by OpenAI CEO Sam Altman. It plans to become “the world’s largest privacy-preserving human identity and financial network, giving ownership to everyone.” Worldcoin started as a nonprofit in 2019 by collecting biometric data, mostly in Global South countries, through its “orb” device and in exchange for cryptocurrency. Today, it’s a for-profit entity and is under investigation by many countries for its dubious data-collection methods.

The German nonprofit Heinrich-Böll-Stiftung recently reported on the aggressive growth of digital agricultural platforms across Africa which promise farmers precision agriculture and increased yields via AI-enabled apps. Yet these apps often provide corporations with free access to data about seeds, soil, crops, fertilizers, and weather from the farms where they’re used. Corporations can use AI analytics to weaponize this information, perhaps creating discriminatory agricultural insurance policies or micro-targeting ads for seeds and fertilizers. Similarly, in the health care sector, the Center for Digital Health at Brown University has reported on the selling of personal health data to third-party advertisers without user consent.

The problem is that, unlike private companies that are compelled to follow the law, altruistic initiatives often succeed in circumventing regulations due to their “charitable” intent. Almost a decade ago, Facebook launched Free Basics, which provided access to limited internet services in the Global South by violating net neutrality principles. When India blocked Free Basics in 2015, Mark Zuckerberg appeared shocked and remarked, “Who could possibly be against this?”

Today we ask, who could possibly get on board?

From Paternalism to Partnerships

As of 2024, according to one estimate, the Global South contributes 80 percent to global economic growth. Close to 90 percent of the world’s young population reside in these regions. And it has become a vital space for innovation. In 2018, China entered the global innovation index rankings as one of the top twenty most innovative countries in the world. India’s government has set up its “tech stack,” the largest open source, interoperable, and public digital infrastructure in the world. This stack is enabling entrepreneurs to build their products and services away from the Apple and Google duopoly that constrains competition and choice.

Despite the Global South demonstrating its innovative prowess, the imitator label remains sticky. This perception often translates to Western organizations treating Global South countries as beneficiaries, and not as partners and leaders in global innovation.

It is time we stop underestimating the Global South. Instead, Western organizations should channel their energies by looking at how different consumers can help to rethink opportunity, safeguards, and digital futures for the world’s majority. Inclusion is not an altruistic act. It is an essential element to generating solutions for the wicked problems that humanity faces today.

In designing new tech, we need to shift away from morality-driven design with grandiose visions of doing good. Instead, we should strive for design that focuses on the relationships between people, contexts, and policies.

Designers, programmers, and funders can benefit from listening to what users and entrepreneurs in the Global South have to say about AI intervening in their lives. And policymakers should bury the term “AI for Good.”

Media outlets must stop debating whether tech alone can solve the world’s problems. The real contextual intelligence we need won’t come from AI, but from human beings.

-

How Region Realignment Will Impact IEEE Elections

by IEEE Member and Geographic Activities on 04. September 2024. at 18:00

The work of restructuring IEEE’s geographic regions is well underway. Six U.S. regions will be consolidated into five, joining together the current IEEE Region 1 (Northeastern U.S.) and Region 2 (Eastern U.S.) to form Region 2 (Eastern and Northeastern U.S.). IEEE Region 10 (Asia and Pacific) will be split into two to form Region 10 (North Asia) and Region 11 (South Asia and Pacific).

The restructuring of IEEE’s 10 geographic regions will provide a more equitable representation across its global membership, as outlined in The Institute’s February 2023 article, “IEEE is Realigning Its Geographic Regions.”

The realignment will impact this year’s annual IEEE election process, which runs through 1 October.

In this year’s IEEE annual election, eligible voting members residing in Region 2 will be electing the IEEE Region 2 director-elect for the 2025—2026 term (serving as director in 2027—2028). The elected officer will first serve as director of the current Region 2 in 2027, and then in 2028 as director of the new Region 2.

The eligible voting members residing in Region 10 will be electing the IEEE Region 10 director-elect for the term 2025—2026 (serving as Director in 2027—2028). The elected officer will first serve as director of the current Region 10 in 2027 and then in 2028 as Director of the new Region 10 .

IEEE Member and Geographic Activities is continuing to coordinate the transition in tandem with the other IEEE organizational units in preparation for the realignment, which takes effect in January 2028.

This article appears in the September 2004 print issue.

-

How the Designer of the First Hydrogen Bomb Got the Gig

by Glenn Zorpette on 02. September 2024. at 14:00

By any measure, Richard Garwin is one of the most decorated and successful engineers of the 20th century. The IEEE Life Fellow has won the Presidential Medal of Freedom, the National Medal of Science, France’s La Grande Médaille de l’Académie des Sciences, and is one of just a handful of people elected to all three U.S. National Academies: Engineering, Science, and Medicine. At IBM, where he worked from 1952 to 1993, Garwin was a key contributor or a facilitator on some of the most important products and breakthroughs of his era, including magnetic resonance imaging, touchscreen monitors, laser printers, and the Cooley-Tukey fast Fourier transform algorithm.

And all that was after he did the thing for which he is most famous. At age 23 and at the behest of Edward Teller, Garwin designed the very first working hydrogen bomb, which was referred to as “the Sausage.” It was detonated in a test code-named Ivy Mike at Enewetak Atoll in November 1952, yielding 10.4 megatons of TNT. (The largest detonation before Ivy Mike was of a bomb code-named George, which yielded a mere 225 kilotons.)

Richard Garwin

Richard Garwin is an IBM Fellow Emeritus, an IEEE Life Fellow, and the designer of the first working hydrogen bomb.

Not until 2001—50 years after Garwin’s work on the bomb—did his pivotal role become publicly known. The definitive history of the hydrogen bomb, Richard Rhodes’s Dark Sun: The Making of the Hydrogen Bomb, published in 1995, has barely a page about Garwin. However, in 1979, after suffering a heart attack and contemplating his mortality, Teller sat down with the physicist George A. Keyworth II to record an oral testimony about the project. Teller’s verbal reckoning was kept secret for 22 years, until 2001, at which time a transcript was obtained by The New York Times.

In the transcript, Teller discounts the role of the mathematician Stanislaw Ulam, who was thought to have been Teller’s partner in what is still called the Teller-Ulam configuration. This “configuration” was actually a theory-based framework that envisioned a two-stage thermonuclear device based in part on a fission bomb (the first stage) that would generate the enormous temperatures and pressures needed to trigger a runaway fusion reaction (in the second stage). In the same transcript, Teller lavishes praise on Garwin’s design and declares, “that first design was made by Dick Garwin.” Because of the enduring secrecy around that first thermonuclear bomb, Garwin’s role had been largely unknown outside of a small circle of Los Alamos physicists, mathematicians, and engineers who were involved with the project—notably Teller, Enrico Fermi, Hans Bethe, and Ulam. Teller died in 2003.

Starting in the early 1950s and continuing in parallel with his career at IBM, Garwin also served as an advisor or consultant to U.S. government agencies on some of the most vital tech-related issues, and some of the most prestigious panels, of his times. That work continues to this day with his service as a member of the Jason group, the elite panel that offers technical and scientific advice, often classified, to the U.S. Defense Department and other agencies. Garwin, who has served in advisory roles under every U.S. president from Dwight Eisenhower to Barak Obama, has also been known for his writing and speaking on issues related to nuclear proliferation and arms control.

IEEE Spectrum spoke via videoconference with Garwin, now 96, who was at his home in Westchester County, New York.

Richard Garwin on:

- Returning to Los Alamos to work on the hydrogen bomb

- What it’s like to hold plutonium

- Designing “the Sausage”

- His proudest contributions during his time at IBM

- His friendship with Enrico Fermi

- Whether he considers himself an engineer or a physicist

- His efforts in the nuclear arms-control movement

- The future of nuclear and renewable energy

Garwin arrived at Los Alamos for the second time to work as a physicist in May of 1951. In the interview, he spoke early on, and without prompting, about Edward Teller’s ideas at the time about how a thermonuclear (fusion) bomb would work. Teller had not had much success translating his ideas into a working bomb, in part, Garwin says, because Teller did not understand that the deuterium fuel would “burn” (react) when it was very highly compressed, as it would be in the basic, Teller-Ulam conception of a hydrogen bomb.

Garwin: When I got to Los Alamos for the second time, in 1951, I had already known Edward Teller. He was on the physics faculty of the University of Chicago. And I went to Edward and I said, “What is the progress on your ideas for burning deuterium?” And he told me that he had met with the mathematician, Stanislaw Ulam, who worked for him. Ulam was in his small group. Teller was allowed only about four people in his group, much to his distress. And he resented that. But it was the right choice because you would need an atomic bomb, according to the Ulam-Teller concept. And there was no sense diluting the effort on working on the atomic bomb.

But Edward had had for many years a wrong theorem which he had never written down. He confesses this in his 1979 paper [ Editor’s note: This is the statement dictated to Keyworth after Teller’s heart attack] in which he gives me credit for the hydrogen bomb. But his theorem was that compression wouldn’t help. That if you couldn’t burn deuterium at normal liquid density—I think it’s 0.19 grams per cubic centimeter—you can’t burn it at 100-fold or 1,000-fold density. Everything would just happen faster, 100 times faster, or 1,000 times faster. This was a wrong theorem. He had never written it down, and it was wrong. And when he told Stan Ulam, he said in his 1979 effort, that he had been wasting a lot of time talking to Stan.

And so Edward decided that I would write it [a detailed engineering design for a working hydrogen bomb] up and give him a fair shot. And Ulam’s idea, according to this still-secret document in the Los Alamos report library, was given away by the title of the report. The title of the report that is, and always has been, unclassified. The first part of the title was: “Hydrodynamic Lenses.” The second part of the title was: “and Radiation Mirrors.” [ Editor’s Note: The paper, published in secret in March 1951, is titled, “On Heterocatalytic Detonations I: Hydrodynamic Lenses and Radiation Mirrors,” and it is the paper that contains the first description of the Teller-Ulam configuration.]

That was the option that Teller thought was best. So I went to Teller in his office at Los Alamos, and I asked him what had happened. He said that he had written up the meeting he had had recently with Stan Ulam and that Ulam had proposed acoustic lenses, of which we had 32 on the original implosion weapon [detonated at the Trinity Test near Alamogordo, N.M.]. So you could get 32 segments of the sphere. They had fast and slow explosives. And so most of the mass of the explosive—of the 8 tons of the weapon, probably 4 tons was the lenses, which didn’t count in accelerating the plutonium.

And so that was the Nagasaki bomb and the one that was tested in Alamogordo on July 16, 1945. [Teller] told me about his report, and that was the end of the conversation, except that he said what he really needed was a small experiment to prove to the most skeptical physicists that this was the way to build the atomic bomb and the hydrogen bomb. And I took that as a challenge. I started and tried to make a 20-kiloton experiment, but I couldn’t make one that was sufficiently convincing and decided to make it full scale. And so that’s what I did. I published my report of the Sausage based on the concepts current at the time. I wrote that up and published it in the classified report library, also on July 25th, 1951.

And it was detonated, as Teller says later, “exactly as Dick Garwin had devised it,” on November 1, 1952, so just 16 months afterwards. And it could never have been done faster. And the only way it got done that fast was because I wrote the paper all by myself. I was sitting in the office with Enrico Fermi. I had two offices: One was with Fermi in the theoretical division, and the other was in the physics division, where I was working on developing a means for accelerating deuterons and protons to 100 kilovolts.

Ivy Mike, detonated in the Marshall Islands on 1 November, 1952, was the first successful test of a full-scale thermonuclear device. Richard Garwin designed the bomb used in the test.Los Alamos National Laboratory/AP

Ivy Mike, detonated in the Marshall Islands on 1 November, 1952, was the first successful test of a full-scale thermonuclear device. Richard Garwin designed the bomb used in the test.Los Alamos National Laboratory/APGarwin took exception to my suggestion that Teller “entrusted” him with the design of the first thermonuclear bomb. He also revealed poignant details about the daily routine in his office, which he shared with Enrico Fermi.

Garwin: [Teller] challenged me. He didn’t entrust me. He didn’t know that it could be done. But he said, “I’d like a small experiment that would persuade the most skeptical and that this is the way to do it.” And it persuaded the person who counted—It persuaded [Los Alamos Director] Norris Bradbury, and Norris Bradbury [then allocated more resources for continued work on hydrogen bombs], without asking anybody else, because that’s how things worked then. Truman had said, “We’re going to build a hydrogen bomb,” and nobody knew how to build it. But Truman didn’t say that. People thought that Edward Teller probably knew how to build it. But he had been working on it since 1939, and he didn’t know how to build it, either. He continually complained that he didn’t have enough people. But any time, he could have written down his theorem and found out that it was wrong. But he found out it was wrong when he wrote down what he and Ulam had talked about.

When I sat in the room, it was a very small room, which had two desks, my desk faced Fermi’s desk. I could see him face-to-face. He taught me a lot the first year and the second year. But only the first year did I share an office with him. And that year, he worked with Stan Ulam in the mornings. The coders would come in. And they would deliver the code, the results of their work. They had been following a spreadsheet that Fermi had started. The first few lines he had actually calculated and sat next to the coder and calculated the first few lines of the spreadsheet, which were various zones along the axis of this infinitely long cylinder. And it started at one end, which was enriched with tritium. And so it reacted about 100 times as fast as deuterium itself. And so then, the second line across the spreadsheet would be the second set of zones along the axis. And the third line would be the third set of zones, and so on. And so they would come in with the whole thing, 100 zones, perhaps. And Fermi would discuss that with the coder, and then he would think of what to calculate next with Stan Ulam.

What’s it like to hold plutonium in your hands? Garwin is among the few people on the planet who can tell you.

Garwin: You can put plutonium in your pocket if it’s coated with nickel, as were the original plutonium hemispheres for the atomic bomb. I’ve held it in my two hands. It was a very dangerous thing to do. But at Los Alamos, you could be admitted to the sanctum and hold the nickel-plated plutonium in your hands. It’s warm, like a rabbit. And of course, if you isolate it, it gets even warmer.

Garwin spoke about the basic design of the Sausage, the first thermonuclear bomb. He disclosed how the device persuaded Hans Bethe, a star of the Manhattan Project and later, like Garwin, active in arms-control causes, about the viability of thermonuclear weapons. In a touching aside, he remembered his wife, Lois Garwin, to whom he was married from 1947 until her death in 2018.

Garwin: In order to get the Sausage to work, you needed to have a different way of getting equal forces on all sides. And that was the design of the full-size bomb. We used a normal atomic bomb at one end, and then, as has been revealed since then, a cylinder containing deuterium and surrounding that, a cylinder containing hydrogen. And beyond that, the very heavy container. All of that was at liquid-hydrogen temperature or at liquid-deuterium temperature. I can’t go into more detail at present, even now. So I made a full-size weapon. And it was very big. But I argued with Hans Bethe, who was head of various committees for building the atomic bomb, the hydrogen bomb, even though he didn’t want to build it. He wanted to prove that it couldn’t be built. But he was an honest man and excellent physicist. And so he accepted that it could be built.

But I never saw a weapons test, not even in Nevada. Never saw a weapons test. But I traveled to Hawaii a couple of times during the Ivy series and the George series in order to talk to people who came from the test site back to Hawaii to talk to me and others.

I want to mention my wife, Lois Garwin. I could not have done any of this without her. She died in 2018, February 4. And she was the one who took care of the children, except for waking up and diapering them or feeding them a bottle at night, because I could wake up and go to sleep much faster than she.

Garwin also weighed in on one of the most enduring controversies of nuclear-weapons history, which was the relative contributions of Teller and Ulam to the Teller-Ulam configuration. Garwin was asked, was it really the two of them working on this, or was it all Edward Teller?