IEEE Spectrum

-

Video Friday: Silly Robot Dog Jump

by Evan Ackerman on 16. August 2024. at 16:45

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We also post a weekly calendar of upcoming robotics events for the next few months. Please send us your events for inclusion.

ICRA@40: 23–26 September 2024, ROTTERDAM, NETHERLANDS

IROS 2024: 14–18 October 2024, ABU DHABI, UAE

ICSR 2024: 23–26 October 2024, ODENSE, DENMARK

Cybathlon 2024: 25–27 October 2024, ZURICH, SWITZERLAND

Enjoy today’s videos!

The title of this video is “Silly Robot Dog Jump” and that’s probably more than you need to know.

[ Deep Robotics ]

It’ll be great when robots are reliably autonomous, but until they get there, collaborative capabilities are a must.

[ Robust AI ]

I am so INCREDIBLY EXCITED for this.

[ IIT Istituto Italiano di Tecnologia ]

In this three-minute, one-take video, the LimX Dynamics CL-1 takes on the challenge of continuously loading heavy objects onto shelves in a simulated warehouse, showcasing the advantages of the general-purpose humanoid form factor.

[ LimX Dynamics ]

Birds, bats and many insects can tuck their wings against their bodies when at rest and deploy them to power flight. Whereas birds and bats use well-developed pectoral and wing muscles, how insects control their wing deployment and retraction remains unclear because this varies among insect species. Here we demonstrate that rhinoceros beetles can effortlessly deploy their hindwings without necessitating muscular activity. We validated the hypothesis using a flapping microrobot that passively deployed its wings for stable, controlled flight and retracted them neatly upon landing, demonstrating a simple, yet effective, approach to the design of insect-like flying micromachines.

[ Nature ]

Agility Robotics’ CTO, Pras Velagapudi, talks about data collection, specifically the different kinds of data Agility collects from its real-world robot deployments and what that data is generally used for.

[ Agility Robotics ]

Robots that try really hard but are bad at things are utterly charming.

[ University of Tokyo JSK Lab ]

The DARPA Triage Challenge unsurprisingly has a bunch of robots in it.

[ DARPA ]

The Cobalt security robot has been around for a while, but I have to say, the design really holds up—it’s a good looking robot.

[ Cobalt AI ]

All robots that enter elevators should be programmed to gently sway back and forth to the elevator music. Even if there’s no elevator music.

[ Somatic ]

ABB Robotics and the Texas Children’s Hospital have developed a groundbreaking lab automation solution that uses ABB’s YuMi® cobot to transfer fruit flies (Drosophila melanogaster) used in research on new drugs for neurological conditions such as Alzheimer’s, Huntington’s, and Parkinson’s.

[ ABB ]

Extend Robotics is building embodied AI that enables highly flexible automation for real-world physical tasks. The system features an intuitive, immersive interface for teleoperation, supervision, and training AI models.

[ Extend Robotics ]

The recorded livestream of RSS 2024 is now online, in case you missed anything.

[ RSS 2024 ]

-

IEEE and Keysight Team Up to Teach Kids About Electronics

by Robert Schneider on 15. August 2024. at 18:00

IEEE TryEngineering has partnered with Keysight Technologies to develop lesson plans focused on electronics and power simulation. Keysight provides hardware, software, and services to a wide variety of industries, particularly in the area of electronic measurement.

IEEE TryEngineering, an IEEE Educational Activities program, empowers educators to foster the next generation of technology innovators through free, online access to culturally relevant, developmentally appropriate, and educationally sound instructional resources for teachers and community volunteers.

The lesson plans cover a variety of STEM topics, experience levels, and age ranges. Educators should be able to find an applicable topic for their students, regardless of their grade level or interests.

Lesson plans on circuits

There are already a number of lesson plans available through the Keysight partnership that introduce students to electrical concepts, with more being developed. The most popular one thus far is Series and Parallel Circuits, which has been viewed more than 100 times each month. Teams of pupils predict the difference between a parallel and a series circuit design by building examples using wires, light bulbs, and batteries.
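The prediction at the heart of that lesson is which setup makes identical bulbs glow brighter. The following short Python sketch works it out. It treats each bulb as a fixed resistor, which is a simplification because real filaments change resistance as they heat. It also assumes an ideal battery with no internal resistance. The 6-volt and 10-ohm values are hypothetical and are not taken from the lesson plan.

```python
def series_resistance(resistances):
    """Series: one current path, so the resistances simply add."""
    return sum(resistances)


def parallel_resistance(resistances):
    """Parallel: each branch sees the full voltage, so conductances (1/R) add."""
    return 1 / sum(1 / r for r in resistances)


def bulb_power_series(voltage, r_bulb, count):
    """Power in each of `count` identical bulbs wired in series (P = I^2 * R)."""
    current = voltage / series_resistance([r_bulb] * count)
    return current ** 2 * r_bulb


def bulb_power_parallel(voltage, r_bulb):
    """Power in each bulb wired in parallel: every bulb gets the full voltage (P = V^2 / R)."""
    return voltage ** 2 / r_bulb


# Hypothetical classroom values: a 6 V battery and two 10-ohm bulbs.
V, R = 6.0, 10.0
print("series total:", series_resistance([R, R]), "ohms")
print("parallel total:", parallel_resistance([R, R]), "ohms")
print("each bulb, series:", bulb_power_series(V, R, 2), "W")
print("each bulb, parallel:", bulb_power_parallel(V, R), "W")
```

Under these assumptions each parallel bulb dissipates four times the power of a series bulb. That is why the parallel circuit is the brighter one.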

The newest of the Keysight-sponsored lesson plans, Light Up Name Badge, teaches the basics of circuitry, such as the components of a circuit, series and parallel circuits, and electronic component symbols. Students can apply their newfound knowledge in a design challenge wherein they create a light-up badge with their name.

Developing a workforce through STEM outreach

“Keysight’s commitment to workforce development through preuniversity STEM outreach makes it an ideal partner for IEEE TryEngineering,” says Debra Gulick, director of student and academic education programs for IEEE Educational Activities.

Keysight’s corporate social responsibility vision also makes it a suitable IEEE partner: The company aims to build a better planet by accelerating innovation to connect and secure the world, while employing a global business framework of ethical, environmentally sustainable, and socially responsible operations.

“TryEngineering is proud to be Keysight’s partner in attaining the ambitious goal of bringing engineering lessons to 1 million students in 2024,” Gulick says.

The IEEE STEM Summit, a three-day virtual event in October for IEEE volunteers and educators, is expected to include a session highlighting Keysight’s lesson plans.

Educators and volunteers engaged in outreach activities with students can learn more on the Keysight TryEngineering partnership page.

The arrangement with Keysight was made possible with support from the IEEE Foundation.

-

Optical Metasurfaces Shine a Light on Li-Fi, Lidar

by Margo Anderson on 15. August 2024. at 14:00

A new, tunable smart surface can transform a single pulse of light into multiple beams, each aimed in different directions. The proof-of-principle development opens the door to a range of innovations in communications, imaging, sensing, and medicine.

The research comes out of the Caltech lab of Harry Atwater, a professor of applied physics and materials science, and is possible due to a type of nano-engineered material called a metasurface. “These are artificially designed surfaces which basically consist of nanostructured patterns,” says Prachi Thureja, a graduate student in Atwater’s group. “So it’s an array of nanostructures, and each nanostructure essentially allows us to locally control the properties of light.”

The surface can be reconfigured up to millions of times per second to change how it is locally controlling light. That’s rapid enough to manipulate and redirect light for applications in optical data transmission such as optical space communications and Li-Fi, as well as lidar.

“[The metasurface] brings unprecedented freedom in controlling light,” says Alex M.H. Wong, an associate professor of electrical engineering at the City University of Hong Kong. “The ability to do this means one can migrate existing wireless technologies into the optical regime. Li-Fi and LIDAR serve as prime examples.”

Metasurfaces remove the need for lenses and mirrors

Manipulating and redirecting beams of light typically involves a range of conventional lenses and mirrors. These lenses and mirrors might be microscopic in size, but they still rely on the bulk optical properties of materials, as described by Snell’s law. Snell’s law governs how a wavefront bends, or refracts, as it passes from one material into another, depending on the properties of each material.
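Snell’s law states n₁·sin θ₁ = n₂·sin θ₂. Here n is each material’s index of refraction and θ is the angle from the surface normal. A minimal Python sketch of the relation follows. The indices used below (about 1.0 for air and 1.5 for ordinary glass) are illustrative assumptions.

```python
import math
from typing import Optional


def refraction_angle_deg(n1: float, n2: float, incidence_deg: float) -> Optional[float]:
    """Apply Snell's law, n1 * sin(theta1) = n2 * sin(theta2).

    Returns the refracted angle in degrees, measured from the surface normal,
    or None when no refracted beam exists (total internal reflection).
    """
    s = n1 * math.sin(math.radians(incidence_deg)) / n2
    if abs(s) > 1:
        return None
    return math.degrees(math.asin(s))


# Light entering glass from air bends toward the normal (about 19.5 degrees here).
print(refraction_angle_deg(1.0, 1.5, 30.0))
# Going from glass to air at a steep angle, the light is totally reflected.
print(refraction_angle_deg(1.5, 1.0, 60.0))
```

The bending is fixed by the materials and the shape of the interface. That is why a conventional lens needs carefully curved glass to redirect light.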

By contrast, the new work offers the prospect of electrically manipulating a material’s optical properties via a semiconducting material. Combined with nano-scaled mirror elements, the flat, microscopic devices can be made to behave like a lens, without requiring lengths of curved or bent glass. And the new metasurface’s optical properties can be switched millions of times per second using electrical signals.

“The difference with our device is by applying different voltages across the device, we can change the profile of light coming off of the mirror, even though physically it’s not moving,” says paper co-author Jared Sisler—also a graduate student in Atwater’s group. “And then we can steer the light like it’s an electrically reprogrammable mirror.”

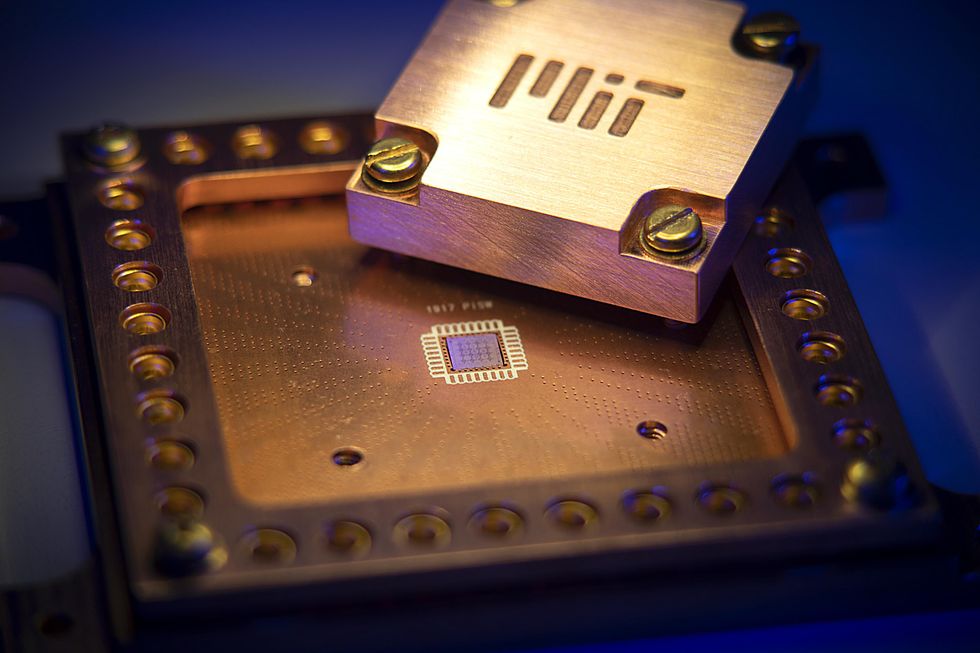

The device itself, a chip that measures 120 micrometers on each side, achieves its light-manipulating capabilities with an embedded surface of tiny gold antennas in a semiconductor layer of indium tin oxide. Manipulating the voltages across the semiconductor alters its index of refraction, the measure of how strongly the material bends light. Between the reflection of the gold mirror elements and the tunable refractive index of the semiconductor, a great deal of rapidly tunable light manipulation becomes possible.
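The article does not spell out how per-element voltages translate into a steering angle. A common way to reason about it comes from phased-array antennas and the so-called generalized Snell’s law. In that picture, a constant phase step Δφ between neighboring elements spaced a pitch p apart tilts the reflected wavefront to sin θ = λ·Δφ / (2π·p).

The Python sketch below makes several assumptions:

- normally incident light;
- idealized elements that can impose any phase with equal reflectivity;
- illustrative numbers (1.55-micrometer light, 0.775-micrometer pitch), which are not the Caltech device’s parameters.

```python
import math
from typing import List, Optional


def steering_angle_deg(wavelength: float, pitch: float, phase_step: float) -> Optional[float]:
    """Reflected-beam angle (degrees from the normal) for normally incident light
    when adjacent elements `pitch` apart add an extra phase `phase_step` (radians).

    Uses sin(theta) = wavelength * phase_step / (2 * pi * pitch). Returns None
    when |sin(theta)| > 1, i.e. no propagating beam exists in that direction.
    """
    s = wavelength * phase_step / (2 * math.pi * pitch)
    if abs(s) > 1:
        return None
    return math.degrees(math.asin(s))


def phase_profile(n_elements: int, wavelength: float, pitch: float,
                  target_deg: float) -> List[float]:
    """Per-element phases (radians, wrapped into [0, 2*pi)) that steer toward
    target_deg. In an electrically tunable surface, each phase would be set by
    the voltage on that element (an idealization in this sketch)."""
    step = 2 * math.pi * pitch * math.sin(math.radians(target_deg)) / wavelength
    return [(k * step) % (2 * math.pi) for k in range(n_elements)]


# Illustrative values in micrometers (assumed, not from the paper).
wavelength, pitch = 1.55, 0.775
print(steering_angle_deg(wavelength, pitch, math.pi / 2))   # steer to ~30 degrees
print(steering_angle_deg(wavelength, pitch, math.pi / 4))   # smaller step, smaller angle
print(phase_profile(4, wavelength, pitch, 30.0))
```

Re-steering the beam only requires loading a different phase profile, with nothing moving physically. In this simplified picture, that is what “an electrically reprogrammable mirror” amounts to.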

“I think the whole idea of using a solid-state metasurface or optical device to steer light in space and also use that for encoding information—I mean, there’s nothing like that that exists right now,” Sisler says. “So I mean, technically, you can send more information if you can achieve higher modulation rates. But since it’s kind of a new domain, the performance of our device is more just to show the principle.”

Metasurfaces open up plenty of new possibilities

The principle, says Wong, suggests a wide array of future technologies on the back of what he says are likely near-term metasurface developments and discoveries.

“The metasurface [can] be flat, ultrathin, and lightweight while it attains the functions normally achieved by a series of carefully curved lenses,” Wong says. “Scientists are currently still unlocking the vast possibilities the metasurface has available to us.

“With improvements in nanofabrication, elements with small feature sizes much smaller than the wavelength are now reliably fabricable,” Wong continues. “Many functionalities of the metasurface are being routinely demonstrated, benefiting not just communication but also imaging, sensing, and medicine, among other fields... I know that in addition to interest from academia, various players from industry are also deeply interested and making sizable investments in pushing this technology toward commercialization.”

-

NIST Announces Post-Quantum Cryptography Standards

by Dina Genkina on 13. August 2024. at 10:01

Today, almost all data on the Internet, including bank transactions, medical records, and secure chats, is protected with an encryption scheme called RSA (named after its creators Rivest, Shamir, and Adleman). This scheme is based on a simple fact—it is virtually impossible to calculate the prime factors of a large number in a reasonable amount of time, even on the world’s most powerful supercomputer. Unfortunately, large quantum computers, if and when they are built, would find this task a breeze, thus undermining the security of the entire Internet.

Luckily, quantum computers are only better than classical ones at a select class of problems, and there are plenty of encryption schemes where quantum computers don’t offer any known advantage. Today, the U.S. National Institute of Standards and Technology (NIST) announced the standardization of three post-quantum cryptography schemes. With these standards in hand, NIST is encouraging computer system administrators to begin transitioning to post-quantum security as soon as possible.

These standards are likely to be a big element of the Internet’s future. NIST’s previous cryptography standards, developed in the 1970s, are used in almost all devices, including Internet routers, phones, and laptops, says Lily Chen, head of the cryptography group at NIST, who led the standardization process. But adoption will not happen overnight.

“Today, public key cryptography is used everywhere in every device,” Chen says. “Now our task is to replace the protocol in every device, which is not an easy task.”

Why we need post-quantum cryptography now

Most experts believe large-scale quantum computers won’t be built for at least another decade. So why is NIST worried about this now? There are two main reasons.

First, many devices that use RSA security, like cars and some IoT devices, are expected to remain in use for at least a decade. So they need to be equipped with quantum-safe cryptography before they are released into the field.

Second, a nefarious individual could potentially download and store encrypted data today, and decrypt it once a large enough quantum computer comes online. This concept is called “harvest now, decrypt later,” and by its nature, it poses a threat to sensitive data now, even if that data can only be cracked in the future.

Security experts in various industries are starting to take the threat of quantum computers seriously, says Joost Renes, principal security architect and cryptographer at NXP Semiconductors. “Back in 2017, 2018, people would ask ‘What’s a quantum computer?’” Renes says. “Now, they’re asking ‘When will the PQC standards come out and which one should we implement?’”

Richard Marty, chief technology officer at LGT Financial Services, agrees. “For us, it’s not an option to just wait and see what happens. We want to be ready and implement solutions as soon as possible, to avoid harvest now and decrypt later.”

NIST’s competition for the best quantum-safe algorithm

NIST announced a public competition for the best PQC algorithm back in 2016. It received a whopping 82 submissions from teams in 25 different countries. Since then, NIST has gone through four elimination rounds, finally whittling the pool down to four algorithms in 2022.

This lengthy process was a community-wide effort, with NIST taking input from the cryptographic research community, industry, and government stakeholders. “Industry has provided very valuable feedback,” says NIST’s Chen.

These four winning algorithms had intense-sounding names: CRYSTALS-Kyber, CRYSTALS-Dilithium, SPHINCS+, and FALCON. Sadly, the names did not survive standardization: The algorithms are now known as Federal Information Processing Standard (FIPS) 203 through 206. FIPS 203, 204, and 205 are the focus of today’s announcement from NIST. FIPS 206, the algorithm previously known as FALCON, is expected to be standardized in late 2024.

The algorithms fall into two categories: general encryption, used to protect information transferred via a public network, and digital signature, used to authenticate individuals. Digital signatures are essential for preventing malware attacks, says Chen.

Every cryptography protocol is based on a math problem that’s hard to solve but easy to check once you have the correct answer. For RSA, it’s factoring large numbers into two primes—it’s hard to figure out what those two primes are (for a classical computer), but once you have one it’s straightforward to divide and get the other.
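The “hard to solve, easy to check” asymmetry can be made concrete with a toy Python sketch. It uses textbook RSA with tiny primes and no padding, which is insecure and shown only for illustration. Trial division stands in for the much faster classical factoring algorithms used in practice. Nothing here models how a quantum computer would attack RSA.

```python
from math import isqrt


def trial_factor(n: int) -> tuple:
    """Hard direction: search for a factor by trial division.
    The number of candidates grows like sqrt(n), i.e. exponentially in n's bit length."""
    if n % 2 == 0:
        return 2, n // 2
    for p in range(3, isqrt(n) + 1, 2):
        if n % p == 0:
            return p, n // p
    raise ValueError(f"{n} has no nontrivial factor")


def is_factorization(n: int, p: int, q: int) -> bool:
    """Easy direction: checking a claimed answer takes one multiplication."""
    return 1 < p < n and 1 < q < n and p * q == n


# Toy RSA key with tiny primes (real keys use primes hundreds of digits long).
p, q = 61, 53
n = p * q                  # public modulus
phi = (p - 1) * (q - 1)    # computing this requires knowing p and q
e = 17                     # public exponent
d = pow(e, -1, phi)        # private exponent: modular inverse (Python 3.8+)

message = 65
ciphertext = pow(message, e, n)
assert pow(ciphertext, d, n) == message  # the key owner decrypts

# An attacker who can factor n rebuilds the private key from public data alone.
p2, q2 = trial_factor(n)
d_recovered = pow(e, -1, (p2 - 1) * (q2 - 1))
print(p2, q2, d_recovered == d)
```

With a 2,048-bit modulus the same trial division would be hopeless, while checking a factorization would still be instant. A large quantum computer running Shor’s algorithm would remove that gap.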

Two out of the three schemes already standardized by NIST, FIPS 203 and FIPS 204 (as well as the upcoming FIPS 206), are based on a different family of hard problems, known as lattice cryptography. A lattice is a regular grid of points extending in many dimensions. Lattice cryptography rests on the tricky problem of finding the shortest nonzero vector in such a lattice, or on closely related problems, when the lattice is described only by a long, skewed set of basis vectors.
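The shortest vector problem is the textbook example of a hard lattice problem. FIPS 203 and 204 are actually built on related problems such as module learning with errors. The Python sketch below therefore illustrates lattice hardness in general, not those standards. It brute-forces a two-dimensional lattice, and the basis vectors are made-up examples.

```python
from itertools import product


def shortest_vector(b1, b2, bound=10):
    """Brute-force the shortest nonzero lattice vector a*b1 + b*b2 over integer
    coefficients with |a|, |b| <= bound. Returns (squared_length, vector, (a, b)).
    The bound is a simplification: in general the shortest vector could need
    larger coefficients."""
    best = None
    for a, b in product(range(-bound, bound + 1), repeat=2):
        if a == 0 and b == 0:
            continue  # the zero vector is excluded by definition
        v = (a * b1[0] + b * b2[0], a * b1[1] + b * b2[1])
        length2 = v[0] ** 2 + v[1] ** 2
        if best is None or length2 < best[0]:
            best = (length2, v, (a, b))
    return best


# A "bad" basis: long, nearly parallel vectors. They generate exactly the same
# lattice as the short basis (1, 2), (3, -1), but the short vectors are hidden.
b1, b2 = (10, -1), (23, -3)
length2, v, coeffs = shortest_vector(b1, b2)
print(length2, v, coeffs)
```

In two dimensions the search space is tiny. In the hundreds of dimensions used in practice, enumerating coefficient combinations this way is infeasible. No known classical or quantum algorithm does dramatically better.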

The third standardized scheme, FIPS 205, is based on hash functions, which convert a message into a short, fixed-length string that is difficult to reverse.
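FIPS 205 (SLH-DSA, the standardized form of SPHINCS+) builds its signatures by combining one-time and few-time hash-based signatures with Merkle trees. The Python sketch below is the far simpler Lamport one-time signature. It is not the FIPS 205 algorithm. It assumes SHA-256 as the hash and shows why security can rest on nothing more than a hash function being hard to invert. Each Lamport key pair must sign only one message.

```python
import hashlib
import secrets


def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen():
    # Private key: 256 pairs of random 32-byte secrets, one pair per digest bit.
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    # Public key: the hash of every secret. Publishing it is safe only
    # as long as SHA-256 cannot be inverted.
    pk = [(H(s0), H(s1)) for s0, s1 in sk]
    return sk, pk


def digest_bits(message: bytes):
    """The 256 bits of SHA-256(message), most significant bit first."""
    return [(byte >> (7 - i)) & 1 for byte in H(message) for i in range(8)]


def sign(sk, message: bytes):
    # Reveal one secret from each pair, selected by the corresponding digest bit.
    return [pair[bit] for pair, bit in zip(sk, digest_bits(message))]


def verify(pk, message: bytes, signature) -> bool:
    bits = digest_bits(message)
    if len(signature) != len(bits):
        return False
    # Each revealed secret must hash to the public-key half its bit selects.
    return all(H(s) == pair[bit] for s, pair, bit in zip(signature, pk, bits))


sk, pk = keygen()
sig = sign(sk, b"post-quantum")
print(verify(pk, b"post-quantum", sig))   # genuine message
print(verify(pk, b"post-quantum!", sig))  # altered message is rejected
```

Forging a signature for a different message would require finding a secret that hashes to an unrevealed public-key value, which means inverting the hash. Signing a second message with the same key would reveal more secrets. That one-time limit is what SPHINCS+ engineers around.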

The standards include the encryption algorithms’ computer code, instructions for how to implement them, and intended uses. There are three levels of security for each protocol, designed to future-proof the standards in case some weaknesses or vulnerabilities are found in the algorithms.

Lattice cryptography survives alarms over vulnerabilities

Earlier this year, a pre-print published to the arXiv alarmed the PQC community. The paper, authored by Yilei Chen of Tsinghua University in Beijing, claimed to show that lattice-based cryptography, the basis of two out of the three NIST protocols, was not, in fact, immune to quantum attacks. On further inspection, Yilei Chen’s argument turned out to have a flaw—and lattice cryptography is still believed to be secure against quantum attacks.

On the one hand, this incident highlights the central problem at the heart of all cryptography schemes: There is no proof that any of the math problems the schemes are based on are actually “hard.” The only evidence, even for the standard RSA algorithms, is that people have been trying to break the encryption for a long time and have all failed. Since post-quantum cryptography standards, including lattice cryptography, are newer, there is less certainty that no one will find a way to break them.

That said, the failure of this latest attempt only adds to the algorithm’s credibility. The flaw in the paper’s argument was discovered within a week, signaling that there is an active community of experts working on this problem. “The result of that paper is not valid, that means the pedigree of the lattice-based cryptography is still secure,” says NIST’s Lily Chen (no relation to Tsinghua University’s Yilei Chen). “People have tried hard to break this algorithm. A lot of people are trying, they try very hard, and this actually gives us confidence.”

NIST’s announcement is exciting, but the work of transitioning all devices to the new standards has only just begun. It is going to take time, and money, to fully protect the world from the threat of future quantum computers.

“We’ve spent 18 months on the transition and spent about half a million dollars on it,” says Marty of LGT Financial Services. “We have a few instances of [PQC], but for a full transition, I couldn’t give you a number, but there’s a lot to do.”

-

Level Up Your Leadership Skills with IEEE Courses

by Nicholas Spada on 12. August 2024. at 19:00

Author and leadership expert John C. Maxwell famously said, “The single biggest way to impact an organization is to focus on leadership development. There is almost no limit to the potential of an organization that recruits good people, raises them up as leaders, and continually develops them.”

Experts confirm that there are clear benefits to fostering leadership by encouraging employees’ professional growth and nurturing and developing company leaders. A culture of leadership development and innovation boosts employee engagement by 20 percent to 25 percent, according to an analysis in the Journal of Applied Psychology. Companies are 22 percent more profitable, on average, when they engage their employees by building a culture of leadership, innovation, and recognition, according to Zippia research.

Developing professionals into strong leaders can have a lasting impact on a company, and the IEEE Professional Development Suite can help make it possible. The training programs in the suite help aspiring technology leaders who want to develop their essential business and management skills. Programs include IEEE Leading Technical Teams, the IEEE | Rutgers Online Mini-MBA for Engineers and Technical Professionals, and the Intensive Wireless Communications and Advanced Topics in Wireless courses offered by the IEEE Communications Society. IEEE also offers topical courses through its eLearning Library.

Tips for leading teams

IEEE Leading Technical Teams is a live, six-hour course offered both in person and virtually. Addressing challenges that come with leading groups, it is designed for team leaders, managers, and directors of engineering and technical teams.

“Participating benefited me and my employer by enhancing my leadership skills in inspiring others to achieve the goals of our organization,” says Stephen Wilkowski, a system test engineer at CACI International in Reston, Va., who completed the training. “I found the leadership practices assessment to be very valuable, as I appreciated the anonymous feedback received from those who I work with. I would recommend the training to anyone desiring to improve their leadership skills.”

Attendees participate in the 360° Leadership Practices Inventory, a tool that solicits confidential feedback on the participant’s strengths and opportunities for improvement from their team members and managers. The program encompasses instructor-led exercises and case studies demonstrating the application of best practices to workplace challenges.

Participants learn the “five practices of exemplary leadership” and receive valuable peer coaching.

To learn more about in-person and virtual options for individuals and companies, complete this form.

A mini-MBA for technologists

The 12-week IEEE | Rutgers Online Mini-MBA for Engineers and Technical Professionals program covers business strategy, new product development management, financial analysis, sales and marketing, and leadership. It includes a combination of expert instruction, peer interaction, self-paced video lessons, interactive assessments, live office hours, and hands-on capstone project experience. The program offers flexible learning opportunities for individual learners as well as customized company cohort options.

“The mini-MBA was a great opportunity to explore other areas of business that I don’t typically encounter,” says graduate Jonathan Bentz, a senior manager at Nvidia. “I have a customer-facing technical role, and the mini-MBA allowed me to get a taste of the full realm of business leadership.”

For more information, see IEEE | Rutgers Online Mini-MBA for Engineers and Technical Professionals.

Training on wireless communications

The Intensive Wireless Communications and the Advanced Topics in Wireless course series are exclusively presented by the IEEE Communications Society.

The Intensive Wireless interactive live course provides training necessary to stay on top of key developments in the dynamic, rapidly evolving communications industry. Designed for those with an engineering background who want to enhance their knowledge of wireless communication technologies, the series is an ideal way to train individual employees or your entire team at once.

The Advanced Topics in Wireless series is for engineers and technical professionals with a working knowledge of wireless who are looking to enhance their skill set. The series dives into recent advancements, applications, and use cases in emerging connectivity.

Participants in the live, online course series develop a comprehensive view of 5G/NR technology, as well as an understanding of the implementation of all the ITU-specified use case categories such as enhanced mobile broadband, mIoT, and ultra-reliable low-latency communication. The series also provides a robust foundation on the network architecture and the evolution of technology, which enables fully open radio access networks.

Learn more about the Advanced Topics in Wireless Course Series by completing this form.

Topics in the eLearning Library

Tailored for professionals, faculty, and students, the IEEE eLearning Library taps into a wealth of expertise from the organization’s global network of more than 450,000 industry and academia members. Courses cover a wide variety of disciplines including artificial intelligence, blockchain technology, cyber and data security, power and energy, telecommunications, and IEEE standards.

You can help foster growth and leadership skills for your organization by offering employees access to hundreds of courses. Start exploring the library by filling out this form.

Completion of course programs offers learners the ability to earn IEEE certificates bearing professional development hours, continuing education units, and digital badges.

-

Amazon Vies for Nuclear-Powered Data Center

by Andrew Moseman on 12. August 2024. at 18:36

When Amazon Web Services paid US $650 million in March for another data center to add to its armada, the tech giant thought it was buying a steady supply of nuclear energy to power it, too. The Susquehanna Steam Electric Station outside of Berwick, Pennsylvania, which generates 2.5 gigawatts of nuclear power, sits adjacent to the humming data center and had been directly powering it since the center opened in 2023.

After striking the deal, Amazon wanted to change the terms of its original agreement to buy 180 megawatts of additional power directly from the nuclear plant. Susquehanna agreed to sell it. But third parties weren’t happy about that, and the deal has become bogged down in a regulatory battle that will likely set a precedent for data centers, cryptocurrency mining operations, and other computing facilities with voracious appetites for clean electricity.

Putting a data center right next to a power plant so that it can draw electricity from it directly, rather than from the grid, is becoming more common as data centers seek out cheap, steady, carbon-free power. Proposals for co-locating data centers next to nuclear power have popped up in New Jersey, Texas, Ohio, and elsewhere. Sweden is considering using small modular reactors to power future data centers.

However, co-location raises questions about equity and energy security, because directly connected data centers can avoid paying fees that would otherwise help maintain grids. They also hog hundreds of megawatts that could be going elsewhere.

“They’re effectively going behind the meter and taking that capacity off of the grid that would otherwise serve all customers,” says Tony Clark, a senior advisor at the law firm Wilkinson Barker Knauer and a former commissioner at the Federal Energy Regulatory Commission (FERC), who has testified to a U.S. House subcommittee on the subject.

Amazon’s nuclear power deal meets hurdles

The dust-up over the Amazon-Susquehanna agreement started in June, after Amazon subsidiary Amazon Web Services filed a notice to change its interconnection service agreement (ISA) in order to buy more nuclear power from Susquehanna’s parent company, Talen Energy. Amazon wanted to increase the amount of behind-the-meter power it buys from the plant from 300 MW to 480 MW. Shortly after it requested the change, utility giants Exelon and American Electric Power (AEP) filed a protest against the agreement and asked FERC to hold a hearing on the matter.

Their complaint: the deal between Amazon and the nuclear plant would hurt a third party, namely all the customers who buy power from AEP or Exelon utilities. The protest document argues that the arrangement would shift up to $140 million in extra costs onto the people of Pennsylvania, New Jersey, and other states served by PJM, a regional transmission organization that oversees the grid in those areas. “Multiplied by the many similar projects on the drawing board, it is apparent that this unsupported filing has huge financial consequences that should not be imposed on ratepayers without sufficient process to determine and evaluate what is really going on,” their complaint says.

Susquehanna dismissed the argument, effectively saying that its deal with Amazon is none of AEP and Exelon’s business. “It is an unlawful attempt to hijack this limited [ISA] amendment proceeding that they have no stake in and turn it into an ad hoc national referendum on the future of data center load,” Susquehanna’s statement said. (AEP, Exelon, Talen/Susquehanna, and Amazon all declined to comment for this story.)

More disputes like this will likely follow as more data centers co-locate with clean energy. Kevin Schneider, a power system expert at Pacific Northwest National Laboratory and research professor at Washington State University, says it’s only natural that data center operators want the constant, consistent nature of nuclear power. “If you look at the base load nature of nuclear, you basically run it up to a power level and leave it there. It can be well aligned with a server farm.”

Data center operators are also exploring energy options from solar and wind, but these energy sources would have a difficult time matching the constancy of nuclear, even with grid storage to help even out their supply. So giant tech firms look to nuclear to keep their servers running without burning fossil fuels, and use that to trumpet their carbon-free achievements, as Amazon did when it bought the data center in Pennsylvania. “Whether you’re talking about Google or Apple or Microsoft or any of those companies, they tend to have corporate sustainability goals. Being served by a nuclear unit looks great on their corporate carbon balance sheet,” Clark says.

Costs of data centers seeking nuclear energy

Yet such arrangements could have major consequences for other energy customers, Clark argues. For one, directing all the energy from a nuclear plant to a data center is, fundamentally, no different from retiring that plant and taking it offline. “It’s just a huge chunk of capacity leaving the system,” he says, resulting in higher prices and less energy supply for everyone else.

Another issue is the “behind-the-meter” aspect of these kinds of deals. A data center could just connect to the grid and draw from the same supply as everyone else, Clark says. But by connecting directly to the power plant, the center’s owner avoids paying the administrative fees that are used to maintain the grid and grow its infrastructure. Those costs could then get passed on to businesses and residents who have to buy power from the grid. “There’s just a whole list of charges that get assessed through the network service that if you don’t connect through the network, you don’t have to pay,” Clark says. “And those charges are the part of the bill that will go up” for everyone else.

Even the “carbon-free” public relations talking points that come with co-location may be suspect in some cases. In Washington State, where Schneider works, new data centers are being planted next to the region’s abundant hydropower stations, and they’re using so much of that energy that parts of the state are considering adding more fossil fuel capacity to make ends meet. This results in a “zero-emissions shell game,” Clark wrote in a white paper on the subject.

These early cases are likely only the beginning. A report posted in May from the Electric Power Research Institute predicts energy demand from data centers will double by 2030, a leap driven by the fact that AI queries need ten times more energy than traditional internet searches. The International Energy Agency puts the timeline for doubling sooner, in 2026. Data centers, AI, and the cryptocurrency sector consumed an estimated 460 terawatt-hours (TWh) in 2022, and could reach more than 1,000 TWh in 2026, the agency predicts.
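The IEA figures imply a steep annual growth rate. Here is a back-of-the-envelope check in Python. It assumes smooth compound growth between the 2022 estimate and the 2026 projection, which real demand will not follow exactly.

```python
import math


def implied_annual_growth(start: float, end: float, years: float) -> float:
    """Constant yearly rate r such that start * (1 + r) ** years == end."""
    return (end / start) ** (1 / years) - 1


def doubling_time_years(rate: float) -> float:
    """Years needed to double at a constant compound growth rate."""
    return math.log(2) / math.log(1 + rate)


# IEA figures quoted above: about 460 TWh in 2022, about 1,000 TWh in 2026.
rate = implied_annual_growth(460, 1000, 2026 - 2022)
print(f"implied growth: {rate:.1%} per year")
print(f"doubling time at that rate: {doubling_time_years(rate):.1f} years")
```

Under that assumption, demand grows roughly 21 percent a year and doubles in about three and a half years. Building a utility-scale power plant takes longer than that.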

Data centers face energy supply challenges

New data centers can be built in a matter of months, but it takes years to build utility-scale power projects, says Poorvi Patel, manager of strategic insights at the Electric Power Research Institute and a contributor to the report. The potential for unsustainable growth in electricity needs has put grid operators on alert, and in some cases has sent them sounding the alarm. EirGrid, a state-owned transmission operator in Ireland, last week warned of a “mass exodus” of data centers from Ireland if it can’t connect new sources of energy.

There’s only so much existing nuclear power to go around, and enormous logistical and regulatory roadblocks to building more. So data center operators and tech giants are looking for creative solutions. Some are considering small modular reactors (SMRs), which are advanced nuclear reactors with much smaller operating capacities than conventional reactors. Nano Nuclear Energy, which is developing microreactors (a particularly small type of SMR), last month announced an agreement with Blockfusion to explore the possibility of powering a currently defunct cryptomining facility in Niagara Falls, New York.

“To me, it does seem like a space where, if big tech has voracious electric power needs and they really want that 24/7, carbon-free power, nuclear does seem to be the answer,” Clark says. “They also have the balance sheets to be able to do some of the risk mitigation that might make it attractive to get an SMR up and running.”

-

Photonic Chip Cuts Cost of Hunting Exoplanets

by Rachel Berkowitz on 12. August 2024. at 13:01

At 6.5 meters in diameter, the James Webb Space Telescope’s primary mirror captures more light than any telescope that’s ever been launched from Earth. But not every astronomer has US $10 billion to spend on a space telescope. So to help bring the cost of space-based astronomy down, researchers at the National Research Council of Canada in Ottawa are working on a way to process starlight on a tiny optical chip. Ross Cheriton, a photonics researcher there, and his students built and tested a CubeSat prototype with a new kind of photonic chip. The goal is to lower the barrier to entry for astronomical science using swarms of lower-cost spacecraft.

“We hope to enable smaller space telescopes to do big science using highly compact instrument-on-chips,” says Cheriton, who is also affiliated with the Quantum and Nanotechnology Research Centre in Ottawa.

Photonic integrated circuits (PICs) use light instead of electricity to process information, and they’re in wide use slinging trillions and trillions of bits around data centers. But only recently have astronomers begun to examine how to use them to push the boundaries of what can be learned about the universe.

Ground-based telescopes are plagued by Earth’s atmosphere, where turbulence blurs incoming light, making it difficult to focus it onto a camera chip. In outer space, telescopes can peer at extremely faint objects in non-visible wavelengths without correcting for the impact of turbulence. That’s where Cheriton aims to boldly go with a PIC filter that detects very subtle gas signatures during an exoplanet “eclipse” called a transit.

The main motivation for putting photonic chips in space is to reduce the size, weight, and cost of components, because the chips can be produced en masse in a semiconductor foundry. “The dream is a purely fiber and chip-based instrument with no other optics,” says Cheriton. Replacing filters, lenses, and mirrors with a chip also improves stability and scalability compared with ordinary optical parts.

CubeSats—inexpensive, small, and standardized satellites—have proved to be a cost-effective way of deploying small instrument payloads. “The compact nature of PICs is a perfect match for CubeSats to study bright exoplanet systems James Webb doesn’t have time to stare at,” says Cheriton.

For a total mission cost of less than $1 million, compared with Webb’s $10 billion, an eventual CubeSat mission could stare at a star for days to weeks while it waits for a planet to pass in front of it. Then it would look for slight changes in the star’s spectrum caused by the planet’s atmosphere absorbing some of the starlight. Those changes could include telltale evidence of gases of biological origin.
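Why are the spectral changes "slight"? To first order, a planet crossing its star blocks a fraction of the starlight equal to the ratio of their disk areas, (R_planet / R_star)^2. The rough sketch below uses approximate mean radii, ignores limb darkening, and treats the planet as an opaque disk. It is an illustration, not part of the mission design.

```python
# Approximate mean radii in kilometers
R_SUN_KM = 695_700
R_EARTH_KM = 6_371
R_JUPITER_KM = 69_911


def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Fraction of starlight blocked by an opaque planet disk (no limb darkening)."""
    return (planet_radius_km / star_radius_km) ** 2


for name, radius in [("Earth", R_EARTH_KM), ("Jupiter", R_JUPITER_KM)]:
    depth = transit_depth(radius, R_SUN_KM)
    print(f"{name}-size planet, Sun-like star: {depth * 1e6:,.0f} ppm dimming")
```

An Earth-size planet dims a Sun-like star by less than a hundredth of a percent. The atmospheric absorption the CubeSat would look for is only a small part of that dip, so such measurements demand a stable and sensitive instrument.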

Smaller spectroscopy

As a proof of concept, Cheriton guided a team of undergraduate students who spent eight months designing and integrating a PIC into a custom 3U CubeSat (10 centimeters × 10 cm × 30 cm) platform. Their silicon nitride photonic circuit sensor proved capable of detecting the absorption signatures of CO2 in incoming light.

In their design, light entering the CubeSat’s collimating lens gets focused into a fiber and then pushed to the photonic chip. It enters an etched set of waveguides that includes a ring resonator. Here, light having a specific set of wavelengths builds in intensity over multiple trips around the ring, and is then output to a detector. Because only a select few wavelengths constructively interfere—those chosen to match a gas’s absorption spectrum—the ring serves as a comb-like filter. After the light goes through the ring resonator, the signal from the waveguide gets passed to an output fiber and onto a camera connected to a Raspberry Pi computer for processing. A single pixel’s intensity therefore serves as a reading for a gas’s presence.
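The comb-like behavior follows from the ring's resonance condition: light builds up only at wavelengths for which a whole number of wavelengths fits into one optical round trip. The sketch below lists those resonances for a hypothetical silicon nitride ring. The effective index (1.8), radius (100 μm), and wavelength window are illustrative assumptions, not the team's design values, and dispersion is ignored.

```python
import math


def ring_resonances(n_eff: float, radius_um: float, lo_nm: float, hi_nm: float) -> list[float]:
    """Resonant wavelengths (nm) of a ring within [lo_nm, hi_nm], ignoring dispersion.

    Light builds up when one round trip holds a whole number m of wavelengths:
        m * wavelength = n_eff * 2 * pi * radius
    """
    optical_path_nm = n_eff * 2 * math.pi * radius_um * 1_000
    m_min = math.ceil(optical_path_nm / hi_nm)   # lowest order -> longest wavelength
    m_max = math.floor(optical_path_nm / lo_nm)  # highest order -> shortest wavelength
    # Walk the orders from high to low so wavelengths come out in increasing order
    return [optical_path_nm / m for m in range(m_max, m_min - 1, -1)]


# Hypothetical silicon nitride ring: effective index 1.8, radius 100 micrometers
teeth = ring_resonances(n_eff=1.8, radius_um=100, lo_nm=1570, hi_nm=1580)
spacing = [b - a for a, b in zip(teeth, teeth[1:])]
print("comb teeth (nm):", [round(w, 2) for w in teeth])
print("tooth spacing (nm):", [round(s, 2) for s in spacing])
# Free-spectral-range estimate without dispersion: wavelength**2 / optical path length
print("FSR estimate at 1575 nm:", round(1575**2 / (1.8 * 2 * math.pi * 100_000), 2))
```

A different ring size or effective index shifts the teeth and changes their spacing. In a real design, the teeth would be placed to line up with a target gas's absorption lines.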

Light travels through a waveguide on a photonic integrated circuit. (Teseract)

Because it’s built on a chip, the sensor could be multiplexed to observe several objects or to sense different gases simultaneously. Additionally, because all the light falls on a single pixel, the signal is more sensitive than that of a traditional spectrometer, says Cheriton. Moreover, instead of hunting for peaks in a full spectrum, the technology looks at how well the absorption spectrum matches that of a specific gas, a more efficient process. “If something is in space, you don’t want to send gigabytes of data home if you don’t have to,” he says.
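The "does this spectrum match this gas?" idea can be illustrated as a matched filter. Multiply the incoming spectrum by the comb's transmission and sum the result, which is effectively what a single pixel does. This toy model uses made-up line positions for two hypothetical gases, Lorentzian line shapes, and an idealized filter. It sketches the principle only and is not the team's processing pipeline.

```python
def lorentzian(w: float, center: float, half_width: float) -> float:
    """Unit-height Lorentzian line shape centered on `center` (all values in nm)."""
    return 1.0 / (1.0 + ((w - center) / half_width) ** 2)


def comb_filter(wavelengths, centers, half_width):
    """Idealized ring-resonator transmission: a narrow passband at each comb tooth."""
    return [max(lorentzian(w, c, half_width) for c in centers) for w in wavelengths]


def starlight(wavelengths, line_centers, depth, half_width):
    """Flat continuum with absorption lines of fractional `depth` at `line_centers`."""
    spectrum = []
    for w in wavelengths:
        absorption = max((lorentzian(w, c, half_width) for c in line_centers), default=0.0)
        spectrum.append(1.0 - depth * absorption)
    return spectrum


def pixel_reading(spectrum, transmission):
    """Total light reaching a single detector pixel after the filter."""
    return sum(s * t for s, t in zip(spectrum, transmission))


# Hypothetical line positions (nm): gas A sits on the comb teeth, gas B falls between them
GAS_A = [1571.0, 1573.2, 1575.4, 1577.6]
GAS_B = [1572.1, 1574.3, 1576.5, 1578.7]
HALF_WIDTH_NM = 0.02
DEPTH = 0.01  # 1 percent absorption at line center

grid = [1570 + i * 0.001 for i in range(10_001)]
comb = comb_filter(grid, GAS_A, HALF_WIDTH_NM)
baseline = pixel_reading(starlight(grid, [], DEPTH, HALF_WIDTH_NM), comb)

for name, lines in [("gas A (matched)", GAS_A), ("gas B (mismatched)", GAS_B)]:
    reading = pixel_reading(starlight(grid, lines, DEPTH, HALF_WIDTH_NM), comb)
    print(f"{name}: pixel signal drops by {1 - reading / baseline:.4%}")
```

Only the gas whose lines coincide with the comb teeth noticeably dims the pixel. A single number therefore stands in for a full spectrum, which is the data-volume saving Cheriton describes.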

Space travel is still a long way off for the astrophotonic CubeSat. The current design does not use space-qualified components. But Cheriton’s students tested it in the lab for red light (635 nm) and CO2 in a gas cell. They used a “ground station” computer to transmit all commands and receive all results—and to monitor the photovoltaics and collect data from the flight control sensors onboard their CubeSat.

Next, the team plans to test whether their sensor can detect oxygen with the silicon nitride chip, a material chosen for its transparency at 760 nm, the wavelength of one of oxygen’s absorption bands. Success would leave them well positioned to meet what Cheriton calls the next huge milestone for astronomers: looking for an Earth-like planet with oxygen.

The work was presented at the Optica (formerly Optical Society of America) Advanced Photonics conference in July.

-

Hybrid Bonding Plays Starring Role in 3D Chips

by Samuel K. Moore on 11. August 2024. at 13:00

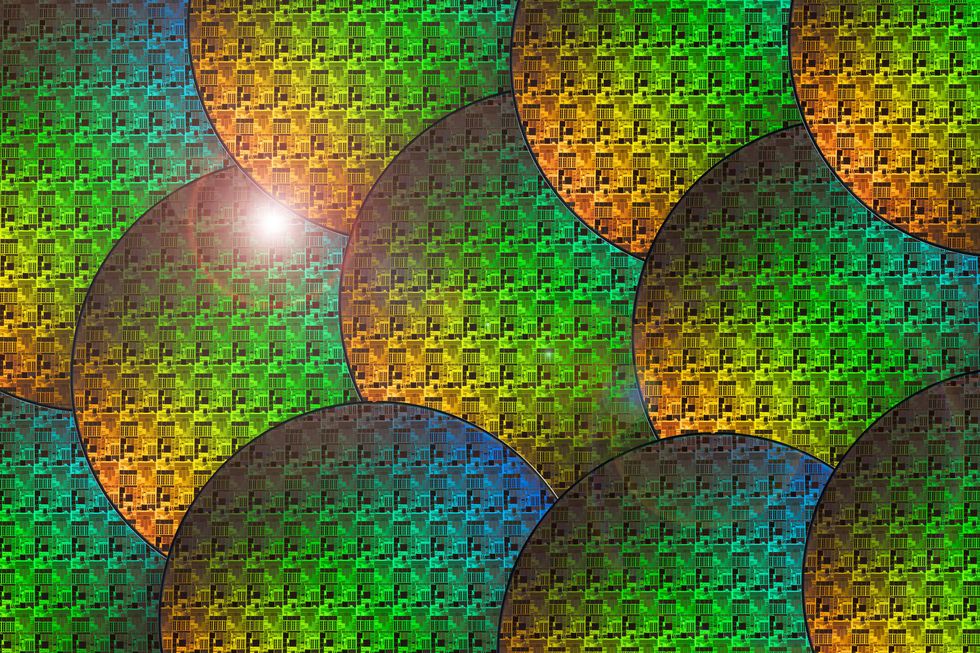

Chipmakers continue to claw for every spare nanometer to keep scaling down circuits, but a technology involving things that are much bigger, hundreds or thousands of nanometers across, could be just as significant over the next five years.

Called hybrid bonding, that technology stacks two or more chips atop one another in the same package. That allows chipmakers to increase the number of transistors in their processors and memories despite a general slowdown in the shrinking of transistors, which once drove Moore’s Law. At the IEEE Electronic Components and Technology Conference (ECTC) this past May in Denver, research groups from around the world unveiled a variety of hard-fought improvements to the technology, with a few showing results that could lead to a record density of connections between 3D stacked chips: some 7 million links per square millimeter of silicon.

All those connections are needed because of the new nature of progress in semiconductors, Intel’s Yi Shi told engineers at ECTC. Moore’s Law is now governed by a concept called system technology co-optimization, or STCO, whereby a chip’s functions, such as cache memory, input/output, and logic, are fabricated separately using the best manufacturing technology for each. Hybrid bonding and other advanced packaging tech can then be used to assemble these subsystems so that they work every bit as well as a single piece of silicon. But that can happen only when there’s a high density of connections that can shuttle bits between the separate pieces of silicon with little delay or energy consumption.

Out of all the advanced-packaging technologies, hybrid bonding provides the highest density of vertical connections. Consequently, it is the fastest growing segment of the advanced-packaging industry, says Gabriella Pereira, technology and market analyst at Yole Group. The overall market is set to more than triple to US $38 billion by 2029, according to Yole, which projects that hybrid bonding will make up about half the market by then, although today it’s just a small portion.

In hybrid bonding, copper pads are built on the top face of each chip. The copper is surrounded by insulation, usually silicon oxide, and the pads themselves are slightly recessed from the surface of the insulation. After the oxide is chemically modified, the two chips are pressed together face-to-face, so that the recessed pads on each align. This sandwich is then slowly heated, causing the copper to expand across the gap and fuse, connecting the two chips.

Making Hybrid Bonding Better

- Hybrid bonding starts with two wafers or a chip and a wafer facing each other. The mating surfaces are covered in oxide insulation and slightly recessed copper pads connected to the chips’ interconnect layers.

- The wafers are pressed together to form an initial bond between the oxides.

- The stacked wafers are then heated slowly, strongly linking the oxides and expanding the copper to form an electrical connection.

- To form more secure bonds, engineers are flattening the last few nanometers of oxide. Even slight bulges or warping can break dense connections.

- The copper must be recessed from the surface of the oxide just the right amount. Too much and it will fail to form a connection. Too little and it will push the wafers apart. Researchers are working on ways to control the level of copper down to single atomic layers.

- The initial links between the wafers are weak hydrogen bonds. After annealing, the links are strong covalent bonds. Researchers expect that using different types of surfaces, such as silicon carbonitride, which has more locations to form chemical bonds, will lead to stronger links between the wafers.

- The final step in hybrid bonding can take hours and require high temperatures. Researchers hope to lower the temperature and shorten the process time.

- Although the copper from both wafers presses together to form an electrical connection, the metal’s grain boundaries generally do not cross from one side to the other. Researchers are trying to cause large single grains of copper to form across the boundary to improve conductance and stability.

Hybrid bonding can either attach individual chips of one size to a wafer full of chips of a larger size or bond two full wafers of chips of the same size. Thanks in part to its use in camera chips, the latter process is more mature than the former, Pereira says. For example, engineers at the European microelectronics-research institute Imec have created some of the densest wafer-on-wafer bonds ever, with a bond-to-bond distance (or pitch) of just 400 nanometers. But Imec managed only a 2-micrometer pitch for chip-on-wafer bonding.

The latter is a huge improvement over the advanced 3D chips in production today, which have connections about 9 μm apart. And it’s an even bigger leap over the predecessor technology: “microbumps” of solder, which have pitches in the tens of micrometers.
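Pitch and connection density are linked by simple geometry: on a square grid, density scales as one over the pitch squared. The quick sketch below assumes square grids. It also takes 40 μm as an illustrative microbump pitch, because the article says only "tens of micrometers."

```python
NM_PER_MM = 1_000_000


def links_per_mm2(pitch_nm: float) -> float:
    """Connections per square millimeter for pads on a square grid with this pitch."""
    pads_per_mm = NM_PER_MM / pitch_nm
    return pads_per_mm ** 2


def pitch_for_density(links_per_square_mm: float) -> float:
    """Square-grid pitch in nanometers that gives this many connections per mm^2."""
    return NM_PER_MM / links_per_square_mm ** 0.5


examples = [
    ("Imec wafer-on-wafer", 400),
    ("Imec chip-on-wafer", 2_000),
    ("3D chips in production", 9_000),
    ("microbumps (assumed 40 um)", 40_000),
]
for label, pitch in examples:
    print(f"{label:28s} {pitch:>7,} nm pitch -> {links_per_mm2(pitch):>12,.0f} links/mm^2")

print(f"7 million links/mm^2 needs a pitch of about {pitch_for_density(7e6):.0f} nm")
```

Because the relationship is an inverse square, going from Imec's 2-μm chip-on-wafer pitch to its 400-nm wafer-on-wafer pitch multiplies the links per square millimeter by 25. The roughly 7 million links per square millimeter reported at ECTC corresponds to a pitch of about 378 nm.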

“With the equipment available, it’s easier to align wafer to wafer than chip to wafer. Most processes for microelectronics are made for [full] wafers,” says Jean-Charles Souriau, scientific leader in integration and packaging at the French research organization CEA Leti. But it’s chip-on-wafer (or die-to-wafer) that’s making a splash in high-end processors such as those from AMD, where the technique is used to assemble compute cores and cache memory in its advanced CPUs and AI accelerators.

In pushing for tighter and tighter pitches for both scenarios, researchers are focused on making surfaces flatter, getting bonded wafers to stick together better, and cutting the time and complexity of the whole process. Getting it right could revolutionize how chips are designed.

WoW, Those Are Some Tight Pitches

The recent wafer-on-wafer (WoW) research that achieved the tightest pitches—from 360 nm to 500 nm—involved a lot of effort on one thing: flatness. To bond two wafers together with 100-nm-level accuracy, the whole wafer has to be nearly perfectly flat. If it’s bowed or warped to the slightest degree, whole sections won’t connect.

Flattening wafers is the job of a process called chemical mechanical planarization, or CMP. It’s essential to chipmaking generally, especially for producing the layers of interconnects above the transistors.

“CMP is a key parameter we have to control for hybrid bonding,” says Souriau. The results presented at ECTC show CMP being taken to another level, not just flattening across the wafer but reducing mere nanometers of roundness on the insulation between the copper pads to ensure better connections.

Other researchers focused on ensuring those flattened parts stick together strongly enough. They did so by experimenting with different surface materials such as silicon carbonitride instead of silicon oxide and by using different schemes to chemically activate the surface. Initially, when wafers or dies are pressed together, they are held in place with relatively weak hydrogen bonds, and the concern is whether everything will stay in place during further processing steps. After attachment, wafers and chips are then heated slowly, in a process called annealing, to form stronger chemical bonds. Just how strong these bonds are—and even how to figure that out—was the subject of much of the research presented at ECTC.

Part of that final bond strength comes from the copper connections. The annealing step expands the copper across the gap to form a conductive bridge. Controlling the size of that gap is key, explains Samsung’s Seung Ho Hahn. Too little expansion, and the copper won’t fuse. Too much, and the wafers will be pushed apart. It’s a matter of nanometers, and Hahn reported research on a new chemical process that he hopes to use to get it just right by etching away the copper a single atomic layer at a time.
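A back-of-the-envelope estimate shows why the copper recess is "a matter of nanometers." When heated, copper expands far more than the surrounding oxide. A pad therefore grows past the oxide surface by roughly (α_Cu − α_oxide) × thickness × ΔT. This sketch assumes textbook expansion coefficients, free (unconstrained) expansion, and hypothetical pad thicknesses. The 300 °C figure is the typical annealing temperature cited at ECTC.

```python
# Approximate linear thermal-expansion coefficients near room temperature (per kelvin)
ALPHA_CU = 17e-6      # copper
ALPHA_OXIDE = 0.5e-6  # silicon dioxide (fused-silica value)


def protrusion_nm(pad_thickness_nm: float, delta_t_k: float) -> float:
    """First-order growth of a copper pad past the surrounding oxide when heated.

    Assumes free expansion along the pad's thickness and ignores stress,
    sidewall constraint, and how the coefficients change with temperature.
    """
    return (ALPHA_CU - ALPHA_OXIDE) * pad_thickness_nm * delta_t_k


TEMP_RISE_K = 300 - 25  # room temperature to a typical ~300 °C anneal

for thickness_nm in (500, 1_000, 2_000):  # hypothetical pad thicknesses
    growth = protrusion_nm(thickness_nm, TEMP_RISE_K)
    print(f"{thickness_nm:>5} nm pad: about {growth:.1f} nm of copper growth per side")
```

Under these assumptions, a 1-μm-thick pad grows only about 4.5 nm. The recess on each chip therefore has to be controlled at the nanometer scale, which is consistent with the interest in etching copper one atomic layer at a time.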

The quality of the connection counts, too. The metals in chip interconnects are not a single crystal; instead they’re made up of many grains, crystals oriented in different directions. Even after the copper expands, the metal’s grain boundaries often don’t cross from one side to another. Such a crossing should reduce a connection’s electrical resistance and boost its reliability. Researchers at Tohoku University in Japan reported a new metallurgical scheme that could finally generate large, single grains of copper that cross the boundary. “This is a drastic change,” says Takafumi Fukushima, an associate professor at Tohoku. “We are now analyzing what underlies it.”

Other experiments discussed at ECTC focused on streamlining the bonding process. Several sought to reduce the annealing temperature needed to form bonds (typically around 300 °C) so as to minimize any risk of damage to the chips from prolonged heating. Researchers from Applied Materials presented progress on a method to radically reduce the time needed for annealing, from hours to just 5 minutes.

CoWs That Are Outstanding in the Field

Imec used plasma etching to dice up chips and give them chamfered corners. The technique relieves mechanical stress that could interfere with bonding. (Imec)

Chip-on-wafer (CoW) hybrid bonding is more useful to makers of advanced CPUs and GPUs at the moment: It allows chipmakers to stack chiplets of different sizes and to test each chip before it’s bonded to another, ensuring that they aren’t dooming an expensive CPU with a single flawed part.

But CoW comes with all of the difficulties of WoW and fewer of the options to alleviate them. For example, CMP is designed to flatten wafers, not individual dies. Once dies have been cut from their source wafer and tested, there’s less that can be done to improve their readiness for bonding.

Nevertheless, researchers at Intel reported CoW hybrid bonds with a 3-μm pitch, and, as mentioned, a team at Imec managed 2 μm, largely by making the transferred dies very flat while they were still attached to the wafer and keeping them extra clean throughout the process. Both groups used plasma etching to dice up the dies instead of the usual method, which uses a specialized blade. Unlike a blade, plasma etching doesn’t lead to chipping at the edges, which creates debris that could interfere with connections. It also allowed the Imec group to shape the die, making chamfered corners that relieve mechanical stress that could break connections.

CoW hybrid bonding is going to be critical to the future of high-bandwidth memory (HBM), according to several researchers at ECTC. HBM is a stack of DRAM dies, currently 8 to 12 dies high, atop a control-logic chip. Often placed within the same package as high-end GPUs, HBM is crucial to handling the tsunami of data needed to run large language models such as those behind ChatGPT. Today, HBM dies are stacked using microbump technology, so there are tiny balls of solder surrounded by an organic filler between each layer.

But with AI pushing memory demand even higher, DRAM makers want to stack 20 layers or more in HBM chips. The volume that microbumps take up means that these stacks will soon be too tall to fit properly in the package with GPUs. Hybrid bonding would shrink the height of HBMs and also make it easier to remove excess heat from the package, because there would be less thermal resistance between its layers.
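The height problem is simple addition: every layer adds a die plus whatever sits between dies. The sketch below uses hypothetical numbers chosen only to illustrate the trend: 30-μm dies, a 15-μm microbump layer, a negligible hybrid-bond interface, and a 720-μm height budget. None of these values is taken from any HBM specification.

```python
def stack_height_um(dram_layers: int, die_um: float, gap_um: float) -> float:
    """Height of a DRAM stack sitting on one base logic die.

    Counts dram_layers + 1 dies (the extra one is the logic die) plus one
    bonding layer between each pair of neighboring dies.
    """
    return (dram_layers + 1) * die_um + dram_layers * gap_um


def verdict(height_um: float, budget_um: float) -> str:
    """Label a stack height against the package height budget."""
    return "fits" if height_um <= budget_um else "too tall"


# Hypothetical numbers for illustration only, not vendor specifications
DIE_UM = 30             # thinned die thickness
MICROBUMP_GAP_UM = 15   # solder bump plus filler between dies
HYBRID_GAP_UM = 0       # hybrid bonds join dies directly, copper to copper
HEIGHT_BUDGET_UM = 720  # package height the stack must fit under

for layers in (12, 16, 20):
    bumps = stack_height_um(layers, DIE_UM, MICROBUMP_GAP_UM)
    hybrid = stack_height_um(layers, DIE_UM, HYBRID_GAP_UM)
    print(
        f"{layers}-high: microbumps {bumps} um ({verdict(bumps, HEIGHT_BUDGET_UM)}), "
        f"hybrid {hybrid} um ({verdict(hybrid, HEIGHT_BUDGET_UM)})"
    )
```

With these assumed values, the microbump stack outgrows the budget by 16 layers, while the hybrid-bonded stack still fits at 20.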

At ECTC, Samsung engineers showed that hybrid bonding could yield a 16-layer HBM stack. “I think it’s possible to make a more-than-20-layer stack using this technology,” says Hyeonmin Lee, a senior engineer at Samsung. Other new CoW technology could also help bring hybrid bonding to high-bandwidth memory. Researchers at CEA Leti are exploring what’s known as self-alignment technology, says Souriau. That would help ensure good CoW connections using just chemical processes. Some parts of each surface would be made hydrophobic and some hydrophilic, resulting in surfaces that would slide into place automatically.

At ECTC, researchers from Tohoku University and Yamaha Robotics reported work on a similar scheme, using the surface tension of water to align 5-μm pads on experimental DRAM chips with better than 50-nm accuracy.

The Bounds of Hybrid Bonding

Researchers will almost certainly keep reducing the pitch of hybrid-bonding connections. A 200-nm WoW pitch is not just possible but desirable, Han-Jong Chia, a project manager for pathfinding systems at Taiwan Semiconductor Manufacturing Co., told engineers at ECTC. Within two years, TSMC plans to introduce a technology called backside power delivery. (Intel plans the same for the end of this year.) That’s a technology that puts the chip’s chunky power-delivery interconnects below the surface of the silicon instead of above it. With those power conduits out of the way, the uppermost levels can connect better to smaller hybrid-bonding bond pads, TSMC researchers calculate. Backside power delivery with 200-nm bond pads would cut down the capacitance of 3D connections so much that a measure of energy efficiency and signal speed would be as much as eight times better than what can be achieved with 400-nm bond pads.

Chip-on-wafer hybrid bonding is more useful than wafer-on-wafer bonding, in that it can place dies of one size onto a wafer of larger dies. However, the density of connections that can be achieved is lower than for wafer-on-wafer bonding. (Imec)

At some point in the future, if bond pitches narrow even further, Chia suggests, it might become practical to “fold” blocks of circuitry so they are built across two wafers. That way some of what are now long connections within the block might be able to take a vertical shortcut, potentially speeding computations and lowering power consumption.

And hybrid bonding may not be limited to silicon. “Today there is a lot of development in silicon-to-silicon wafers, but we are also looking to do hybrid bonding between gallium nitride and silicon wafers and glass wafers…everything on everything,” says CEA Leti’s Souriau. His organization even presented research on hybrid bonding for quantum-computing chips, which involves aligning and bonding superconducting niobium instead of copper.

“It’s difficult to say what the limit will be,” Souriau says. “Things are moving very fast.”

This article was updated on 11 August 2024.

This article appears in the September 2024 print issue.

-

Trailblazing Tech Leader Helps Shape U.S. AI Strategy

by Joanna Goodrich on 10. August 2024. at 13:00

In the two years since Arati Prabhakar was appointed director of the White House Office of Science and Technology Policy, she has set the United States on a course toward regulating artificial intelligence. The IEEE Fellow advised U.S. President Joe Biden on writing the executive order he issued to accomplish that goal about a year after she began her new role in 2022.

Prabhakar is the first woman and the first person of color to serve as OSTP director, and she has broken through the glass ceiling at other agencies as well. She was the first woman to lead the National Institute of Standards and Technology (NIST) and the Defense Advanced Research Projects Agency (DARPA).

Arati Prabhakar

Employer: U.S. government

Title: Director of the White House Office of Science and Technology Policy

Member grade: Fellow

Alma maters: Texas Tech University; Caltech

Working in the public sector wasn’t initially on her radar. Not until she became a DARPA program manager in 1986, she says, did she really understand what she could accomplish as a government official.

“What I have come to love about [public service] is the opportunity to shape policies at a scale that is really unparalleled,” she says.

Prabhakar’s passion for tackling societal challenges by developing technology also led her to take leadership positions at companies including Raychem (now part of TE Connectivity), Interval Research Corp., and U.S. Venture Partners. In 2019 she helped found Actuate, a nonprofit in Palo Alto, Calif., that seeks to create technology to help address climate change, data privacy, health care access, and other pressing issues.

“I really treasure having seen science, technology, and innovation from all different perspectives,” she says. “But the part I have loved most is public service because of the impact and reach that it can have.”

Discovering her passion for electrical engineering

Prabhakar, who was born in India and raised in Texas, says she decided to pursue a STEM career because when she was growing up, her classmates said women weren’t supposed to work in science, technology, engineering, or mathematics.

“Them saying that just made me want to pursue it more,” she says. Her parents, who had wanted her to become a doctor, supported her pursuit of engineering, she adds.

After earning a bachelor’s degree in electrical engineering in 1979 from Texas Tech University, in Lubbock, she moved to California to continue her education at Caltech. She graduated with a master’s degree in EE in 1980, then earned a doctorate in applied physics in 1984. Her doctoral thesis focused on understanding deep-level defects and impurities in semiconductors that affect device performance.

After acquiring her Ph.D., she says, she wanted to make a bigger impact with her research than academia would allow, so she applied for a policy fellowship from the American Association for the Advancement of Science to work at the congressional Office of Technology Assessment. The office examined issues involving new or expanding technologies, assessed their impact, and studied whether new policies were warranted.

“I wanted to share my research in semiconductor manufacturing processes with others,” Prabhakar says. “That’s what felt exciting and valuable to me.”

She was accepted into the program and moved to Washington, D.C. During the yearlong fellowship, she conducted a study on microelectronics R&D for the research and technology subcommittee of the U.S. House of Representatives committee on science, space, and technology. The subcommittee oversees STEM-related matters including education, policy, and standards.

While there, she worked with people who were passionate about public service and government, but she didn’t feel the same, she says, until she joined DARPA. As program manager, Prabhakar established and led several projects, including a microelectronics office that invests in developing new technologies in areas such as lithography, optoelectronics, infrared imaging, and neural networks.

In 1993 an opportunity arose that she couldn’t refuse, she says: President Bill Clinton nominated her to direct the National Institute of Standards and Technology. NIST develops technical guidelines and conducts research to create tools that improve citizens’ quality of life. At age 34, she became the first woman to lead the agency.

Believing in IEEE’s mission

Like many IEEE members, Prabhakar says, she joined IEEE as a student member while attending Texas Tech University because the organization’s mission aligned with her belief that engineering is about creating value in the world.

She continues to renew her membership, she says, because IEEE emphasizes that technology should benefit humanity.

“It really comes back to this idea of the purpose of engineering and the role that it plays in the world,” she says.

After leading NIST through the first Clinton administration, she moved to the private sector, with stints as CTO at appliance-component maker Raychem in Menlo Park, Calif., and as president of private R&D lab Interval Research of Palo Alto, Calif. In all, she spent the next 14 years in the private sector, mostly as a partner at U.S. Venture Partners, in Menlo Park, where she invested in semiconductor and clean-tech startups.

In 2012 she returned to DARPA and became its first female director.

“When I received the call offering me the job, I stopped breathing,” Prabhakar says. “It was a once-in-a-lifetime opportunity to make a difference at an agency that I had loved earlier in my career. And it proved to be just as meaningful an experience as I had hoped.”

For the next five years she led the agency, focusing on developing better military systems and the next generation of artificial intelligence, as well as creating solutions in social science, synthetic biology, and neurotechnology.

Under her leadership, in 2014 DARPA established the Biological Technologies Office to oversee basic and applied research in areas including gene editing, neurosciences, and synthetic biology. The office launched the Pandemic Prevention Platform, which helped fund the development of the mRNA technology that is used in the Moderna and Pfizer coronavirus vaccines.

She left the agency in 2017 to move back to California with her family.

“When I left the organization, what was very much on my mind was that the United States has the most powerful innovation engine the world has ever seen,” Prabhakar says. “At the same time, what kept tugging at me was that we have huge aspirations for the future—such as mitigating climate change—that science and technology have to be part of achieving.”

That’s why, in 2019, she helped found Actuate. She served as the nonprofit’s chief executive until 2022, when she took on the role of OSTP director.

Although she didn’t choose her career path because it was her passion, she says, she came to realize that she loves the role that engineering, science, and technology play in the world because of their “power to change how the future unfolds.”

Leading AI regulation worldwide

When Biden asked if Prabhakar would take the OSTP job, she didn’t think twice, she says. “When do you need me to move in?” she says she told him.

“I was so excited to work for the president because he sees science and technology as a necessary part of creating a bright future for the country,” Prabhakar says.

A month after she took office, the generative AI program ChatGPT launched and became a hot topic.

“AI was already being used in different areas, but all of a sudden it became visible to everyone in a way that it really hadn’t been before,” she says.

Regulating AI became a priority for the Biden administration because of the technology’s breadth and power, she says, as well as the rapid pace at which it’s being developed.

Prabhakar led the creation of Biden’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. Signed on 30 October 2023, the order outlines goals such as protecting consumers and their privacy from AI systems, developing watermarking systems for AI-generated content, and warding off intellectual property theft stemming from the use of generative models.

“The executive order is possibly the most important accomplishment in relation to AI,” Prabhakar says. “It’s a tool that mobilizes the [U.S. government’s] executive branch and recognizes that such systems have safety and security risks, but [it] also enables immense opportunity. The order has put the branches of government on a very constructive path toward regulation.”

Meanwhile, the United States spearheaded a U.N. resolution to make regulating AI an international priority. The United Nations adopted the measure this past March. In addition to defining regulations, it seeks to use AI to advance progress on the U.N.’s sustainable development goals.

“There’s much more to be done,” Prabhakar says, “but I’m really happy to see what the president has been able to accomplish, and really proud that I got to help with that.”

-

Video Friday: The Secrets of Shadow Robot’s New Hand

by Evan Ackerman on 09. August 2024. at 15:30

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We also post a weekly calendar of upcoming robotics events for the next few months. Please send us your events for inclusion.

ICRA@40: 23–26 September 2024, ROTTERDAM, NETHERLANDS

IROS 2024: 14–18 October 2024, ABU DHABI, UAE

ICSR 2024: 23–26 October 2024, ODENSE, DENMARK

Cybathlon 2024: 25–27 October 2024, ZURICH

Enjoy today’s videos!

At ICRA 2024, in Tokyo last May, we sat down with the director of Shadow Robot, Rich Walker, to talk about the journey toward developing its newest model. Designed for reinforcement learning, the hand is extremely rugged, has three fingers that act like thumbs, and has fingertips that are highly sensitive to touch.

[ IEEE Spectrum ]

Food Angel is a food delivery robot to help with the problems of food insecurity and homelessness. Utilizing autonomous wheeled robots for this application may seem to be a good approach, especially with a number of successful commercial robotic delivery services. However, besides technical considerations such as range, payload, operation time, autonomy, etc., there are a number of important aspects that still need to be investigated, such as how the general public and the receiving end may feel about using robots for such applications, or human-robot interaction issues such as how to communicate the intent of the robot to the homeless.

[ RoMeLa ]

The UKRI FLF team RoboHike, from the Robot Perception and Learning lab at UCL Computer Science, works with Forestry England to demonstrate how the ANYmal robot can help preserve the cultural heritage of a historic mine in the Forest of Dean, Gloucestershire, UK.

This clip is from a reboot of the British TV show “Time Team.” If you’re not already a fan of “Time Team,” let me just say that it is one of the greatest retro reality TV shows ever made, where actual archaeologists wander around the United Kingdom and dig stuff up. If they can find anything. Which they often can’t. And also it has Tony Robinson (from “Blackadder”), who runs everywhere for some reason. Go to Time Team Classics on YouTube for 70+ archived episodes.

[ UCL RPL ]

UBTECH’s humanoid robot Walker S Lite is working in Zeekr’s intelligent factory, completing handling tasks at the loading workstation for 21 consecutive days and assisting employees with logistics work.

[ UBTECH ]

Current visual navigation systems often treat the environment as static, lacking the ability to adaptively interact with obstacles. This limitation leads to navigation failure when encountering unavoidable obstructions. In response, we introduce IN-Sight, a novel approach to self-supervised path planning, enabling more effective navigation strategies through interaction with obstacles.

[ ETH Zurich paper / IROS 2024 ]

When working on autonomous cars, sometimes it’s best to start small.

[ University of Pennsylvania ]

MIT MechE researchers introduce an approach called SimPLE (Simulation to Pick Localize and placE), a method of precise kitting, or pick and place, in which a robot learns to pick, regrasp, and place objects using the object’s computer-aided design (CAD) model, and all without any prior experience or encounters with the specific objects.

[ MIT ]

Staff and students (and quadruped robots!) from UCL Computer Science wish the Great Britain athletes the best of luck this summer at the Olympic and Paralympic Games.

Walking in tall grass can be hard for robots, because they can’t see the ground that they’re actually stepping on. Here’s a technique to solve that, published in Robotics and Automation Letters last year.

[ ETH Zurich Robotic Systems Lab ]

There is no such thing as excess batter on a corn dog, and there is also no such thing as a defective donut. And apparently, making Kool-Aid drink pouches is harder than it looks.

[ Oxipital AI ]

Unitree has open-sourced its software to teleoperate humanoids in VR for training-data collection.

[ Unitree / GitHub ]

Nothing more satisfying than seeing point-cloud segments wiggle themselves into place, and CSIRO’s Wildcat SLAM does this better than anyone.

[ IEEE Transactions on Robotics ]

A lecture by Mentee Robotics CEO Lior Wolf on Mentee’s AI approach.

[ Mentee Robotics ]

-

Quantum Cryptography Has Everyone Scrambling

by Margo Anderson on 08. August 2024. at 14:00

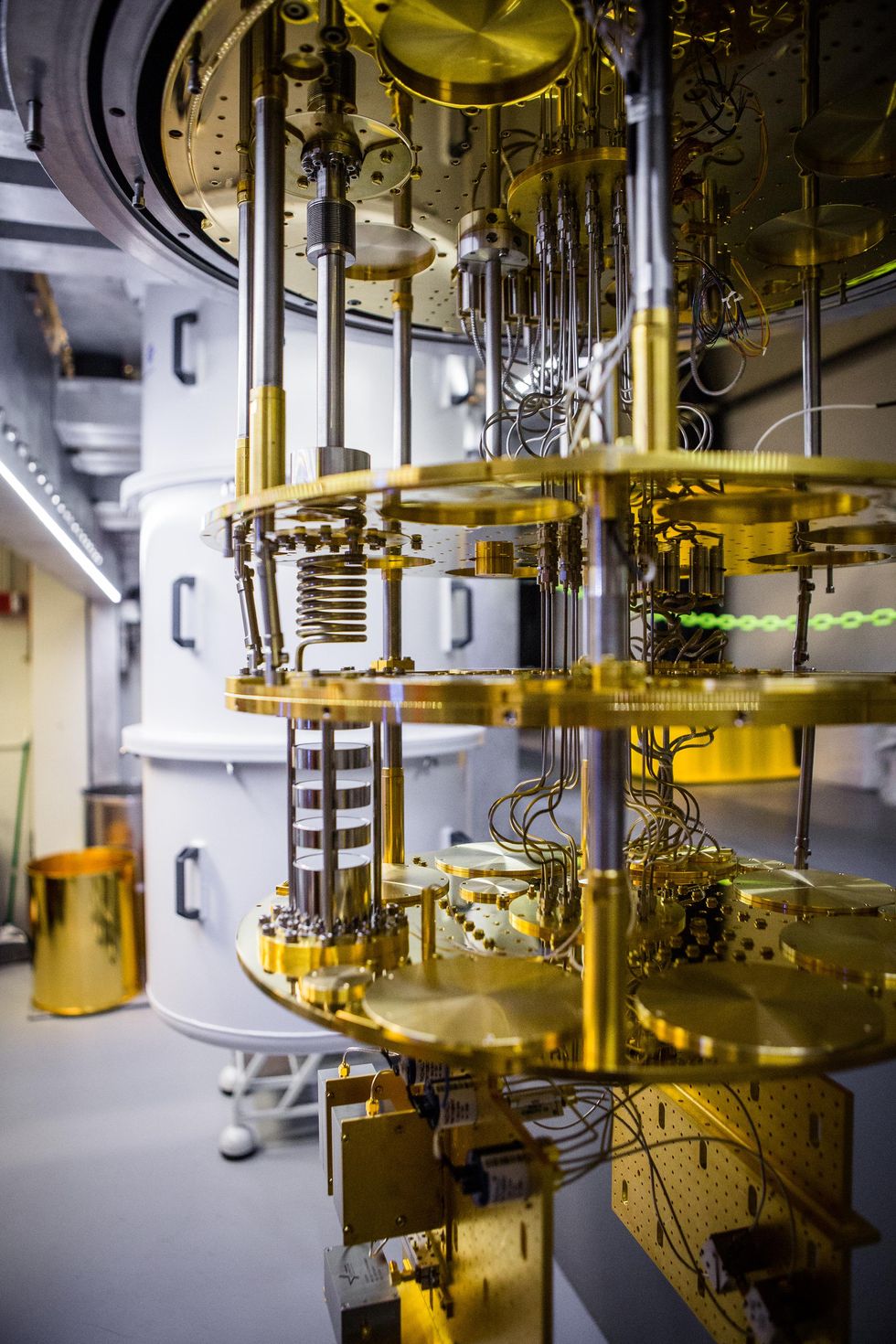

While the technology world awaits NIST’s latest “post-quantum” cryptography standards this summer, a parallel effort is underway to develop cryptosystems grounded in quantum technology: so-called quantum key distribution, or QKD, systems.

India, China, and a range of technology organizations in the European Union and United States are researching and developing QKD and weighing standards for this nascent cryptography alternative. The biggest question of all is whether, and how, QKD fits into a robust, reliable, and fully future-proof cryptography system that will ultimately become the global standard for secure digital communications into the 2030s. As with any emerging technology standard, different players are staking claims on different technologies and implementations, and many of the big players are pursuing divergent options because no technology is a clear winner at the moment.

According to Ciel Qi, a research analyst at the New York-based Rhodium Group, there’s one clear leader in QKD research and development—at least for now. “While China likely holds an advantage in QKD-based cryptography due to its early investment and development, others are catching up,” says Qi.

Two different kinds of “quantum secure” tech

At the center of these varied cryptography efforts is the distinction between QKD and post-quantum cryptography (PQC) systems. QKD is based on quantum physics, which holds that entangled qubits can share information so securely that any effort to uncover it is unavoidably detectable. Sending the two photons of each entangled pair to opposite ends of a network provides the basis for physically secure cryptographic keys that can lock down data packets sent across that network.

Typically, quantum cryptography systems are built around photon sources that chirp out entangled photon pairs—where photon A heading down one length of fiber has a polarization that’s perpendicular to the polarization of photon B heading in the other direction. The recipients of these two photons perform separate measurements that enable both recipients to know that they and only they have the shared information transmitted by these photon pairs. (Otherwise, if a third party had intervened and measured one or both photons first, the delicate photon states would have been irreparably altered before reaching the recipients.)
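The sketch below is a classical toy model, not a quantum simulation, of how anticorrelated photon pairs can yield a shared key and how an eavesdropper gives herself away. It assumes details the article does not describe: each recipient measures in a randomly chosen basis (rectilinear or diagonal), only rounds where the two bases match are kept, and a hypothetical attacker uses a simple intercept-and-resend attack on one photon. Under those assumptions, the attacker corrupts roughly a quarter of the kept bits.

```python
import random

def simulate_pairs(n_pairs, eavesdrop=False, seed=0):
    """Toy classical model of entanglement-based key sifting (not real quantum physics).

    Assumptions: bases are 0 (rectilinear) or 1 (diagonal), chosen at random by each
    side; when both sides use the same basis, outcomes are anticorrelated
    (perpendicular polarizations); otherwise they are uncorrelated.
    """
    rng = random.Random(seed)
    alice_key, bob_key = [], []
    for _ in range(n_pairs):
        a_basis, b_basis = rng.randint(0, 1), rng.randint(0, 1)
        a_bit = rng.randint(0, 1)  # each outcome on its own is a coin flip
        if eavesdrop:
            # Hypothetical intercept-resend attack on Bob's photon.
            e_basis = rng.randint(0, 1)
            e_bit = 1 - a_bit if e_basis == a_basis else rng.randint(0, 1)
            # The attacker re-sends a photon prepared in the attacker's basis and result.
            b_bit = e_bit if b_basis == e_basis else rng.randint(0, 1)
        else:
            b_bit = 1 - a_bit if b_basis == a_basis else rng.randint(0, 1)
        if a_basis == b_basis:         # sifting: keep matching-basis rounds only
            alice_key.append(a_bit)
            bob_key.append(1 - b_bit)  # Bob inverts to undo the anticorrelation
    return alice_key, bob_key

def error_rate(key_a, key_b):
    """Fraction of positions where the two sifted keys disagree."""
    if not key_a:
        return 0.0
    return sum(x != y for x, y in zip(key_a, key_b)) / len(key_a)

if __name__ == "__main__":
    quiet = error_rate(*simulate_pairs(20_000))
    tapped = error_rate(*simulate_pairs(20_000, eavesdrop=True))
    print(f"no eavesdropper: {quiet:.3f}, with eavesdropper: {tapped:.3f}")
```

In this model, the two recipients would publicly compare a random sample of their kept bits: an error rate near zero suggests an untouched line, while a rate near 25 percent signals interference.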

The bit shared by the two people at opposite ends of the line then becomes a 0 or 1 in a budding secret key, which the two recipients build up by sharing more and more entangled photons. Once sender and receiver have built up enough shared secret 0s and 1s, that key can be used for a type of strong cryptography, called a one-time pad, that guarantees that only the intended recipient can read the message.
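A one-time pad itself is simple: each message bit is XORed with one key bit, and the recipient XORs again with the same key bit to recover it. The minimal sketch below works on bytes and uses Python’s secrets module as a stand-in for the key material QKD would supply; that stand-in, and the function name, are assumptions for illustration, not a hardened implementation.

```python
import secrets

def otp_xor(data: bytes, key: bytes) -> bytes:
    """XOR data with a one-time-pad key; the same operation encrypts and decrypts."""
    if len(key) < len(data):
        raise ValueError("one-time-pad key must be at least as long as the message")
    # zip stops at the end of data, so only len(data) key bytes are consumed.
    return bytes(d ^ k for d, k in zip(data, key))

if __name__ == "__main__":
    # Stand-in for shared key bits built up over a QKD link (assumption: a local CSPRNG).
    shared_key = secrets.token_bytes(32)
    message = b"wire transfer approved"
    ciphertext = otp_xor(message, shared_key)
    print(ciphertext.hex())
    print(otp_xor(ciphertext, shared_key))  # b'wire transfer approved'
```

The guarantee holds only if the key is truly random, kept secret, and never reused; reusing pad bytes for a second message leaks the XOR of the two plaintexts. A one-time pad also provides confidentiality only, so detecting tampering would require a separate authentication step.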

By contrast, post-quantum cryptography is based not on quantum physics but on pure math: next-generation cryptographic algorithms designed to run on conventional computers. It is the difficulty of the mathematical problems underlying these algorithms that is meant to make PQC systems practically uncrackable, even by a quantum computer. NIST, the U.S. National Institute of Standards and Technology, is developing gold-standard PQC systems that will undergird tomorrow’s post-quantum networks and communications.

The big problem with the latter approach, says Doug Finke, chief content officer of the New York-based Global Quantum Intelligence, is that PQC is only believed (on very, very good but not infallible evidence) to be uncrackable by a fully grown quantum computer. In other words, PQC cannot necessarily offer the ironclad “quantum security” that’s promised.

“People can’t predict theoretically that these PQC algorithms won’t be broken one day,” Finke says. “On the other hand, QKD—there are theoretical arguments based on quantum physics that you can’t break a QKD network.”

That said, real-world QKD implementations might still be hackable via side-channel, device-based, and other clever attacks. Plus, QKD also requires direct access to a quantum-grade fiber optics network and sensitive quantum communications tech, neither of which is exactly commonplace today. “For day-to-day stuff, for me to send my credit card information to Amazon on my cellphone,” Finke says, “I’m not going to use QKD.”

China’s early QKD lead dwindling

According to Qi, China may have originally picked QKD as a focal point of its quantum technology development in part because the U.S. was not directing its own efforts that way. “[The] strategic focus on QKD may be driven by China’s desire to secure a unique technological advantage, particularly as the U.S. leads in PQC efforts globally,” she says.

In particular, she points to ramped-up efforts to use satellite uplinks and downlinks as the basis for free-space Chinese QKD systems. Citing Pan Jianwei, known as China’s “father of quantum,” Qi says, “To achieve global quantum network coverage, China is currently developing a medium-high orbit quantum satellite, which is expected to be launched around 2026.”

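That said, the limiting factor in all QKD systems to date is their ultimate reliance on a single photon to represent each qubit. Not even the most exquisitely refined lasers and fiber-optic lines can escape the vulnerability of individual photons: single photons are easily lost along the way, and because an unknown quantum state cannot be copied, they cannot simply be amplified the way classical optical signals are.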
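A back-of-the-envelope calculation shows why single photons limit range. The sketch below assumes a fixed attenuation of about 0.2 dB per kilometer, a typical figure for standard telecom fiber near 1,550 nanometers; real links add detector, connector, and splice losses on top of that, and the function name is hypothetical.

```python
def fiber_transmission(length_km: float, loss_db_per_km: float = 0.2) -> float:
    """Fraction of photons that survive a fiber span, given a fixed loss in dB/km."""
    total_loss_db = loss_db_per_km * length_km
    return 10 ** (-total_loss_db / 10)  # convert decibels to a linear power ratio

if __name__ == "__main__":
    for km in (50, 100, 500, 2000):
        print(f"{km:>5} km: {fiber_transmission(km):.1e} of photons arrive")
```

Under this assumption, roughly 1 percent of photons survive 100 km, while an unbroken 2,000-km span would pass about one photon in 10^40, which is why long-haul QKD links are broken into shorter segments.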

QKD repeaters, which would carry a single photon’s quantum state onward without leaking any distinguishing information about the individual photons passing through (meaning the repeater could not be exploited by eavesdroppers), do not exist today. But, Finke says, such tech is achievable, though at least 5 to 10 years away. “It definitely is early days,” he says.

“In China they do have a 2,000-kilometer network,” Finke says. “But it uses this thing called trusted nodes. I think they have over 30 in the Beijing to Shanghai network. So maybe every 100 km, they have this unit which basically measures the signal... and then regenerates it. But the trusted node you have to locate on an army base or someplace like that. If someone breaks in there, they can hack into the communications.”
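Finke’s point about trusted nodes can be made concrete with a sketch of hop-by-hop key relay. The article does not describe how China’s network actually forwards keys; the scheme below is a simplified assumption in which each pair of neighboring nodes shares its own QKD link key, and every intermediate node decrypts and re-encrypts the end-to-end key with one-time-pad XOR. The function names are hypothetical.

```python
import secrets

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length byte strings."""
    if len(a) != len(b):
        raise ValueError("inputs must have equal length")
    return bytes(x ^ y for x, y in zip(a, b))

def relay_key(end_to_end_key: bytes, link_keys: list[bytes]) -> tuple[bytes, list[bytes]]:
    """Relay a key through trusted nodes; link_keys[i] is shared by nodes i and i+1.

    Returns the key as received at the far end, plus what each intermediate node saw.
    """
    seen_by_nodes = []
    ciphertext = xor_bytes(end_to_end_key, link_keys[0])  # sender encrypts for hop 1
    for incoming, outgoing in zip(link_keys, link_keys[1:]):
        plaintext = xor_bytes(ciphertext, incoming)       # trusted node decrypts...
        seen_by_nodes.append(plaintext)                   # ...holds the key in the clear...
        ciphertext = xor_bytes(plaintext, outgoing)       # ...and re-encrypts for the next hop
    received = xor_bytes(ciphertext, link_keys[-1])       # receiver decrypts the last hop
    return received, seen_by_nodes

if __name__ == "__main__":
    key = secrets.token_bytes(16)
    links = [secrets.token_bytes(16) for _ in range(4)]   # 4 links -> 3 trusted nodes
    received, exposed = relay_key(key, links)
    print(received == key, all(k == key for k in exposed))
```

Because each intermediate node briefly holds the end-to-end key in the clear, compromising any one of them compromises the key, which is the physical-security burden Finke describes.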

Meanwhile, India has been playing catch-up, according to Satyam Priyadarshy, a senior advisor to Global Quantum Intelligence. Priyadarshy says India’s National Quantum Mission includes plans for QKD communications research, aiming ultimately for QKD networks connecting cities over 2,000-km distances, as well as similarly long-range satellite communications networks.

Priyadarshy points both to government QKD research efforts, including at the Indian Space Research Organization, and to private-sector R&D, including by the Bengaluru-based cybersecurity firm QuNu Labs. QuNu, for example, has been working on a hub-and-spoke QKD framework named ChaQra, Priyadarshy says. (Spectrum also sent requests for comment to officials at India’s Department of Telecommunications, which were unanswered as of press time.)

In the U.S. and the European Union, similar early-stage efforts are also afoot. Contacted by IEEE Spectrum, officials from the European Telecommunications Standards Institute (ETSI), the International Organization for Standardization (ISO), the International Electrotechnical Commission (IEC), and the IEEE Communications Society confirmed that initiatives and working groups are now promoting QKD technologies and developing the emerging standards now taking shape.

“While ETSI is fortunate to have experts in a broad range of relevant topics, there is a lot to do,” says Martin Ward, senior research scientist based at Toshiba’s Cambridge Research Laboratory in England, and chair of a QKD industry standards group at ETSI.

Multiple sources contacted for this article envisioned a future in which PQC will likely be the default standard for most secure communications in a world of pervasive quantum computing. Yet PQC cannot rule out its own potential Achilles’ heel: increasingly powerful quantum algorithms and machines. This is where, the sources suggest, QKD could offer the prospect of hybrid secure communications that PQC alone could never provide.

“QKD provides [theoretical] information security, while PQC enables scalab[ility],” Priyadarshy says. “A hybrid of QKD and PQC is the most likely solution for a quantum safe network.” But he adds that efforts to investigate hybrid QKD-PQC technologies and standards today are “very limited.”
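The article does not say how a hybrid QKD-PQC system would merge its two secrets. One common pattern in hybrid key-exchange designs is to concatenate both secrets and feed them through a key-derivation function, so that the result stays secret as long as either input does. The sketch below assumes that pattern, implements HKDF (RFC 5869) with Python’s standard hmac and hashlib modules, and treats both inputs as opaque, fixed-length bytes; producing the PQC secret (for example, with a NIST key-encapsulation mechanism) is out of scope, and the combiner name and context label are hypothetical.

```python
import hashlib
import hmac
import secrets

HASH_LEN = 32  # SHA-256 output size in bytes

def hkdf_sha256(ikm: bytes, salt: bytes = b"", info: bytes = b"", length: int = 32) -> bytes:
    """HKDF extract-then-expand (RFC 5869) with HMAC-SHA256."""
    if length > 255 * HASH_LEN:
        raise ValueError("requested length too large for HKDF-SHA256")
    # Extract: an empty salt is replaced by HASH_LEN zero bytes, per RFC 5869.
    prk = hmac.new(salt or b"\x00" * HASH_LEN, ikm, hashlib.sha256).digest()
    # Expand: T(i) = HMAC(PRK, T(i-1) || info || i), concatenated until long enough.
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]

def combine_hybrid_key(qkd_key: bytes, pqc_secret: bytes,
                       context: bytes = b"example hybrid qkd+pqc v1") -> bytes:
    """Hypothetical combiner: derive one session key from both secrets.

    Assumes both inputs have fixed, agreed lengths, so plain concatenation is unambiguous.
    """
    return hkdf_sha256(qkd_key + pqc_secret, info=context)

if __name__ == "__main__":
    session_key = combine_hybrid_key(secrets.token_bytes(32), secrets.token_bytes(32))
    print(len(session_key), session_key.hex())
```

Binding a context label into the derivation keeps keys derived for different purposes separate, even when the same input secrets are reused.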

And, says Finke, QKD could still have the final say, even in a world where PQC remains preeminent. Developing QKD technology, he points out, also happens to provide the basis for a future quantum Internet.

“It’s very important to understand that QKD is actually just one use case for a full quantum network,” Finke says.

“There’s a lot of applications, like distributed quantum computing and quantum data centers and quantum sensor networks,” Finke adds. “So even the research that people are doing now in QKD is still very, very helpful because a lot of that same technology can be leveraged for some of these other use cases.”

-

A Non-Engineer’s Journey to IEEE Leadership

by Kathy Pretz on 07. August 2024. at 18:00

Sharlene Brown often accompanied her husband, IEEE Senior Member Damith Wickramanayake, to organization meetings. He has held leadership positions in the IEEE Jamaica Section, in IEEE Region 3, and on the IEEE Member and Geographic Activities board. Both are from Jamaica.

She either waited outside the conference room or helped with tasks such as serving refreshments. Even though her husband encouraged her to sit in on the meetings, she says, she felt uncomfortable doing so because she wasn’t an engineer. Brown is an accountant and human resources professional. Her husband is a computer science professor at the University of Technology, Jamaica, in Kingston. He is currently Region 3’s education activities coordinator and a member of the section’s education and outreach committee for the IEEE Educational Activities Board.

Sharlene Brown

Employer: Maritime Authority of Jamaica, in Kingston

Title: Assistant accountant

Member grade: Senior member

Alma mater: University of Technology, Jamaica, in Kingston; Tsinghua University, in Beijing