IEEE Spectrum

-

NASA Made the Hubble Telescope to Be Remade

by Ned Potter on 02. October 2024. at 13:00

When NASA decided in the 1970s that the Hubble Space Telescope should be serviceable in space, the engineering challenges must have seemed nearly insurmountable. How could a machine that complex and delicate be repaired by astronauts wearing 130-kilogram suits with thick gloves?

In the end, spacewalkers not only fixed the telescope, they regularly remade it.

That was possible because engineers designed Hubble to be modular, its major systems laid out in wedge-shaped equipment bays that astronauts could open from the outside. A series of maintenance workstations on the telescope’s outer surface ensured astronauts could have ready access to crucial telescope parts.

On five space-shuttle servicing missions between 1993 and 2009, 16 spacewalkers replaced every major component except the telescope’s mirrors and outer skin. They increased its electric supply by 20 percent. And they tripled its ability to concentrate and sense light, job one of any telescope.

The now legendary orbital observatory was built to last 15 years in space. But with updates, it has operated for more than 30—a history of invention and re-invention to make any engineering team proud. “Twice the lifetime,” says astronaut Kathryn Sullivan, who flew on Hubble’s 1990 launch mission. “Just try finding something else that has improved with age in space. I dare you.”

-

Partial Automation Doesn't Make Vehicles Safer

by Willie D. Jones on 02. October 2024. at 11:00

Early on the morning of 3 September, a multi-car accident occurred on Interstate 95 in Pennsylvania, raising alarms about the dangers of relying too heavily on advanced driver assistance systems (ADAS). Two men were killed when a Ford Mustang Mach-E electric vehicle, traveling at 114 kilometers per hour (71 mph), crashed into a car that had pulled over to the highway’s left shoulder. According to Pennsylvania State Police, the driver of the Mustang mistakenly believed that the car’s BlueCruise hands-free driving feature and adaptive cruise control could take full responsibility for driving.

The crash is part of a worrying trend involving drivers who overestimate the capabilities of partial automation systems. Ford’s BlueCruise system, while advanced, provides only level 2 vehicle autonomy. This means it can assist with steering, lane-keeping, and speed control on prequalified highways, but the driver must remain alert and ready to take over at any moment.

State police and federal investigators discovered that the driver of the Mustang involved in the deadly I-95 incident was both intoxicated and texting at the time of the crash, factors that likely contributed to their failure to regain control of the vehicle when necessary. The driver has been charged with vehicular homicide, involuntary manslaughter, and several other offenses.

Are Self-Driving Cars Safer?

This incident is the latest in a series of crashes involving Mustang Mach-E vehicles equipped with level 2 partial automation. Similar accidents were reported earlier this year in Texas and Philadelphia, all occurring at night on highways and resulting in fatalities. In response, the National Highway Traffic Safety Administration (NHTSA) launched an investigation into the crashes and the role ADAS systems may have played in them.

This is not a niche issue. Consulting and analysis firms including Munich-based Roland Berger predict that by 2025, more than one-third of new cars rolling off the world’s assembly lines will be equipped with at least level 2 autonomy. According to a Roland Berger survey of auto manufacturers, only 14 percent of vehicles produced next year will have no ADAS features at all.

“Unfortunately, there isn’t good data on the proportion of fatal crashes involving vehicles equipped with these partial automation systems,” says David Kidd, a researcher at the Arlington, Va.–based Insurance Institute for Highway Safety (IIHS). The nonprofit agency conducts vehicle safety testing and research, including evaluating vehicle crashworthiness.

IIHS evaluates whether ADAS provides a safety benefit by combining information about the equipment each vehicle comes with and data maintained by the Highway Loss Data Institute and police crash reports. But that record keeping, says Kidd, doesn’t yield hard data on the proportion of vehicles with systems such as BlueCruise or Tesla’s Autopilot that are involved in fatal crashes. Still, he notes, looking at information about the incidence of crashes involving vehicles that have level 2 driver assistance systems and the rate at which crashes happen with those not so equipped, “there is no significant difference.”

Asked about the fact that these three Mach-E crashes happened at night, Kidd points out that it’s not just a coincidence. Nighttime presents a very difficult set of conditions for these systems. “All the vehicles [with partial automation] we tested do an excellent job [of picking up the visual cues they need to avoid collisions] during the day, but after dark, they struggle.”

Automated Systems Make Riskier Drivers

IIHS released a report in July underscoring the danger of misusing ADAS. The study found that partial automation features like Ford’s BlueCruise are best understood as convenience features rather than safety technologies. According to IIHS President David Harkey, “Everything we’re seeing tells us that partial automation is a convenience feature like power windows or heated seats rather than a safety technology.”

“Other technologies,” says Kidd, “like automatic emergency braking, lane departure warning, and blind-spot monitoring, which are designed to warn of an imminent crash, are effective at preventing crashes. We look at the partial automation technologies and these collision warning technologies differently because they have very different safety implications.”

The July IIHS study also highlighted a phenomenon known as risk compensation, where drivers using automated systems tend to engage in riskier behaviors, such as texting or driving under the influence, believing that the technology will save them from accidents. A similar issue arose with the widespread introduction of anti-lock braking systems in the 1980s, when drivers falsely assumed they could brake later or safely come to a stop from higher speeds, often with disastrous results.

What’s Next for ADAS?

While automakers like Ford say that ADAS is not designed to take the driver out of the loop, incidents like the Pennsylvania and Texas crashes underscore the need for better education and possibly stricter regulations around the use of these technologies. Until full vehicle autonomy is realized, drivers must remain vigilant, even when using advanced assistance features.

As partial automation systems become more common, experts warn that robust safeguards are needed to prevent their misuse. The IIHS study concluded that “Designing partial driving automation with robust safeguards to deter misuse will be crucial to minimizing the possibility that the systems will inadvertently increase crash risk.”

“There are things auto manufacturers can do to help keep drivers involved with the driving task and make them use the technologies responsibly,” says Kidd. “IIHS has a new ratings program, called Safeguards, that evaluates manufacturers’ implementation of driver monitoring technologies.”

To receive a good rating, Kidd says, “Vehicles with partial automation will need to ensure that drivers are looking at the road, that their hands are in a place where they’re ready to take control if the automation technology makes a mistake, and that they’re wearing their seatbelt.” Kidd admits that no technology can determine whether someone’s mind is focused on the road and the driving task. But by monitoring a person’s gaze, head posture, and hand position, sensors can make sure the person’s actions are consistent with someone who is actively engaged in driving. “The whole sense of this program is to make sure that the [level 2 driving automation] technology isn’t portrayed as being more capable than it is. It does support the driver on an ongoing basis, but it certainly does not replace the driver.”

The European Commission released a report in March pointing out that progress toward reducing road fatalities is stalling in too many countries. This sticking point in the number of roadway deaths is an example of a phenomenon known as risk homeostasis, where risk compensation serves to counterbalance the intended effects of a safety advance, rendering the net effect unchanged. Asked what will counteract risk compensation so there will be a significant reduction in the annual worldwide roadway death toll, the IIHS’s Kidd said, “We are still in the early stages of understanding whether automating all of the driving task, like what Waymo and Cruise are doing with their level 4 driving systems, is the answer. It looks like they will be safer than human drivers but it’s still too early to tell.”

-

Defending Taiwan With Chips and Drones

by Harry Goldstein on 01. October 2024. at 21:00

The majority of the world’s advanced logic chips are made in Taiwan, and most of those are made by one company: Taiwan Semiconductor Manufacturing Co. (TSMC). While it seems risky for companies like Nvidia, Apple, and Google to depend so much on one supplier, for Taiwan’s leaders, that’s a feature, not a bug.

In fact, this concentration of chip production is the tentpole of the island’s strategy to defend itself from China, which, under the Chinese Communist Party’s One China Principle, considers Taiwan a renegade province that will be united with the mainland one way or another.

Taiwan’s Silicon Shield strategy rests on two assumptions. The first is that the United States won’t let China take the island and its chip production facilities (which reportedly have kill switches on the most advanced extreme ultraviolet machines that could render them useless in the event of an attack). The second is that China won’t risk destroying perhaps the most vital part of its own semiconductor supply chain as a consequence of a hostile takeover.

The U.S. military seems steadfast in its resolve to keep Taiwan out of Chinese hands. In fact, one admiral has declared that the United States is prepared to unleash thousands of aerial and maritime drones in the event China invades.

“I want to turn the Taiwan Strait into an unmanned hellscape using a number of classified capabilities,” Adm. Samuel Paparo, commander of the U.S. Indo-Pacific Command, told Washington Post columnist Josh Rogin in June.

There is now, two and a half years into the Russia-Ukraine war, plenty of evidence that the kinds of drones Paparo referenced can play key roles in logistics, surveillance, and offensive operations. And U.S. war planners are learning a lot from that conflict that applies to Taiwan’s situation.

As Bryan Clark, a senior fellow at the Hudson Institute and director of the Institute’s Center for Defense Concepts and Technology, points out in this issue, “U.S. naval attack drones positioned on the likely routes could disrupt or possibly even halt a Chinese invasion, much as Ukrainian sea drones have denied Russia access to the western Black Sea.”

There is no way to know when or even if a conflict over Taiwan will commence. So for now, Taiwan is doubling down on its Silicon Shield by launching more renewable generation projects so that its chipmakers can meet customer demands to minimize the carbon footprint of chip production.

The island already has 2.4 gigawatts of offshore-wind capacity with another 3 GW planned or under construction, reports IEEE Spectrum contributing editor Peter Fairley, who visited Taiwan earlier this year. In “Powering Taiwan’s Silicon Shield” [p. 22], he notes that the additional capacity will help TSMC meet its goal of having 60 percent of its energy come from renewables by 2030. Fairley also reports on clever power-saving innovations deployed in TSMC’s fabs to reduce the company’s annual electricity consumption by about 175 gigawatt-hours.

Between bringing more renewable energy online and making their fabs more efficient, the chipmakers of Taiwan hope to keep their customers happy while the island’s leaders hope to deter its neighbor across the strait—if not with its Silicon Shield, then with the silicon brains of the drone hordes that could fly and float into the breach in the island’s defense.

-

Special Events Mark IEEE Honor Society’s 120th Anniversary

by Amy Michael on 01. October 2024. at 18:00

On 28 October 1904, on the campus of the University of Illinois Urbana-Champaign, Eta Kappa Nu (HKN) was founded by a group of young men led by Maurice L. Carr, whose vision was to create an honor society that recognized electrical engineers who embodied the ideals of scholarship, character, and attitude and to promote the profession.

From its humble beginning until today, HKN has established nearly 280 university chapters worldwide. Currently, the honor society boasts more than 40,000 members. Having inducted roughly 200,000 members during its existence, it has never strayed from the core principles espoused by its founders.

“Eta Kappa Nu grew because there have always been many members who have been willing and eager to serve it loyally and unselfishly,” Carr said in “Dreams That Have Come True,” an article published in the October/November 1939 issue of the honor society’s triannual magazine, The Bridge.

In 2010, HKN became the honor society of IEEE, resulting in global expansion, establishing chapters outside the United States, and inducting students and professionals from the IEEE fields of interest.

Today the character portion of HKN’s creed translates into its students collectively providing more than 100,000 hours of service each year to their communities and campuses through science, technology, engineering, and mathematics outreach programs and tutoring.

Hackathons, fireside chats, and more

In honor of its 120th anniversary, IEEE-HKN is celebrating with several exciting events.

HKN’s first online hackathon is scheduled to be held from 11 to 22 October. Students around the world will compete to solve engineering problems for prizes and bragging rights.

On 28 October, IEEE-HKN’s Founders Day, 2019 IEEE-HKN President Karen Panetta is hosting a virtual fireside chat with HKN Eminent Members Vint Cerf and Bob Kahn. The two Internet pioneers are expected to share the inside story of how they conceived and designed its architecture.

The fireside chat is due to come after a presentation for the hackathon winners, and it will be followed by an online networking session for participants to share their HKN stories and brainstorm how to continue the forward momentum for the next generation of engineers.

The three events are open to everyone. Register now to attend any or all of them.

HKN has set up a dedicated page showcasing how members and nonmembers can participate in the celebrations. The page also honors the society’s proud history with a time line of its impressive growth during the past 120 years.

IEEE-HKN alumni have been gathering at events across the United States to celebrate the anniversary. They include IEEE Region 3’s SoutheastCon, the IEEE Life Members Conference, the IEEE Communication Society Conference, the IEEE Power & Energy Society General Meeting, the IEEE World Forum on Public Safety Technology, and the Frontiers in Education Conference.

A gathering of the alumni of the Eta Kappa Nu San Francisco Chapter that took place in October 1925 on the top floor of the Pacific Telephone and Telegraph Company’s building. IEEE-HKN

IEEE-HKN’s reach and impact

The honor society’s leaders attribute its success to never straying from its core founding principles while remaining relevant. It offers support throughout its members’ career journeys and provides a vibrant network of like-minded professionals. Today that translates to annual conferences, webinars, podcasts, alumni networking opportunities, professional and leadership development services, mentoring initiatives, an awards program, and scholarships.

“When I joined HKN as a student, my chapter meant a great deal to me, as a community of friends, as leadership and professional development, and as inspiration to keep working toward my next goal,” says Sean Bentley, 2024 IEEE-HKN president-elect. “As I moved through my career and looked for ways to give back, I was happy to answer the call for service with HKN.”

HKN’s success is made possible by the commitment of its volunteers and donors, who give their time, expertise, and resources guided by a zeal to nurture the next generation of engineering professionals.

Matteo Alasio, an IEEE-HKN alum and former president of the Mu Nu chapter, at the Politecnico di Torino in Italy, says, “My favorite part of being an HKN member is the sense of community. Being part of a big family that works together to help students and promote professional development is incredibly fulfilling. It’s inspiring to collaborate with others who are dedicated to making a positive impact.”

HKN is a lifelong designation. If you are inducted into Eta Kappa Nu, your membership never expires. Visit the IEEE-HKN website to reconnect with the society or to learn more about its programs, chapters, students, and opportunities. You also can sign up for its 2024 Student Leadership Conference, to be held in November in Charlotte, N.C.

-

Brain-like Computers Tackle the Extreme Edge

by Dina Genkina on 01. October 2024. at 16:00

Neuromorphic computing draws inspiration from the brain, and Steven Brightfield, chief marketing officer for Sydney-based startup BrainChip, says that makes it perfect for use in battery-powered devices doing AI processing.

“The reason for that is evolution,” Brightfield says. “Our brain had a power budget.” Similarly, the market BrainChip is targeting is power constrained. “You have a battery and there’s only so much energy coming out of the battery that can power the AI that you’re using.”

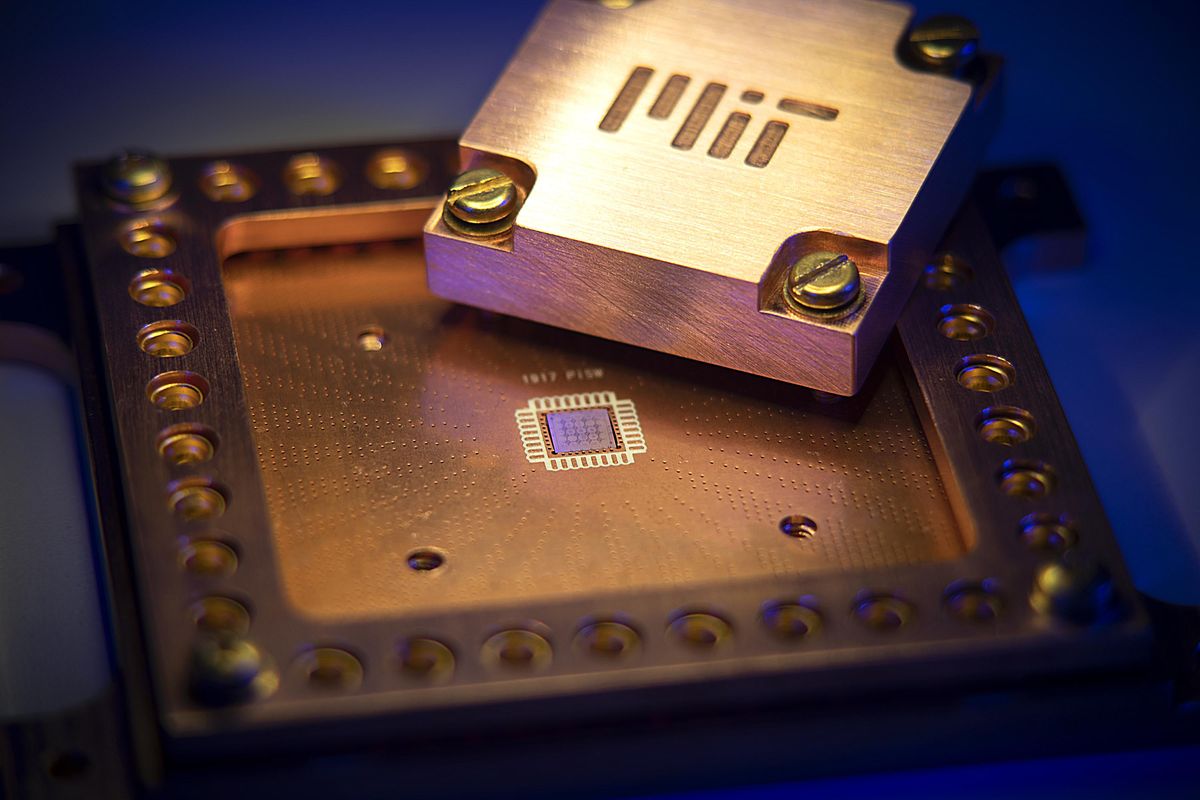

Today, BrainChip announced that their chip design, the Akida Pico, is now available. Akida Pico, which was developed for use in power-constrained devices, is a stripped-down, miniaturized version of BrainChip’s Akida design, introduced last year. Akida Pico consumes 1 milliwatt of power, or even less depending on the application. The chip design targets the extreme edge, which comprises small user devices such as mobile phones, wearables, and smart appliances that typically have severe limitations on power and wireless communications capacities. Akida Pico joins similar neuromorphic devices on the market designed for the edge, such as Innatera’s T1 chip, announced earlier this year, and SynSense’s Xylo, announced in July 2023.

Neuron Spikes Save Energy

Neuromorphic computing devices mimic the spiking nature of the brain. Instead of traditional logic gates, computational units—referred to as ‘neurons’—send out electrical pulses, called spikes, to communicate with each other. If a spike reaches a certain threshold when it hits another neuron, that one is activated in turn. Different neurons can create spikes independent of a global clock, resulting in highly parallel operation.
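The threshold behavior described above can be sketched in a few lines of Python. This is a generic integrate-and-fire toy model, not BrainChip's design: the class name, the threshold of 1.0, and the leak factor of 0.9 are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class IntegrateAndFireNeuron:
    threshold: float = 1.0   # hypothetical firing threshold
    leak: float = 0.9        # hypothetical per-step decay of stored potential
    potential: float = 0.0   # accumulated input carried between steps

    def step(self, weighted_input: float) -> bool:
        """Accumulate input and report whether the neuron spikes this step."""
        self.potential = self.potential * self.leak + weighted_input
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


neuron = IntegrateAndFireNeuron()
spikes = [neuron.step(x) for x in [0.4, 0.4, 0.4, 0.0, 0.0, 1.2]]
print(spikes)  # [False, False, True, False, False, True]
```

Three small inputs have to accumulate before the first spike, while a single large input fires the neuron immediately. Steps with no spike produce no output for downstream neurons to process.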

A particular strength of this approach is that power is only consumed when there are spikes. In a regular deep learning model, each artificial neuron simply performs an operation on its inputs: It has no internal state. In a spiking neural network architecture, in addition to processing inputs, a neuron has an internal state. This means the output can depend not only on the current inputs, but on the history of past inputs, says Mike Davies, director of the neuromorphic computing lab at Intel. These neurons can choose not to output anything if, for example, the input hasn’t changed sufficiently from previous inputs, thus saving energy.
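As a rough illustration of a neuron that stays silent when its input has barely changed, consider the sketch below. It is an assumption-laden toy, not Intel's or BrainChip's implementation: the class name and the `min_change` parameter are hypothetical, and real spiking hardware encodes events quite differently.

```python
class ChangeTriggeredNeuron:
    """Emits a value only when the input moves far enough from the last one sent."""

    def __init__(self, min_change: float):
        self.min_change = min_change  # hypothetical sensitivity setting
        self.last_sent = None         # internal state: last value passed downstream

    def step(self, value: float):
        if self.last_sent is None or abs(value - self.last_sent) >= self.min_change:
            self.last_sent = value
            return value              # an event is emitted
        return None                   # nothing emitted, so no downstream work


stream = [0.50, 0.51, 0.52, 0.90, 0.91, 0.20]
neuron = ChangeTriggeredNeuron(min_change=0.1)
events = [neuron.step(v) for v in stream]
sent = sum(e is not None for e in events)
print(events)  # [0.5, None, None, 0.9, None, 0.2]
print(sent)    # 3
```

Here only three of six samples generate an event. The output depends on the remembered state as well as the current input, which is the distinction drawn above between spiking neurons and stateless artificial neurons.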

“Where neuromorphic really excels is in processing signal streams when you can’t afford to wait to collect the whole stream of data and then process it in a delayed, batched manner. It’s suited for a streaming, real-time mode of operation,” Davies says. Davies’ team recently published a result showing their Loihi chip’s energy use was one-thousandth of a GPU’s use for streaming use cases.

Akida Pico includes its neural processing engine, along with event processing and model weight storage SRAM units, direct memory units for spike conversion and configuration, and optional peripherals. Brightfield says in some devices, such as simple detectors, the chip can be used as a stand-alone device, without a microcontroller or any other external processing. For other use cases that require further on-device processing, it can be combined with a microcontroller, CPU, or any other processing unit.

BrainChip’s Akida Pico design includes a miniaturized version of their neuromorphic processing engine, suitable for small, battery-operated devices. BrainChip

BrainChip has also worked to develop AI model architectures that are optimized for minimal power use in their device. They showed off their techniques with an application that detects keywords in speech. This is useful for voice assistants like Amazon’s Alexa, which waits for the ‘Hello, Alexa’ keywords to activate.

The BrainChip team used their recently developed model architecture to reduce power use to one-fifth of the power consumed by traditional models running on a conventional microprocessor, as demonstrated in their simulator. “I think Amazon spends $200 million a year in cloud computing services to wake up Alexa,” Brightfield says. “They do that using a microcontroller and a neural processing unit (NPU), and it still consumes hundreds of milliwatts of power.” If BrainChip’s solution indeed provides the claimed power savings for each device, the effect would be significant.

In a second demonstration, they used a similar machine learning model to demonstrate audio de-noising, for use in hearing aids or noise canceling headphones.

To date, neuromorphic computers have not found widespread commercial uses, and it remains to be seen if these miniature edge devices will take off, in part because of the diminished capabilities of such low-power AI applications. “If you’re at the very tiny neural network level, there’s just a limited amount of magic you can bring to a problem,” Intel’s Davies says.

BrainChip’s Brightfield, however, is hopeful that the application space is there. “It could be speech wake up. It could just be noise reduction in your earbuds or your AR glasses or your hearing aids. Those are all the kind of use cases that we think are targeted. We also think there’s use cases that we don’t know that somebody’s going to invent.”

-

Happy IEEE Day!

by IEEE on 01. October 2024. at 13:10

IEEE Day commemorates the first gathering of IEEE members to share their technical ideas in 1884.

First celebrated in 2009, IEEE Day marks its 15th anniversary this year.

Worldwide celebrations demonstrate the ways thousands of IEEE members in local communities join together to collaborate on ideas that leverage technology for a better tomorrow.

Celebrate IEEE Day with colleagues from IEEE Sections, Student Branches, Affinity groups, and Society Chapters. Events happen both virtually and in person all around the world.

Join the celebration around the world!

Every year, IEEE members from IEEE Sections, Student Branches, Affinity groups, and Society Chapters join hands to celebrate IEEE Day. Events happen both virtually and in person. IEEE Day celebrates the first time in history when engineers worldwide gathered to share their technical ideas in 1884.

Special Activities & Offers for Members

Check out our special offers and activities for IEEE members and future members. And share these with your friends and colleagues.

Compete in contests and win prizes!

Have some fun and compete in the photo and video contests. Get your phone and camera ready when you attend one of the events. This year we will have both Photo and Video Contests. You can submit your entries in STEM, technical, humanitarian and social categories.

-

Even Gamma Rays Can't Stop This Memory

by Kohava Mendelsohn on 01. October 2024. at 11:00

This article is part of our exclusive IEEE Journal Watch series in partnership with IEEE Xplore.

In space, high-energy gamma radiation can change the properties of semiconductors, altering how they work or rendering them completely unusable. Finding devices that can withstand radiation is important not just to keep astronauts safe but also to ensure that a spacecraft lasts the many years of its mission. Constructing a device that can easily measure radiation exposure is just as valuable. Now, a globe-spanning group of researchers has found that a type of memristor, a device that stores data as resistance even in the absence of a power supply, can not only measure gamma radiation but also heal itself after being exposed to it.

Memristors have demonstrated the ability to self-heal under radiation before, says Firman Simanjuntak, a professor of materials science and engineering at the University of Southampton whose team developed this memristor. But until recently, no one really understood how they healed—or how best to apply the devices. Recently, there’s been “a new space race,” he says, with more satellites in orbit and more deep-space missions on the launchpad, so “everyone wants to make their devices … tolerant towards radiation.” Simanjuntak’s team has been exploring the properties of different types of memristors since 2019, but now wanted to test how their devices change when exposed to blasts of gamma radiation.

Normally, memristors set their resistance according to their exposure to high-enough voltage. One voltage boosts the resistance, which then remains at that level when subject to lower voltages. The opposite voltage decreases the resistance, resetting the device. The relationship between voltage and resistance depends on the previous voltage, which is why the devices are said to have a memory.
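The memory effect described above can be mimicked with a toy two-state model. The sketch below is only an illustration under stated assumptions: the switching voltages and resistance values are invented, and a real memristor's resistance changes continuously rather than jumping between two levels.

```python
class BipolarMemristor:
    """Toy model: resistance changes only when a switching voltage is reached."""

    def __init__(self, set_voltage=1.0, reset_voltage=-1.0,
                 r_high=1_000_000.0, r_low=1_000.0):
        # All four values are hypothetical, chosen only for illustration.
        self.set_voltage = set_voltage
        self.reset_voltage = reset_voltage
        self.r_high = r_high
        self.r_low = r_low
        self.resistance = r_low

    def apply(self, voltage: float) -> float:
        if voltage >= self.set_voltage:
            self.resistance = self.r_high   # one polarity boosts the resistance
        elif voltage <= self.reset_voltage:
            self.resistance = self.r_low    # the opposite polarity resets it
        return self.resistance              # smaller voltages leave it unchanged


device = BipolarMemristor()
history = [device.apply(v) for v in (0.2, 1.5, 0.2, -1.5)]
print(history)  # [1000.0, 1000000.0, 1000000.0, 1000.0]
```

The same 0.2-volt read returns a different resistance before and after the 1.5-volt pulse, so the reading reflects the device's voltage history.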

The hafnium oxide memristor used by Simanjuntak is a type of memristor that cannot be reset, called a WORM (write once, read many times) device, suitable for permanent storage. Once it is set with a negative or positive voltage, the opposing voltage does not change the device. It consists of several layers of material: first conductive platinum, then aluminum-doped hafnium oxide (an insulator), then a layer of titanium, then a layer of conductive silver at the top.

When voltage is applied to these memristors, a bridge of silver ions forms in the hafnium oxide, which allows the current to flow through, setting its conductance value. Unlike in other memristors, this device’s silver bridge is stable and fixes in place, which is why once the device is set, it usually can’t be returned to a rest state.

That is, unless radiation is involved. The first discovery the researchers made was that under gamma radiation, the device acts as a resettable switch. They believe that the gamma rays break the bond between the hafnium and oxygen atoms, causing a layer of titanium oxide to form at the top of the memristor, and a layer of platinum oxide to form at the bottom. The titanium oxide layer creates an extra barrier for the silver ions to cross, so a weaker bridge is formed, one that can be broken and reset by a new voltage.

The extra platinum oxide layer caused by the gamma rays also serves as a barrier to incoming electrons. This means a higher voltage is required to set the memristor. Using this knowledge, the researchers were able to create a simple circuit that measured amounts of radiation by checking the voltage that was required to set the memristor. A higher voltage meant the device had encountered more radiation.
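The measurement idea can be sketched in software, although the researchers built a circuit and the article does not describe its details. In the hypothetical Python below, the voltage is ramped until a device sets, and the result is compared with that of an unexposed device. The function names, the 0.1-volt step, and the simulated set voltages are all assumptions, and no calibration from voltage shift to dose is implied.

```python
from typing import Callable, Optional


def find_set_voltage(is_set_at: Callable[[float], bool],
                     v_max: float = 5.0, step: float = 0.1) -> Optional[float]:
    """Ramp the voltage upward and return the first value at which the device sets."""
    n_steps = int(round(v_max / step))
    for i in range(1, n_steps + 1):
        v = round(i * step, 6)  # avoid floating-point drift in the ramp
        if is_set_at(v):
            return v
    return None  # device never set within the sweep range


def simulated_device(true_set_voltage: float) -> Callable[[float], bool]:
    """Stand-in for real hardware: sets once the applied voltage is high enough."""
    return lambda v: v >= true_set_voltage


baseline = find_set_voltage(simulated_device(1.0))     # unexposed device (made-up value)
irradiated = find_set_voltage(simulated_device(1.75))  # exposed device (made-up value)
shift = irradiated - baseline
print(baseline, irradiated)  # 1.0 1.8
```

A positive `shift` indicates that the second device needed a higher voltage to set, which the article associates with greater radiation exposure. Converting that shift into a dose would require calibration data not given here.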

From a regular state, the memristor forms a stable conductive bridge. Under radiation, a thicker layer of titanium oxide creates a slower-forming, weaker conductive bridge. OM Kumar et al./IEEE Electron Device Letters

But the true marvel of these hafnium oxide memristors is their ability to self-heal after a big dose of radiation. The researchers treated the memristor with 5 megarads of radiation, 500 times more than a lethal dose in humans. Once the gamma radiation was removed, the titanium oxide and platinum oxide layers gradually dissipated, the oxygen atoms returning to form hafnium oxide again. After 30 days, instead of still requiring a higher-than-normal voltage to form, the devices that were exposed to radiation required the same voltage to form as untouched devices.

“It’s quite exciting what they’re doing,” says Pavel Borisov, a researcher at Loughborough University in the UK who studies how to use memristors to mimic the synapses in the human brain. His team conducted similar experiments with a silicon-oxide-based memristor, and also found that radiation changed the behavior of the device. In Borisov’s experiments, however, the memristors did not heal after the radiation.

Memristors are simple, lightweight, and low power, which already makes them ideal for use in space applications. In the future, Simanjuntak hopes to use memristors to develop radiation-proof memory devices that would enable satellites in space to do onboard calculations. “You can use a memristor for data storage, but also you can use it for computation,” he says. “So you could make everything simpler, and reduce the costs as well.”

This research was accepted for publication in a future issue of Electron Device Letters.

-

Leading Educator Weighs in on University DEI Program Cuts

by Kathy Pretz on 30. September 2024. at 18:00

Many U.S. university students returning to campus this month will find their school no longer has a diversity, equity, and inclusion program. More than 200 universities in 30 states so far this year have eliminated, cut back, or changed their DEI efforts, according to an article in The Chronicle of Higher Education.

It is happening mostly at publicly funded universities, because state legislators and governors are enacting laws that prohibit or defund DEI programs. They’re also cutting budgets and sometimes implementing other measures that restrict diversity efforts. Some colleges have closed their DEI programs altogether to avoid political pressure.

The Institute asked Andrea J. Goldsmith, a top educator and longtime proponent of diversity efforts within the engineering field and society, to weigh in.

Goldsmith shared her personal opinion about DEI with The Institute; she was not speaking as Princeton’s dean of engineering and applied sciences. A wireless communications pioneer, she is an IEEE Fellow who launched the IEEE Board of Directors Diversity and Inclusion Committee in 2019 and once served as its chair.

She received this year’s IEEE Mulligan Education Medal for educating, mentoring, and inspiring generations of students, and for authoring pioneering textbooks in advanced digital communications.

“For the longest time,” Goldsmith says, “there was so much positive momentum toward improving diversity and inclusion. And now there’s a backlash, which is really unfortunate, but it’s not everywhere.” She says she is proud of her university’s president, who has been vocal that diversity is about excellence and that Princeton is better because its students and faculty are diverse.

In the interview, Goldsmith spoke about why she thinks the topic has become so controversial, what measures universities can take to ensure their students have a sense of belonging, and what can be done to retain female engineers—a group that has been underrepresented in the field.

The Institute: What do you think is behind the movement to dissolve DEI programs?

Goldsmith: That’s a very complex question, and I certainly don’t have the answer.

It has become a politically charged issue because there’s a notion that DEI programs are really about quotas or advancing people who are not deserving of the positions they have been given. Part of the backlash also was spurred by the Oct. 7 attack on Israel, the war in Gaza, and the protests. One notion is that Jewish students are also a minority that needs protection, and why is it that DEI programs are only focused on certain segments of the population as opposed to diversity and inclusion for everyone, for people with all different perspectives, and those who are victims or subject to explicit bias, implicit bias, or discrimination? I think that these are legitimate concerns, and that programs around diversity and inclusion should be addressing them.

The goal of diversity and inclusion is that everybody should be able to participate and reach their full potential. That should go for every profession and, in particular, every segment of the engineering community.

Also in the middle of this backlash is the U.S. Supreme Court’s 2023 decision that ended race-conscious affirmative action in college admissions—which means that universities cannot take diversity into account explicitly in their admission of students. The decision in and of itself only affects undergraduate admissions, but it has raised concerns about broadening the decision to faculty hiring or for other kinds of programs that promote diversity and inclusion within universities and private companies.

I think the Supreme Court’s decision, along with the political polarization and the recent protests at universities, have all been pieces of a puzzle that have come together to paint all DEI programs with a broad brush of not being about excellence and lowering barriers but really being about promoting certain groups of people at the expense of others.

How might the elimination of DEI programs impact the engineering profession specifically?

Goldsmith: I think it depends on what it means to eliminate DEI programs. Programs to promote the diversity of ideas and perspectives in engineering are essential for the success of the profession. As an optimist, I believe we should continue to have programs that ensure our profession can bring in people with diverse perspectives and experiences.

Does that mean that every DEI program in engineering companies and universities needs to evolve or change? Not necessarily. Maybe some programs do because they aren’t necessarily achieving the goal of ensuring that diverse people can thrive.

“My work in the profession of engineering to enhance diversity and inclusion has really been about excellence for the profession.”

We need to be mindful of the concerns that have been raised about DEI programs. I don’t think they are completely unfounded.

If we do the easy thing—which is to just eliminate the programs without replacing them with something else or evolving them—then it will hurt the engineering profession.

The metrics being used to assess whether these programs are achieving their goals need to be reviewed. If they are not, the programs need to be improved. If we do that, I think DEI programs will continue to positively impact the engineering profession.

For universities that have cut or reduced their programs, what are some other ways to make sure all students have a sense of belonging?

Goldsmith: I would look at what other initiatives could be started that would have a different name but still have the goal of ensuring that students have a sense of belonging.

Long before DEI programs, there were other initiatives within universities that helped students figure out their place within the school, initiated them into what it means to be a member of the community, and created a sense of belonging through various activities. These include prefreshman and freshman orientation programs, student groups and organizations, student-led courses (with or without credit), eating clubs, fraternities, and sororities, to name just a few. I am referring here to any program within a university that creates a sense of community for those who participate—which is a pretty broad category of programs.

These continue, but they aren’t called DEI programs. They’ve been around for decades, if not since the university system was founded.

How can universities and companies ensure that all people have a good experience in school and the workplace?

Goldsmith: This year has been a huge challenge for universities, with protests, sit-ins, arrests, and violence.

One of the things I said in my opening remarks to freshmen at the start of this semester is that you will learn more from people around you who have different viewpoints and perspectives than you will from people who think like you. And that engaging with people who disagree with you in a respectful and scholarly way and being open to potentially changing your perspective will not only create a better community of scholars but also better prepare you for postgraduation life, where you may be interacting with a boss, coworkers, family, and friends who don’t agree with you.

Finding ways to engage with people who don’t agree with you is essential for engaging with the world in a positive way. I know we don’t think about that as much in engineering because we’re going about building our technologies, doing our equations, or developing our programs. But so much of engineering is collaboration and understanding other people, whether it’s your customers, your boss, or your collaborators.

I would argue everyone is diverse. There’s no such thing as a nondiverse person, because no two people have the exact same set of experiences. Figuring out how to engage with people who are different is essential for success in college, grad school, your career, and your life.

I think it’s a bit different in companies, because you can fire someone who does a sit-in in the boss’s office. You can’t do that in universities. But I think workplaces also need to create an environment where diverse people can engage with each other beyond just what they’re working on in a way that’s respectful and intellectual.

Reports show that half of female engineers leave the high-tech industry because they have a poor work experience. Why is that, and what can be done to retain women?

Goldsmith: That is one of the harder questions facing the engineering profession. The challenges that women face include implicit, and sometimes explicit, bias. In extreme cases, there are sexual and other kinds of harassment, and bullying. These egregious behaviors have decreased some. The Me Too movement raised a lot of awareness, but [poor behavior] still is far more prevalent than we want it to be. It’s very difficult for women who have experienced that kind of egregious and illegal behavior to speak up. For example, if it’s their Ph.D. advisor, what does that mean if they speak up? Do they lose their funding? Do they lose all the research they’ve done? This powerful person can bad-mouth them for job applications and potential future opportunities.

So, it’s very difficult to curb these behaviors. However, there has been a lot of awareness raised, and universities and companies have put protections in place against them.

Then there’s implicit bias, where a qualified woman is passed over for a promotion, or women are asked to take meeting notes but not the men. Or a woman leader gets a bad performance review because she doesn’t take no for an answer, is too blunt, or too pushy. All these are things that male leaders are actually lauded for.

There is data on the barriers and challenges that women face and what universities and employers can do to mitigate them. These are the experiences that hurt women’s morale and upward mobility and, ultimately, make them leave the profession.

One of the most important things for a woman to be successful in this profession is to have mentors and supporters. So it is important to make sure that women engineers are assigned mentors at every stage, from student to senior faculty or engineer and everything in between, to help them understand the challenges they face and how to deal with them, as well as to promote and support them.

I also think having leaders in universities and companies recognize and articulate the importance of diversity helps set the tone from the top down and tends to mitigate some of the bias and implicit bias in people lower in the organization.

I think the backlash against DEI is going to make it harder for leaders to articulate the value of diversity, and to put in place some of the best practices around ensuring that diverse people are considered for positions and reach their full potential.

We have definitely taken a step backward in the past year on the understanding that diversity is about excellence and implementing best practices that we know work to mitigate the challenges that diverse people face. But that just means we need to redouble our efforts.

Although this isn’t the best time to be optimistic about diversity in engineering, if we take the long view, I think that things are certainly better than they were 20 or 30 years ago. And I think 20 or 30 years from now they’ll be even better.

-

The Incredible Story Behind the First Transistor Radio

by Allison Marsh on 30. September 2024. at 14:00

Imagine if your boss called a meeting in May to announce that he’s committing 10 percent of the company’s revenue to the development of a brand-new mass-market consumer product, made with a not-yet-ready-for-mass-production component. Oh, and he wants it on store shelves in less than six months, in time for the holiday shopping season. Ambitious, yes. Kind of nuts, also yes.

But that’s pretty much what Pat Haggerty, vice president of Texas Instruments, did in 1954. The result was the Regency TR-1, the world’s first commercial transistor radio, which debuted 70 years ago this month. The engineers delivered on Haggerty’s audacious goal, and I certainly hope they received a substantial year-end bonus.

Why did Texas Instruments make the Regency TR-1 transistor radio?

But how did Texas Instruments come to make a transistor radio in the first place? TI traces its roots to a company called Geophysical Service Inc. (GSI), which made seismic instrumentation for the oil industry as well as electronics for the military. In 1945, GSI hired Patrick E. Haggerty as the general manager of its laboratory and manufacturing division and its electronics work. By 1951, Haggerty’s division was significantly outpacing GSI’s geophysical division, and so the Dallas-based company reorganized as Texas Instruments to focus on electronics.

Meanwhile, on 30 June 1948, Bell Labs announced John Bardeen and Walter Brattain’s game-changing invention of the transistor. No longer would electronics be dependent on large, hot vacuum tubes. The U.S. government chose not to classify the technology because of its potentially broad applications. In 1951, Bell Labs began licensing the transistor for US $25,000 through the Western Electric Co.; Haggerty bought a license for TI the following year.

TI was still a small company, with not much in the way of R&D capacity. But Haggerty and the other founders wanted it to become a big and profitable company. And so they established research labs to focus on semiconductor materials and a project-engineering group to develop marketable products.

The TR-1 was the first transistor radio, and it ignited a desire for portable gadgets that continues to this day.

Bettmann/Getty Images

Haggerty made a good investment when he hired Gordon Teal, a 22-year veteran of Bell Labs. Although Teal wasn’t part of the team that invented the germanium transistor, he recognized that it could be improved by using a single grown crystal, such as silicon. Haggerty was familiar with Teal’s work from a 1951 Bell Labs symposium on transistor technology. Teal happened to be homesick for his native Texas, so when TI advertised for a research director in the New York Times, he applied, and Haggerty offered him the job of assistant vice president instead. Teal started at TI on 1 January 1953.

Fifteen months later, Teal gave Haggerty a demonstration of the first silicon transistor, and he presented his findings three and a half weeks later at the Institute of Radio Engineers’ National Conference on Airborne Electronics, in Dayton, Ohio. His innocuously titled paper, “Some Recent Developments in Silicon and Germanium Materials and Devices,” completely understated the magnitude of the announcement. The audience was astounded to hear that TI had not just one but three types of silicon transistors already in production, as Michael Riordan recounts in his excellent article “The Lost History of the Transistor” (IEEE Spectrum, October 2004).

And fun fact: The TR-1 shown at top once belonged to Willis Adcock, a physical chemist hired by Teal to perfect TI’s silicon transistors as well as transistors for the TR-1. (The radio is now in the collections of the Smithsonian’s National Museum of American History.)

The TR-1 became a product in less than six months

This advancement in silicon put TI on the map as a major player in the transistor industry, but Haggerty was impatient. He wanted a transistorized commercial product now, even if that meant using germanium transistors. On 21 May 1954, Haggerty challenged a research group at TI to have a working prototype of a transistor radio by the following week; four days later, the team came through, with a breadboard containing eight transistors. Haggerty decided that was good enough to commit $2 million—just under 10 percent of TI’s revenue—to commercializing the radio.

Of course, a working prototype is not the same as a mass-production product, and Haggerty knew TI needed a partner to help manufacture the radio. That partner turned out to be Industrial Development Engineering Associates (IDEA), a small company out of Indianapolis that specialized in antenna boosters and other electronic goods. They signed an agreement in June 1954 with the goal of announcing the new radio in October. TI would provide the components, and IDEA would manufacture the radio under its Regency brand.

Germanium transistors at the time cost $10 to $15 apiece. With eight transistors, the radio was too expensive to be marketed at the desired price point of $50 (more than $580 today, which is coincidentally about what it’ll cost you to buy one in good condition on eBay). Vacuum-tube radios were selling for less, but TI and IDEA figured early adopters would pay that much to try out a new technology. Part of Haggerty’s strategy was to increase the volume of transistor production to eventually lower the per-transistor cost, which he managed to push down to about $2.50.
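The pricing problem is easy to check with the figures quoted above. The short Python sketch below counts only the transistors and ignores every other part; pairing the final four-transistor count with the later $2.50 unit price is an illustrative assumption, since the article does not say when that price was reached.

```python
# Transistor cost per radio, using only figures quoted in the article.
TARGET_PRICE = 50.00  # desired retail price, in 1954 dollars


def transistor_cost(count, unit_price):
    """Total spent on transistors for one radio."""
    return count * unit_price


# Eight-transistor prototype at the 1954 range of $10 to $15 apiece.
prototype_low = transistor_cost(8, 10.00)
prototype_high = transistor_cost(8, 15.00)

# Hypothetical pairing: four transistors at the later price of about $2.50.
production = transistor_cost(4, 2.50)

print(f"Prototype transistors: ${prototype_low:.2f} to ${prototype_high:.2f}")
print(f"Four transistors at $2.50 each: ${production:.2f}")
print(f"Prototype exceeds target price: {prototype_low > TARGET_PRICE}")
```

Even at the low end, the prototype's transistors alone would have cost more than the intended retail price, which is why both the transistor count and the unit price had to come down.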

By the time TI met with IDEA, the breadboard was down to six transistors. It was IDEA’s challenge to figure out how to make the transistorized radio at a profit. According to an oral history with Richard Koch, IDEA’s chief engineer on the project, TI’s real goal was to make transistors, and the radio was simply the gimmick to get there. In fact, part of the TI–IDEA agreement was that any patents that came out of the project would be in the public domain so that TI was free to sell more transistors to other buyers.

At the initial meeting, Koch, who had never seen a transistor before in real life, suggested substituting a germanium diode for the detector (which extracted the audio signal from the desired radio frequency), bringing the transistor count down to five. After thinking about the configuration a bit more, Koch eliminated another transistor by using a single transistor for the oscillator/mixer circuit.

TI’s original prototype used eight germanium transistors, which engineers reduced to six and, ultimately, four for the production model.

Division of Work and Industry/National Museum of American History/Smithsonian Institution

The final design was four transistors set in a superheterodyne design, a type of receiver that combines two frequencies to produce an intermediate frequency that can be easily amplified, thereby boosting a weak signal and decreasing the required antenna size. The TR-1 had two transistors as intermediate-frequency amplifiers and one as an audio amplifier, plus the oscillator/mixer. Koch applied for a patent for the circuitry the following year.
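The frequency arithmetic behind a superheterodyne receiver can be sketched in a few lines of Python. This is an idealized illustration, not the TR-1's actual circuit values: the 455-kilohertz intermediate frequency is a commonly used AM value assumed here for the example, and the function names are hypothetical.

```python
# Idealized superheterodyne frequency arithmetic (illustrative values only).
IF_KHZ = 455.0  # assumed intermediate frequency; not taken from the article


def local_oscillator_for(f_rf_khz, f_if_khz):
    """High-side injection: tune the oscillator above the station by the IF."""
    return f_rf_khz + f_if_khz


def mixer_products(f_rf_khz, f_lo_khz):
    """An ideal mixer yields the difference and the sum of its two inputs."""
    return abs(f_lo_khz - f_rf_khz), f_lo_khz + f_rf_khz


for station_khz in (540.0, 1000.0, 1600.0):
    lo_khz = local_oscillator_for(station_khz, IF_KHZ)
    difference, total = mixer_products(station_khz, lo_khz)
    # The difference term is the same for every station, so the IF
    # amplifier stages only ever need to handle one fixed frequency.
    print(f"station {station_khz:.0f} kHz, oscillator {lo_khz:.0f} kHz, "
          f"difference {difference:.0f} kHz")
```

Because the difference frequency stays constant as the oscillator tracks the tuning dial, the amplifier stages can be optimized for a single frequency rather than the whole broadcast band.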

The radio ran on a 22.5-volt battery, which offered a playing life of 20 to 30 hours and cost about $1.25. (Such batteries were also used in the external power and electronics pack for hearing aids, the only other consumer product to use transistors up until this point.)

While IDEA’s team was working on the circuitry, they outsourced the design of the TR-1’s packaging to the Chicago firm of Painter, Teague, and Petertil. Their first design didn’t work because the components didn’t fit. Would their second design be better? As Koch later recalled, IDEA’s purchasing agent, Floyd Hayhurst, picked up the molding dies for the radio cases in Chicago and rushed them back to Indianapolis. He arrived at 2:00 in the morning, and the team got to work. Fortunately, everything fit this time. The plastic case was a little warped, but that was simple to fix: They slapped a wooden piece on each case as it came off the line so it wouldn’t twist as it cooled.

On 18 October 1954, Texas Instruments announced the first commercial transistorized radio. It would be available in select outlets in New York and Los Angeles beginning 1 November, with wider distribution once production ramped up. The Regency TR-1 Transistor Pocket Radio initially came in black, gray, red, and ivory. They later added green and mahogany, as well as a run of pearlescents and translucents: lavender, pearl white, meridian blue, powder pink, and lime.

The TR-1 got so-so reviews, faced competition

Consumer Reports was not enthusiastic about the Regency TR-1. In its April 1955 review, it found that transmission of speech was “adequate” under good conditions, but music transmission was unsatisfactory under any conditions, especially on a noisy street or crowded beach. The magazine used words such as whistle, squeal, thin, tinny, and high-pitched to describe various sounds, which is not exactly high praise for a radio. It also found fault with the on/off switch. Its recommendation: Wait for further refinement before buying one.

More than 100,000 TR-1s were sold in its first year, but the radio was never very profitable.

Archive PL/Alamy

The engineers at TI and IDEA didn’t necessarily disagree. They knew they were making a sound-quality trade-off by going with just four transistors. They also had quality-control problems with the transistors and other components, with initial failure rates up to 50 percent. Eventually, IDEA got the failure rate down to 12 to 15 percent.

Unbeknownst to TI or IDEA, Raytheon was also working on a transistorized radio—a tabletop model rather than a pocket-size one. That gave them the space to use six transistors, which significantly upped the sound quality. Raytheon’s radio came out in February 1955. Priced at $79.95, it weighed 2 kilograms and ran on four D-cell batteries. That August, a small Japanese company called Tokyo Telecommunications Engineering Corp. released its first transistor radio, the TR-55. A few years later, the company changed its name to Sony and went on to dominate the world’s consumer radio market.

The legacy of the Regency TR-1

The Regency TR-1 was a success by many measures: It sold 100,000 in its first year, and it helped jump-start the transistor market. But the radio was never very profitable. Within a few years, both Texas Instruments and IDEA left the commercial AM radio business, TI to focus on semiconductors, and IDEA to concentrate on citizens band radios. Yet Pat Haggerty estimated that this little pocket radio pushed the market in transistorized consumer goods ahead by two years. It was a leap of faith that worked out, thanks to some hardworking engineers with a vision.

Part of a continuing series looking at historical artifacts that embrace the boundless potential of technology.

An abridged version of this article appears in the October 2024 print issue as “The First Transistor Radio.”

References

In 1984, Michael Wolff conducted oral histories with IDEA’s lead engineer Richard Koch and purchasing agent Floyd Hayhurst. Wolff used them the following year in his IEEE Spectrum article “The Secret Six-Month Project,” which includes some great references at the end.

Robert J. Simcoe wrote “The Revolution in Your Pocket” for the fall 2004 issue of Invention and Technology to commemorate the 50th anniversary of the Regency TR-1.

As with many collectibles, the Regency TR-1 has its champions who have gathered together many primary sources. For example, Steve Reyer, a professor of electrical engineering at the Milwaukee School of Engineering before he passed away in 2018, organized his efforts in a webpage that’s now hosted by https://www.collectornet.net.

-

Disabling a Nuclear Weapon in Midflight

by John R. Allen on 29. September 2024. at 13:00

In 1956 Henry Kissinger speculated in Foreign Affairs about how the nuclear stalemate between the United States and the Soviet Union could force national security officials into a terrible dilemma. His thesis was that the United States risked sending a signal to potential aggressors that, faced with conflict, defense officials would have only two choices: settle for peace at any price, or retaliate with thermonuclear ruin. Not only had “victory in an all-out war become technically impossible,” Kissinger wrote, but in addition, it could “no longer be imposed at acceptable cost.”

His conclusion was that decision-makers needed better options between these catastrophic extremes. And yet this gaping hole in nuclear response policy persists to this day. With Russia and China leading an alliance actively opposing Western and like-minded nations, with war in Europe and the Middle East, and spiraling tensions in Asia, it would not be histrionic to suggest that the future of the planet is at stake. It is time to find a way past this dead end.

Seventy years ago only the Soviet Union and the United States possessed nuclear weapons. Today there are eight or nine countries that have weapons of mass destruction. Three of them—Russia, China, and North Korea—have publicly declared irreconcilable opposition to American-style liberal democracy.

Their antagonism creates an urgent security challenge. During its war with Ukraine, now in its third year, Russian leadership has repeatedly threatened to use tactical nuclear weapons. Then, earlier this year, the Putin government blocked United Nations enforcement of North Korea’s compliance with international sanctions, enabling the Hermit Kingdom to more easily circumvent access restrictions on nuclear technology.

Thousands of nuclear missiles can be in the air within minutes of a launch command; the consequence of an operational mistake or security miscalculation would be the obliteration of global society. Considered in this light, there is arguably no more urgent or morally necessary imperative than devising a means of neutralizing nuclear-equipped missiles midflight, should such a mistake occur.

Today the delivery of a nuclear package is irreversible once the launch command has been given. It is impossible to recall or deactivate a land-based, sea-based, or cruise missile once it is on its way. This is a deliberate policy-and-design choice born of concern that electronic sabotage, for example in the form of hostile radio signals, could disable the weapons once they are in flight.

And yet the possibility of a misunderstanding leading to nuclear retaliation remains all too real. For example, in 1983, Stanislav Petrov literally saved the world by overruling, based on his own judgement, a “high reliability” report from the Soviet Union’s Oko satellite surveillance network. He was later proven correct; the system had mistakenly interpreted sunlight reflections off high-altitude clouds as rocket flares, indicating an American attack. Had he followed his training and allowed a Soviet retaliation to proceed, his superiors would have realized within minutes that they had made a horrific mistake in response to a technical glitch, not an American first strike.

A Trident I submarine-launched ballistic missile was test fired from the submarine USS Mariano G. Vallejo, which was decommissioned in 1995.

U.S. Navy

So why, 40 years later, do we still lack a means of averting the unthinkable? In his book Command and Control, Eric Schlosser quoted an early commander in chief of the Strategic Air Command (SAC), General Thomas S. Power, who explained why there is still no way to revoke a nuclear order. Power said that the very existence of a recall or self-destruct mechanism “would create a fail-disable potential for knowledge agents to ‘dud’” the weapon. Schlosser wrote that “missiles being flight-tested usually had a command-destruct mechanism—explosives attached to the airframe that could be set off by remote control, destroying the missile if it flew off course. SAC refused to add that capability to operational missiles, out of concern that the Soviets might find a way to detonate them all in midflight.”

In 1990, Sherman Frankel pointed out in Science and Global Security that “there already exists an agreement between the United States and the Soviet Union, usually referred to as the 1971 Accidents Agreement, that specifies what is to be done in the event of an accidental or unauthorized launch of a nuclear weapon.” The relevant section says that “in the event of an accident, the Party whose nuclear weapon is involved will immediately make every effort to take necessary measures to render harmless or destroy such weapon without its causing damage.” That’s a nice thought, but “in the ensuing decades, no capability to remotely divert or destroy a nuclear-armed missile...has been deployed by the U.S. government,” Frankel says. This is still true today.

The inability to reverse a nuclear decision has persisted because two generations of officials and policymakers have grossly underestimated our ability to prevent adversaries from attacking the hardware and software of nuclear-equipped missiles before or after they are launched.

The systems that deliver these warheads to their targets fall into three major categories, collectively known as the nuclear triad. It consists of submarine-launched ballistic missiles (SLBMs), ground-launched intercontinental ballistic missiles (ICBMs), and bombs launched from strategic bombers, including cruise missiles. About half of the United States’ active arsenal is carried on the Navy’s 14 nuclear Trident II ballistic-missile submarines, which are on constant patrol in the Atlantic and Pacific oceans. The ground-launched missiles are called Minuteman III, a 50-year-old system that the U.S. Air Force describes as the “cornerstone of the free world.” Approximately 400 ICBMs are siloed in ready-to-launch configurations across Montana, North Dakota, and Wyoming. Recently, under a vast program known as Sentinel, the U.S. Department of Defense embarked on a plan to replace the Minuteman IIIs at an estimated cost of US $140 billion.

Each SLBM and ICBM can be equipped with multiple independently targetable reentry vehicles, or MIRVs. These are aerodynamic shells, each containing a nuclear warhead, that can steer themselves with great accuracy to targets established in advance of their launch. Trident II can carry as many as 12 MIRVs, although to stay within treaty constraints, the U.S. Navy limits the number to about four. Today the United States has about 1,770 warheads deployed in the sea, in the ground, or on strategic bombers.

While civilian rockets and some military systems carry bidirectional communications for telemetry and guidance, strategic weapons are deliberately and completely isolated. Because our technological ability to secure a radio channel is incomparably improved, a secure monodirectional link that would allow the U.S. president to abort a mission in case of accident or reconciliation is possible today.

U.S. Air Force technicians work on a Minuteman III’s Multiple Independently-targetable Reentry Vehicle system. The reentry vehicles are the black cones.

U.S. Air Force

ICBMs launched from the continental United States would take about 30 minutes to reach Russia; SLBMs would reach targets there in about half that time. During the 5-minute boost phase that lifts the rocket above the atmosphere, controllers could contact the airframe through ground-, sea-, or space-based (satellite) communication channels. After the engines shut down, the missile continues on a 20- or 25-minute (or less for SLBMs) parabolic arc, governed entirely by Newtonian mechanics. During that time, both terrestrial and satellite communications are still possible. However, as the reentry vehicle containing the warhead enters the atmosphere, a plasma sheaths the vehicle. That plasma blocks reception of radio waves, so during the reentry and descent phases, which combined last about a minute, receipt of abort instructions would only be possible after the plasma sheaths subside. What that means in practical terms is that there would be a communications window of only a few seconds before detonation, and probably only with space-borne transmitters.
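The communications windows described above can be laid out as a simple timeline. The Python sketch below is only a rough model under stated assumptions: the 24-minute midcourse duration and the 5-second final window are illustrative picks within the ranges given in the text, and the function and phase names are hypothetical.

```python
# Rough ICBM flight timeline, in seconds after launch (illustrative model).
BOOST_S = 5 * 60        # boost phase, about 5 minutes per the article
MIDCOURSE_S = 24 * 60   # assumed; the article gives 20 to 25 minutes
TERMINAL_S = 60         # reentry plus descent, about a minute
FINAL_WINDOW_S = 5      # assumed length of the "few seconds" after blackout


def link_status(t_s):
    """Return (phase, reachable channels) at t_s seconds after launch."""
    if t_s < 0:
        raise ValueError("time after launch must be non-negative")
    if t_s < BOOST_S:
        return "boost", ("ground", "sea", "satellite")
    if t_s < BOOST_S + MIDCOURSE_S:
        return "midcourse", ("terrestrial", "satellite")
    end_s = BOOST_S + MIDCOURSE_S + TERMINAL_S
    if t_s < end_s - FINAL_WINDOW_S:
        # Plasma around the reentry vehicle blocks radio reception.
        return "reentry blackout", ()
    if t_s < end_s:
        return "final descent", ("satellite",)
    return "impact", ()


for t in (60, 600, 1750, 1797):
    phase, channels = link_status(t)
    print(f"t = {t:4d} s: {phase}, channels: {', '.join(channels) or 'none'}")
```

Under these assumptions, nearly all of the flight time is reachable, and the only gap is the blackout just before the final few seconds.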

There are several alternative approaches to the design and implementation of this safety mechanism. Satellite-navigation beacons such as GPS, for example, transmit signals in the L-band and decode terrestrial and near-earth messages at about 50 bits per second, which is more than enough for this purpose. Satellite-communication systems, as another example, compensate for weather, terrain, and urban canyons with specialized K-band beamforming antennas and adaptive noise-resistant modulation techniques, like spread spectrum, with data rates measured in megabits per second (Mb/s).

For either kind of signal, the received-carrier strength would be about –100 decibels relative to 1 milliwatt (dBm); anything above that level, as it presumably would be at or near the missile’s apogee, would improve reliability without compromising security. The upshot is that the technology needed to implement this protection scheme—even for an abort command issued in the last few seconds of the missile’s trajectory—is available now. Today we understand how to reliably receive extremely low-powered satellite signals, reject interference and noise, and encode messages, using such techniques as symmetric cryptography so that they are sufficiently indecipherable for this application.
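To put a received-carrier level of this kind in absolute terms, the Python sketch below converts between dBm (decibels relative to one milliwatt) and watts. It assumes the figure is read as about –100 dBm, which is in the range typical of weak satellite signals; the helper names are hypothetical.

```python
import math


def dbm_to_watts(dbm):
    """Convert a power level in dBm to watts (0 dBm is 1 milliwatt)."""
    return 10 ** (dbm / 10) / 1000


def watts_to_dbm(watts):
    """Convert a power in watts to dBm."""
    return 10 * math.log10(watts * 1000)


# Assumed reading of the received-carrier level: about -100 dBm.
received_w = dbm_to_watts(-100)
print(f"-100 dBm is about {received_w:.1e} W")

# Every additional 10 dB of margin is a tenfold increase in received power.
print(f"-90 dBm is about {dbm_to_watts(-90):.1e} W")
```

On this reading the receiver is working with roughly a tenth of a picowatt, which is why the text stresses reliable reception of extremely low-powered signals.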

The signals, codes, and disablement protocols can be dynamically programmed immediately prior to launch. Even if an adversary were able to see the digital design, they would not know which key to use or how to implement it. Given all this, we believe that the ability to disarm a launched warhead should be included in the Pentagon’s extension of the controversial Sentinel modernization program.
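One way to picture a per-launch symmetric key is the sketch below. It is an illustration only, not a description of any fielded system. It uses a keyed message authentication code (HMAC-SHA256 from Python's standard library) rather than encryption, so it shows how a receiver could check that a command is genuine, not how the command would be kept secret. The function names, the command string, and the counter-based replay check are all assumptions.

```python
import hashlib
import hmac
import secrets


def new_launch_key() -> bytes:
    """Generate a fresh 256-bit key, standing in for one loaded just before launch."""
    return secrets.token_bytes(32)


def sign_command(key: bytes, command: bytes, counter: int) -> bytes:
    """Compute an authentication tag over a message counter plus the command."""
    message = counter.to_bytes(8, "big") + command
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_command(key: bytes, command: bytes, counter: int,
                   tag: bytes, last_counter: int) -> bool:
    """Accept a command only if it is fresh and its tag matches the shared key."""
    if counter <= last_counter:
        return False  # stale or replayed message
    expected = sign_command(key, command, counter)
    # compare_digest runs in constant time to avoid leaking tag bytes
    return hmac.compare_digest(expected, tag)


key = new_launch_key()
tag = sign_command(key, b"DISARM", counter=1)
accepted = verify_command(key, b"DISARM", 1, tag, last_counter=0)
```

Knowing the algorithm does not help an adversary here: without the key, a valid tag cannot be produced, and a recorded message cannot simply be replayed because its counter is no longer fresh.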

What exactly would happen with the missile if a deactivate message were sent? That would depend on where the missile was in its trajectory. The message could instruct the rocket to self-destruct on ascent, redirect the rocket into outer space, or disarm the payload before reentry or during descent.

Of course, all of these scenarios presume that the microelectronics platform underpinning the missile and weapon is secure and has not been tampered with. According to the Government Accountability Office, “the primary domestic source of microelectronics for nuclear weapons components is the Microsystems Engineering, Sciences, and Applications (MESA) Complex at Sandia National Laboratories in New Mexico.” Thanks to Sandia and other laboratories, there are significant physical barriers to microelectronic tampering. These could be enhanced with recent design advances that promote semiconductor supply-chain security.

Towards that end, Joe Costello, the founder and former CEO of the semiconductor software giant Cadence Design Systems, and a Kaufman Award winner, told us that there are many security measures and layers of device protection that simply did not exist as recently as a decade ago. He said, “We have the opportunity, and the duty, to protect our national security infrastructure in ways that were inconceivable when nuclear fail-safe policy was being made. We know what to do, from design to manufacturing. But we’re stuck with century-old thinking and decades-old technology. This is a transcendent risk to our future.”

Kissinger concluded his classic treatise by stating that “Our dilemma has been defined as the alternative of Armageddon or defeat without war. We can overcome the paralysis induced by such a prospect only by creating other alternatives both in our diplomacy and our military policy.” Indeed, the recall or deactivation of nuclear weapons post-launch, but before detonation, is imperative to the national security of the United States and the preservation of human life on the planet.

-

IEEE’s Let’s Make Light Competition Returns to Tackle Illumination Gap

by Willie D. Jones on 28. September 2024. at 18:00

In economically advantaged countries, it’s hard to imagine a time when electric lighting wasn’t available in nearly every home, business, and public facility. But according to the World Economic Forum, the sun remains the primary light source for more than 1 billion people worldwide.

Known as light poverty, the lack of access to reliable, adequate, artificial light is experienced by many of the world’s poorest people. They rely on unsafe, inefficient lighting sources such as candles and kerosene lamps to perform tasks such as studying, cooking, working, and doing household chores after dusk.

Overcoming the stark contrast in living conditions is the focus of IEEE Smart Lighting’s Let’s Make Light competition.

Open to anyone 18 or older, the contest seeks innovative lighting technologies that can be affordable, accessible, and sustainable for people now living in extreme poverty.

The entry that best responds to the challenge—developing a lighting system that is reliable and grid-independent and can be locally manufactured and repaired—will be awarded a US $3,000 prize. The second prize is $2,000, and the third-place finisher receives $1,000.

The deadline for submissions is 1 November.

The contest’s origin

The Let’s Make Light competition was born out of a presentation on global lighting issues, including light poverty, given to IEEE Life Fellow John Nelson, then chair of IEEE Smart Village, and IEEE Fellow Georges Zissis, former chair of the IEEE Future Directions Committee.

Wanting to know more about light poverty, Nelson forwarded the presentation to Toby Cumberbatch, who has extensive experience in developing practical solutions for communities facing the issue. Cumberbatch, an IEEE senior member, is a professor emeritus of electrical engineering at the Cooper Union, in New York City. For years, he taught his first-year engineering students how to create technology to help underserved communities.

Cumberbatch’s candid response was that the ideas presented didn’t adequately address the needs of impoverished end users he and his students had been trying to help.

That led Zissis to create the Let’s Make Light competition in hopes that it would ignite a spark in the larger IEEE community to develop technologies that would truly serve those who need it most. He appointed Cumberbatch as co-chair of the competition committee.

Understanding the wealth gap

Last year’s entries highlighted a significant gap in understanding the factors behind light poverty, Cumberbatch says. The factors include limited electrical grid access and the inability to afford all but the most rudimentary products. Cumberbatch says he and his students have even encountered communities with nonmonetary economies.

Past entries have failed to address the core challenge of providing practical and user-friendly lighting solutions.

Reflecting on some recent submissions, Cumberbatch noted a fundamental disconnect. “The entries included charging stations for electric vehicles and proposals to use lasers to light streets,” he says. “A winning design has to be usable by people who don’t even know what an on-off switch is.”

To ensure this year’s contestants better address the problem, IEEE Future Directions released a video illustrating the realities of poverty and the essential qualities that a successful lighting solution must possess, such as being safe, clean, accessible, and affordable.

“With the right resources,” the video’s narrator says, “people living in these remote communities will create new and better ways to work and live their lives.”

For more details, visit the Let’s Make Light competition’s website.

-

Video Friday: ICRA Turns 40

by Evan Ackerman on 27. September 2024. at 16:00

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We also post a weekly calendar of upcoming robotics events for the next few months. Please send us your events for inclusion.

IROS 2024: 14–18 October 2024, ABU DHABI, UAE

ICSR 2024: 23–26 October 2024, ODENSE, DENMARK

Cybathlon 2024: 25–27 October 2024, ZURICH

Humanoids 2024: 22–24 November 2024, NANCY, FRANCE

Enjoy today’s videos!

The interaction between humans and machines is gaining increasing importance due to the advancing degree of automation. This video showcases the development of robotic systems capable of recognizing and responding to human wishes.

By Jana Jost, Sebastian Hoose, Nils Gramse, Benedikt Pschera, and Jan Emmerich from Fraunhofer IML

[ Fraunhofer IML ]

Humans are capable of continuously manipulating a wide variety of deformable objects into complex shapes, owing largely to our ability to reason about material properties as well as our ability to reason in the presence of geometric occlusion in the object’s state. To study the robotic systems and algorithms capable of deforming volumetric objects, we introduce a novel robotics task of continuously deforming clay on a pottery wheel, and we present a baseline approach for tackling such a task by learning from demonstration.

By Adam Hung, Uksang Yoo, Jonathan Francis, Jean Oh, and Jeffrey Ichnowski from CMU Robotics Institute

[ Carnegie Mellon University Robotics Institute ]

Suction-based robotic grippers are common in industrial applications due to their simplicity and robustness, but [they] struggle with geometric complexity. Grippers that can handle varied surfaces as easily as traditional suction grippers would be more effective. Here we show how a fractal structure allows suction-based grippers to increase conformability and expand approach angle range.

By Patrick O’Brien, Jakub F. Kowalewski, Chad C. Kessens, and Jeffrey Ian Lipton from Northeastern University Transformative Robotics Lab

We introduce a newly developed robotic musician designed to play an acoustic guitar in a rich and expressive manner. Unlike previous robotic guitarists, our Expressive Robotic Guitarist (ERG) is designed to play a commercial acoustic guitar while controlling a wide dynamic range, millisecond-level note generation, and a variety of playing techniques such as strumming, picking, overtones, and hammer-ons.

By Ning Yang, Amit Rogel, and Gil Weinberg from Georgia Tech

[ Georgia Tech ]

The iCub project was initiated in 2004 by Giorgio Metta, Giulio Sandini, and David Vernon to create a robotic platform for embodied cognition research. The main goals of the project were to design a humanoid robot, named iCub, to create a community by leveraging open-source licensing, and to implement several basic elements of artificial cognition and developmental robotics. More than 50 iCubs have been built and used worldwide for various research projects.

[ Istituto Italiano di Tecnologia ]

In our video, we present SCALER-B, a versatile multimodal quadruped climbing robot capable of standing up, bipedal locomotion, bipedal climbing, and pull-ups with two-finger grippers.

By Yusuke Tanaka, Alexander Schperberg, Alvin Zhu, and Dennis Hong from UCLA

[ Robotics Mechanical Laboratory at UCLA ]

This video explores Waseda University’s innovative journey in developing wind instrument-playing robots, from automated performance to interactive musical engagement. Through demonstrations of technical advancements and collaborative performances, the video illustrates how Waseda University is pushing the boundaries of robotics, blending technology and artistry to create interactive robotic musicians.

By Jia-Yeu Lin and Atsuo Takanishi from Waseda University

This video presents a brief history of robot painting projects with the intention of educating viewers about the specific, core robotics challenges that people developing robot painters face. We focus on four robotics challenges: controls, the simulation-to-reality gap, generative intelligence, and human-robot interaction. We show how various projects tackle these challenges with quotes from experts in the field.

By Peter Schaldenbrand, Gerry Chen, Vihaan Misra, Lorie Chen, Ken Goldberg, and Jean Oh from CMU

[ Carnegie Mellon University ]

The wheeled humanoid neoDavid is one of the most complex humanoid robots worldwide. All finger joints can be controlled individually, giving the system exceptional dexterity. neoDavid’s Variable Stiffness Actuators (VSAs) enable very high performance in tasks with fast collisions, highly energetic vibrations, or explosive motions, such as hammering, using power tools (e.g., a drill-hammer), or throwing a ball.

[ DLR Institute of Robotics and Mechatronics ]

This paper introduces LG Electronics’ journey to commercialize robot navigation technology in areas such as homes, public spaces, and factories, and discusses the technical challenges ahead in robot navigation. With the vision of a ‘Zero Labor Home,’ the next smart-home agent robot will bring further innovation to our lives through advances in spatial AI, i.e., the combination of robot navigation and AI technology.

By Hyoung-Rock Kim, DongKi Noh and Seung-Min Baek from LG

[ LG ]

HILARE stands for: Heuristiques Intégrées aux Logiciels et aux Automatismes dans un Robot Evolutif. The HILARE project started at the end of 1977 at LAAS (Laboratoire d’Automatique et d’Analyse des Systèmes at that time) under the leadership of Georges Giralt. The video features the HILARE robot and explains the project.

By Aurelie Clodic, Raja Chatila, Marc Vaisset, Matthieu Herrb, Stephy Le Foll, Jerome Lamy, and Simon Lacroix from LAAS/CNRS (Note that the video narration is in French with English subtitles.)

[ LAAS/CNRS ]

Humanoid legged locomotion is versatile, but typically used for reaching nearby targets. Employing a personal transporter (PT) designed for humans, such as a Segway, offers an alternative for humanoids navigating the real world, enabling them to switch from walking to wheeled locomotion for covering larger distances, similar to humans. In this work, we develop control strategies that allow humanoids to operate PTs while maintaining balance.

By Vidyasagar Rajendran, William Thibault, Francisco Javier Andrade Chavez, and Katja Mombaur from University of Waterloo

Motion planning, particularly in tight settings, is a key problem in robotics and manufacturing. One infamous example of a difficult, tight motion-planning problem is the Alpha Puzzle. We present a first demonstration in the real world of an Alpha Puzzle solution with a Universal Robots UR5e, using a solution path generated from our previous work.

By Dror Livnat, Yuval Lavi, Michael M. Bilevich, Tomer Buber, and Dan Halperin from Tel Aviv University

Interaction between humans and their environment has been a key factor in the evolution and the expansion of intelligent species. Here we present methods to design and build an artificial environment through interactive robotic surfaces.

By Fabio Zuliani, Neil Chennoufi, Alihan Bakir, Francesco Bruno, and Jamie Paik from EPFL

[ EPFL Reconfigurable Robotics Lab ]