IEEE Spectrum

-

Video Friday: Robots With Knives

by Erico Guizzo on 17. May 2024. at 10:00

Greetings from the IEEE International Conference on Robotics and Automation (ICRA) in Yokohama, Japan! We hope you’ve been enjoying our short videos on TikTok, YouTube, and Instagram. They are just a preview of our in-depth ICRA coverage, and over the next several weeks we’ll have lots of articles and videos for you. In today’s edition of Video Friday, we bring you a dozen of the most interesting projects presented at the conference.

Enjoy today’s videos, and stay tuned for more ICRA posts!

Upcoming robotics events for the next few months:

RoboCup 2024: 17–22 July 2024, EINDHOVEN, NETHERLANDS

ICSR 2024: 23–26 October 2024, ODENSE, DENMARK

Cybathlon 2024: 25–27 October 2024, ZURICH, SWITZERLAND

Please send us your events for inclusion.

The following two videos are part of the “Cooking Robotics: Perception and Motion Planning” workshop, which explored “the new frontiers of ‘robots in cooking,’ addressing various scientific research questions, including hardware considerations, key challenges in multimodal perception, motion planning and control, experimental methodologies, and benchmarking approaches.” The workshop featured robots handling food items like cookies, burgers, and cereal, and the two robots seen in the videos below used knives to slice cucumbers and cakes. You can watch all workshop videos here.

“SliceIt!: Simulation-Based Reinforcement Learning for Compliant Robotic Food Slicing,” by Cristian C. Beltran-Hernandez, Nicolas Erbetti, and Masashi Hamaya from OMRON SINIC X Corporation, Tokyo, Japan.

Cooking robots can enhance the home experience by reducing the burden of daily chores. However, these robots must perform their tasks dexterously and safely in shared human environments, especially when handling dangerous tools such as kitchen knives. This study focuses on enabling a robot to autonomously and safely learn food-cutting tasks. More specifically, our goal is to enable a collaborative robot or industrial robot arm to perform food-slicing tasks by adapting to varying material properties using compliance control. Our approach involves using Reinforcement Learning (RL) to train a robot to compliantly manipulate a knife, by reducing the contact forces exerted by the food items and by the cutting board. However, training the robot in the real world can be inefficient, and dangerous, and result in a lot of food waste. Therefore, we proposed SliceIt!, a framework for safely and efficiently learning robot food-slicing tasks in simulation. Following a real2sim2real approach, our framework consists of collecting a few real food slicing data, calibrating our dual simulation environment (a high-fidelity cutting simulator and a robotic simulator), learning compliant control policies on the calibrated simulation environment, and finally, deploying the policies on the real robot.

“Cafe Robot: Integrated AI Skillset Based on Large Language Models,” by Jad Tarifi, Nima Asgharbeygi, Shuhei Takamatsu, and Masataka Goto from Integral AI in Tokyo, Japan, and Mountain View, Calif., USA.

The cafe robot engages in natural language interaction to receive orders and subsequently prepares coffee and cakes. Each action involved in making these items is executed using AI skills developed by Integral, including Integral Liquid Pouring, Integral Powder Scooping, and Integral Cutting. The dialogue for making coffee, as well as the coordination of each action based on the dialogue, is facilitated by the Integral Task Planner.

“Autonomous Overhead Powerline Recharging for Uninterrupted Drone Operations,” by Viet Duong Hoang, Frederik Falk Nyboe, Nicolaj Haarhøj Malle, and Emad Ebeid from University of Southern Denmark, Odense, Denmark.

We present a fully autonomous self-recharging drone system capable of long-duration sustained operations near powerlines. The drone is equipped with a robust onboard perception and navigation system that enables it to locate powerlines and approach them for landing. A passively actuated gripping mechanism grasps the powerline cable during landing after which a control circuit regulates the magnetic field inside a split-core current transformer to provide sufficient holding force as well as battery recharging. The system is evaluated in an active outdoor three-phase powerline environment. We demonstrate multiple contiguous hours of fully autonomous uninterrupted drone operations composed of several cycles of flying, landing, recharging, and takeoff, validating the capability of extended, essentially unlimited, operational endurance.

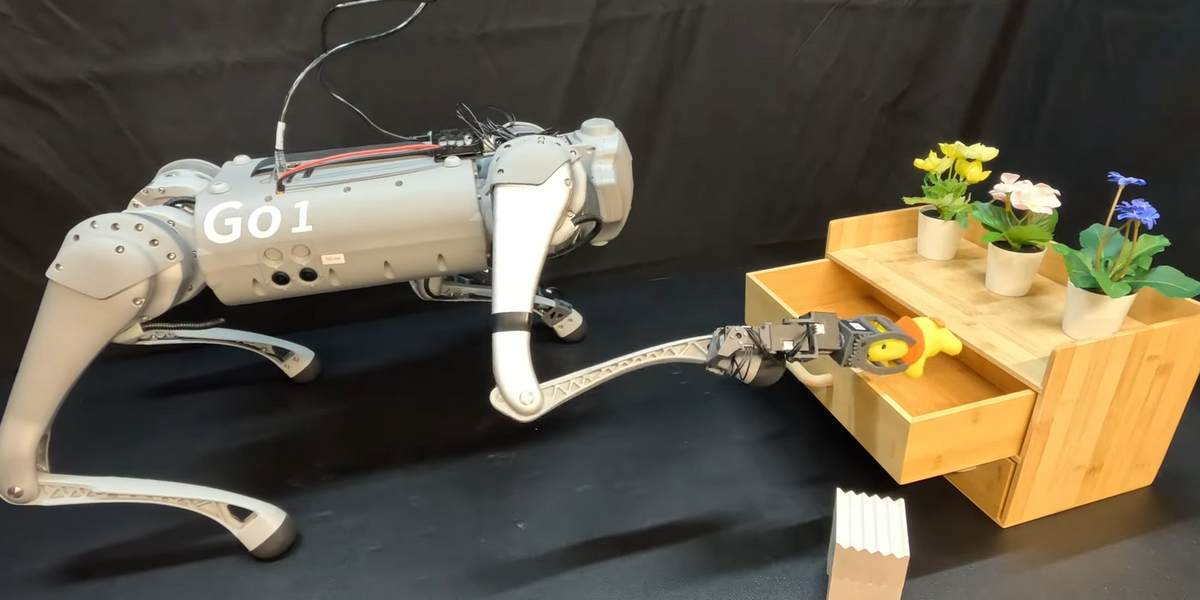

“Learning Quadrupedal Locomotion With Impaired Joints Using Random Joint Masking,” by Mincheol Kim, Ukcheol Shin, and Jung-Yup Kim from Seoul National University of Science and Technology, Seoul, South Korea, and Robotics Institute, Carnegie Mellon University, Pittsburgh, Pa., USA.

Quadrupedal robots have played a crucial role in various environments, from structured environments to complex harsh terrains, thanks to their agile locomotion ability. However, these robots can easily lose their locomotion functionality if damaged by external accidents or internal malfunctions. In this paper, we propose a novel deep reinforcement learning framework to enable a quadrupedal robot to walk with impaired joints. The proposed framework consists of three components: 1) a random joint masking strategy for simulating impaired joint scenarios, 2) a joint state estimator to predict an implicit status of current joint condition based on past observation history, and 3) progressive curriculum learning to allow a single network to conduct both normal gait and various joint-impaired gaits. We verify that our framework enables the Unitree’s Go1 robot to walk under various impaired joint conditions in real world indoor and outdoor environments.
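As a rough illustration of the random joint masking idea described above, the sketch below picks a random subset of joints each training episode and zeroes their commands. It is a minimal sketch, not the authors' implementation: the abstract does not say how an impaired joint is modeled, so zeroing the command is an assumption, as are the 12-joint layout and the hypothetical names `sample_joint_mask` and `apply_joint_mask`.

```python
import random

NUM_JOINTS = 12  # assumption: a quadruped with 3 joints per leg


def sample_joint_mask(num_joints, max_impaired, rng):
    """Return a list of 0/1 flags; 0 marks a joint treated as impaired."""
    num_impaired = rng.randint(0, max_impaired)
    impaired = set(rng.sample(range(num_joints), num_impaired))
    return [0 if j in impaired else 1 for j in range(num_joints)]


def apply_joint_mask(joint_commands, mask):
    """Zero the command of every masked joint; leave the others unchanged."""
    return [cmd * flag for cmd, flag in zip(joint_commands, mask)]


# One simulated episode: sample a mask, then filter the policy's commands.
rng = random.Random(0)
mask = sample_joint_mask(NUM_JOINTS, max_impaired=2, rng=rng)
commands = [0.5] * NUM_JOINTS  # placeholder policy output
masked_commands = apply_joint_mask(commands, mask)
```

Resampling the mask at the start of every episode would expose a single policy to both healthy and impaired configurations, which is the general intent the abstract describes.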

“Synthesizing Robust Walking Gaits via Discrete-Time Barrier Functions With Application to Multi-Contact Exoskeleton Locomotion,” by Maegan Tucker, Kejun Li, and Aaron D. Ames from Georgia Institute of Technology, Atlanta, Ga., and California Institute of Technology, Pasadena, Calif., USA.

Successfully achieving bipedal locomotion remains challenging due to real-world factors such as model uncertainty, random disturbances, and imperfect state estimation. In this work, we propose a novel metric for locomotive robustness – the estimated size of the hybrid forward invariant set associated with the step-to-step dynamics. Here, the forward invariant set can be loosely interpreted as the region of attraction for the discrete-time dynamics. We illustrate the use of this metric towards synthesizing nominal walking gaits using a simulation in-the-loop learning approach. Further, we leverage discrete time barrier functions and a sampling-based approach to approximate sets that are maximally forward invariant. Lastly, we experimentally demonstrate that this approach results in successful locomotion for both flat-foot walking and multicontact walking on the Atalante lower-body exoskeleton.

“Supernumerary Robotic Limbs to Support Post-Fall Recoveries for Astronauts,” by Erik Ballesteros, Sang-Yoep Lee, Kalind C. Carpenter, and H. Harry Asada from MIT, Cambridge, Mass., USA, and Jet Propulsion Laboratory, California Institute of Technology, Pasadena, Calif., USA.

This paper proposes the utilization of Supernumerary Robotic Limbs (SuperLimbs) for augmenting astronauts during an Extra-Vehicular Activity (EVA) in a partial-gravity environment. We investigate the effectiveness of SuperLimbs in assisting astronauts to their feet following a fall. Based on preliminary observations from a pilot human study, we categorized post-fall recoveries into a sequence of statically stable poses called “waypoints”. The paths between the waypoints can be modeled with a simplified kinetic motion applied about a specific point on the body. Following the characterization of post-fall recoveries, we designed a task-space impedance control with high damping and low stiffness, where the SuperLimbs provide an astronaut with assistance in post-fall recovery while keeping the human in-the-loop scheme. In order to validate this control scheme, a full-scale wearable analog space suit was constructed and tested with a SuperLimbs prototype. Results from the experimentation found that without assistance, astronauts would impulsively exert themselves to perform a post-fall recovery, which resulted in high energy consumption and instabilities maintaining an upright posture, concurring with prior NASA studies. When the SuperLimbs provided assistance, the astronaut’s energy consumption and deviation in their tracking as they performed a post-fall recovery was reduced considerably.

“ArrayBot: Reinforcement Learning for Generalizable Distributed Manipulation through Touch,” by Zhengrong Xue, Han Zhang, Jingwen Cheng, Zhengmao He, Yuanchen Ju, Changyi Lin, Gu Zhang, and Huazhe Xu from Tsinghua Embodied AI Lab, IIIS, Tsinghua University; Shanghai Qi Zhi Institute; Shanghai AI Lab; and Shanghai Jiao Tong University, Shanghai, China.

We present ArrayBot, a distributed manipulation system consisting of a 16 × 16 array of vertically sliding pillars integrated with tactile sensors. Functionally, ArrayBot is designed to simultaneously support, perceive, and manipulate the tabletop objects. Towards generalizable distributed manipulation, we leverage reinforcement learning (RL) algorithms for the automatic discovery of control policies. In the face of the massively redundant actions, we propose to reshape the action space by considering the spatially local action patch and the low-frequency actions in the frequency domain. With this reshaped action space, we train RL agents that can relocate diverse objects through tactile observations only. Intriguingly, we find that the discovered policy can not only generalize to unseen object shapes in the simulator but also have the ability to transfer to the physical robot without any sim-to-real fine tuning. Leveraging the deployed policy, we derive more real world manipulation skills on ArrayBot to further illustrate the distinctive merits of our proposed system.

“SKT-Hang: Hanging Everyday Objects via Object-Agnostic Semantic Keypoint Trajectory Generation,” by Chia-Liang Kuo, Yu-Wei Chao, and Yi-Ting Chen from National Yang Ming Chiao Tung University, in Taipei and Hsinchu, Taiwan, and NVIDIA.

We study the problem of hanging a wide range of grasped objects on diverse supporting items. Hanging objects is a ubiquitous task that is encountered in numerous aspects of our everyday lives. However, both the objects and supporting items can exhibit substantial variations in their shapes and structures, bringing two challenging issues: (1) determining the task-relevant geometric structures across different objects and supporting items, and (2) identifying a robust action sequence to accommodate the shape variations of supporting items. To this end, we propose Semantic Keypoint Trajectory (SKT), an object agnostic representation that is highly versatile and applicable to various everyday objects. We also propose Shape-conditioned Trajectory Deformation Network (SCTDN), a model that learns to generate SKT by deforming a template trajectory based on the task-relevant geometric structure features of the supporting items. We conduct extensive experiments and demonstrate substantial improvements in our framework over existing robot hanging methods in the success rate and inference time. Finally, our simulation-trained framework shows promising hanging results in the real world.

“TEXterity: Tactile Extrinsic deXterity,” by Antonia Bronars, Sangwoon Kim, Parag Patre, and Alberto Rodriguez from MIT and Magna International Inc.

We introduce a novel approach that combines tactile estimation and control for in-hand object manipulation. By integrating measurements from robot kinematics and an image based tactile sensor, our framework estimates and tracks object pose while simultaneously generating motion plans in a receding horizon fashion to control the pose of a grasped object. This approach consists of a discrete pose estimator that tracks the most likely sequence of object poses in a coarsely discretized grid, and a continuous pose estimator-controller to refine the pose estimate and accurately manipulate the pose of the grasped object. Our method is tested on diverse objects and configurations, achieving desired manipulation objectives and outperforming single-shot methods in estimation accuracy. The proposed approach holds potential for tasks requiring precise manipulation and limited intrinsic in-hand dexterity under visual occlusion, laying the foundation for closed loop behavior in applications such as regrasping, insertion, and tool use.

“Out of Sight, Still in Mind: Reasoning and Planning about Unobserved Objects With Video Tracking Enabled Memory Models,” by Yixuan Huang, Jialin Yuan, Chanho Kim, Pupul Pradhan, Bryan Chen, Li Fuxin, and Tucker Hermans from University of Utah, Salt Lake City, Utah, Oregon State University, Corvallis, Ore., and NVIDIA, Seattle, Wash., USA.

Robots need to have a memory of previously observed, but currently occluded objects to work reliably in realistic environments. We investigate the problem of encoding object-oriented memory into a multi-object manipulation reasoning and planning framework. We propose DOOM and LOOM, which leverage transformer relational dynamics to encode the history of trajectories given partial-view point clouds and an object discovery and tracking engine. Our approaches can perform multiple challenging tasks including reasoning with occluded objects, novel objects appearance, and object reappearance. Throughout our extensive simulation and real world experiments, we find that our approaches perform well in terms of different numbers of objects and different numbers

“Open Source Underwater Robot: Easys,” by Michikuni Eguchi, Koki Kato, Tatsuya Oshima, and Shunya Hara from University of Tsukuba and Osaka University, Japan.

“Sensorized Soft Skin for Dexterous Robotic Hands,” by Jana Egli, Benedek Forrai, Thomas Buchner, Jiangtao Su, Xiaodong Chen, and Robert K. Katzschmann from ETH Zurich, Switzerland, and Nanyang Technological University, Singapore.

Conventional industrial robots often use two-fingered grippers or suction cups to manipulate objects or interact with the world. Because of their simplified design, they are unable to reproduce the dexterity of human hands when manipulating a wide range of objects. While the control of humanoid hands evolved greatly, hardware platforms still lack capabilities, particularly in tactile sensing and providing soft contact surfaces. In this work, we present a method that equips the skeleton of a tendon-driven humanoid hand with a soft and sensorized tactile skin. Multi-material 3D printing allows us to iteratively approach a cast skin design which preserves the robot’s dexterity in terms of range of motion and speed. We demonstrate that a soft skin enables firmer grasps and piezoresistive sensor integration enhances the hand’s tactile sensing capabilities.

-

Credentialing Adds Value to Training Programs

by Christine Cherevko on 16. May 2024. at 18:00

With careers in engineering and technology evolving so rapidly, a company’s commitment to upskilling its employees is imperative to their career growth. Maintaining the appropriate credentials—such as a certificate or digital badge that attests to successful completion of a specific set of learning objectives—can lead to increased job satisfaction, employee engagement, and higher salaries.

For many engineers, mostly in North America, completing a certain number of professional development hours and continuing-education units each year is required to maintain a professional engineering license.

Many companies have found that offering training and credentialing opportunities helps them stay competitive in today’s job marketplace. The programs encourage promotion from within—which helps reduce turnover and costly recruiting expenses for organizations. Employees with a variety of credentials are more engaged in industry-related initiatives and are more likely to take on leadership roles than their noncredentialed counterparts. Technical training programs also give employees the opportunity to enhance their technical skills and demonstrate their willingness to learn new ones.

One way to strengthen and elevate in-house technical training is through the IEEE Credentialing Program. For employers, a credential is an assurance of the quality of the education obtained. For learners, it is a source of pride, because they can share that their credentials have been verified by the world’s largest technical professional organization.

In addition to supporting engineering professionals in achieving their career goals, the certificates and digital badges available through the program help companies enhance the credibility of their training events, conferences, and courses. Also, most countries accept IEEE certificates towards their domestic continuing-education requirements for engineers.

Start earning your certificates and digital badges with these IEEE courses. Learn how your organization can offer credentials for your courses here.

-

High-Speed Rail Finally Coming to the U.S.

by Willie D. Jones on 16. May 2024. at 13:11

In late April, the Miami-based rail company Brightline Trains broke ground on a project that the company promises will give the United States its first dedicated, high-speed passenger rail service. The 350-kilometer (218-mile) corridor, which the company calls Brightline West, will connect Las Vegas to the suburbs of Los Angeles. Brightline says it hopes to complete the project in time for the 2028 Summer Olympic Games, which will take place in Los Angeles.

Brightline has chosen Siemens American Pioneer 220 engines that will run at speeds averaging 165 kilometers per hour, with an advertised top speed of 320 km/h. That average speed still falls short of the Eurostar network connecting London, Paris, Brussels, and Amsterdam (300 km/h), Germany’s Intercity-Express 3 service (330 km/h), and the world’s fastest train service, China’s Beijing-to-Shanghai regional G trains (350 km/h).
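For a sense of what those figures mean for a passenger, the short calculation below converts the corridor length and the two quoted speeds into end-to-end trip times. It is a back-of-the-envelope sketch that assumes the quoted average applies over the full 350-kilometer corridor and ignores station stops; the function name `travel_minutes` is illustrative only.

```python
CORRIDOR_KM = 350   # corridor length quoted by Brightline
AVERAGE_KMH = 165   # quoted average speed
TOP_KMH = 320       # advertised top speed


def travel_minutes(distance_km, speed_kmh):
    """Trip time in minutes at a constant speed."""
    return distance_km / speed_kmh * 60


avg_minutes = travel_minutes(CORRIDOR_KM, AVERAGE_KMH)
top_minutes = travel_minutes(CORRIDOR_KM, TOP_KMH)

print(f"At the average speed: about {avg_minutes:.0f} minutes")
print(f"At the top speed throughout: about {top_minutes:.0f} minutes")
```

Under these assumptions the trip takes a little over two hours at the average speed, roughly twice as long as it would if the train could hold its top speed for the whole route.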

There are currently only two rail lines in the U.S. that ever reach the 200 km/h mark, which is the unofficial minimum speed at which a train can be considered to be high-speed rail. Brightline, the company that is about to construct the L.A.-to-Las-Vegas Brightline West line, also operates a Miami-Orlando rail line that averages 111 km/h. The other is Amtrak’s Acela line between Boston and Washington, D.C.—and that line qualifies as high-speed rail for just 80 km of its 735-km route. That’s a consequence of the rail status quo in the United States, in which slower freight trains typically have right of way on shared rail infrastructure.

As Vaclav Smil, professor emeritus at the University of Manitoba, noted in IEEE Spectrum in 2018, there has long been hope that the United States would catch up with Europe, China, and Japan, where high-speed regional rail travel has long been a regular fixture. “In a rational world, one that valued convenience, time, low energy intensity and low carbon conversions, the high-speed electric train would always be the first choice for [intercity travel],” Smil wrote at the time. And yet, in the United States, funding and regulatory approval for such projects have been in short supply.

Now, Brightline West, as well as a few preexisting rail projects that are at some stage of development, such as the California High-Speed Rail Network and the Texas Central Line, could be a bellwether for an attitude shift that could—belatedly—put trains closer to equal footing with cars and planes for travelers in the continental United States.

The U.S. government, like many national governments, has pledged to reduce greenhouse gas emissions. Because that generally requires decarbonizing transportation and improving energy efficiency, trains, which can run on electricity generated from fossil-fuel as well as non-fossil-fuel sources, are getting a big push. As Smil noted in 2018, trains use a fraction of a megajoule of energy per passenger-kilometer, while a lone driver in even one of the most efficient gasoline-powered cars will use orders of magnitude more energy per passenger-kilometer.

Brightline and Siemens did not respond to inquiries by Spectrum seeking to find out what innovations they plan to introduce that would make the L.A.-to-Las Vegas passenger line run faster or perhaps use less energy than its Asian and European counterparts. But Karen E. Philbrick, executive director of the Mineta Transportation Institute at San Jose State University, in California, says that’s beside the point. She notes that the United States, having focused on cars for the better part of the past century, already missed the period when major innovations were being made in high-speed rail. “What’s important about Brightline West and, say, the California High-speed Rail project, is not how innovative they are, but the fact that they’re happening at all. I am thrilled to see the U.S. catching up.”

Maybe Brightline or other groups seeking to get Americans off the roadways and onto railways will be able to seize the moment and create high-speed rail lines connecting other intraregional population centers in the United States. With enough of those pieces in place, it might someday be possible to ride the rails from California to New York in a single day, in the same way train passengers in China can get from Beijing to Shanghai between breakfast and lunch.

-

Never Recharge Your Consumer Electronics Again?

by Stephen Cass on 15. May 2024. at 16:25

Stephen Cass: Hello and welcome to Fixing the Future, an IEEE Spectrum podcast where we look at concrete solutions to tough problems. I’m your host Stephen Cass, a senior editor at IEEE Spectrum. And before I start, I just wanted to tell you that you can get the latest coverage of Spectrum‘s most important beats, including AI, climate change, and robotics, by signing up for one of our free newsletters. Just go to spectrum.ieee.org/newsletters to subscribe.

We all love our mobile devices where the progress of Moore’s Law has meant we’re able to pack an enormous amount of computing power in something that’s small enough that we can wear it as jewelry. But their Achilles heel is power. They eat up battery life, requiring frequent battery changes or charging. One company that’s hoping to reduce our battery anxiety is Exeger, which wants to enable self-charging devices that convert ambient light into energy on the go. Here to talk about its so-called Powerfoyle solar cell technology is Exeger’s founder and CEO, Giovanni Fili. Giovanni, welcome to the show.

Giovanni Fili: Thank you.

Cass: So before we get into the details of the Powerfoyle technology, was I right in saying that the Achilles heel of our mobile devices is battery life? And if we could reduce or eliminate that problem, how would that actually influence the development of mobile and wearable tech beyond just not having to recharge as often?

Fili: Yeah. I mean, for sure, I think the global common problem or pain point is for sure battery anxiety in different ways, ranging from your mobile phone to your other portable devices, and of course, even EV like cars and all that. So what we’re doing is we’re trying to eliminate this or reduce or eliminate this battery anxiety by integrating— seamlessly integrating, I should say, a solar cell. So our solar cell can convert any light energy to electrical energy. So indoor, outdoor from any angle. We’re not angle dependent. And the solar cell can take the shape. It can look like leather, textile, brushed steel, wood, carbon fiber, almost anything, and can take light from all angles as well, and can be in different colors. It’s also very durable. So our idea is to integrate this flexible, thin film into any device and allow it to be self-powered, allowing for increased functionality in the device. Just look at the smartwatches. I mean, the first one that came, you could wear them for a few hours, and you had to charge them. And they packed them with more functionality. You still have to charge them every day. And you still have to charge them every day, regardless. But now, they’re packed with even more stuff. So as soon as you get more energy efficiency, you pack them with more functionality. So we’re enabling this sort of jump in functionality without compromising design, battery, sustainability, all of that. So yeah, so it’s been a long journey since I started working with this 17 years ago.

Cass: I actually wanted to ask about that. So how is Exeger positioned to attack this problem? Because it’s not like you’re the first company to try and do nice mobile charging solutions for mobile devices.

Fili: I can mention there, I think that the main thing that differentiates us from all other previous solutions is that we have invented a new electrode material, the anode and the cathode with a similar almost like battery. So we have anode, cathode. We have electrolytes inside. So this is a—

Cass: So just for readers who might not be familiar, a battery is basically you have an anode, which is the positive terminal—I hope I didn’t forget that—cathode, which is a negative terminal, and then you have an electrolyte between them in the battery, and then chemical reactions between these three components, and it can get kind of complicated, produce an electric potential between one side and the other. And in a solar cell, also there’s an anode and a cathode and so on. Have I got that right, my little, brief sketch?

Fili: Yeah. Yeah. Yeah. And so what we add to that architecture is we add one layer of titanium dioxide nanoparticles. Titanium dioxide is the white in white wall paint, toothpaste, sunscreen, all that. And it’s a very safe and abundant material. And we use that porous layer of titanium nanoparticles. And then we deposit a dye, a color, a pigment on this layer. And this dye can be red, black, blue, green, any kind of color. And the dye will then absorb the photons, excite electrons that are injected into the titanium dioxide layer and then collected by the anode and then conducted out to the cable. And now, we use the electrons to light the lamp or a motor or whatever we do with it. And then they turn back to the cathode on the other side and inside the cell. So the electrons goes the other way and the inner way. So the plus, you can say, go inside ions in the electrolytes. So it’s a regenerative system.

So our innovation is a new— I mean, all solar cells, they have electrodes to collect the electrons. If you have silicon wafers or whatever you have, right? And you know that all these solar cells that you’ve seen, they have silver lines crossing the surface. The silver lines are there because the conductivity is quite poor, funny enough, in these materials. So high resistance. So then you need to deposit the silver lines there, and they’re called current collectors. So you need to collect the current. Our innovation is a new electrode material that has 1,000 times better conductivity than other flexible electrode materials. That allows us as the only company in the world to eliminate the silver lines. And we print all our layers as well. And as you print in your house, you can print a photo, an apple with a bite in it, you can print the name, you can print anything you want. We can print anything we want, and it will also be converting light energy to electric energy. So a solar cell.

Cass: So the key part is that the color dye is doing that initial work of converting the light. Do different colors affect the efficiency? I did see on your site that it comes in all these kind of different colors, but. And I was thinking to myself, well, is the black one the best? Is the red one the best? Or is it relatively insensitive to the visible color that I see when I look at these dyes?

Fili: So you’re completely right there. So black would give you the most. And if you go to different colors, typically you lose like 20, 30 percent. But fortunately enough for us, over 50 percent of the consumer electronic market is black products. So that’s good. So I think that you asked me how we’re positioned. I mean, with our totally unique integration possibilities, imagine this super thin, flexible film that works all day, every day from morning to sunset, indoor, outdoor, can look like leather. So we’ve made like a leather bag, right? The leather bag is the solar cell. The entire bag is the solar cell. You wouldn’t see it. It just looks like a normal leather bag.

Cass: So when you talk about flexible, you actually mean this— so sometimes when people talk about flexible electronics, they mean it can be put into a shape, but then you’re not supposed to bend it afterwards. When you’re talking about flexible electronics, you’re talking about the entire thing remains flexible and you can use it flexibly instead of just you can conform it once to a shape and then you kind of leave it alone.

Fili: Correct. So we just recently released a hearing protector with 3M. This great American company with more than 60,000 products across the world. So we have a global exclusivity contract with them where they have integrated our bendable, flexible solar film in the headband. So the headband is the solar cell, right? And where you previously had to change disposable battery every second week, two batteries every second week, now you never need to change the battery again. We just recharge this small rechargeable battery indoor and outdoor, just continues to charge all the time. And they have added a lot of extra really cool new functionality as well. So we’re eliminating the need for disposable batteries. We’re saving millions and millions of batteries. We’re saving the end user, the contractor, the guy who uses them a lot of hassle to buy this battery, store them. And we increase reliability and functionality because they will always be charged. You can trust them that they always work. So that’s where we are totally unique. The solar cell is super durable. If we can be in a professional hearing protector to use on airports, construction sites, mines, whatever you use, factories, oil rig platforms, you can do almost anything. So I don’t think any other solar cell would be able to pass those durability tests that we did. It’s crazy.

Cass: So I have a question. It kind of it’s more appropriate from my experience with utility solar cells and things you put on roofs. But how many watts per square meter can you deliver, we’ll say, in direct sunlight?

Fili: So our focus is on indirect sunlight, like shade, suboptimal light conditions, because that’s where you would typically be with these products. But if you compare to more of a silicon, which is what you typically use for calculators and all that stuff. So we are probably around twice as what they deliver in this dark conditions, two to three times, depending. If you use glass, if you use flexible, we’re probably three times even more, but. So we don’t do full sunshine utility scale solar. But if you look at these products like the hearing protector, we have done a lot of headphones with Adidas and other huge brands, we typically recharge like four times what they use. So if you look at— if you go outside, not in full sunshine, but half sunshine, let’s say 50,000 lux, you’re probably talking at about 13, 14 minutes to charge one hour of listening. So yeah, so we have sold a few hundred thousand products over the last three years when we started selling commercially. And - I don’t know - I haven’t heard anyone who has charged since. I mean, surely someone has, but typically the user never need to charge them again, just charge themself.

Cass: Well, that’s right, because for many years, I went to CES, and I often would buy these, or acquire these, little solar cell chargers. And it was such a disappointing experience because they really would only work in direct sunlight. And even then, it would take a very long time. So I want to talk a little bit about, then, to get to that, what were some of the biggest challenges you had to overcome on the way to developing this tech?

Fili: I mean, this is the fourth commercial solar cell technology in the world after 110 or something years of research. I mean, the Americans, the Bell Laboratory sent the first silicon cell, I think it’s in like 1955 or something, to space. And then there’s been this constant development and trying to find, but to develop a new energy source is as close to impossible as you get, more or less. Everybody tried and everybody failed. We didn’t know that, luckily enough. So just the whole-- so when I try to explain this, I get this question quite a lot. Imagine you found out something really cool, but there’s no one to ask. There’s no book to read. You just realize, “Okay, I have to make like hundreds of thousands, maybe millions of experiments to learn. And all of them, except finally one, they will all fail. But that’s okay.” You will fail, fail, fail. And then, “Oh, here’s the solution. Something that works. Okay. Good.” So we had to build on just constant failing, but it’s okay because you’re in a research phase. So we had to. I mean, we started off with this new nanomaterials, and then we had to make components of these materials. And then we had to make solar cells of the components, but there were no machines either. We have had to invent all the machines from scratch as well to make these components and the solar cells and some of the nanomaterials. That was also tough. How do you design a machine for something that doesn’t exist? It’s pretty difficult specification to give to a machine builder. So in the end, we had to build our own machine building capacity here. We’re like 50 guys building machines, so.

But now, I mean, today we have over 300 granted patents, another 90 that will be approved soon. We have a complete machine park that’s proprietary. We are now building the largest solar cell factory— one of the largest solar cell factories in Europe. It’s already operational, phase one. Now we’re expanding into phase two. And we’re completely vertically integrated. We don’t source anything from Russia, China; never did. Only US, Japan, and Europe. We run the factories on 100 percent renewable energy. We have zero emissions to air and water. And we don’t have any rare earth metals, no strange stuff in it. It’s like it all worked out. And now we have signed, like I said, global exclusivity deal with 3M. We have a global exclusivity deal with the largest company in the world on computer peripherals, like mouse, keyboard, that stuff. They can only work with us for years. We have signed one of the large, the big fives, the Americans, the huge CE company. Can’t tell you yet the name. We have a globally exclusive deal for electronic shelf labels, the small price tags in the stores. So we have a global solution with Vision Group, that’s the largest. They have 50 percent of the world market as well. And they have Walmart, IKEA, Target, all these huge companies. So now it’s happening. So we’re rolling out, starting to deploy massive volumes later this year.

Cass: So I’ll talk a little bit about that commercial experience because you talked about you had to create verticals. I mean, in Spectrum, we do cover other startups which have had these— they’re kind of starting from scratch. And they develop a technology, and it’s a great demo technology. But then it comes that point where you’re trying to integrate in as a supplier or as a technology partner with a large commercial entity, which has very specific ideas and how things are to be manufactured and delivered and so on. So can you talk a little bit about what it was like adapting to these partners like 3M and what changes you had to make and what things you learned in that process where you go from, “Okay, we have a great product and we could make our own small products, but we want to now connect in as part of this larger supply chain.”

Fili: It’s a very good question and it’s extremely tough. It’s a tough journey, right? Like to your point, these are the largest companies in the world. They have their way. And one of the first really tough lessons that we learned was that one factory wasn’t enough. We had to build two factories to have redundancy in manufacturing. Because single source is bad. Single source, single factory, that’s really bad. So we had to build two factories and we had to show them we were ready, willing and able to be a supplier to them. Because one thing is the product, right? But the second thing is, are you worthy supplier? And that means how much money you have in the bank. Are you going to be here in two, three, four years? What’s your ISO certifications like? REACH, RoHS, Prop 65. What’s your LCA? What’s your view on this? Blah, blah, blah. Do you have professional supply chain? Did you do audits on your suppliers? But now, I mean, we’ve had audits here by five of the largest companies in the world. We’ve all passed them. And so then you qualify as a worthy supplier. Then comes your product integration work, like you mentioned. And I think it’s a lot about— I mean, that’s our main feature. The main unique selling point with Exeger is that we can integrate into other people’s products. Because when you develop this kind of crazy technology-- “Okay, so this is solar cell. Wow. Okay.” And it can look like anything. And it works all the time. And all the other stuff is sustainable and all that. Which product do you go for? So I asked myself—I’m an entrepreneur since the age of 15. I’ve started a number of companies. I lost so much money. I can’t believe it. And managed to earn a little bit more. But I realized, “Okay, how do you select? Where do you start? Which product?”

Okay, so I sat down. I was like, “When does it sell well? When do you see market success?” When something is important. When something is important, it’s going to work. It’s not the best tech. It has to be important enough. And then, you need distribution and scale and all that. Okay, how do you know if something is important? You can’t. Okay. What if you take something that’s already is— I mean, something new, you can’t know if it’s going to work. But if we can integrate into something that’s already selling in the billions of units per year, like headphones— I think this year, one billion headphones are going to be sold or something. Okay, apparently, obviously that’s important for people. Okay, let’s develop technology that can be integrated into something that’s already important and allow it to stay, keep all the good stuff, the design, the weight, the thickness, all of that, even improve the LCA better for the environment. And it’s self-powered. And it will allow the user to participate and help a little bit to a better world, right? With no charge cable, no charging in the wall, less batteries and all that. So our strategy was to develop such a strong technology so that we could integrate into these companies/partners products.

Cass: So I guess the question there is— so you come to a company, the company has its own internal development engineers. It’s got its own people coming up with product ideas and so on. How do you evangelize within a company to say, “Look, you get in the door, you show your demo,” to say, product manager who’s thinking of new product lines, “You guys should think about making products with our technology.” How do you evangelize that they think, “Okay, yeah, I’m going to spend the next six months of my life betting on these headphones, on this technology that I didn’t invent that I’m kind of trusting.” How do you get that internal buy-in with the internal engineers and the internal product developers and product managers?

Fili: That’s the Holy Grail, right? It’s very, very, very difficult. Takes a lot of time. It’s very expensive. And the point, I think you’re touching a little bit when you’re asking me now, because they don’t have a guy waiting to buy or a division or department waiting to buy this flexible indoor solar cell that can look like leather. They don’t have anyone. Who’s going to buy? Who’s the decision maker? There is not one. There’s a bunch, right? Because this will affect the battery people. This will affect the antenna people. This will affect the branding people. It will affect the mechanic people, etc., etc., etc. So there’s so many people that can say no. No one can say yes alone. All of them can say no alone. Any one of them can block the project, but to proceed, all of them have to say yes. So it’s a very, very tough equation. So that’s why when we realized this— this was another big learning that we had that we couldn’t go with the sales guy. We couldn’t go with two sales guys. We had to go with an entire team. So we needed to bring our design guy, our branding person, our mechanics person, our software engineer. We had to go like huge teams to be able to answer all the questions and mitigate and explain.

So we had to go both top down and explain to the head of product or head of sustainability, “Okay, if you have 100 million products out in five years and they’re going to be using 50 batteries per year, that’s 5 billion batteries per year. That’s not good, right? What if we can eliminate all these batteries? That’s good for sustainability.” “Okay. Good.” “That’s also good for total cost. We can lower total cost of ownership.” “Okay, that’s also good.” “And you can sell this and this and this way. And by the way, here’s a narrative we offer you. We have also made some assets, movies, pictures, texts. This is how other people talk about this.” But it’s a very, very tough start. How do you get the first big name in? And big companies, they have a lot to risk, a lot to lose as well. So my advice would be to start smaller. I mean, we started mainly due to COVID, to be honest. Because Sweden stayed open during COVID, which was great. We lived our lives almost like normal. But we couldn’t work with any international companies because they were all closed or no one went to the office. So we had to turn to Swedish companies, and we developed a few products during COVID. We launched like four or five products on the market with smaller Swedish companies, and we launched so much. And then we could just send these headphones to the large companies and tell them, “You know what? Here’s a headphone. Use it for a few months. We’ll call you later.” And then they call us that, “You know what? We have used them for three months. No one has charged. This is sick. It actually works.” We’re like, “Yeah, we know.” And then that just made it so much easier. And now anyone who wants to make a deal with us, they can just buy these products anywhere online or in-store across the whole world and try them for themselves.

And we send them also samples. They can buy, they can order from our website, like development kits. We have software, we have partnered up with Qualcomm, early semiconductor. All the big electronics companies, we’re now qualified partners with them. So all the electronics is powerful already. So now it’s very easy now to build prototypes if you want to test something. We have offices across the world. So now it’s much easier. But my advice to anyone who would want to start with this is try and get a few customers in. The important thing is that they also care about the project. If we go to one of these large companies, 3M, they have 60,000 products. If they have 60,001, yeah. But for us, it’s like the project. And we have managed to land it in a way. So it’s also important for them now because it just touches so many of their important areas that they work with, so.

Cass: So in terms of future directions for the technology, do you have a development pathway? What kind of future milestones are you hoping to hit?

Fili: For sure. So at the moment, we’re focusing on consumer electronics market, IoT, smart home. So I think the next big thing will be the smart workplace where you see huge construction sites and other areas where we connect the workers, anything from the smart helmet. You get hit in your head, how hard was it? I mean, why can’t we tell you that? That’s just ridiculous. There’s all these sensors already available. Someone just needs to power the helmet. Location services. Is the right person in the right place with the proper training or not? On the construction side, do you have the training to work with dynamite, for example, or heavy lifts or different stuff? So you can add the geofencing in different sites. You can add health data, digital health tracking, pulse, breathing, temperature, different stuff. Compliance, of course. Are you following all the rules? Are you wearing your helmet? Is the helmet buttoned? Are you wearing the proper other gear, whatever it is? Otherwise, you can’t start your engine, or you can’t go into this site, or you can’t whatever. I think that’s going to greatly improve the proactive safety and health a lot and increase profits for employers a lot too at the same time. In a few years, I think we’re going to see the American unions are going to be our best sales force. Because when they see the greatness of this whole system, they’re going to demand it in all tenders, all biggest projects. They’re going to say, “Hey, we want to have the connected worker safety stuff here.” Because you can just stream-- if you’re working, you can stream music, talk to your colleagues, enjoy connected safety without invading the privacy, knowing that you’re good. If you fall over, if you faint, if you get a heart attack, whatever, in a few seconds, the right people will know and they will take their appropriate actions. It’s just really, really cool, this stuff.

Cass: Well, it’ll be interesting to see how that turns out. But I’m afraid that’s all we have time for today, although this is fascinating. But today, so Giovanni, I want to thank you very much for coming on the show.

Fili: Thank you so much for having me.

Cass: So today we were talking with Giovanni Fili, who is Exeger’s founder and CEO, about their new flexible Powerfoyle solar cell technology. For IEEE Spectrum’s Fixing the Future, I’m Stephen Cass, and I hope you’ll join me next time.

-

How to Put a Data Center in a Shoebox

by Anna Herr on 15. May 2024. at 15:00

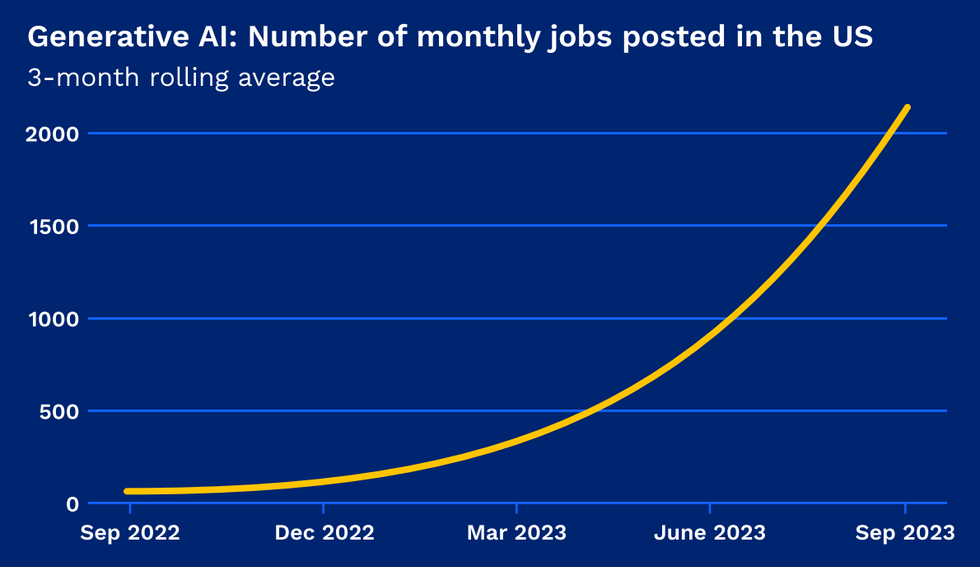

Scientists have predicted that by 2040, almost 50 percent of the world’s electric power will be used in computing. What’s more, this projection was made before the sudden explosion of generative AI. The amount of computing resources used to train the largest AI models has been doubling roughly every 6 months for more than the past decade. At this rate, by 2030 training a single artificial-intelligence model would take one hundred times as much computing resources as the combined annual resources of the current top ten supercomputers. Simply put, computing will require colossal amounts of power, soon exceeding what our planet can provide.
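To get a feel for the doubling rate quoted above, the short sketch below works out how quickly demand compounds. It assumes only the six-month doubling period stated in the text; the function names are illustrative and not taken from any cited study.

```python
import math

# From the text: training compute has been "doubling roughly every 6 months".
DOUBLING_PERIOD_YEARS = 0.5


def growth_factor(years, doubling_period=DOUBLING_PERIOD_YEARS):
    """Multiplicative growth after `years` of steady doubling."""
    return 2.0 ** (years / doubling_period)


def years_to_grow(factor, doubling_period=DOUBLING_PERIOD_YEARS):
    """Years of steady doubling needed to grow by `factor`."""
    return doubling_period * math.log2(factor)


if __name__ == "__main__":
    # Six years of doubling every six months is 12 doublings.
    print(f"Growth over 6 years: {growth_factor(6):,.0f}x")
    # A hundredfold increase needs log2(100) doublings, a bit over three years.
    print(f"Years to grow 100x: {years_to_grow(100):.2f}")
```

At this pace a hundredfold increase in training compute takes a little over three years, which is why even large efficiency gains in conventional hardware are absorbed quickly.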

One way to manage the unsustainable energy requirements of the computing sector is to fundamentally change the way we compute. Superconductors could let us do just that.

Superconductors offer the possibility of drastically lowering energy consumption because they do not dissipate energy when passing current. True, superconductors work only at cryogenic temperatures, requiring some cooling overhead. But in exchange, they offer virtually zero-resistance interconnects, digital logic built on ultrashort pulses that require minimal energy, and the capacity for incredible computing density due to easy 3D chip stacking.

Are the advantages enough to overcome the cost of cryogenic cooling? Our work suggests they most certainly are. As the scale of computing resources gets larger, the marginal cost of the cooling overhead gets smaller. Our research shows that starting at around 10¹⁶ floating-point operations per second (tens of petaflops) the superconducting computer handily becomes more power efficient than its classical cousin. This is exactly the scale of typical high-performance computers today, so the time for a superconducting supercomputer is now.

At Imec, we have spent the past two years developing superconducting processing units that can be manufactured using standard CMOS tools. A processor based on this work would be one hundred times as energy efficient as the most efficient chips today, and it would lead to a computer that fits a data center’s worth of computing resources into a system the size of a shoebox.

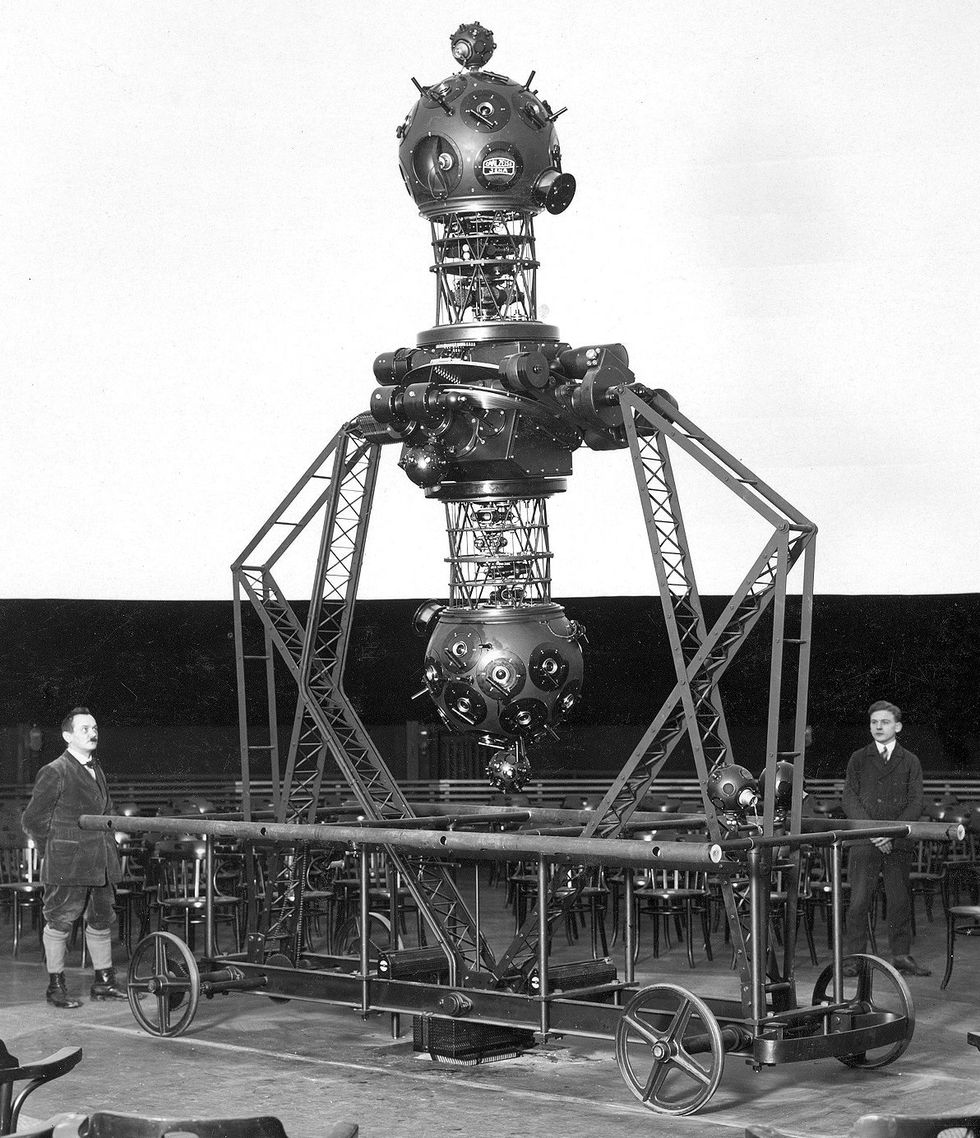

The Physics of Energy-Efficient Computation

Superconductivity—that superpower that allows certain materials to transmit electricity without resistance at low enough temperatures—was discovered back in 1911, and the idea of using it for computing has been around since the mid-1950s. But despite the promise of lower power usage and higher compute density, the technology couldn’t compete with the astounding advance of CMOS scaling under Moore’s Law. Research has continued through the decades, with a superconducting CPU demonstrated by a group at Yokohama National University as recently as 2020. However, as an aid to computing, superconductivity has stayed largely confined to the laboratory.

To bring this technology out of the lab and toward a scalable design that stands a chance of being competitive in the real world, we had to change our approach here at Imec. Instead of inventing a system from the bottom up—that is, starting with what works in a physics lab and hoping it is useful—we designed it from the top down—starting with the necessary functionality, and working directly with CMOS engineers and a full-stack development team to ensure manufacturability. The team worked not only on a fabrication process, but also software architectures, logic gates, and standard-cell libraries of logic and memory elements to build a complete technology.

The foundational ideas behind energy-efficient computation, however, were developed as far back as 1991. In conventional processors, much of the power consumed and heat dissipated comes from moving information among logic units, or between logic and memory elements, rather than from actual operations. Interconnects made of superconducting material, however, do not dissipate any energy. The wires have zero electrical resistance, and therefore little energy is required to move bits within the processor. This property of having extremely low energy losses holds true even at very high communication frequencies, where losses would skyrocket in ordinary interconnects.

Further energy savings come from the way logic is done inside the superconducting computer. Instead of the transistor, the basic element in superconducting logic is the Josephson junction.

A Josephson junction is a sandwich—a thin slice of insulating material squeezed between two superconductors. Connect the two superconductors, and you have yourself a Josephson-junction loop.

Under normal conditions, the insulating “meat” in the sandwich is so thin that it does not deter a supercurrent—the whole sandwich just acts as a superconductor. However, if you ramp up the current past a threshold known as a critical current, the superconducting “bread slices” around the insulator get briefly knocked out of their superconducting state. In this transition period, the junction emits a tiny voltage pulse, lasting just a picosecond and dissipating just 2 × 10⁻²⁰ joules, a hundred-billionth of what it takes to write a single bit of information into conventional flash memory.

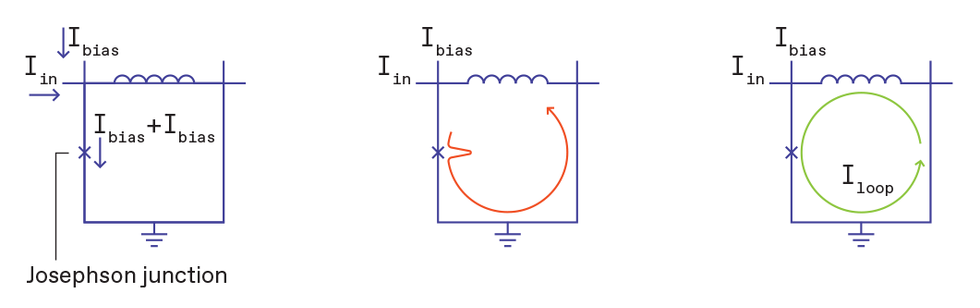

A single flux quantum develops in a Josephson-junction loop via a three-step process. First, a current just above the critical value is passed through the junction. The junction then emits a single-flux-quantum voltage pulse. The voltage pulse passes through the inductor, creating a persistent current in the loop. A Josephson junction is indicated by an x on circuit diagrams. Chris Philpot

The key is that, due to a phenomenon called magnetic flux quantization in the superconducting loop, this pulse is always exactly the same. It is known as a “single flux quantum” (SFQ) of magnetic flux, and it is fixed to have a value of 2.07 millivolt-picoseconds. Put an inductor inside the Josephson-junction loop, and the voltage pulse drives a current. Since the loop is superconducting, this current will continue going around the loop indefinitely, without using any further energy.
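The 2.07 millivolt-picosecond figure follows directly from fundamental constants, since the flux quantum is Planck’s constant divided by twice the electron charge. The sketch below checks that value. As a rough cross-check it also applies the common textbook estimate that one switching event dissipates about the critical current times the flux quantum. That estimate and the resulting critical current are assumptions for illustration, not numbers stated in this article.

```python
# Exact SI values of the constants (fixed by definition since 2019).
PLANCK_H = 6.62607015e-34             # joule-seconds
ELEMENTARY_CHARGE = 1.602176634e-19   # coulombs

# Magnetic flux quantum: Phi_0 = h / (2e), in webers (volt-seconds).
flux_quantum_wb = PLANCK_H / (2 * ELEMENTARY_CHARGE)

# One millivolt-picosecond is 1e-3 V * 1e-12 s = 1e-15 V*s.
flux_quantum_mv_ps = flux_quantum_wb / 1e-15

# Pulse energy quoted in the article.
PULSE_ENERGY_J = 2e-20

# Assumption (textbook estimate, not from the article):
# energy per switching event ~ I_c * Phi_0, so I_c ~ E / Phi_0.
implied_critical_current_ua = PULSE_ENERGY_J / flux_quantum_wb * 1e6

if __name__ == "__main__":
    print(f"Flux quantum: {flux_quantum_mv_ps:.3f} mV*ps")
    print(f"Implied critical current: {implied_critical_current_ua:.1f} microamperes")
```

Under that assumption, the quoted pulse energy corresponds to a critical current on the order of 10 microamperes.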

Logical operations inside the superconducting computer are made by manipulating these tiny, quantized voltage pulses. A Josephson-junction loop with an SFQ’s worth of persistent current acts as a logical 1, while a current-free loop is a logical 0.

To store information, the Josephson-junction-based version of SRAM in CPU cache also uses single flux quanta. To store one bit, two Josephson-junction loops need to be placed next to each other. An SFQ’s worth of persistent current in the left-hand loop is a memory element storing a logical 0, whereas no current in the left but a current in the right loop is a logical 1.
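The two encodings described above can be summarized in a small software model. This is only a bookkeeping sketch of which loop states map to which bit values. The class and function names are hypothetical, and nothing here models the underlying circuit physics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Loop:
    """A Josephson-junction loop holding 0 or 1 flux quanta of persistent current."""
    flux_quanta: int = 0

    def __post_init__(self):
        if self.flux_quanta not in (0, 1):
            raise ValueError("this sketch models only 0 or 1 flux quanta per loop")


def logic_value(loop: Loop) -> int:
    """Logic encoding: a loop with one SFQ is a 1, a current-free loop is a 0."""
    return loop.flux_quanta


@dataclass(frozen=True)
class MemoryCell:
    """Memory encoding: two adjacent loops, exactly one of which carries an SFQ."""
    left: Loop
    right: Loop

    @classmethod
    def store(cls, bit: int) -> "MemoryCell":
        if bit == 0:
            return cls(left=Loop(1), right=Loop(0))  # current in the left loop
        if bit == 1:
            return cls(left=Loop(0), right=Loop(1))  # current in the right loop
        raise ValueError("bit must be 0 or 1")

    def read(self) -> int:
        state = (self.left.flux_quanta, self.right.flux_quanta)
        if state == (1, 0):
            return 0
        if state == (0, 1):
            return 1
        raise ValueError(f"invalid memory state {state}")


if __name__ == "__main__":
    print(logic_value(Loop(1)), logic_value(Loop(0)))
    print(MemoryCell.store(0).read(), MemoryCell.store(1).read())
```

Note that in the memory encoding both bit values hold exactly one flux quantum, so a cell with no current in either loop is not a valid stored value in this model.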

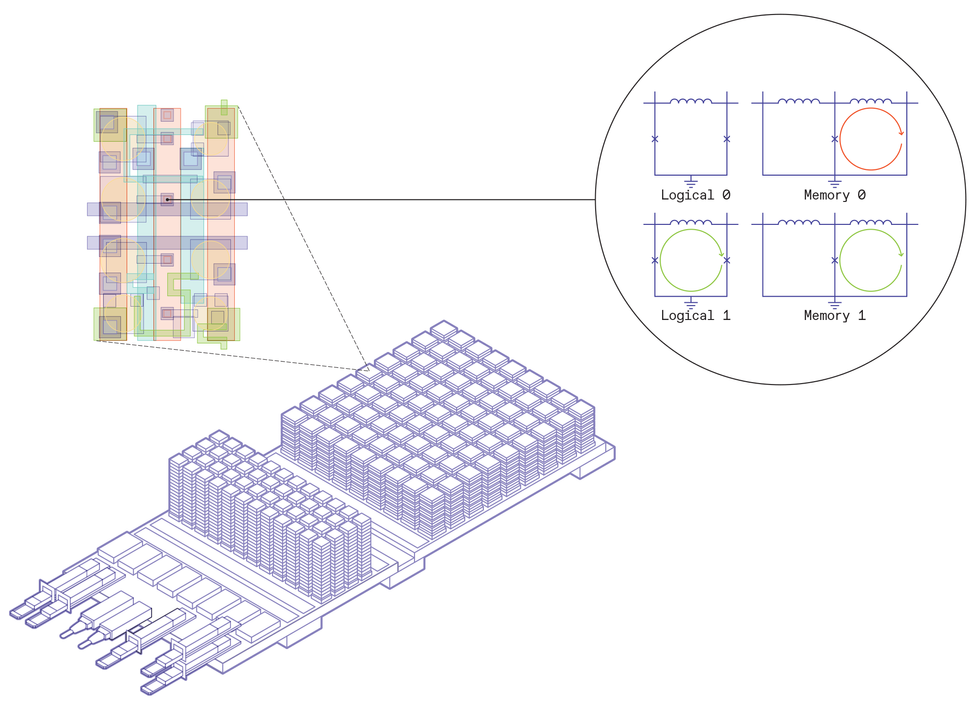

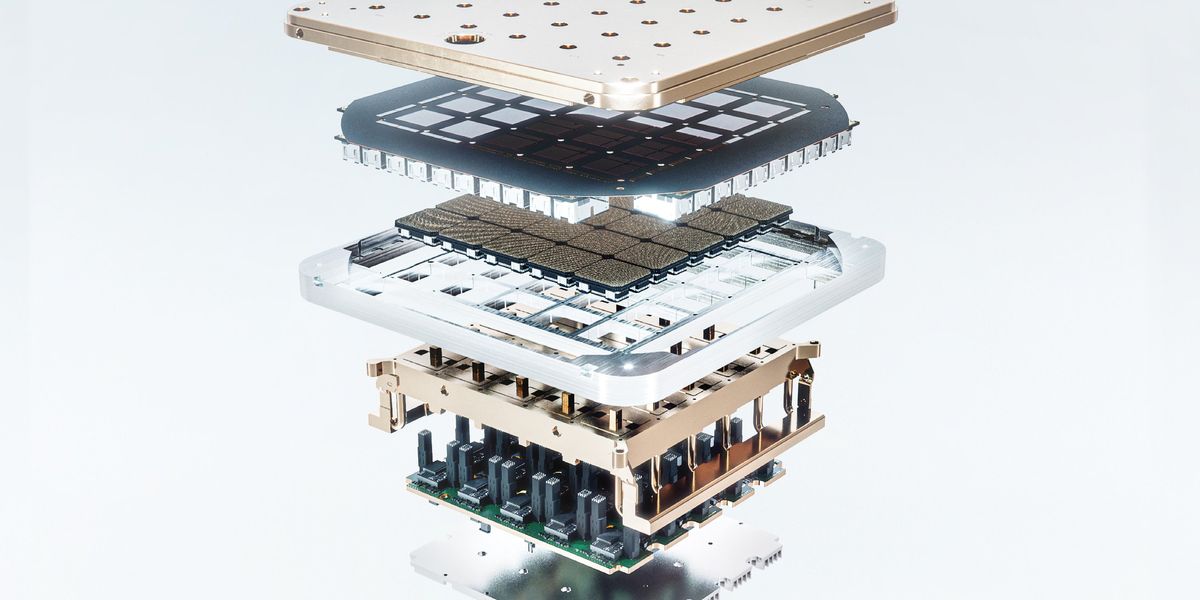

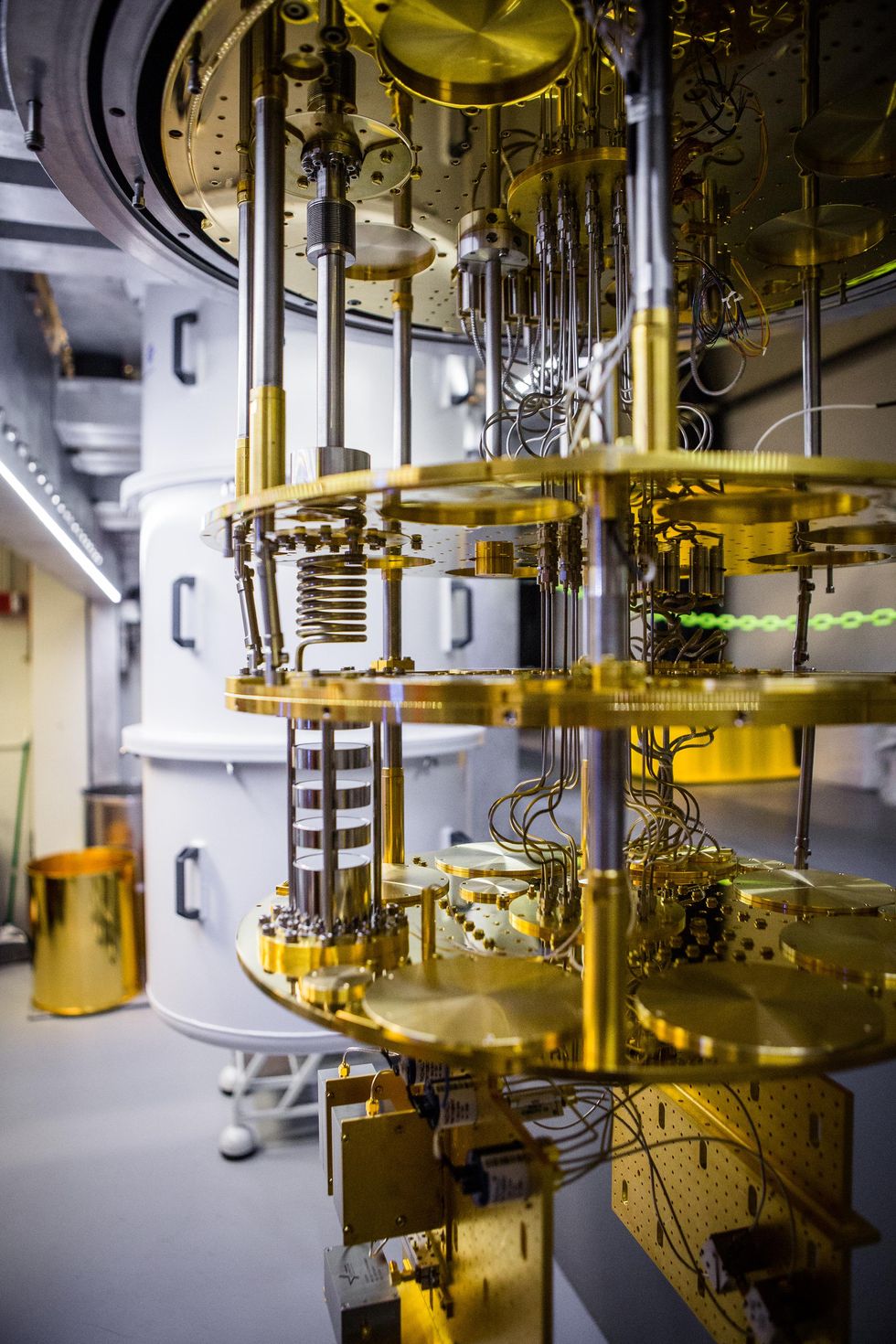

Designing a superconductor-based data center required full-stack innovation. Imec’s board design contains three main elements: the input and output, leading data to the room temperature world, the conventional DRAM, stacked high and cooled to 77 kelvins, and the superconducting processing units, also stacked, and cooled to 4 K. Inside the superconducting processing unit, basic logic and memory elements are laid out to perform computations. A magnification of the chip shows the basic building blocks: For logic, a Josephson-junction loop without a persistent current indicates a logical 0, while a loop with one single flux quantum’s worth of current represents a logical 1. For memory, two Josephson junction loops are connected together. An SFQ’s worth of persistent current in the left loop is a memory 0, and a current in the right loop is a memory 1. Chris Philpot

Progress Through Full-Stack Development

To go from a lab curiosity to a chip prototype ready for fabrication, we had to innovate the full stack of hardware. This came in three main layers: engineering the basic materials used, circuit development, and architectural design. The three layers had to go together—a new set of materials requires new circuit designs, and new circuit designs require novel architectures to incorporate them. Codevelopment across all three stages, with a strict adherence to CMOS manufacturing capabilities, was the key to success.

At the materials level, we had to step away from the previous lab-favorite superconducting material: niobium. While niobium is easy to model and behaves very well under predictable lab conditions, it is very difficult to scale down. Niobium is sensitive to both process temperature and its surrounding materials, so it is not compatible with standard CMOS processing. Therefore, we switched to the related compound niobium titanium nitride for our basic superconducting material. Niobium titanium nitride can withstand temperatures used in CMOS fabrication without losing its superconducting capabilities, and it reacts much less with its surrounding layers, making it a much more practical choice.

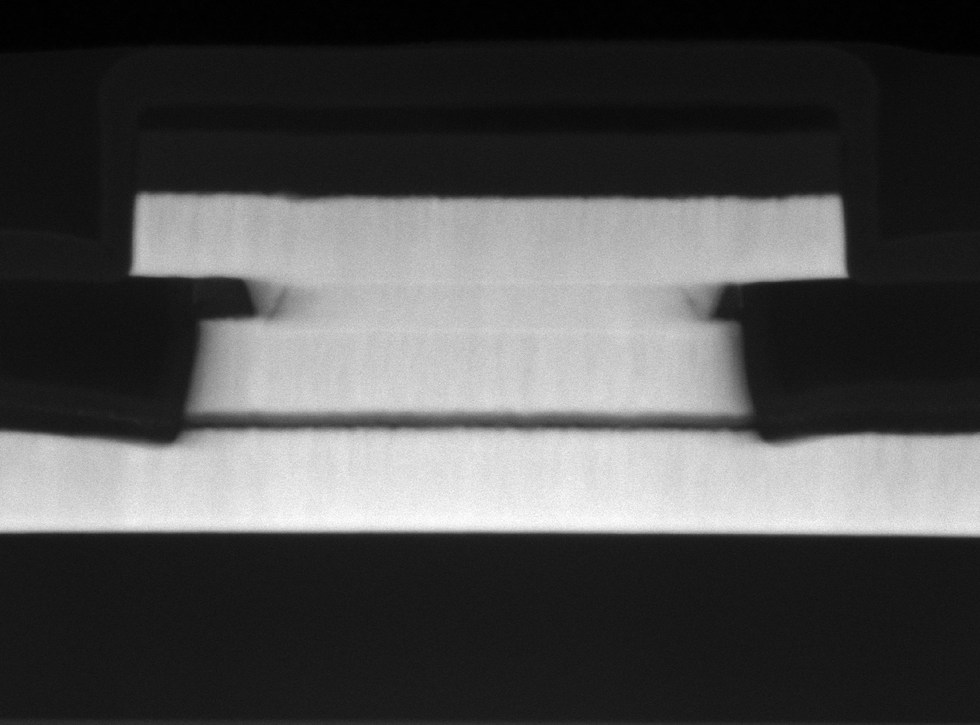

The basic building block of superconducting logic and memory is the Josephson junction. At Imec, these junctions have been manufactured using a new set of materials, allowing the team to scale down the technology without losing functionality. Here, a tunneling electron microscope image shows a Josephson junction made with alpha-silicon insulator sandwiched between niobium titanium nitride superconductors, achieving a critical dimension of 210 nanometers. Imec

Additionally, we employed a new material for the meat layer of the Josephson-junction sandwich—amorphous, or alpha, silicon. Conventional Josephson-junction materials, most notably aluminum oxide, didn’t scale down well. Aluminum was used because it “wets” the niobium, smoothing the surface, and the oxide was grown in a well-controlled manner. However, to get to the ultrahigh densities that we are targeting, we would have to make the oxide too thin to be practically manufacturable. Alpha silicon, in contrast, allowed us to use a much thicker barrier for the same critical current.

We also had to devise a new way to power the Josephson junctions that would scale down to the size of a chip. Previously, lab-based superconducting computers used transformers to deliver current to their circuit elements. However, having a bulky transformer near each circuit element is unworkable. Instead, we designed a way to deliver power to all the elements on the chip at once by creating a resonant circuit, with specialized capacitors interspersed throughout the chip.

At the circuit level, we had to redesign the entire logic and memory structure to take advantage of the new materials’ capabilities. We designed a novel logic architecture that we call pulse-conserving logic. The key requirement for pulse-conserving logic is that the elements have as many inputs as outputs and that the total number of single flux quanta is conserved. The logic is performed by routing the SFQs through a combination of Josephson-junction loops and inductors to the appropriate outputs, resulting in logical ORs and ANDs. To complement the logic architecture, we also redesigned a compatible Josephson-junction-based SRAM.
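One way to see how ORs and ANDs fit the pulse-conserving requirement is a two-input, two-output element that emits the OR of its inputs on one output and the AND on the other. The sketch below is an illustrative truth-table model chosen to match the description above. It is an assumption for the sake of example, not a description of Imec’s actual gate circuits.

```python
from itertools import product


def or_and_element(a: int, b: int) -> tuple[int, int]:
    """Two inputs, two outputs: (a OR b, a AND b).

    Each 1 stands for one single flux quantum. Illustrative model only.
    """
    return (a | b, a & b)


def conserves_pulses(element, n_inputs: int = 2) -> bool:
    """Check that the number of output pulses equals the number of input pulses."""
    for inputs in product((0, 1), repeat=n_inputs):
        outputs = element(*inputs)
        if len(outputs) != n_inputs or sum(outputs) != sum(inputs):
            return False
    return True


if __name__ == "__main__":
    for a, b in product((0, 1), repeat=2):
        print(f"in=({a}, {b}) -> out={or_and_element(a, b)}")
    print("pulse-conserving:", conserves_pulses(or_and_element))
```

The check passes because a + b always equals (a OR b) + (a AND b) for single bits: no pulse is created or destroyed, it is only routed to a different output.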

Lastly, we had to make architectural innovations to take full advantage of the novel materials and circuit designs. Among these was cooling conventional silicon DRAM down to 77 kelvins and designing a glass bridge between the 77-K section and the main superconducting section. The bridge houses thin wires that allow communication without thermal mixing. We also came up with a way of stacking chips on top of each other and are developing vertical superconducting interconnects to link between circuit boards.

A Data Center the Size of a Shoebox

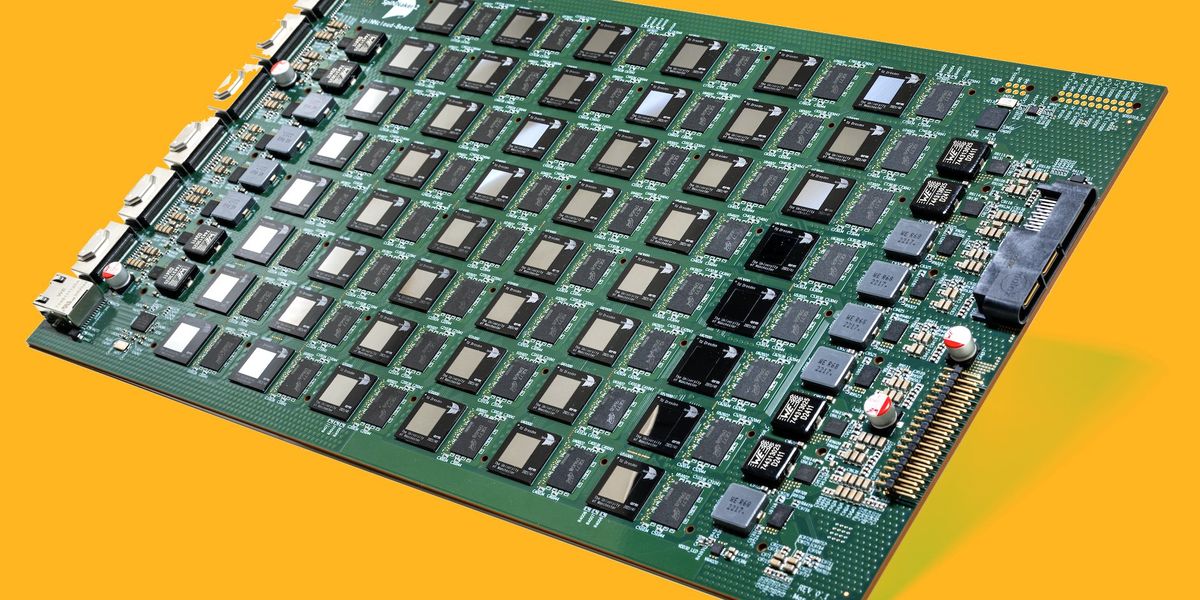

The result is a superconductor-based chip design that’s optimized for AI processing. A zoom in on one of its boards reveals many similarities with a typical 3D CMOS system-on-chip. The board is populated by computational chips, which we call superconductor processing units (SPUs), with embedded superconducting SRAM, DRAM memory stacks, and switches, all interconnected on silicon interposer or on glass-bridge advanced packaging technologies.

But there are also some striking differences. First, most of the chip is to be submerged in liquid helium for cooling to a mere 4 K. This includes the SPUs and SRAM, which depend on superconducting logic rather than CMOS, and are housed on an interposer board. Next, there is a glass bridge to a warmer area, a balmy 77 K that hosts the DRAM. The DRAM technology is not superconducting, but conventional silicon cooled down from room temperature, making it more efficient. From there, bespoke connectors lead data to and from the room-temperature world.

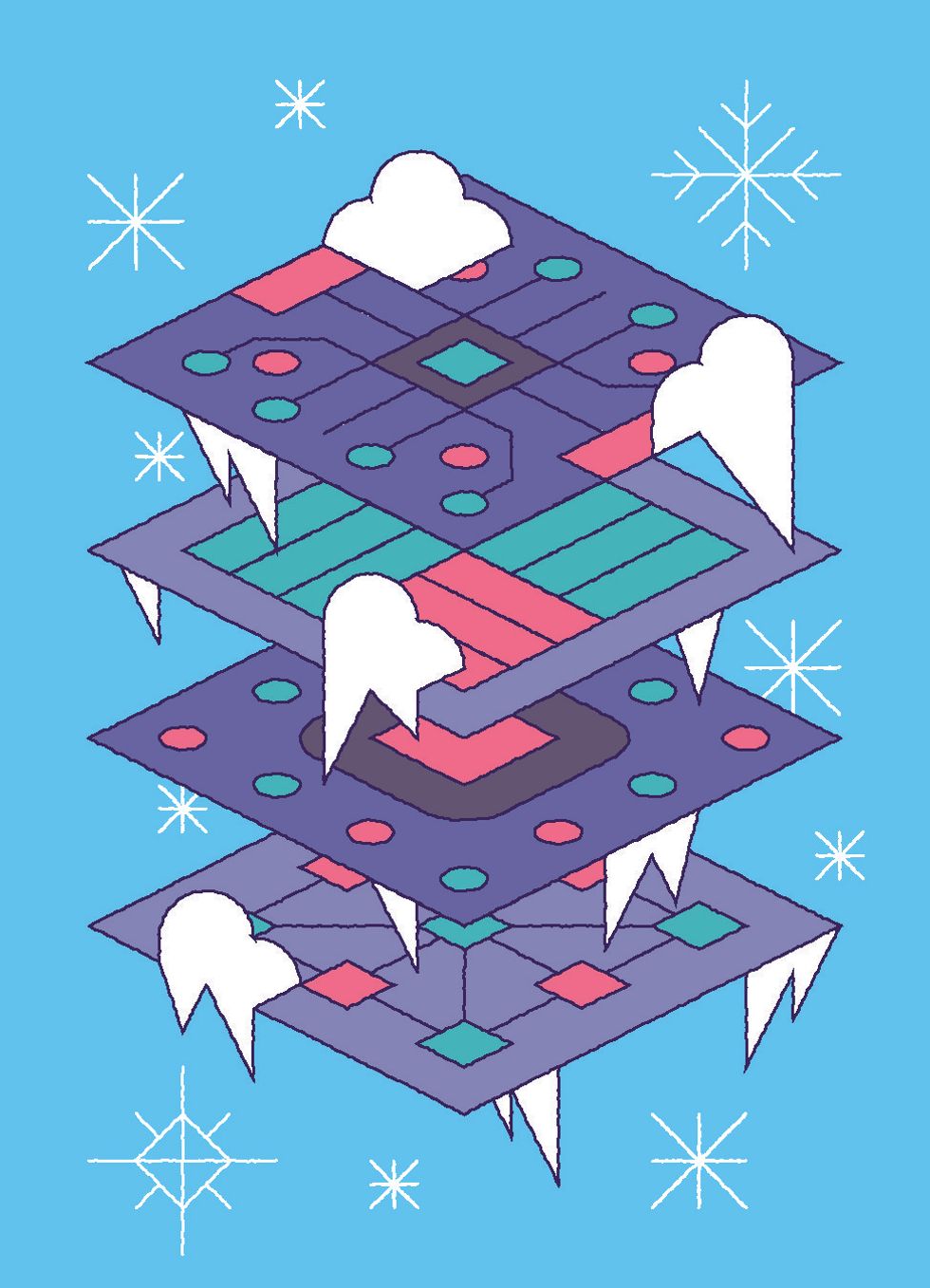

Davide Comai

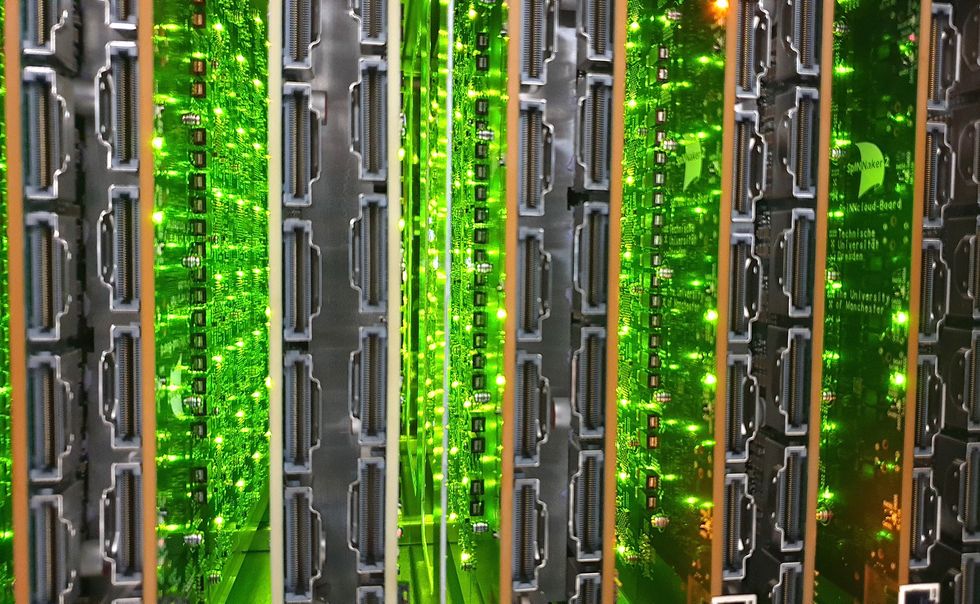

Davide Comai

Moore’s Law relies on fitting progressively more computing resources into the same space. As scaling down transistors gets more and more difficult, the semiconductor industry is turning toward 3D stacking of chips to keep up the density gains. In classical CMOS-based technology, it is very challenging to stack computational chips on top of each other because of the large amount of power, and therefore heat, that is dissipated within the chips. In superconducting technology, the little power that is dissipated is easily removed by the liquid helium. Logic chips can be directly stacked using advanced 3D integration technologies resulting in shorter and faster connections between the chips, and a smaller footprint.

It is also straightforward to stack multiple boards of 3D superconducting chips on top of each other, leaving only a small space between them. We modeled a stack of 100 such boards, all operating within the same cooling environment and contained in a 20- by 20- by 12-centimeter volume, roughly the size of a shoebox. We calculated that this stack can perform 20 exaflops (in BF16 number format), 20 times the capacity of the largest supercomputer today. What’s more, the system promises to consume only 500 kilowatts of total power. This translates to energy efficiency one hundred times as high as the most efficient supercomputer today.
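The headline numbers in this paragraph can be turned into per-watt, per-board, and per-liter figures with simple arithmetic. The sketch below uses only the values stated above (20 exaflops, 500 kilowatts, 100 boards, and a 20- by 20- by 12-centimeter volume); the variable names are illustrative.

```python
# Figures quoted in the text for the modeled stack.
TOTAL_FLOPS = 20e18           # 20 exaflops (BF16)
TOTAL_POWER_W = 500e3         # 500 kilowatts
NUM_BOARDS = 100
DIMENSIONS_CM = (20, 20, 12)  # width, depth, height

# Energy efficiency in floating-point operations per second per watt.
flops_per_watt = TOTAL_FLOPS / TOTAL_POWER_W

# Share of the total throughput carried by each board.
flops_per_board = TOTAL_FLOPS / NUM_BOARDS

# Volume of the stack: 1 liter = 1,000 cubic centimeters.
volume_liters = DIMENSIONS_CM[0] * DIMENSIONS_CM[1] * DIMENSIONS_CM[2] / 1000

# Compute density of the stack.
flops_per_liter = TOTAL_FLOPS / volume_liters

if __name__ == "__main__":
    print(f"Efficiency: {flops_per_watt / 1e12:.0f} teraflops per watt")
    print(f"Per board:  {flops_per_board / 1e18:.1f} exaflops")
    print(f"Volume:     {volume_liters:.1f} liters")
    print(f"Density:    {flops_per_liter / 1e18:.2f} exaflops per liter")
```

These work out to 40 teraflops per watt and 0.2 exaflops per board, packed into 4.8 liters.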

So far, we’ve scaled down Josephson junctions and interconnect dimensions over three succeeding generations. Going forward, Imec’s road map includes tackling 3D superconducting chip-integration and cooling technologies. For the first generation, the road map envisions the stacking of about 100 boards to obtain the target performance of 20 exaflops. Gradually, more and more logic chips will be stacked, and the number of boards will be reduced. This will further increase performance while reducing complexity and cost.

The Superconducting Vision

We don’t envision that superconducting digital technology will replace conventional CMOS computing, but we do expect it to complement CMOS for specific applications and fuel innovations in new ones. For one, this technology would integrate seamlessly with quantum computers that are also built upon superconducting technology. Perhaps more significantly, we believe it will support the growth in AI and machine learning processing and help provide cloud-based training of big AI models in a much more sustainable way than is currently possible.

In addition, with this technology we can engineer data centers with much smaller footprints. Drastically smaller data centers can be placed close to their target applications, rather than being in some far-off football-stadium-size facility.

Such transformative server technology is a dream for scientists. It opens doors to online training of AI models on real data that are part of an actively changing environment. Take potential robotic farms as an example. Today, training these would be a challenging task, where the required processing capabilities are available only in far-away, power-hungry data centers. With compact, nearby data centers, the data could be processed at once, allowing an AI to learn from current conditions on the farm.

Similarly, these miniature data centers can be interspersed in energy grids, learning right away at each node and distributing electricity more efficiently throughout the world. Imagine smart cities, mobile health care systems, manufacturing, farming, and more, all benefiting from instant feedback from adjacent AI learners, optimizing and improving decision making in real time.

-

This Member Gets a Charge from Promoting Sustainability

by Joanna Goodrich on 14. May 2024. at 18:00

Ever since she was an undergraduate student in Turkey, Simay Akar has been interested in renewable energy technology. As she progressed through her career after school, she chose not to develop the technology herself but to promote it. She has held marketing positions with major energy companies, and now she runs two startups.

One of Akar’s companies develops and manufactures lithium-ion batteries and recycles them. The other consults with businesses to help them achieve their sustainability goals.

Simay Akar

Employer: AK Energy Consulting

Title: CEO

Member grade: Senior member

Alma mater: Middle East Technical University in Ankara, Turkey

“I love the industry and the people in this business,” Akar says. “They are passionate about renewable energy and want their work to make a difference.”

Akar, a senior member, has become an active IEEE volunteer as well, holding leadership positions. First she served as student branch coordinator, then as a student chapter coordinator, and then as a member of several administrative bodies including the IEEE Young Professionals committee.

Akar received this year’s IEEE Theodore W. Hissey Outstanding Young Professional Award for her “leadership and inspiration of young professionals with significant contributions in the technical fields of photovoltaics and sustainable energy storage.” The award is sponsored by IEEE Young Professionals and the IEEE Photonics and Power & Energy societies.

Akar says she’s honored to get the award because “Theodore W. Hissey’s commitment to supporting young professionals across all of IEEE’s vast fields is truly commendable.” Hissey, who died in 2023, was an IEEE Life Fellow and IEEE director emeritus who supported the IEEE Young Professionals community for years.

“This award acknowledges the potential we hold to make a significant impact,” Akar says, “and it motivates me to keep pushing the boundaries in sustainable energy and inspire others to do the same.”

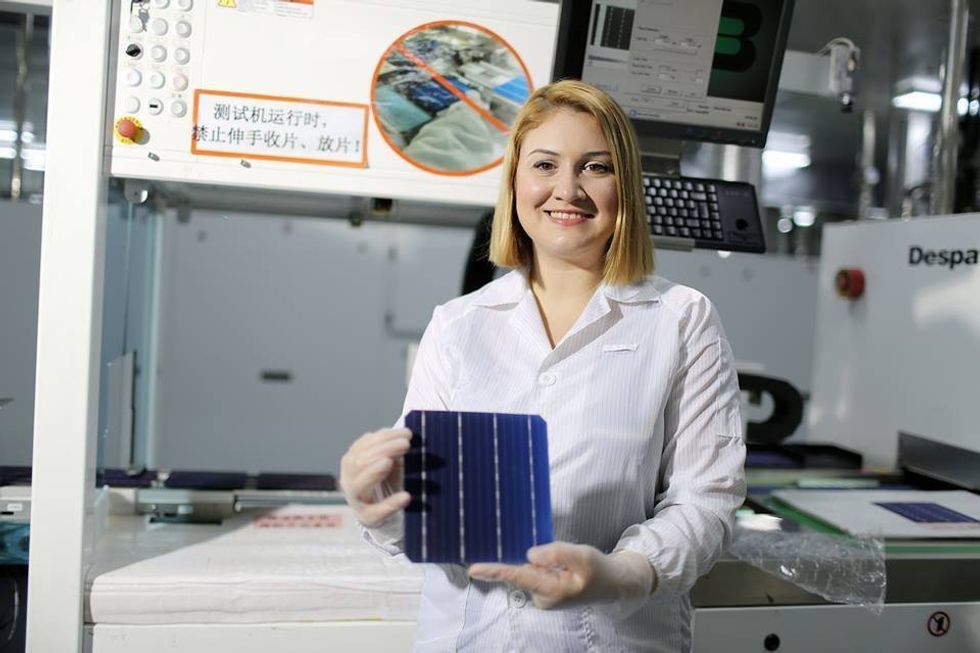

A career in sustainable technology

After graduating with a degree in the social impact of technology from Middle East Technical University, in Ankara, Turkey, Akar worked at several energy companies. Among them was Talesun Solar in Suzhou, China, where she was head of overseas marketing. She left to become the sales and marketing director for Eko Renewable Energy, in Istanbul.

In 2020 she founded Innoses in Shanghai. The company makes batteries for electric vehicles and customizes them for commercial, residential, and off-grid renewable energy systems such as solar panels. Additionally, Innoses recycles lithium-ion batteries, which otherwise end up in landfills, leaching hazardous chemicals.

“Recycling batteries helps cut down on pollution and greenhouse gas emissions,” Akar says. “That’s something we can all feel good about.”

She says there are two main methods of recycling batteries: melting and shredding.

Melting batteries is done by heating them until their parts separate. Valuable metals including cobalt and nickel are collected and cleaned to be reused in new batteries.

A shredding machine with high-speed rotating blades cuts batteries into small pieces. The different components are separated and treated with solutions to break them down further. Lithium, copper, and other metals are collected and cleaned to be reused.

The melting method tends to be better for collecting cobalt and nickel, while shredding is better for recovering lithium and copper, Akar says.

“This happens because each method focuses on different parts of the battery, so some metals are easier to extract depending on how they are processed,” she says. The chosen method depends on factors such as the composition of the batteries, the efficiency of the recycling process, and the desired metals to be recovered.

“There are a lot of environmental concerns related to battery usage,” Akar says. “But, if the right recycling process can be completed, batteries can also be sustainable. The right process could keep pollution and emissions low and protect the health of workers and surrounding communities.”

Akar worked at several energy companies including Talesun Solar in Suzhou, China, which manufactures solar cells like the one she is holding. Simay Akar

Helping businesses with sustainability

After noticing many businesses were struggling to become more sustainable, in 2021 Akar founded AK Energy Consulting in Istanbul. Through discussions with company leaders, she found they “need guidance and support from someone who understands not only sustainable technology but also the best way renewable energy can help the planet,” she says.

“My goal for the firm is simple: Be a force for change and create a future that’s sustainable and prosperous for everyone,” she says.

Akar and her staff meet with business leaders to better understand their sustainability goals. They identify areas where companies can improve, assess the impact the recommended changes can have, and research the latest sustainable technology. Her consulting firm also helps businesses understand how to meet government compliance regulations.

“By embracing sustainability, companies can create positive social, environmental, and economic impact while thriving in a rapidly changing world,” Akar says. “The best part of my job is seeing real change happen. Watching my clients switch to renewable energy, adopt eco-friendly practices, and hit their green goals is like a pat on the back.”

Serving on IEEE boards and committees

Akar has been a dedicated IEEE volunteer since joining the organization in 2007 as an undergraduate student and serving as chair of her school’s student branch. After graduating, she held other roles including Region 8 student branch coordinator, student chapter coordinator, and the region’s IEEE Women in Engineering committee chair.

In her nearly 20 years as a volunteer, Akar has been a member of several IEEE boards and committees including the Young Professionals committee, the Technical Activities Board, and the Nominations and Appointments Committee for top-level positions.

She is an active member of the IEEE Power & Energy Society and is a former IEEE PES liaison to the Women in Engineering committee. She is also a past vice chair of the society’s Women in Power group, which supports career advancement and education and provides networking opportunities.

“My volunteering experiences have helped me gain a deep understanding of how IEEE operates,” she says. “I’ve accumulated invaluable knowledge, and the work I’ve done has been incredibly fulfilling.”

As a member of the IEEE–Eta Kappa Nu honor society, Akar has mentored members of the next generation of technologists. She also served as a mentor in the IEEE Member and Geographic Activities Volunteer Leadership Training Program, which provides members with resources and an overview of IEEE, including its culture and mission. The program also offers participants training in management and leadership skills.

Akar says her experiences as an IEEE member have helped shape her career. When she transitioned from working as a marketer to being an entrepreneur, she joined IEEE Entrepreneurship, eventually serving as its vice chair of products. She also was chair of the Region 10 entrepreneurship committee.

“I had engineers I could talk to about emerging technologies and how to make a difference through Innoses,” she says. “I also received a lot of support from the group.”

Akar says she is committed to IEEE’s mission of advancing technology for humanity. She currently chairs the IEEE Humanitarian Technology Board’s best practices and projects committee. She also is chair of the IEEE MOVE global committee. The mobile outreach vehicle program provides communities affected by natural disasters with power and Internet access.

“Through my leadership,” Akar says, “I hope to contribute to the development of innovative solutions that improve the well-being of communities worldwide.”

-

Startup Sends Bluetooth Into Low Earth Orbit

by Margo Anderson on 13. May 2024. at 19:54

A recent Bluetooth connection between a device on Earth and a satellite in orbit signals a potential new space race—this time, for global location-tracking networks.

Seattle-based startup Hubble Network announced today that it had a letter of understanding with San Francisco-based startup Life360 to develop a global, satellite-based Internet of Things (IoT) tracking system. The announcement follows on the heels of a 29 April announcement from Hubble Network that it had established the first Bluetooth connection between a device on Earth and a satellite. The pair of announcements sets the stage for an IoT tracking system that aims to rival Apple’s AirTags, Samsung’s Galaxy SmartTag2, and the Cube GPS Tracker.

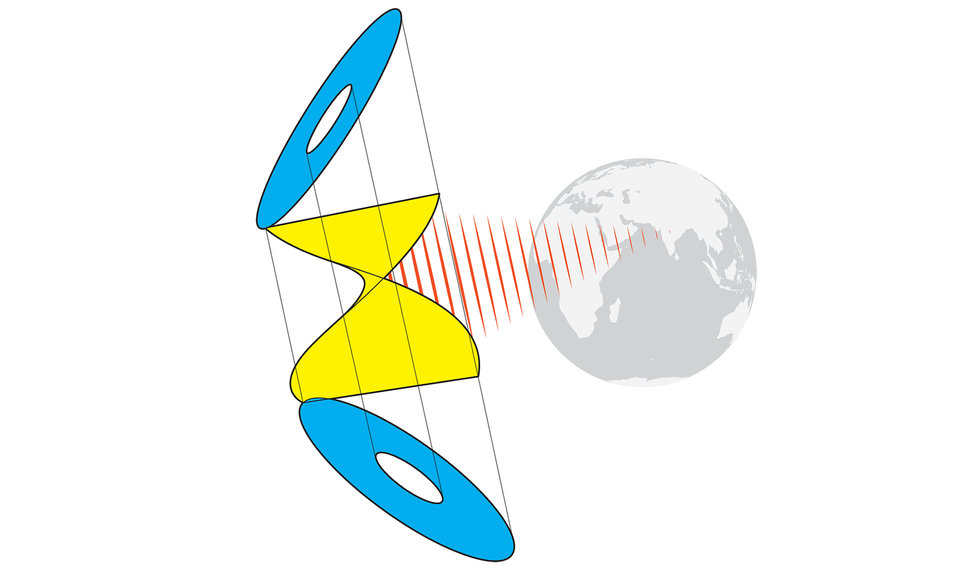

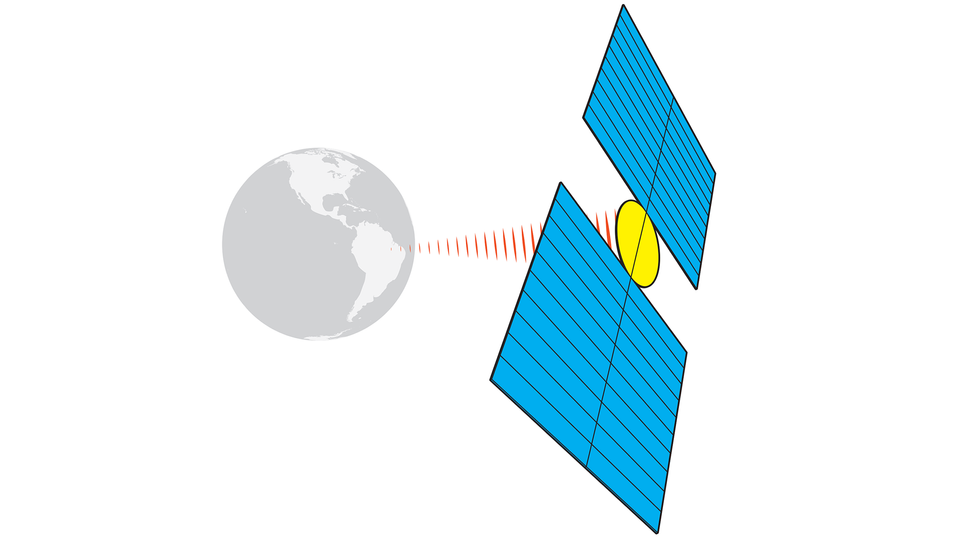

Bluetooth, the wireless technology that connects home speakers and earbuds to phones, typically traverses meters, not hundreds of kilometers (520 km, in the case of Hubble Network’s two orbiting satellites). The trick to extending the tech’s range, Hubble Network says, lies in the startup’s patented, high-sensitivity signal detection system on a LEO satellite.
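To get a feel for why such sensitive detection is needed, one can compare the free-space path loss over a room-scale link with the loss over a 520-km link. This is a rough sketch using the standard Friis free-space formula, not Hubble Network's link budget: it assumes a nominal 2.4-GHz carrier, a satellite directly overhead, isotropic antennas, and an illustrative 10-meter indoor distance, and it ignores atmospheric and antenna effects.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Friis free-space path loss in decibels between isotropic antennas."""
    return 20 * math.log10(
        4 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S
    )


BLE_FREQUENCY_HZ = 2.4e9    # nominal carrier; an assumption for illustration
ORBIT_DISTANCE_M = 520e3    # satellite altitude quoted in the article
ROOM_DISTANCE_M = 10.0      # assumed typical indoor Bluetooth distance

loss_orbit_db = free_space_path_loss_db(ORBIT_DISTANCE_M, BLE_FREQUENCY_HZ)
loss_room_db = free_space_path_loss_db(ROOM_DISTANCE_M, BLE_FREQUENCY_HZ)
extra_loss_db = loss_orbit_db - loss_room_db

print(f"Path loss at 10 m:   {loss_room_db:.1f} dB")
print(f"Path loss at 520 km: {loss_orbit_db:.1f} dB")
print(f"Additional loss:     {extra_loss_db:.1f} dB")
```

Under these assumptions the orbital link loses roughly 94 dB more than the room-scale one, meaning the signal arrives billions of times weaker.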

The caveat, however, is that the connection is device-to-satellite only. The satellite can’t ping devices back on Earth to say “signal received,” for example. This is because location-tracking tags operate on tiny energy budgets—often powered by button-sized batteries and running on a single charge for months or even years at a stretch. Tags are also able to perform only minimal signal processing. That means that tracking devices cannot include the sensitive phased-array antennas and digital beamforming needed to tease out a vanishingly tiny Bluetooth signal racing through the stratosphere.

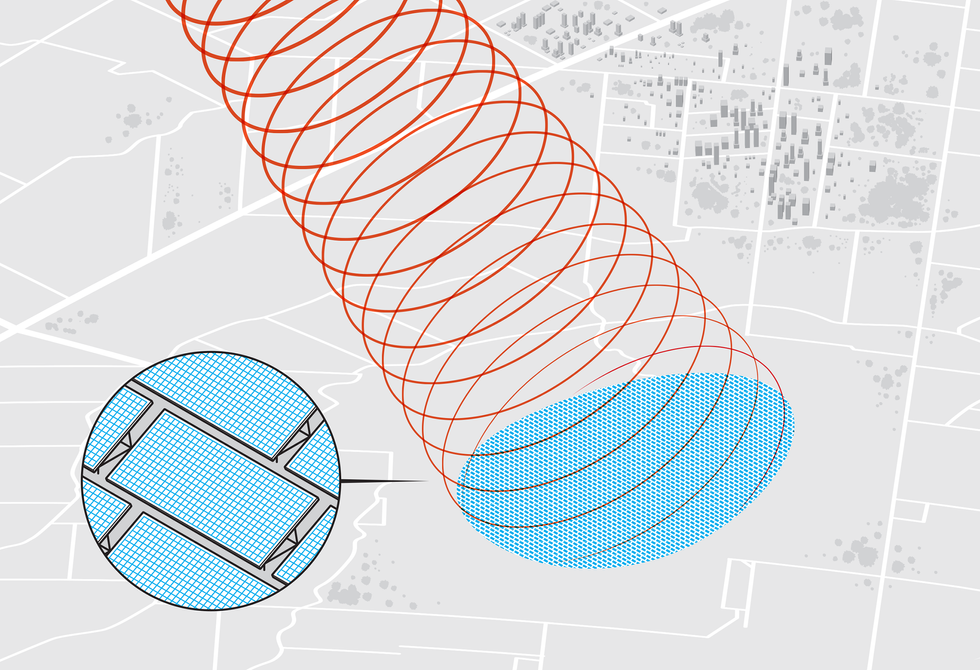

“There is a massive enterprise and industrial market for ‘send only’ applications,” says Alex Haro, CEO of Hubble Network. “Once deployed, these sensors and devices don’t need Internet connectivity except to send out their location and telemetry data, such as temperature, humidity, shock, and moisture. Hubble enables sensors and asset trackers to be deployed globally in a very battery- and cost-efficient manner.”

Other applications for the company’s technologies, Haro says, include asset tracking, environmental monitoring, container and pallet tracking, predictive maintenance, smart agriculture applications, fleet management, smart buildings, and electrical grid monitoring.

“To give you a sense of how much better Hubble Network is compared to existing satellite providers like Globalstar,” Haro says, “we are 50 times cheaper and have 20 times longer battery life. For example, we can build a Tile device that is locatable anywhere in the world without any cellular reception and lasts for years on a single coin cell battery. This will be a game-changer in the AirTag market for consumers.”

Hubble Network chief space officer John Kim (left) and two company engineers perform tests on the company’s signal-sensing satellite technology. Hubble Network

The Hubble Network system, and presumably the enhanced Life360 Tags that should follow today’s announcement, uses a lower-energy iteration of the familiar Bluetooth wireless protocol.

Like its more famous cousin, Bluetooth Low Energy (BLE) uses the 2.4-gigahertz band, a globally unlicensed spectrum band that many Wi-Fi routers, microwave ovens, baby monitors, wireless microphones, and other consumer devices also use.

Haro says BLE offered the most compelling, supposedly “short-range” wireless standard for Hubble Network’s purposes. By contrast, he says, the long-range, wide-area network LoRaWAN operates on a communications band, 900 megahertz, that some countries and regions regulate differently from others—making a potentially global standard around it that much more difficult to establish and maintain. Plus, he says, 2.4 GHz antennas can be roughly one-third the size of a standard LoRaWAN antenna, which makes a difference when launching material into space, when every gram matters.
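The antenna-size claim follows from wavelength, since a simple antenna's length scales with the wavelength it is tuned to. The sketch below is an illustration only: it assumes quarter-wave antennas at both frequencies and uses nominal carriers of 2.4 GHz and 900 MHz. Real antenna designs vary.

```python
SPEED_OF_LIGHT_M_S = 299_792_458


def wavelength_m(frequency_hz: float) -> float:
    """Free-space wavelength in meters for a given carrier frequency."""
    return SPEED_OF_LIGHT_M_S / frequency_hz


def quarter_wave_length_m(frequency_hz: float) -> float:
    """Length of an idealized quarter-wave antenna (an assumed design)."""
    return wavelength_m(frequency_hz) / 4


BLE_FREQUENCY_HZ = 2.4e9       # nominal BLE carrier
LORAWAN_FREQUENCY_HZ = 900e6   # band cited in the article

ble_antenna_m = quarter_wave_length_m(BLE_FREQUENCY_HZ)
lorawan_antenna_m = quarter_wave_length_m(LORAWAN_FREQUENCY_HZ)
size_ratio = ble_antenna_m / lorawan_antenna_m

print(f"2.4 GHz quarter-wave antenna: {ble_antenna_m * 100:.1f} cm")
print(f"900 MHz quarter-wave antenna: {lorawan_antenna_m * 100:.1f} cm")
print(f"Size ratio: {size_ratio:.3f}")
```

The ratio is simply 900/2400, or 0.375, which is consistent with the "roughly one-third" figure Haro cites.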

Haro says that Hubble Network’s technology does require changing the sending device’s software in order to communicate with a BLE receiver satellite in orbit. But it doesn’t require any hardware modifications of the device, save one: adding a standard BLE antenna. “This is the first time that a Bluetooth chip can send data from the ground to a satellite in orbit,” Haro says. “We require the Hubble software stack loaded onto the chip to make this possible, but no physical modifications are needed. Off-the-shelf BLE chips are now capable of communicating directly with LEO satellites.”

“We believe this is comparable to when GPS was first made available for public use,” Haro adds. “It was a groundbreaking moment in technology history that significantly impacted everyday users in ways previously unavailable.”

What remains, of course, is the next hardest part: Launching all of the satellites needed to create a globally available tracking network. As to whether other companies or countries will be developing their own competitor technologies, now that Bluetooth has been revealed to have long-range communication capabilities, Haro did not speculate beyond what he envisions for his own company’s LEO ambitions.

“We currently have our first two satellites in orbit as of 4 March,” Haro says. “We plan to continue launching more satellites, aiming to have 32 in orbit by early 2026. Our pilot customers are already updating and testing their devices on our network, and we will continue to scale our constellation over the next 3 to 5 years.”

-

Disney's Robots Use Rockets to Stick the Landing

by Morgan Pope on 12. May 2024. at 13:00

It’s hard to think of a more dramatic way to make an entrance than falling from the sky. While it certainly happens often enough on the silver screen, whether or not it can be done in real life is a tantalizing challenge for our entertainment robotics team at Disney Research.